Armand Joulin

@armandjoulin

Followers: 4,194 · Following: 355 · Media: 2 · Statuses: 281

principal researcher, @googledeepmind. ex-director of emea at fair @metaai. mostly work on open projects: fasttext, dino, llama, gemma.

Joined February 2009


Pinned Tweet

We are releasing a first set of new models for the open community of developers and researchers.


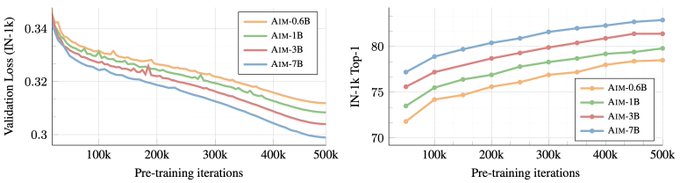

We are releasing a series of visual features that perform well across pixel-level and image-level tasks. We achieve this by training a 1B-parameter ViT-g on a large, diverse, and curated dataset with no supervision, and distilling it into smaller models. Everything is open source.


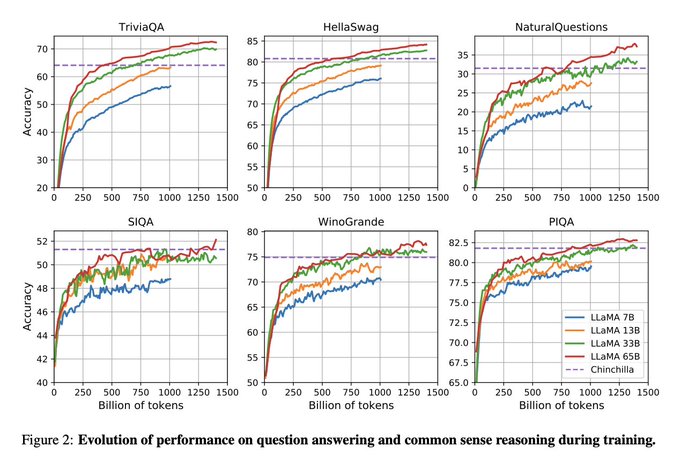

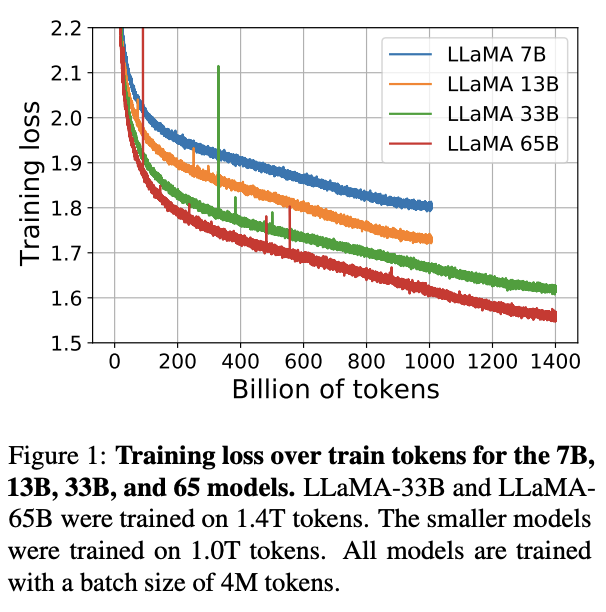

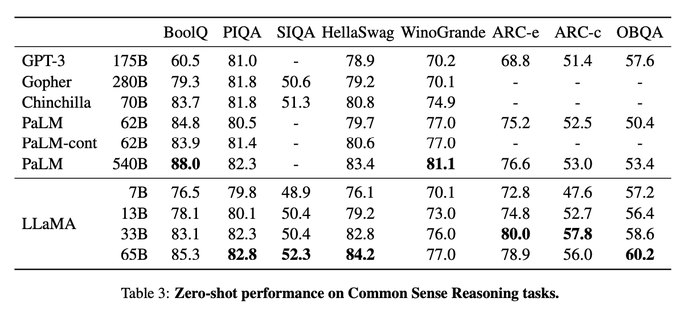

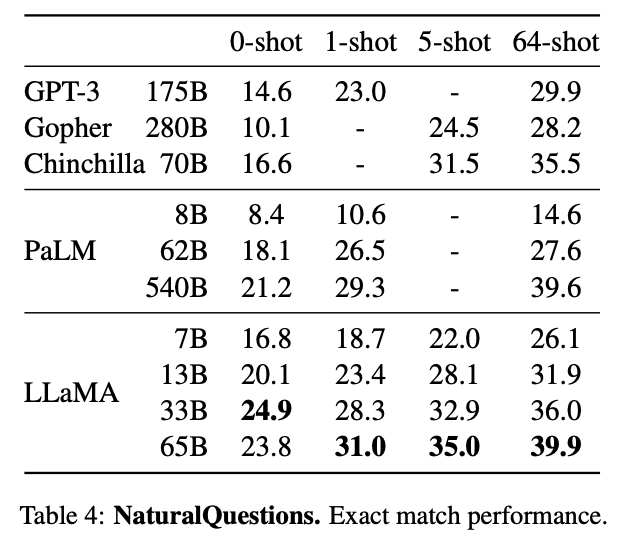

Super excited to share new open LLMs from FAIR with our research community. Particularly, the LLaMA-13B is competitive with GPT-3, despite being 10x smaller.


Our DINOv2 models are now under the Apache 2.0 license. Thank you @MetaAI for making this change!


Great article by @guillaumgrallet for @LePoint on the unique place of France in AI. Shout out to @Inria for their central role in building the foundations of this ecosystem.


Our work on learning visual features with an LLM approach is finally out. All the scaling observations made on LLMs transfer to images! It was a pleasure to work under @alaaelnouby's leadership on this project, and this concludes my fun (but short) time at Apple! 1/n


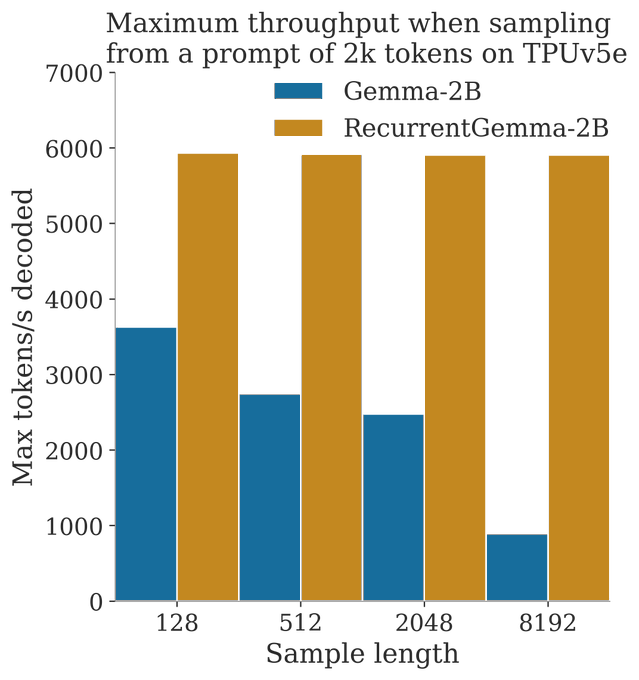

So excited by the release of the open version of Griffin. The Griffin team has done everything possible to help @srush_nlp win his bet, and now they are open-sourcing a first 2B model to help the community help Sasha.


IMHO, Chinchilla is the most impactful paper in the recent development of open LLMs, and its relatively low citation count shows how broken this metric is.


Working with the Gemini team has been a lot of fun! Thank you @clmt @OriolVinyalsML @JeffDean @koraykv! 💙♊️

It certainly has been a fun year @Google: enjoy playing with our open source models Gemma, built from the same research and technology used to create the Gemini models. 💙♊️🚀

Blog:

Tech report:


The team worked hard to address feedback from the open community and improve the model. Kudos to @robdadashi and colleagues for the hard work. Let us know how it goes.


Congrats to the @xai team for this release! Almost everyone is now open-sourcing, and it has only been a year since LLaMA. What a turn of events.


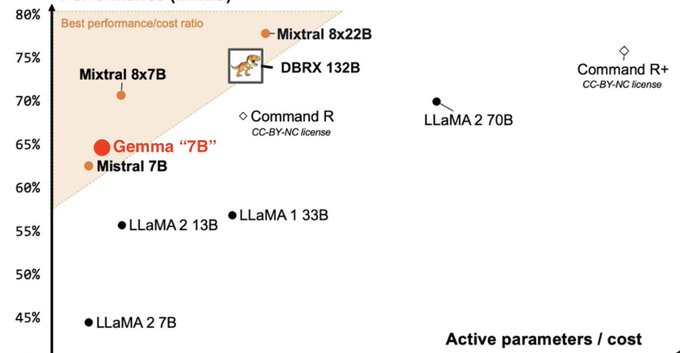

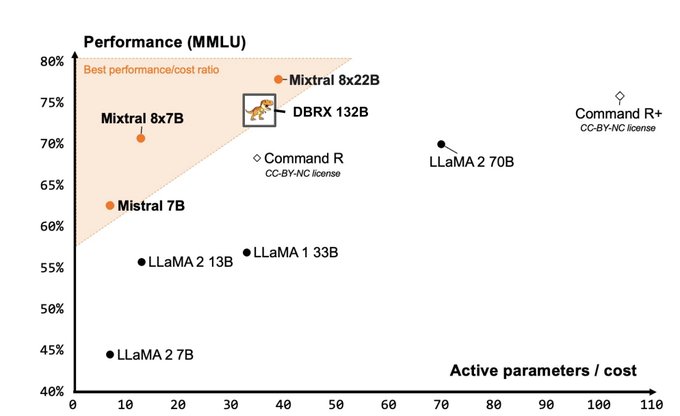

Congratulations to @aidangomez and @cohere for this amazing breakthrough! On the side, our Gemma IT team also pushed an update to our model thanks to feedback from the open community. Great day for open models!


Cohere did it again


Very impressive upscaling of features


PaliGemma is out and you can finetune it on Google Colab in a matter of minutes.


From rejected for lack of novelty to breakthrough in video generation in less than a year.


There is only scale and cosine schedule and AdamW with batch sizes that are big but not too big and a post... no wait, pre... no wait, post-norm with RMSNorm and gradient clipping and RoPE with SentencePiece with no dummy whitespace on heavily preprocessed data, duh?


This is why I love the open community so much and will always find ways to give back to it: they help each other build together.


What a rockstar team! Thrilled to see what they will deliver!

Really excited to be part of the founding team of @kyutai_labs: at the heart of our mission is doing open source and open science in AI🔬📖. Thanks so much to our founding donors for making this happen 🇪🇺 I’m thrilled to get to work with such a talented team and grow the lab 😊


Using parallel decoding to speed up LLM inference:

✅ no need for a second model

✅ no fine-tuning

✅ negligible memory overhead

🎉 Unveiling PaSS: Parallel Speculative Sampling

🚀 Need faster LLM decoding?

🔗 Check out our new 1-model speculative sampling algorithm based on parallel decoding with look-ahead tokens:

🤝 In collaboration with @armandjoulin and @EXGRV


Ever wondered how to scale an RNN?

Spoiler alert: simple ideas that scale, and attention to detail.


Gemma v1.1 is officially announced!

@robdadashi led a strike team to fix most of the issues that the open source community found with our 2B and "7B" IT models. Kudos to them, and more to come soon!


A very elegant solution for multimodal LLMs. I am impressed by the performance despite using a relatively small image token dictionary.


I remember @alex_conneau telling me about his dream of building Her only a few years ago, and here we are. Congratulations to you and the whole OpenAI team behind this achievement!

@OpenAI #GPT4o #Audio

Extremely excited to share the results of what I've been working on for 2 years

GPT models now natively understand audio: you can talk to the Transformer itself!

The feeling is hard to describe so I can't wait for people to speak to it

#HearTheAGI

🧵1/N


💯 MLP-Mixer is a perfect example of the importance of data, but it is also a very elegant model... and meme.

@ahatamiz1 @arimorcos It was one of the few big points of the MLP-Mixer paper/result, to show that "at scale, any reasonable architecture will work".

We could have followed with a few more papers with a few more architectures, but it was enough and we moved on to other things.

cont.


Congratulations! The IT results are particularly strong, impressive!


As always, I'm amazed by HF's support for the open community. The new member of the Pali family is out and ready to be tested! Great work from @giffmana and colleagues.


NYC is the new Paris

move to NYC.

build open models.

distribute bootleg books of model weights alongside bagels and ice cream trucks.

@srush_nlp @kchonyc @jefrankle and I will be around.


Congrats @CohereForAI and welcome to the game!

Less than 24 hours after release, C4AI Command-R claims the #1 spot on the Hugging Face leaderboard!

We launched with the goal of making generative AI breakthroughs accessible to the research community - so exciting to see such a positive response. 🔥


Open data is critical for the progress of AI, and our AIM work would not have been possible without @Vaishaal's fantastic work. Thank you for making this data available to the community.


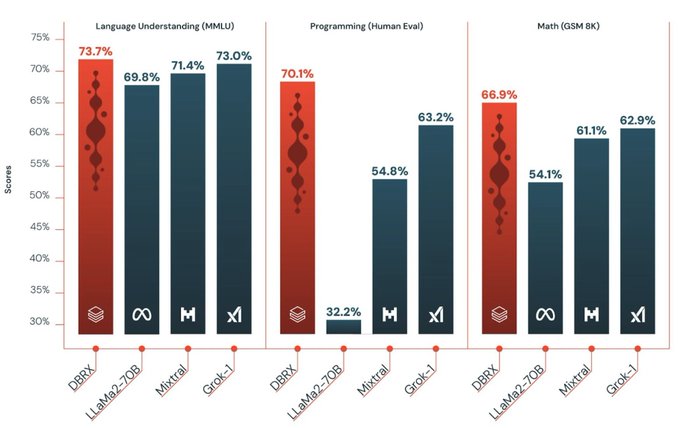

Another open source model! It seems that even large models are being opened now; I'm looking forward to seeing how this will help the open community.

Meet DBRX, a new SOTA open LLM from @databricks. It's a 132B MoE with 36B active params trained from scratch on 12T tokens. It sets a new bar on all the standard benchmarks, and - as an MoE - inference is blazingly fast. Simply put, it's the model your data has been waiting for.


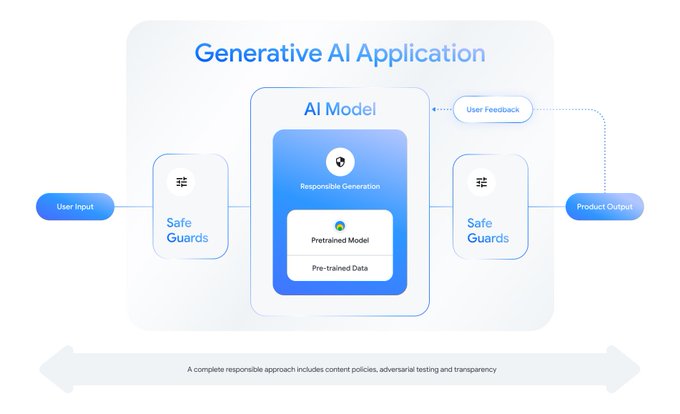

Help build safeguards for your projects when using open LLMs, like Gemma.


That ⬇️ + if this model is not for you, just use another open model. That's the beauty of it.


The improvements of v1.1 wouldn't have been possible without the creativity of @piergsessa


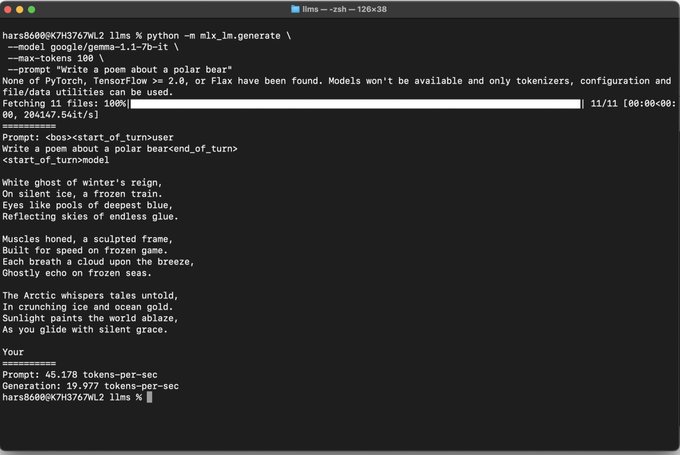

The MLX community is honestly one of the most impressive atm.

The 🤗 MLX community is fast. Already quantized and uploaded all the Gemma model variants:

Available here:

Thanks @Prince_Canuma and @lazarustda!


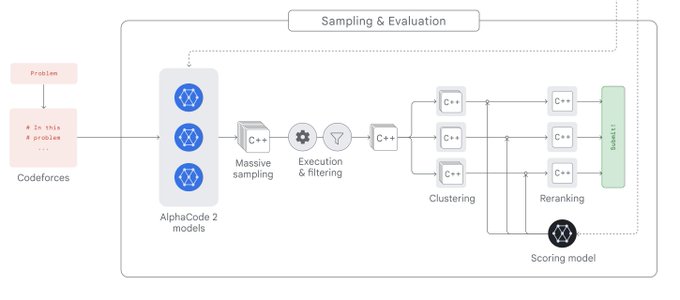

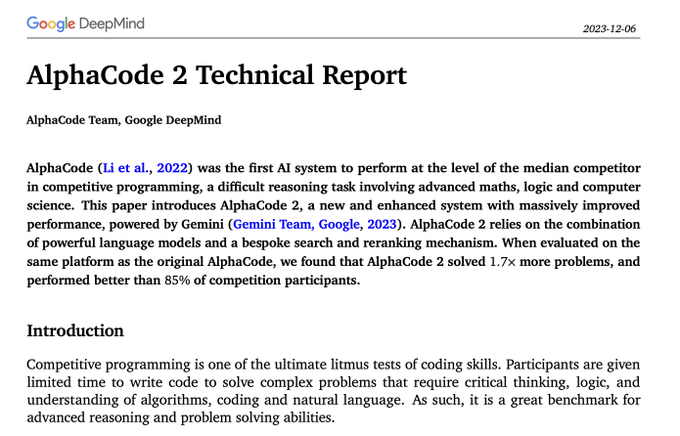

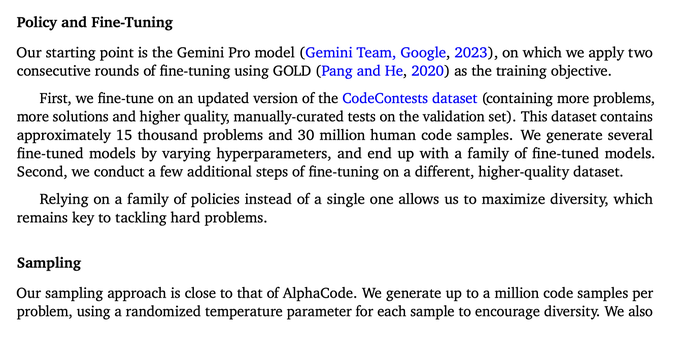

Great work from the team led by @RemiLeblond on competitive programming!


@giffmana FAIR is still home to top-tier computer vision researchers like @imisra_, @lvdmaaten, Christoph Feichtenhofer, Peter Dollar, Yaniv Taigman, and @p_bojanowski. Like @inkynumbers, I think a lot of us joined 8-9 years ago, and there are cycles in research careers.


@abacaj We will look to improve our models in future iterations, and any feedback will be appreciated (through DMs?). Mistral's models are amazing, and if they work for you, all the best!


@deliprao @arankomatsuzaki Along with BERT, T5 (@colinraffel, @ada_rob, @sharan0909, among others) from Google has also played a key role in the transformer revolution. It has been widely used in research and is still underrated to this day, imho.


Looking forward to reading the recommendations from the AI commission to the French government. What an amazing team of diverse talents from industry, like Joelle Barral and @arthurmensch, and from academia, like @GaelVaroquaux and @Isabelle_Ryl.


Amazing how fast @awnihannun is


Impressive! I wonder how much using the lmsys-1M dataset is hacking the system, though.


@chriswolfvision tbh, SAM was not designed for downstream tasks while DINOv2 was, and we probed ADE20K and ImageNet-1k k-NN intensively during the development of DINOv2, so these are not the fairest metrics to support this point.


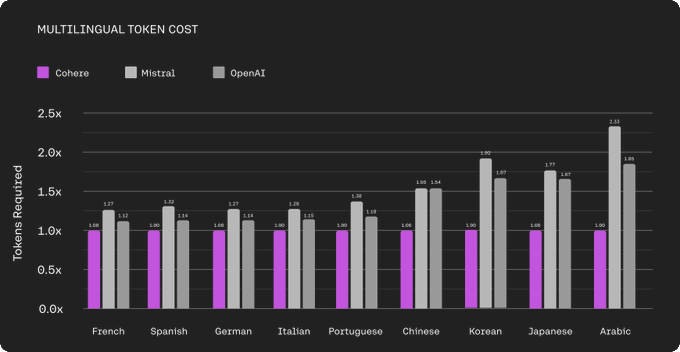

Great thread about the advantages of a large multilingual vocabulary


@ylecun @inkynumbers @sainingxie @georgiagkioxari @AlexDefosse Worth mentioning that @EXGRV also went to Kyutai and Kaiming He to MIT. It is the cycle of a lab.


Working with @alaa_nouby is amazing; this is a great opportunity.

📢 The @Apple MLR team in Paris is looking for a strong PhD intern

🔎 Topics: Representation learning at scale, Vision+Language, and multi-modal learning.

Please reach out if you're interested! You can apply here 👇


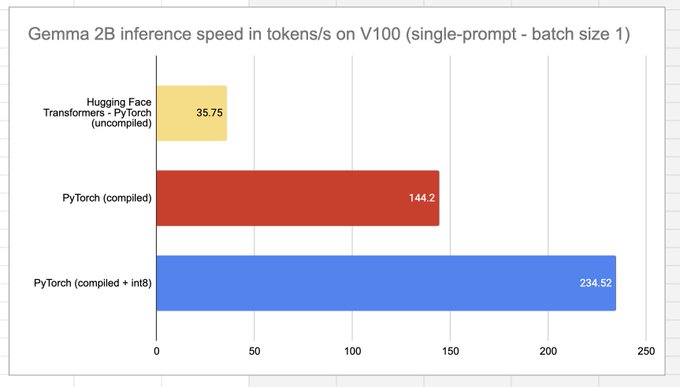

Impressive speed on a V100!

With the new release of Gemma-2B, I thought I'd see how torch.compile performs.

Gemma 2B for a single prompt runs at 144 tokens/s on a V100, a 4x increase over the uncompiled HF version.

We're working with @huggingface to upstream these improvements too!


Very impressive! Excited to see what they are cooking with such a powerhouse 🚀


@awnihannun ports in MLX at 1.56 models/sec


@OriolVinyalsML Congratulations @OriolVinyalsML on leading the team to this massive milestone!


Amazing recruitment by PSL University to lead their AI department. Congratulations @Isabelle_Ryl!


@arimorcos @sarahcat21 @dauber @AmplifyPartners @datologyai Congratulations! Looking forward to your new adventure. Data curation is a timely problem.


@_philschmid @OpenAI Google DeepMind, Meta FAIR, @kyutai_labs, ... a lot of labs have had this mission for years. If anything, they may have deviated a bit from this goal because of OAI's recent successes.


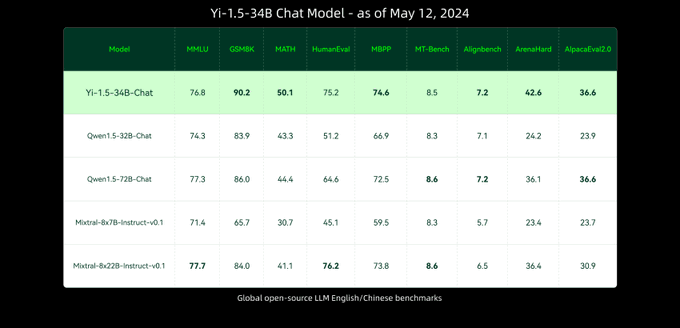

Really cool new set of models from Yi. But why is the new standard for IT models to report few-shot results on knowledge-intensive benchmarks? It feels like IT models should be evaluated 0-shot, not few-shot...


@soumithchintala Because it's a way to make it look like a big achievement, especially when there is little that really stands out.


@SebastienBubeck @srush_nlp Presenting phi3 as a general LLM may not be the right way to show its potential. Maybe framing it as a reasoning LLM would help?


Impressive milestone, and I really want to see the limits of this combination of synthetic data + DL + symbolic methods now.


@MrCatid @alaa_nouby @ducha_aiki If you need good features now -> dinov2.

If you are looking to work on the next potential breakthrough in SSL -> AIM is a good place to start.

It is hard to compare research on contrastive learning that has matured over 6 years with recent work on autoregressive losses for SSL.


@xl_nlp @melbayad @thoma_gu @MichaelAuli Our work is also inspired by stochastic depth, and shows the potential of this approach for layer pruning.


@sama Your role in getting AI to where it is today has been massive. Thank you for bringing us collectively to this place.


@SashaMTL I've never watched the movie so that I can lie to myself that there is still some new FF material for me to watch in case of emergency.


@TokenShifter @giffmana It is not intended for that, but a lot of people will be able to study and use this model for their applications.


@giffmana I've never seen a more memorable LM meme. It will be your legacy long after people have forgotten ViTs and switched to ViGriffin.


@TheSeaMouse The generation looks good but doesn't stop. My guess is that the API doesn't catch the model's EOS token because it is set up for instruct models, not base models?
