Boaz Barak

@boazbaraktcs

Followers: 27K

Following: 12K

Media: 808

Statuses: 9K

Computer Scientist. See also https://t.co/EXWR5k634w . @harvard @openai opinions my own.

Cambridge, MA

Joined January 2020

This paper makes it possible to verify inference and so reduce its apparent entropy. They use it to block model weight exfiltration, but it's a natural question that I guess will find other applications too. First author is Harvard's own @RoyRinberg!

New paper! A compromised inference server can leak model weights by hiding them in normal-looking responses 🥷. But LLM inference is nearly deterministic, so we can verify outputs with a trusted server & detect this (and inference bugs). This slows data exfiltration by >200x 🧵

1

3

30

OK, I think GPT 5.2 Pro is actually a step change in usefulness for my applications (algebraic geometry/number theory research).

33

54

729

How President Trump reacted to the brutal murder of Rob Reiner and Michele Reiner vs How Rob Reiner reacted to the brutal murder of Charlie Kirk.

446

3K

12K

Regardless of how you felt about Rob Reiner, this is inappropriate and disrespectful discourse about a man who was just brutally murdered. I guess my elected GOP colleagues, the VP, and White House staff will just ignore it because they’re afraid? I challenge anyone to defend it.

16K

17K

155K

Codex team is hiring. We are rapidly improving the Codex agent, but want to build more delight and have a highly ambitious roadmap. Share your cool fork of our open source codex repo or similarly impressive rust oss project. Opportunities for full time and contracting.

101

61

1K

Proud of our *GPT5.2 Thinking*. We focused on economically valuable tasks (coding, sheets, slides), as shown by GDPval: 71% wins+ties, 11x faster, and 100x cheaper than experts. There's still a lot to improve, including UX, better connectors, and reliability. It's just the beginning!

17

34

476

Excited to highlight a deep dive from one of our third party assessors, @Irregular, focused on evaluating frontier Cyber capabilities: https://t.co/w3IAftddkq You can read more about the capability and safety evaluations in the 5.2 system card:

irregular.com

This case study shares Spell Bound, a complex cryptographic challenge, part of our Atomic Tasks module we used to test GPT-5.2 on realistic, expert-level security tasks. It offers a concrete look at...

0

1

6

👋@worktrace_ai is out of stealth! Which also means I've officially rejoined the workforce...I couldn't help but join @deepakv91 to pursue this vision together. I really think we & our amazing team are on track to make a meaningful difference in bridging the AI divide. Join us!

Today, we're launching @worktrace_ai to help businesses uncover their best automation opportunities and build those automations. Our founders, Angela Jiang (product manager of GPT-3.5 and GPT-4 at OpenAI) and Deepak Vasisht (UIUC CS professor, MIT researcher, IIT graduate of the

21

17

128

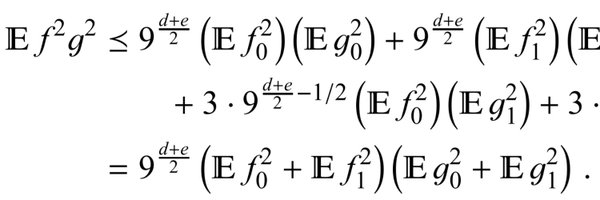

This blog post is worth reading and has some good points, though ultimately I think wrong. There is no question that computation is physical. But the question is how far we are from the absolute physical limits. There is zero reason to think that evolution designed humans to be

My new blog post discusses the physical reality of computation and why this means we will not see AGI or any meaningful superintelligence:

14

13

241

Oh no! Students are asking for books from the library!

Just visited the nearby university library and was fascinated to learn that students are bombarding it with requests for references that do not exist--the product of ChatGPT and its ilk. "It's a goddam nuisance," the librarian said. "They don't believe it's all made up."

10

1

81

Students in my course https://t.co/CDh0KsicRy will present their awesome projects on Thursday 12/11. If you are in the Boston area, feel free to drop by!

1

5

47

It's been a thrill and an honor to collaborate with Terry Tao on this short and friendly introduction to six core areas of mathematics that, taken together, reveal the creative essence, analytical power, and wondrous connectedness of the mathematical enterprise.

🚨New book announcement! 🚨Renowned mathematician and Fields medalist Terence Tao’s SIX MATH ESSENTIALS is coming fall 2026. Tao’s first popular math book introduces six core ideas that have guided mathematicians from antiquity to the frontiers of what we know today.

5

45

482

Please take a moment to ponder the ringing stupidity of the concept "real biological name"

HHS just updated Rachel Levine’s official portrait to his real biological name: Richard. An HHS spokesperson said: "We remain committed to reversing harmful policies enacted by Levine and ensuring that biological reality guides our approach to public health."

114

203

5K

New post: An Ambitious Vision for Interpretability. Understanding is essential for ensuring things don't break unexpectedly. AMI is a big risky bet, but so is all ambitious research. AMI is tractable: it has good empirical feedback loops, and we've already made a lot of progress.

11

27

237

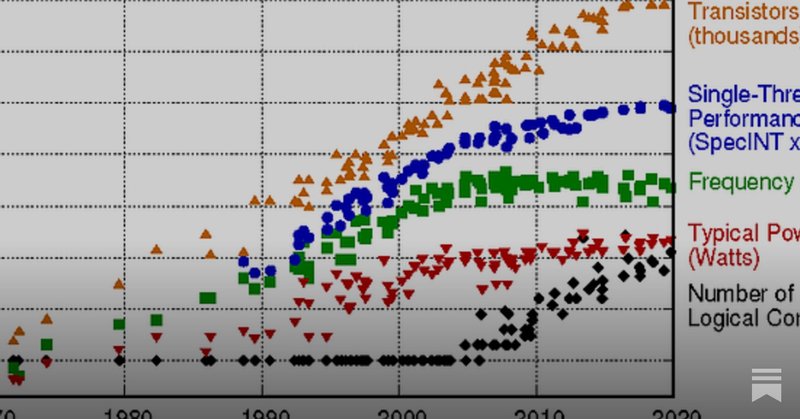

These are all sorry excuses for progress. None of these can compensate for the fact that I had more hair in the 90s.

Tomatoes, raspberries, automobiles, televisions, cancer drugs, women’s shoes, insulin monitoring, home security monitoring, clothing for tall women (which functionally didn’t exist until about 2008), telephone service (remember when you had to PAY EXTRA to call another area

7

1

74

People are chimping out at Matt over this but nobody has been able to name one thing that has significantly grown in quality in the past 10-20 years. Every commodity, even as they have become cheaper and more accessible has decreased in quality. I am begging somebody to name

144

131

2K

I think I’ve published the first research article in theoretical physics in which the main idea came from an AI - GPT5 in this case. The physics research paper itself (on QFT and state-dependent quantum mechanics) has been published in Physics Letters B. I've written an

53

128

897