Awni Hannun (@awnihannun)

Followers: 34K · Following: 8K · Media: 642 · Statuses: 4K

Quantized Gemma 2B runs pretty fast on my iPhone 15 Pro in MLX Swift. Code & docs: It's comparable to GPT-3.5 Turbo and Mixtral 8x7B in @lmsysorg benchmarks but runs efficiently on an iPhone. Pretty wild.

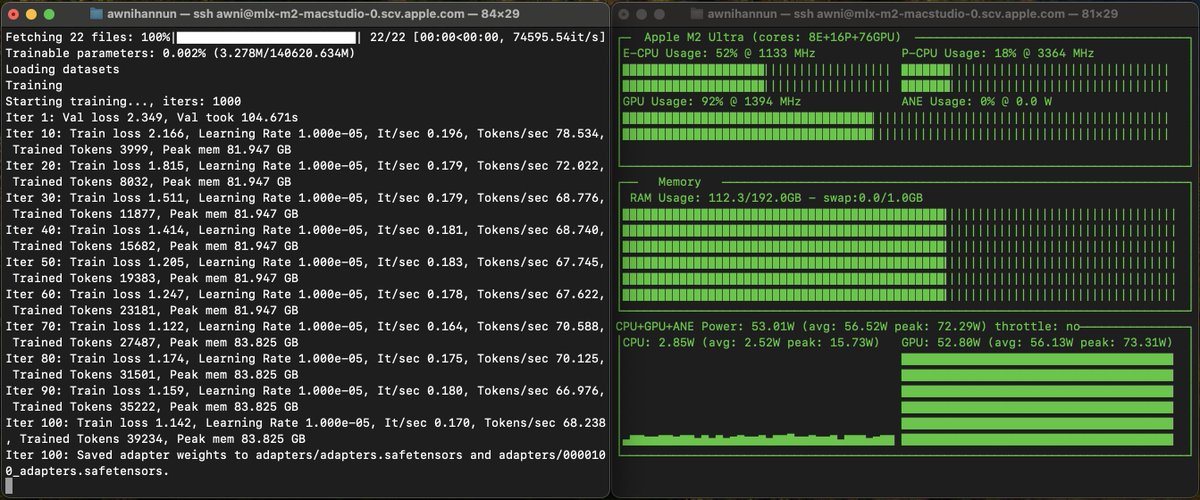

QwQ-32B evals on par with DeepSeek R1 680B but runs fast on a laptop. Delivery accepted. Here it is running nicely on an M4 Max with MLX. A snippet of its 8k-token-long thought process:

Today, we release QwQ-32B, our new reasoning model with only 32 billion parameters that rivals cutting-edge reasoning models, e.g., DeepSeek-R1. Blog: HF: ModelScope: Demo: Qwen Chat:

Llama 3.1 8B running in 4-bit on my iPhone 15 Pro with MLX Swift. As close as we've been to a GPT-4 in the palm of your hand. Generation speed is not too bad. Thanks @DePasqualeOrg for the port.
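
A back-of-the-envelope estimate shows why an 8B model fits on a phone at all: weight memory is roughly parameters × bits per weight ÷ 8. The sketch below makes two assumptions about the storage layout. First, group-wise quantization stores one 16-bit scale and one 16-bit bias per group of 64 weights, which adds 0.5 bits per weight. Second, the parameter counts are the nominal ones from the model names. It ignores the KV cache, activations, and any layers kept at higher precision, so treat the results as lower bounds.

```python
def weight_bytes(num_params: float, bits: int, group_size: int = 64, scale_bits: int = 16) -> float:
    """Approximate storage for group-wise quantized weights, in bytes.

    Assumes each group of `group_size` weights carries one scale and one
    bias of `scale_bits` bits each (a hypothetical layout for this estimate).
    """
    bits_per_weight = bits + 2 * scale_bits / group_size
    return num_params * bits_per_weight / 8


# Nominal parameter counts taken from the model names (not exact counts).
for name, params in [("Gemma 2B", 2e9), ("Llama 3.1 8B", 8e9)]:
    gb = weight_bytes(params, bits=4) / 1e9
    print(f"{name}: ~{gb:.1f} GB of 4-bit weights")
```

Under these assumptions the 8B model needs about 4.5 GB for its weights, compared with about 16 GB at 16 bits. That gap is what makes 4-bit practical on a phone.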

@mrsiipa IMO the GPU programming model is the most important thing to learn, and it's pretty much the same for Metal, CUDA, etc. The different syntaxes are all bijections of one another: if you know one, you can learn another quickly. For learning on a MacBook, check out Metal GPU puzzles with.
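
The shared programming model can be modeled in a few lines of plain Python. A kernel is written from the point of view of a single thread. That thread derives a global index from its group id and local id, then guards against running past the end of the data. In CUDA this index is `blockIdx.x * blockDim.x + threadIdx.x`, and in Metal it is available as `thread_position_in_grid`. The sequential loop below models only the indexing, not real parallel execution. The helper names (`launch`, `add_kernel`) are hypothetical.

```python
def launch(kernel, num_groups: int, threads_per_group: int, *args) -> None:
    """Call `kernel` once per (group, thread) pair.

    A GPU runs these concurrently; this loop only models the indexing.
    """
    for group_id in range(num_groups):
        for local_id in range(threads_per_group):
            kernel(group_id, local_id, threads_per_group, *args)


def add_kernel(group_id, local_id, group_size, a, b, out):
    # Global index: blockIdx.x * blockDim.x + threadIdx.x in CUDA,
    # thread_position_in_grid in Metal.
    i = group_id * group_size + local_id
    if i < len(out):  # guard: the grid can be larger than the data
        out[i] = a[i] + b[i]


n = 10
a = list(range(n))
b = [10 * v for v in range(n)]
out = [0] * n
threads = 4
groups = (n + threads - 1) // threads  # ceiling division: 3 groups of 4 threads
launch(add_kernel, groups, threads, a, b, out)
print(out)  # [0, 11, 22, 33, 44, 55, 66, 77, 88, 99]
```

The last group has two idle threads, and those are exactly the ones the bounds check stops.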

MLX was open-sourced exactly one year ago 🥳. It's now the second most starred and forked open-source project from Apple, after the Swift programming language.

Just in time for the holidays, we are releasing some new software today from Apple machine learning research. MLX is an efficient machine learning framework specifically designed for Apple silicon (i.e. your laptop!). Code: Docs:

4-bit quantized DBRX runs nicely in MLX on an M2 Ultra. PR:

Meet #DBRX: a general-purpose LLM that sets a new standard for efficient open source models. Use the DBRX model in your RAG apps or use the DBRX design to build your own custom LLMs and improve the quality of your GenAI applications.

Llama 3 was announced a few hours ago. It's already running locally on an iPhone thanks to MLX Swift. 🚀

Llama 3 running locally on iPhone with MLX. Built by @exolabs_ team @mo_baioumy. h/t @awnihannun for MLX & @Prince_Canuma for the port

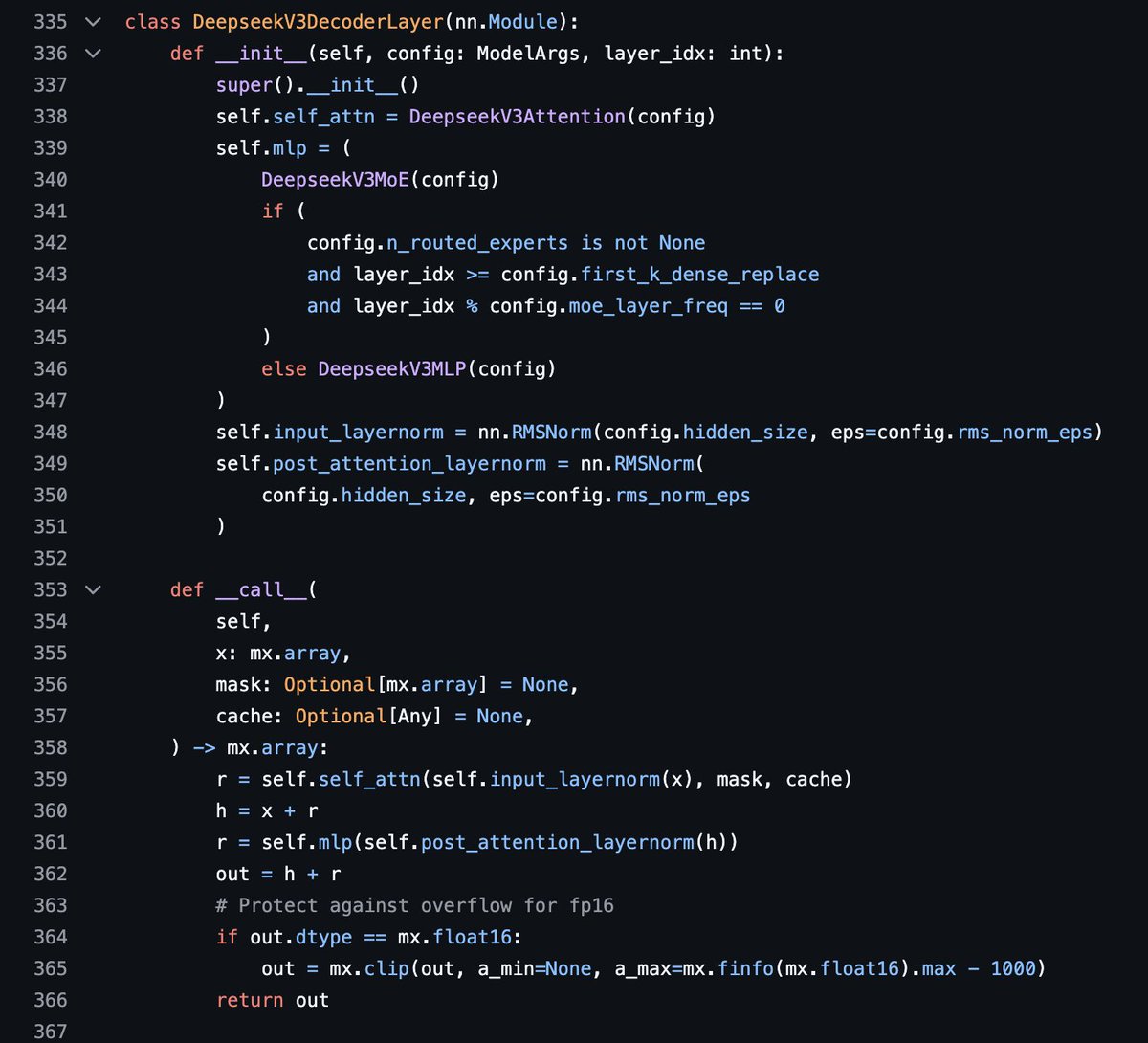

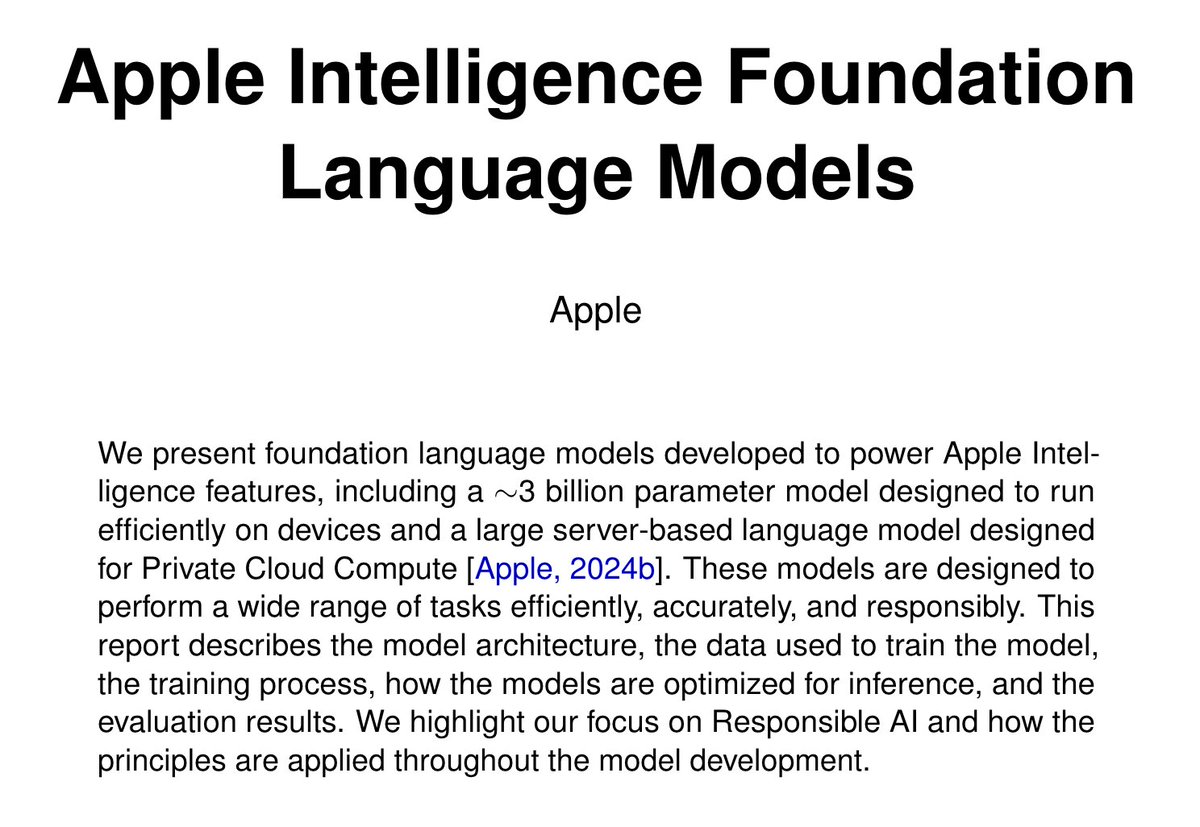

Apple Intelligence Foundation Language Models technical report is out. By @ruomingpang and team. Link:

As Apple Intelligence is rolling out to our beta users today, we are proud to present a technical report on our Foundation Language Models that power these features on devices and cloud: 🧵.

Very excited that the Llama 4 models are MoEs. They are going to fly with MLX on a Mac with enough RAM. Here's the minimum setup you'd need to run each model in 4-bit:

Introducing our first set of Llama 4 models! We’ve been hard at work doing a complete redesign of the Llama series. I’m so excited to share it with the world today and mark another major milestone for the Llama herd as we release the *first* open source models in the Llama 4
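
MoEs suit a Mac with plenty of unified memory for a specific reason. Every expert must be resident in memory, but each token reads only the weights of the experts it is routed to. The sketch below separates those two quantities. The parameter counts are assumptions for illustration, based on the publicly reported 109B total / 17B active for Llama 4 Scout and 400B total / 17B active for Llama 4 Maverick, so check the model cards before sizing a machine. Quantization scales, the KV cache, and activations are ignored.

```python
def moe_footprint_gb(total_params: float, active_params: float, bits: int = 4) -> dict:
    """Split MoE memory needs into resident weights vs. weights read per token.

    Ignores quantization scales, the KV cache, and activations.
    """
    bytes_per_param = bits / 8
    return {
        # All experts must fit in memory, even though most are idle for any given token.
        "resident_gb": total_params * bytes_per_param / 1e9,
        # Only the routed experts (plus shared layers) are read for each token.
        "read_per_token_gb": active_params * bytes_per_param / 1e9,
    }


# Assumed (total, active) parameter counts; verify against the model cards.
models = {"Llama 4 Scout": (109e9, 17e9), "Llama 4 Maverick": (400e9, 17e9)}
for name, (total, active) in models.items():
    f = moe_footprint_gb(total, active)
    print(f"{name}: ~{f['resident_gb']:.0f} GB resident, ~{f['read_per_token_gb']:.1f} GB read per token")
```

Total memory sets the minimum machine. The much smaller per-token read is what should let decoding run at roughly the speed of a dense model the size of the active parameters.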

Llama 3.3 70B in 4-bit runs nicely on a 64GB M3 Max in MLX LM (~10 toks/sec). Would be even faster on an M4 Max. Yesterday's server-only 405B is today's laptop 70B:

"a new 70B model that delivers the performance of our 405B model" is exciting because I might just be able to run a quantized version of the 70B on my 64GB Mac - looking forward to some GGUFs of this.

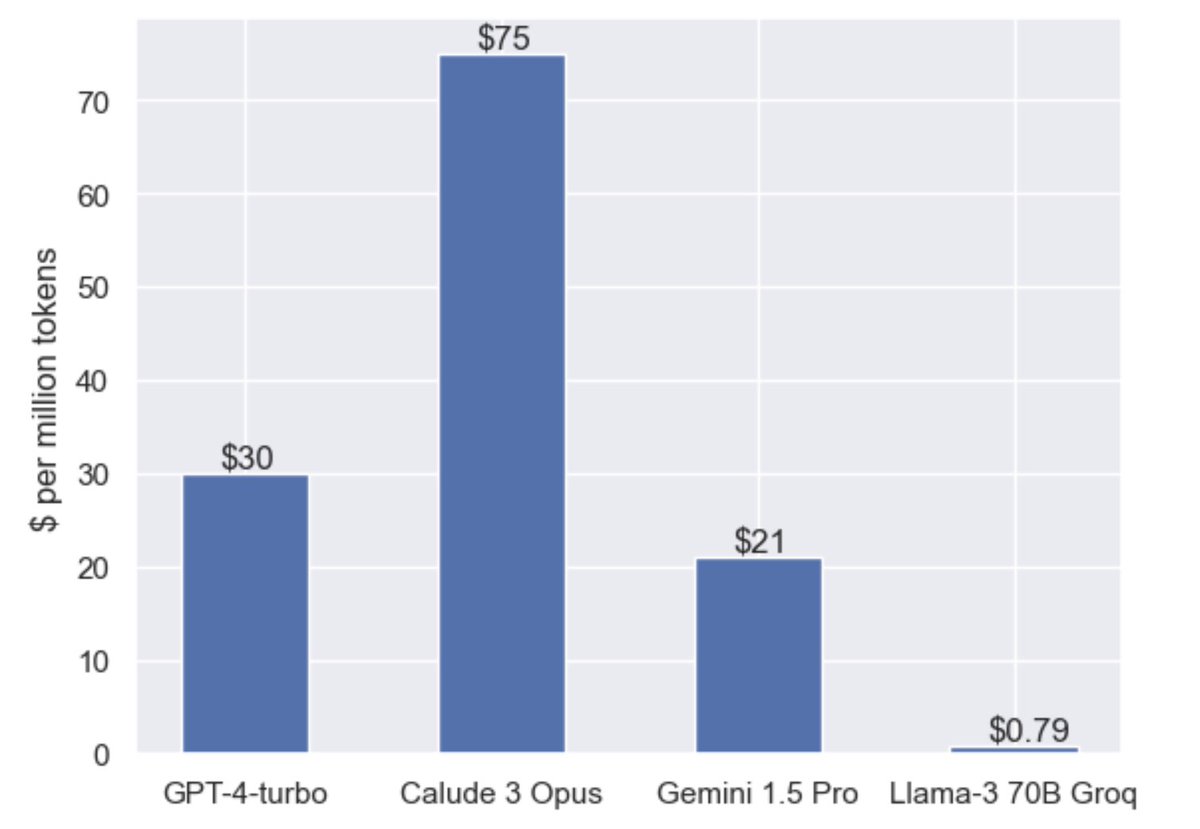

This is an important chart for LLMs. $/token for high-quality LLMs will probably need to fall rapidly. @GroqInc leading the way.

SmolLM2 135M in 8-bit runs at almost 180 toks/sec with MLX Swift running fully on my iPhone 15 Pro. H/t the team at @huggingface for the small + high-quality models.

Nice new video tutorial on fine-tuning locally with MLX. Covers everything from environment setup and data-prep to troubleshooting results and running fine-tunes with Ollama.

🔥 Fine-tune AI models on your Mac? Yes, you can! Sharing my hands-on guide to MLX fine-tuning, from a former @Ollama team member's perspective. No cloud needed, just your Mac. #MLX #MachineLearning

An excellent guide to get started fine-tuning locally with MLX + LoRA:

I’ve created a step-by-step guide to fine-tuning an LLM on your Apple silicon Mac. I’ll walk you through the entire process, and it won’t cost you a penny because we’re going to do it all on your own hardware using Apple’s MLX framework.
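
These guides use LoRA. LoRA freezes a pretrained weight matrix W and learns a low-rank update, so the layer computes W x + (alpha / r) · B A x. The sketch below implements that standard formulation in plain Python; it is not MLX LM's implementation. A starts with small random values and B starts at zero, so the adapted layer is initially identical to the base layer. The class name and default hyperparameters are illustrative.

```python
import random


def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


class LoRALinear:
    """A frozen linear layer W plus a trainable low-rank update B @ A."""

    def __init__(self, W, r=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W                                                                  # frozen, d_out x d_in
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # trainable, r x d_in
        self.B = [[0.0] * r for _ in range(d_out)]                                  # trainable, d_out x r, zero-init
        self.scale = alpha / r

    def __call__(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A and B are trained: r * (d_in + d_out) values.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


W = [[1.0, 0.0, 2.0], [0.5, -1.0, 0.0], [0.0, 3.0, 1.0], [2.0, 2.0, 2.0]]
layer = LoRALinear(W, r=2)
x = [1.0, 2.0, 3.0]
print(layer(x) == matvec(W, x))  # True: B starts at zero, so the update is a no-op

# At realistic sizes the savings are large, e.g. a 4096 x 4096 projection with r = 8:
print(8 * (4096 + 4096), "trainable vs", 4096 * 4096, "frozen")
```

Initializing B to zero means fine-tuning starts exactly from the pretrained model, and only the small A and B matrices need gradients and optimizer state.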

A Mistral 7B pre-converted to 4-bit quantization runs with no problem on an 8GB M2 🚀

Big update to MLX, but especially 🥁: N-bit quantization and quantized matmul kernels! Thanks to the wizardry of @angeloskath. pip install -U mlx
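
N-bit quantization is commonly done group-wise. Each group of consecutive weights gets its own scale and offset, and each weight is rounded to an integer in [0, 2**bits - 1]. The pure-Python sketch below shows affine min/max quantization and its reconstruction error. It illustrates the general technique, not MLX's kernels, and the function names and defaults (4 bits, groups of 64) are assumptions.

```python
import random


def quantize(weights, bits=4, group_size=64):
    """Affine group-wise quantization: w ≈ q * scale + bias, with q in [0, 2**bits - 1]."""
    levels = 2 ** bits - 1
    q, scales, biases = [], [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        scale = (hi - lo) / levels if hi > lo else 1.0  # constant group: any scale works
        scales.append(scale)
        biases.append(lo)
        q.extend(min(levels, max(0, round((w - lo) / scale))) for w in group)
    return q, scales, biases


def dequantize(q, scales, biases, group_size=64):
    """Reconstruct approximate weights from integer codes and per-group parameters."""
    return [v * scales[i // group_size] + biases[i // group_size] for i, v in enumerate(q)]


rng = random.Random(0)
w = [rng.gauss(0.0, 0.02) for _ in range(256)]
q, scales, biases = quantize(w, bits=4, group_size=64)
w_hat = dequantize(q, scales, biases, group_size=64)
max_err = max(abs(a - b) for a, b in zip(w, w_hat))
print(f"{len(scales)} groups, max abs error {max_err:.5f} (half a step: {max(scales) / 2:.5f})")
```

Rounding to the nearest level bounds each weight's error by half of its group's step size. Smaller groups tighten that bound at the cost of storing more scales and biases.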

Learn about LoRA fine-tuning LLMs locally with MLX in this new blog post:

My blog on fine-tuning LLMs using MLX was just published on @TDataScience. I'm deeply impressed with what @awnihannun and Apple have built out. 3 key takeaways from my blog:.💻Technical Background: Apple’s custom silicon and the MLX library optimize memory transfers between CPU,

Exciting new project: MLXServer. An easy way to get started with LLMs locally. HTTP endpoints for text generation, chat, converting models, and more. Setup: pip install mlxserver. Docs: Example:

Mustafa (@maxaljadery) and I are excited to announce MLXserver: a Python endpoint for downloading and performing inference with open-source models optimized for Apple Metal ⚙️. Docs:

405B Llama 3.1 running distributed on just 2 MacBook Pros! Here's the @exolabs_ + MLX journey: 9:40 AM: @Prince_Canuma opens a PR for Llama 3.1 in MLX LM. Partially finished, but has to go OOO for their birthday. 11:40 AM: @ac_crypto updates Prince's first PR. 1:30 PM: I merged the PR after.

2 MacBooks is all you need. Llama 3.1 405B running distributed across 2 MacBooks using @exolabs_ home AI cluster
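
Running one model across two laptops usually means pipeline-style sharding: each machine holds a contiguous block of layers and passes activations on to the next. A simple rule assigns layers in proportion to each machine's memory. The sketch below shows only that rule; it is not exo's code. The layer count (126), the 4.5 bits per weight, and 128 GB per MacBook Pro are all assumptions for illustration. The sketch also treats every layer as the same size and ignores embeddings.

```python
def partition_layers(num_layers: int, device_memory_gb: list[float]) -> list[tuple[int, int]]:
    """Split layers [0, num_layers) into contiguous ranges proportional to device memory."""
    total = sum(device_memory_gb)
    ranges, start, cumulative = [], 0, 0.0
    for i, mem in enumerate(device_memory_gb):
        cumulative += mem
        is_last = i == len(device_memory_gb) - 1
        end = num_layers if is_last else round(num_layers * cumulative / total)
        ranges.append((start, end))
        start = end
    return ranges


num_layers = 126                     # assumed layer count for Llama 3.1 405B
weights_gb = 405e9 * 4.5 / 8 / 1e9   # assumed ~4.5 bits per weight after quantization
memory = [128.0, 128.0]              # assumed RAM of each MacBook Pro, in GB

for (start, end), mem in zip(partition_layers(num_layers, memory), memory):
    share = weights_gb * (end - start) / num_layers
    print(f"layers {start}-{end - 1}: ~{share:.0f} GB of weights on a {mem:.0f} GB machine")
```

Even with an even split, each machine holds over 100 GB of weights under these assumptions. That is why the 405B model needs two high-memory machines rather than one.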

Nice video guide to set up a DeepSeek R1 model running locally with MLX + @lmstudio as a local coding assistant in VS Code + @continuedev

PSA: It takes <2 minutes to set up R1 as a free+offline coding assistant 💁♀️. Big shout out to @lmstudio and @continuedev! 🫶

Phi-2 in MLX: a super high-quality 2.7B model from Microsoft that runs efficiently on devices with less RAM.

Hey @awnihannun, I've now got an MLX implementation of phi-2 working! Missing the generate function rn, but will put together a PR tonight or tomorrow morning. Free to help make it faster?

This might be the most accessible way to learn Metal + GPU programming if you have an M-series Mac. All 14 GPU puzzles (in increasing order of difficulty) in Metal, using MLX custom kernels so you can do it all from Python.

Introducing Metal Puzzles! At @awnihannun's request, I've ported @srush_nlp's GPU Puzzles from CUDA to Metal using the new Custom Metal Kernels in MLX!
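
The threadgroup reduction is a classic puzzle-style pattern. Threads in a group cooperatively sum values in shared (threadgroup) memory, halving the number of active threads at each step, with a barrier between steps. The Python sketch below simulates that schedule sequentially. It illustrates the pattern and is not one of the actual Metal Puzzles solutions; the function names are hypothetical.

```python
def threadgroup_sum(values, threads_per_group):
    """Simulate a tree reduction inside one threadgroup of power-of-two size."""
    assert threads_per_group > 0 and threads_per_group & (threads_per_group - 1) == 0
    # Each thread loads one element into threadgroup memory (0 if past the end).
    shared = [values[i] if i < len(values) else 0 for i in range(threads_per_group)]
    stride = threads_per_group // 2
    while stride > 0:
        # Threads with local id < stride each add one partner. On a GPU this
        # loop body runs in parallel and a barrier separates the steps.
        for local_id in range(stride):
            shared[local_id] += shared[local_id + stride]
        stride //= 2
    return shared[0]


def grid_sum(values, threads_per_group=8):
    """One partial sum per threadgroup, then a final pass over the partials."""
    partials = [
        threadgroup_sum(values[s:s + threads_per_group], threads_per_group)
        for s in range(0, len(values), threads_per_group)
    ]
    return sum(partials)  # on a GPU: a second kernel launch or atomics


print(grid_sum(list(range(100))))  # 4950
```

Running the inner loop sequentially is safe here. Within a step, threads only write indices below `stride` and only read indices at or above it, so no thread reads a value that another thread updates during the same step.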

Just ported Gemma from @GoogleDeepMind to MLX. Gemma is almost identical to a Mistral / Llama style model, with a couple of distinctions that you model mechanics might be interested in 👇
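
Here are two of the distinctions, as I understand Gemma's public reference code. The 👇 thread itself is not included here, so treat this list as an assumption, and it is not exhaustive. First, RMSNorm multiplies by (1 + weight) instead of weight. Second, token embeddings are scaled by sqrt(hidden size) before the first layer. The plain-Python sketch below contrasts the Llama-style and Gemma-style versions.

```python
import math


def rms_norm(x, weight, eps=1e-6, gemma_style=False):
    """RMSNorm. Llama style scales by `weight`; Gemma style scales by `1 + weight`."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    gains = [(1.0 + w) if gemma_style else w for w in weight]
    return [v / rms * g for v, g in zip(x, gains)]


def embed(table, token_id, gemma_style=False):
    """Look up a token embedding; Gemma style multiplies it by sqrt(hidden size)."""
    row = table[token_id]
    factor = math.sqrt(len(row)) if gemma_style else 1.0
    return [v * factor for v in row]


x = [3.0, 4.0]
zeros = [0.0, 0.0]
print(rms_norm(x, zeros))                    # Llama style: zero weights zero the output
print(rms_norm(x, zeros, gemma_style=True))  # Gemma style: zero weights leave a unit-RMS vector
```

In practice, checkpoint conversion code must not mix the two conventions: a stored weight of 0 means "identity" in one and "zero" in the other.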

Two really nice recent papers from Apple machine learning research:
- Scaling laws for MoEs
- Scaling laws for knowledge distillation

Work by @samira_abnar @danbusbridge et al.

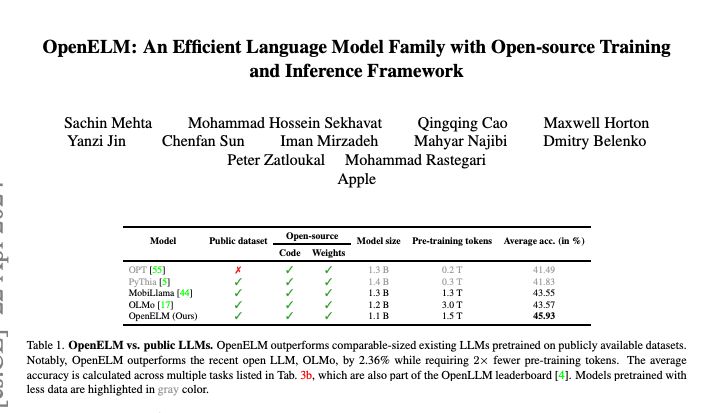

Cool new work from some colleagues at Apple: more accurate LLMs with fewer parameters and fewer pre-training tokens. Also has MLX support out of the box! Code here:

Apple presents OpenELM. An Efficient Language Model Family with Open-source Training and Inference Framework. The reproducibility and transparency of large language models are crucial for advancing open research, ensuring the trustworthiness of results, and

New Mixtral 8x22B runs nicely in MLX on an M2 Ultra. 4-bit quantized model in the 🤗 MLX Community: h/t @Prince_Canuma for the MLX version and v2ray for the HF version
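
Mixtral models are sparse mixtures of experts, as described in the Mixtral paper. Each MoE layer has 8 expert MLPs and a router that picks the top 2 per token, weighting their outputs by a softmax over the two selected router logits. The toy plain-Python sketch below shows that routing. Hypothetical scalar "experts" stand in for the MLPs, and the router scores are made up.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, router_logits, experts, k=2):
    """Route one token to its top-k experts and mix their outputs."""
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    gates = softmax([router_logits[i] for i in top])  # softmax over the selected logits only
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only k of the experts are ever evaluated
        out = [o + gate * v for o, v in zip(out, y)]
    return out, top, gates


# Hypothetical toy experts: expert i scales its input by (i + 1).
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(8)]
logits = [0.1, 0.5, -1.0, 2.0, 0.0, 1.5, -0.3, 0.2]  # made-up router scores for one token
out, top, gates = moe_layer([1.0, 2.0], logits, experts)
print(top, [round(g, 3) for g in gates], out)
```

Only 2 of the 8 experts run per token, so per-token compute and weight reads are a fraction of the total. All 8 experts still have to be in memory, which is why a large-memory machine like the M2 Ultra matters.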

SD3 runs locally with MLX thanks to the incredible work from @argmaxinc. Super easy setup, docs here: Takes < 30 seconds to generate an image on my M1 Max:

DiffusionKit now supports Stable Diffusion 3 Medium. MLX Python and Core ML Swift inference work great for on-device inference on Mac! MLX: Core ML: Mac App: @huggingface Diffusers App (Pending App Store review)

Real-time speech-to-speech translation while preserving your voice! And it runs on your laptop or iPhone in MLX / MLX Swift:

Meet Hibiki, our simultaneous speech-to-speech translation model, currently supporting 🇫🇷➡️🇬🇧. Hibiki produces spoken and text translations of the input speech in real-time, while preserving the speaker’s voice and optimally adapting its pace based on the semantic content of the

RT if you want day-zero support for @OpenAI's new open-weights model to run fast on your laptop with MLX.

TL;DR: we are excited to release a powerful new open-weight language model with reasoning in the coming months, and we want to talk to devs about how to make it maximally useful: we are excited to make this a very, very good model! __ we are planning to.

Llama 3 models are in the 🤗 MLX Community thanks to @Prince_Canuma. Check them out: The 4-bit 8B model runs at > 104 toks/sec on an M2 Ultra.
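
Single-stream token generation is usually memory-bandwidth bound. Each new token reads roughly every weight once, so tokens/sec ≤ bandwidth ÷ weight bytes. The sketch below compares the > 104 toks/sec figure with that bound. It makes three assumptions: 800 GB/s of memory bandwidth (Apple's quoted figure for M2 Ultra), the nominal 8B parameter count, and 4.5 bits per weight (4-bit weights plus per-group scale and bias overhead).

```python
def weight_gb(num_params: float, bits_per_weight: float) -> float:
    """Size of the model weights in GB."""
    return num_params * bits_per_weight / 8 / 1e9


def max_decode_toks_per_sec(num_params: float, bits_per_weight: float, bandwidth_gb_s: float) -> float:
    """Upper bound on single-stream decode speed if every weight is read once per token.

    Ignores KV-cache reads, compute time, and kernel overheads, so real speeds are lower.
    """
    return bandwidth_gb_s / weight_gb(num_params, bits_per_weight)


params = 8e9        # Llama 3 8B (nominal)
bits = 4.5          # assumed: 4-bit weights + per-group scale/bias overhead
bandwidth = 800.0   # assumed M2 Ultra memory bandwidth in GB/s
observed = 104.0    # from the post above

bound = max_decode_toks_per_sec(params, bits, bandwidth)
print(f"weights ~{weight_gb(params, bits):.1f} GB, bound ~{bound:.0f} toks/sec")
print(f"observed {observed:.0f} toks/sec is ~{observed / bound:.0%} of the bound")
```

A measurement below the bound is expected, because KV-cache reads, compute, and kernel overheads all reduce real throughput. The observed-to-bound ratio is a handy sanity check when comparing machines or models.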
