Benjamin Lefaudeux

@BenTheEgg

Followers: 1,189

Following: 1,838

Media: 238

Statuses: 7,748

Crafting pixels w PhotoRoom after some time in sunny California and happy Copenhagen. Meta (xformers, FairScale, R&D), EyeTribe (acq) Mostly tweeting around AI

Anywhere by the sea

Joined August 2009

@hiphopscypher

@VeritasBP

@adamcbest

@howardfineman

Look at the stats and get back here. I’ll help you: Japan homicide rate is 0.02 per 100k per year. US is a thousand times that. What you *think* you know is insignificant when faced with these stats.

2

1

232

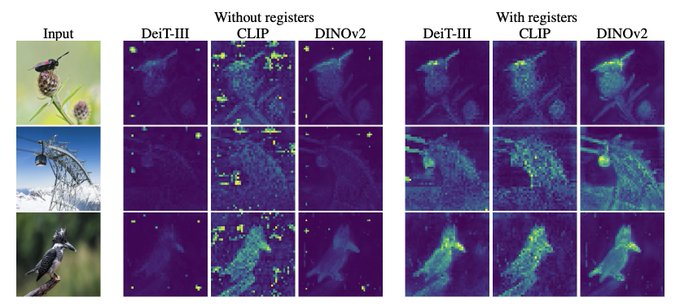

Attention sinks: great read, and pretty close out of principle to

@TimDarcet

's ViT registers

1

22

154

Reading the paper, and as a transformers part nerd (xformers 😘), this feels pretty compelling. One of the first "serious" algorithms I came across was of course Kalman, which has a solid mathematical grounding, good touchstone here (and S4 in general)

Transformers power most advances in LLMs, but its core attention layer can’t scale to long context.

With

@_albertgu

, we’re releasing Mamba, an SSM architecture that matches/beats Transformers in language modeling, yet with linear scaling and 5x higher inference throughput.

1/

42

343

2K

1

7

131

Finally reading Ring Attention (), doesn't look like there's a performant open source implementation in pytorch out there, but feels like something where

@d_haziza

and crew would shine...

Great paper, in Google's garden given TPU strong interconn, Gemini ?

5

11

83

This is really big I think. OpenAI Triton is now compatible with nvidia TRT & AMD ROCm (on top of the original use case with nvidia & python). New lingua franca for GPU kernels, well deserved kudos to Philippe Tillet

1

8

75

@hiphopscypher

@VeritasBP

@adamcbest

@howardfineman

Check out this article when you get the chance. Sometimes saying “I don’t know, let’s look at the numbers” is the most reasonable way out.

1

0

57

This is utterly absurd. The planet is burning and we’re focusing on irrelevant and made up problems (crypto a few years ago, now extinction from AI..).

@EU_Commission

seems really poorly informed here, scientific reasoning and asking experts should be a priority

2

8

53

With a little bit of experience, there’s no way these numbers are true, even if they were measured once. Playing this game you should take best implementation from team Jax vs. best from team PyTorch. 4x difference on SAM makes no sense (same for “it’s XLA” explanation

@fchollet

)

4

1

48

@GergelyOrosz

I don’t think somebody can call him/herself “hands on” in software if they don’t code, really ?

4

0

45

@geraintjohn_

We don’t know each other, but now you know that a stranger keeps you in his thoughts. Hold tight and get better ❤️🩹

0

0

41

We worked a lot on that, and it’s just the beginning

5

2

41

A look back on 2023-early 2024 for the ML team

@photoroom_app

, sharing some insights from our diffusion journey

6

13

40

@barf_stepson

@ctatplay

But why don't you US people vote that insanity out ? You're aware that other comparable countries (ahem, Europe for instance) let you learn without digging your debt grave, right ?

17

2

34

Python is atrocious for parallel work, ProcessPool will never cut it because you're stuck in pickling oblivion and the code becomes an unstable spaghetti plate, Asyncio is overrated for anything which is not simple IO, answer is GIL-less project from Sam Gross.

3

0

31
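A minimal sketch of the "pickling oblivion" point above, assuming only the standard library. `ProcessPoolExecutor` ships callables and arguments to worker processes through `pickle`, so anything that cannot be pickled cannot be submitted. The helper name `can_pickle` is illustrative, not part of any API.

```python
import math
import pickle


def can_pickle(obj):
    """Return True if obj survives pickle.dumps, as a process pool requires."""
    try:
        pickle.dumps(obj)
        return True
    except (pickle.PicklingError, AttributeError, TypeError):
        return False


# An importable, named function is pickled by reference (module + name).
print(can_pickle(math.sqrt))           # True
# A lambda has no importable name, so it cannot cross the process boundary.
print(can_pickle(lambda x: x ** 0.5))  # False
```

In practice this pushes work functions to module level and forces every argument and return value to be picklable, which is one source of the restructuring the tweet complains about.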

Having a look at datatrove () from

@huggingface

, nice to see this out. There’s also Fondant, but other than that not much in the open for what’s a key building block for modern ML: data pre-processing.

3

7

29

A bit short on ML news at

@photoroom_app

recently (well, quiet for a month) because we're cooking something big, but this we just released: colored shadows, the model understands transparency ! This comes from the app in 5 seconds

2

1

26

@Broun_Dragon

@SmallHandsDon

@RALee85

@AbraxasSpa

Not all leaders steal the country wealth, whataboutism only goes so far.. Putin is billionaire in goods after “leading” an impoverished country. Can you think of another western leader with comparable stealing skills ? So no, not just like any pilot in any country

1

0

23

@kikithehamster

@barf_stepson

@ctatplay

That's just sad.. I understand that everything is not that simple, but frankly the inability of the US society to position itself on some subjects which are in much better shape in other OECD countries (school, healthcare, police violence, guns..) is baffling.

1

1

22

@Suhail

@ID_AA_Carmack

Did you check out

? It’s widely used these days with webui and low memory dreambooth or textual inversion

1

0

22

Some of the examples in the blog post are early engineering demos, updating them today. Here's a more recent "erase" example which I think is nuts. Soon in your pocket, kudos to

@mearcoforte

A look back on 2023-early 2024 for the ML team

@photoroom_app

, sharing some insights from our diffusion journey

6

13

40

3

2

21

Did you know that superresolution is a surprisingly interesting topic ? Like, the frequencies you need to add (hallucinate) are super context-dependent, you don't want to superresolve bokeh. Super proud of the ML team at

@photoroom_app

(pic credits: )

3

3

21

How

@photoroom_app

speeds up

#stablediffusion

using xformers, explained:

Attention still matters.. Matthieu also contributed a PR to

@huggingface

, eventually this should become largely available

1

2

20

Really cool results, even the "broken" part is fascinating, super smart solution ! Interesting parallel with some discussions on autoregressive LLMs being doomed without scratchpads

1

1

20

Training a diffusion model, learning

#42

: stay calm and carry on. Incoming soon on

@photoroom_app

, our model, data and training stacks :)

1

1

19

@francoisfleuret

You would risk overshooting ? Undershooting is not as bad, it could take you more steps but you’ll get there. Overshoot and you may never get there.

1

1

19
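The thread being replied to is not visible here, so the following is a sketch under the assumption that the point is about step size in gradient descent. It uses a toy objective, f(x) = x², chosen purely for illustration: a step that is too small converges slowly, while one that is too large moves away from the minimum at every iteration.

```python
def gradient_descent(step_size, steps=50, x0=1.0):
    """Minimize f(x) = x**2 (gradient 2x) with a fixed step size."""
    x = x0
    for _ in range(steps):
        x -= step_size * 2 * x
    return x


# Undershoot: each step multiplies x by 0.98, slow but heading to 0.
small = gradient_descent(step_size=0.01)
# Overshoot: each step multiplies x by -1.2, so |x| grows without bound.
large = gradient_descent(step_size=1.1)

print(abs(small) < 1.0)   # True, still approaching the minimum
print(abs(large) > 1.0)   # True, further away than the starting point
```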

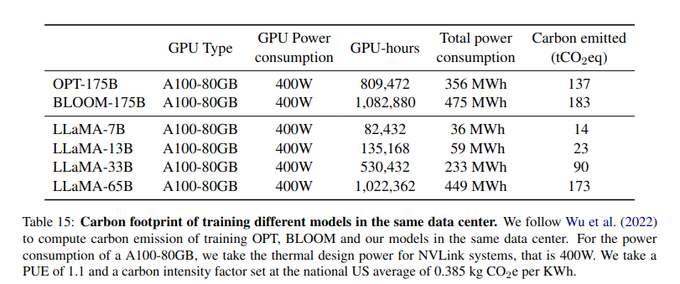

Makes no sense to me, focused on anecdata. Scientific progress doesn’t work like this, when comparing training & architectures what merit is there to which data center the model was trained on ? Aim is repro or improve, past is useless. Even

@JeffDean

gets this backwards it seems

2

1

18

We’re hiring a senior ML engineer in the ML team, remote in Europe is good, and we’re covering many cutting edge topics !

3

8

18

Classification is not a modern computer vision task, I wish people stopped using it for broad architecture prescriptions. Where is MLPMixer again ? “Optimal ViT shape” paper ? Right

2

1

16

@Eastern_Border

He literally says (typical Macron) "neither follow the most warmongering ones, nor abandon the eastern countries so that they have to act alone", it's convoluted but it's actually supportive of Eastern Europe.

7

0

16

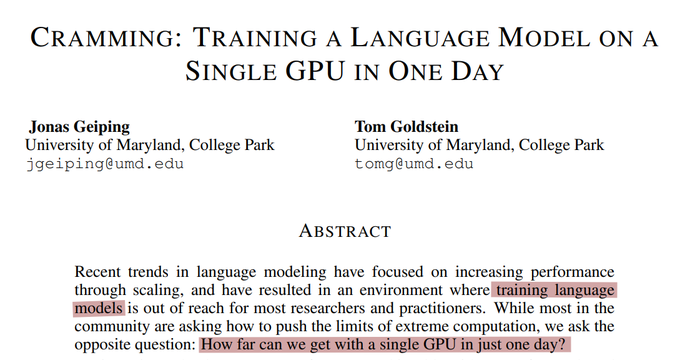

Great thread and insights, some mirror vision indeed and lots I didn’t know. Caveat is that this doesn’t improve on BERT, seems trivial but to keep in mind. Would be nice to see this with GPT and compare the keepers, looks like

@karpathy

is on that these days

2

1

17

Something everyone should know, but with an eye on historical perspectives I think. Historically attention is IO bound even more than flops bound, so the incentive was big for LLM practitioners to pile up on model dim. Flash relaxed that.. and openAI moved to 32k context

1

2

16
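A back-of-the-envelope sketch of why context length was painful before fused kernels, assuming a naive implementation that materializes the full score matrix in fp16. The 2,048-token baseline is a hypothetical comparison point; only the 32k figure comes from the tweet.

```python
def attention_scores_bytes(seq_len, bytes_per_element=2):
    """Memory for one head's seq_len x seq_len score matrix (fp16 assumed)."""
    return seq_len * seq_len * bytes_per_element


short_ctx = attention_scores_bytes(2048)    # hypothetical baseline context
long_ctx = attention_scores_bytes(32768)    # the 32k context mentioned above

print(short_ctx / 2**20, "MiB per head")    # 8.0 MiB per head
print(long_ctx / 2**30, "GiB per head")     # 2.0 GiB per head
print(long_ctx // short_ctx, "x more")      # 256 x more
```

A 16x longer sequence costs 256x more memory traffic for the scores alone, per head and per layer, which is the quadratic term that Flash-style kernels avoid writing to main memory.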

I personally love

@giffmana

very open takes / opinions + shared recipes and little tricks, not seen that often. Beyond this "style", the track record is very impressive

1

0

16

Illustration for a colleague, the pytorch way to sample data. Never as good as

@francoisfleuret

little book, and a little quick and dirty

2

0

14

It’s also a key part of the soon to be released PhotoRoom model (not vanilla DiT but heavily related). I really think gatekeeping reviews are too noisy, something like arxiv + open reviews/ test of time feels better to my eyes

The Diffusion Transformer paper, by my former-FAIR-and-current-NYU colleague

@sainingxie

and former-Berkeley-student-and-current-OpenAI engineer William Peebles, was rejected from CVPR2023 for "lack of novelty", accepted at ICCV2023, and apparently forms the basis for Sora.

47

417

3K

1

0

15

How is the photoroom backend fast and scalable, with billions of diffusion based images generated a year -and growing- ? Re-posting slides from

@MatthieuToulem1

and

@EliotAndres

from latest GTC, for visibility.

0

4

15

Remember a thread two months ago on how to sell your sneakers () ? Remember this thread from

@matthieurouif

? (). Well, we have new Magic Studio incoming in

@photoroom_app

, now real time. Learning by doing, we're ML artisans :)

3

4

14

@gcvrsa

@silentsara

@brent_bellamy

Honey, check out rear facing car seats, these are by far the safest until age 4 (and are indeed hard to fit in a smallish car even if that doesn't justify crazy American pick ups)

1

0

13

Interesting results, which completely match residual path assumptions if you think about it. Each new layer adds residual information, so simple concepts are nailed early, complex ones late

2

1

14

To the surprise of nobody in the field, but much easier said than done. Congrats

@pommedeterre33

et al. for the Kernl, it was a motivation for xformers at the time and I'm glad that a complete faster Transformer, torch compatible, happened in the open !

12X faster transformer model, possible?

Yes, with

@OpenAI

Triton kernels!

We release Kernl, a lib to speedup inference of transformer models. It's very fast (sometimes SOTA), 1 LoC to use, and hackable to match most transformer architectures.

🧵

7

108

574

0

1

12

Dear TL, we're recruiting at

@photoroom_app

and more specifically in the ML team, if you're interested in wrangling data at scale (100M+), multi modalities, custom labelling models & data science challenges: DM and talent

@photoroom

.com !

Job desc here

0

3

12

I've a huge respect for

@OpenAI

, but this is an incredibly pretentious, self centered and historically wrong take. Swap AGI for RNA vaccines, Crispr , X-rays, relativity theory, antibiotics, .. None of them were any better kept under wraps, and nobody can be trusted with these.

3

0

12

Speeding up multimodal ML: we're rolling out a much faster scene suggestion

@photoroom_app

, which is content aware, editable to your taste, and now almost instant fast. The two top scenes are effectively infinitely fine grained recommendations for this content.

1

2

12

Reacting a bit late, but my take on this (the open part). I've been a follower of SemiAnalysis and

@dylan

for a while, generally impressed. Disagreeing this time.

I think this is based on two premises, largely debatable

1 - winner takes all

2 - bigger is better

2

1

12

@savvyRL

Word2vec was just about clustering, but here there seems to be a spatial component, new right ? Some cities are bound to be written together more often because of non-spatial logic, say a list of capitals for instance. A purely frequentist approach should get the loc wrong, no ?

1

0

12

@typewriteralley

There are existing counter examples to that, take Copenhagen for instance, the bikes and buses don't have to compete. The phrasing could have been "people on buses are often stuck in traffic because one-crewed cars take all the space". City planning education is rare :(

1

0

12

@finbarrtimbers

It’s hard to get good perf out of it, there are great kernels for this in xformers but you don’t typically get the speed that you could expect, for instance picking single coefficients doesn’t fit tensor cores. Blocksparse is much better if you want sparse

1

0

11

Of note: there’s an industry standard for inference called MLPerf. Fastest on nvidia GPU is TensorRT, across many models, go check it out.

Benchmarks are only meaningful best on best, there’s an order of magnitude perf span in between correct implementations.

With a little bit of experience, there’s no way these numbers are true, even if they were measured once. Playing this game you should take best implementation from team Jax vs. best from team PyTorch. 4x difference on SAM makes no sense (same for “it’s XLA” explanation

@fchollet

)

4

1

48

0

0

11

@Jonathan_Blow

Maybe that’s just an easy way to do layoffs, and people want to stuff their agenda in it ? “People want this not to be true, but it’s true” as the saying goes :)

1

0

10

Some tech news from the

@photoroom_app

team, it’s been a while:

- consistent renderings (in beta on the web already), your gen Ai pics look like they come from the same place, instead of being unrelated

1

1

11

None of these pics are completely real, but there’s some reality-informed diffusion :) no outgrowing (I believe

@photoroom_app

is the only company nailing that), but we’re improving on some details. Crazy optim on the backend to get to these speeds (seconds) but more to come

1

2

10

Why are the CPUs so idle, I hear you say ?

Because we precompute everything we can, that's why. Removes most augmentation options, but that's not really a thing for diffusion. That's how we got these 10k img/s (training !) on 16 A100 nodes that I mentioned in the blog post

1

0

10

The Lenna of generative AI just got an upgrade... Still ~instant rendering, now need to ship this (after internal demo and convincing colleagues 😬). Not quite the final checkpoint

2

1

9

@tunguz

We did that for xformers (), in retrospect that was probably a mistake and a white paper on arxiv would have helped getting traction. Most people's receptive fields are tuned to arxiv these days (+derived mailing lists, websites and RSS)

1

0

10

Pretty interesting, and great to see numbers out not using Infiniband, it’s a big part of public clouds offering

1

0

9

Sneak peek, which one is real ?

@photoroom_app

and instant backgrounds, this is still instant but quality and model understanding is going through the roof. Hold on to your socks and stay tuned

3

2

9

@KerenAnnMusic

@lorde

Does "apartheid state" and "colonies" count, or do you have selective eyesight ? Nothing to do with Judaism. With your reasoning Mandela would have died in prison

0

2

8

@melficexd

@cHHillee

they've been GP-GPU for a while, and GP stands for General Purpose, but I guess that most people commenting don't know that either. TensorCores in nvidia chips have been targeted at AI for a long time

0

0

9

And.. we're back in business ! Possibly placebo, but I can feel the heat just looking at the screen

1

0

9

@rasbt

you can try out "metaformers" (ViTs with some patch embedding layers) on cifar in xformers, super simple script

Defaults bring you to 86% cifar10 (not a world record, I know) within 10 minutes on a laptop with a 6M parameters model (half of resnet18)

2

2

9

Cringe-y for me to watch, too slow in the beginning but getting a bit better over time, a presentation I made a couple of weeks back on how some of the

@photoroom_app

AI features work behind the scenes. Not too detailed but hypothetical questions welcome

1

1

7

@ID_AA_Carmack

Initial release was not optimized at all, getting better these days (fusing layers with nvfuser or tensorRT for inference, better attention kernels from xformers and

@tri_dao

, ..). New major improvements may not be iso-weights from now on (to be able to use tinyNN for instance)

1

0

8

@BlancheMinerva

@norabelrose

Are you sure you’re reading this right ? This just shows the updated algorithm goes higher in flops iso-hardware, and is not affected by sequence length too much, but this is not the attention throughput (else it would be in seq/s or similar).

1

0

7
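A small sketch of the distinction drawn above between achieved FLOP/s and throughput in sequences per second. The FLOP count is the usual approximation for one attention head (two matrix products of 2·N·N·d each), and the 100 TFLOP/s figure is a hypothetical constant, not a number from the chart under discussion.

```python
def attention_flops(seq_len, head_dim):
    """Approximate forward FLOPs for one head: QK^T plus scores @ V."""
    return 4 * seq_len * seq_len * head_dim


def sequences_per_second(achieved_flops_per_s, seq_len, head_dim):
    """Throughput implied by a given sustained FLOP/s."""
    return achieved_flops_per_s / attention_flops(seq_len, head_dim)


ACHIEVED = 100e12  # hypothetical, assumed flat across sequence lengths

for n in (1024, 2048, 4096):
    print(n, sequences_per_second(ACHIEVED, n, head_dim=64))
```

Even with a perfectly flat FLOP/s curve, each doubling of the sequence length divides sequences per second by four, so a flat line on a FLOP/s chart says nothing about throughput being independent of length.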