Hugging Face

@huggingface

Followers: 509K

Following: 8K

Media: 447

Statuses: 11K

The AI community building the future. https://t.co/VkRPD0Vclr

Joined September 2016

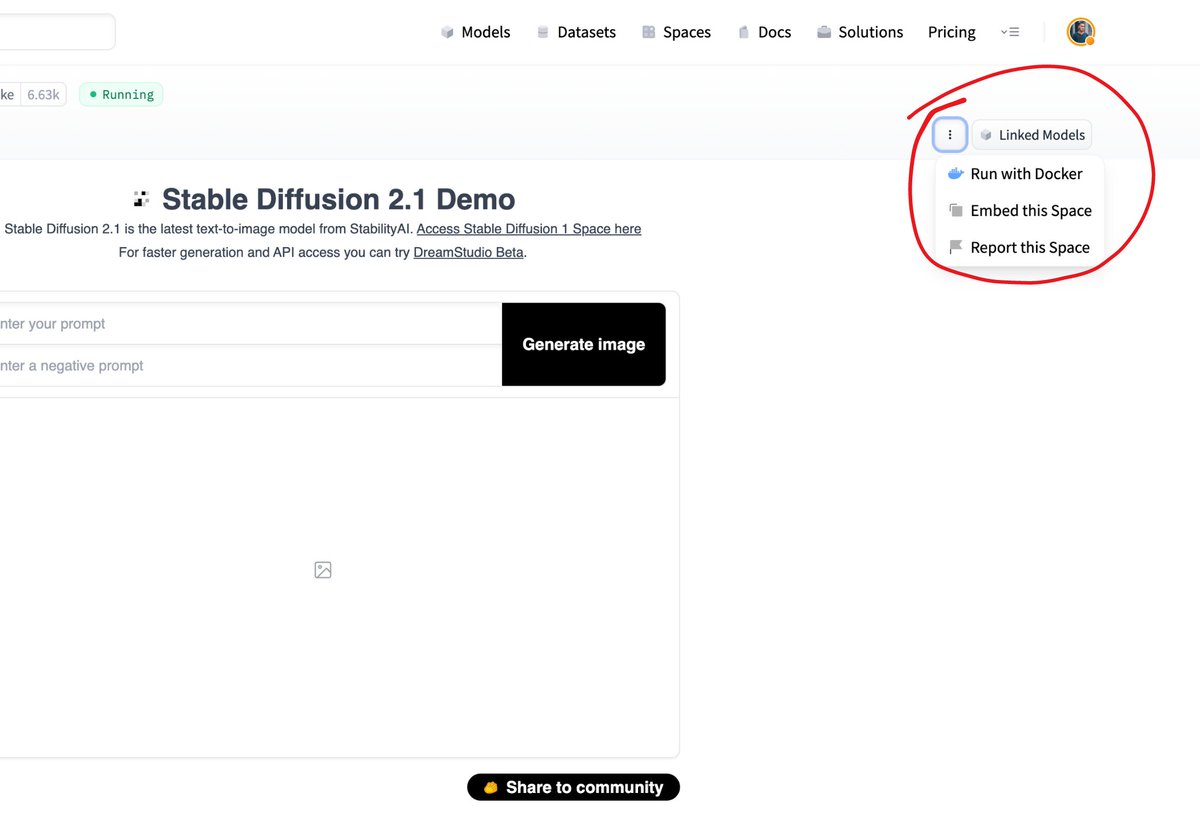

THIS IS BIG! 👀 It's now possible to take any of the >30,000 ML apps from Spaces and run them locally (or on your own infrastructure) with the new "Run with @Docker" feature. 🔥🐳 See an app you like? Run it yourself in just 2 clicks 🤯

34

342

2K

🚨Transformers is expanding to Speech!🚨 🤗Transformers v4.3.0 is out and we are excited to welcome @facebookai's Wav2Vec2 as the first Automatic Speech Recognition model to our library! 👉Now, you can transcribe your audio files directly on the hub:

17

309

1K

SAM, the groundbreaking segmentation model from @Meta, is now available in 🤗 Transformers! What does this mean?
1. One line of code to load it, one line to run it.
2. Efficient batching support to generate multiple masks.
3. pipeline support for easier usage.
More details: 🧵

24

242

1K

Last week @MetaAI publicly released huge LMs, with up to ☄️30B parameters. Great win for Open-Source 🎉 These checkpoints are now in 🤗transformers! But how to use such big checkpoints? Introducing Accelerate and ⚡️BIG MODEL INFERENCE⚡️ Load & USE the 30B model in colab (!) ⬇️

14

234

1K

The first RNN in transformers! 🤯 Announcing the integration of RWKV models in transformers with @BlinkDL_AI and the RWKV community! RWKV is an attention-free model that combines the best from RNNs and transformers. Learn more about the model in this blogpost:

18

261

1K

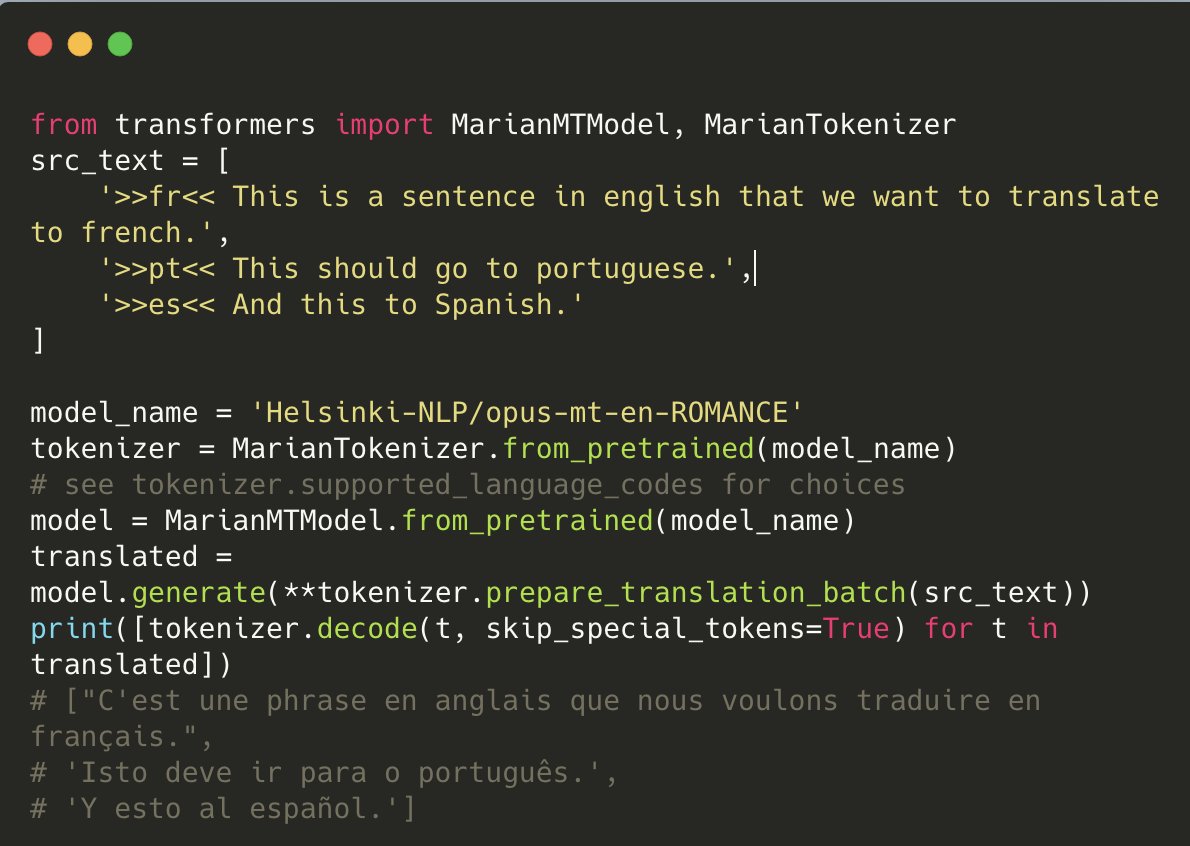

Let’s democratize NLP for all languages! 🌎🌎🌎 Today, with v2.9.1, we are releasing 1,008 machine translation models, covering ` of 140 different languages trained by @jorgtiedemann with @marian, ported by @sam_shleifer. Find your language here: [1/4]

19

343

1K

Long-range sequence modeling meets 🤗 transformers! We are happy to officially release Reformer, a transformer from @GoogleAI that can process sequences as long as 500,000 tokens. Thanks a million, Nikita Kitaev and @lukaszkaiser! Try it out here:

7

251

979

We are honored to be awarded the Best Demo Paper for "Transformers: State-of-the-Art Natural Language Processing" at #emnlp2020 😍. Thank you to our wonderful team members and the fantastic community of contributors who make the library possible 🤗🤗🤗.

28

134

964

Time to push explainable AI 🔬 exBERT, the visual analysis tool to explore learned representations from @MITIBMLab, is now integrated on our model pages for BERT, DistilBERT, RoBERTa, XLM & more! Just click on the tag #exbert on @huggingface’s models page:

5

271

931

🤗 Transformers meets VISION 📸🖼️ v4.6.0 is the first CV-dedicated release!
- CLIP @OpenAI, Image-Text similarity or Zero-Shot Image classification
- ViT @GoogleAI, and
- DeiT @facebookai, SOTA Image Classification
Try ViT/DeiT on the hub (Mobile too!):

10

253

933

Super happy to announce that we are acquiring @pollenrobotics to bring open-source robots to the world! 🤖 Since @RemiCadene joined us from Tesla, we’ve become the most widely used software platform for open robotics thanks to @LeRobotHF and the Hugging Face Hub. Now, we’re

52

183

919

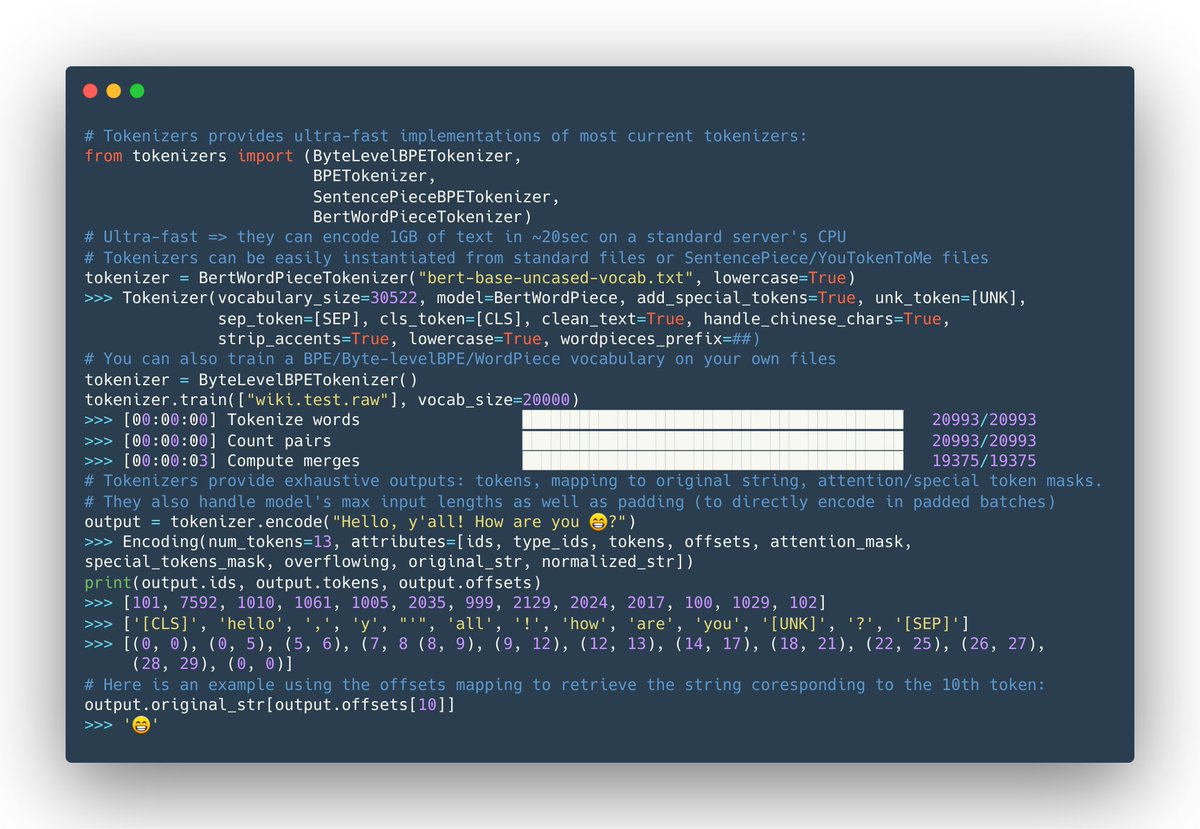

Now that neural nets have fast implementations, a bottleneck in pipelines is tokenization: strings ➡️ model inputs. Welcome 🤗Tokenizers: ultra-fast & versatile tokenization led by @moi_anthony:
- encode 1GB in 20sec
- BPE/byte-level-BPE/WordPiece/SentencePiece
- python/js/rust

11

228

867

Bored at home? Need a new friend? Hang out with BART, the newest model available in transformers (thx @sam_shleifer), with the hefty 2.6 release (notes: ). Now you can get state-of-the-art summarization with a few lines of code: 👇👇👇

14

205

736

Today we are excited to announce a new partnership with @awscloud! 🔥 Together, we will accelerate the availability of open-source machine learning 🤝 Read the post 👉

10

154

700

Document parsing meets 🤗 Transformers! 📄 #LayoutLMv2 and #LayoutXLM by @MSFTResearch are now available! 🔥 They're capable of parsing document images (like PDFs) by incorporating text, layout, and visual information, as in the @gradio demo below ⬇️

10

187

682

We are excited to partner with @AIatMeta to welcome Llama 4 Maverick (402B) & Scout (109B) natively multimodal Language Models on the Hugging Face Hub with Xet 🤗 Both MoE models trained on up to 40 trillion tokens, pre-trained on 200 languages and significantly outperforms its

25

118

711

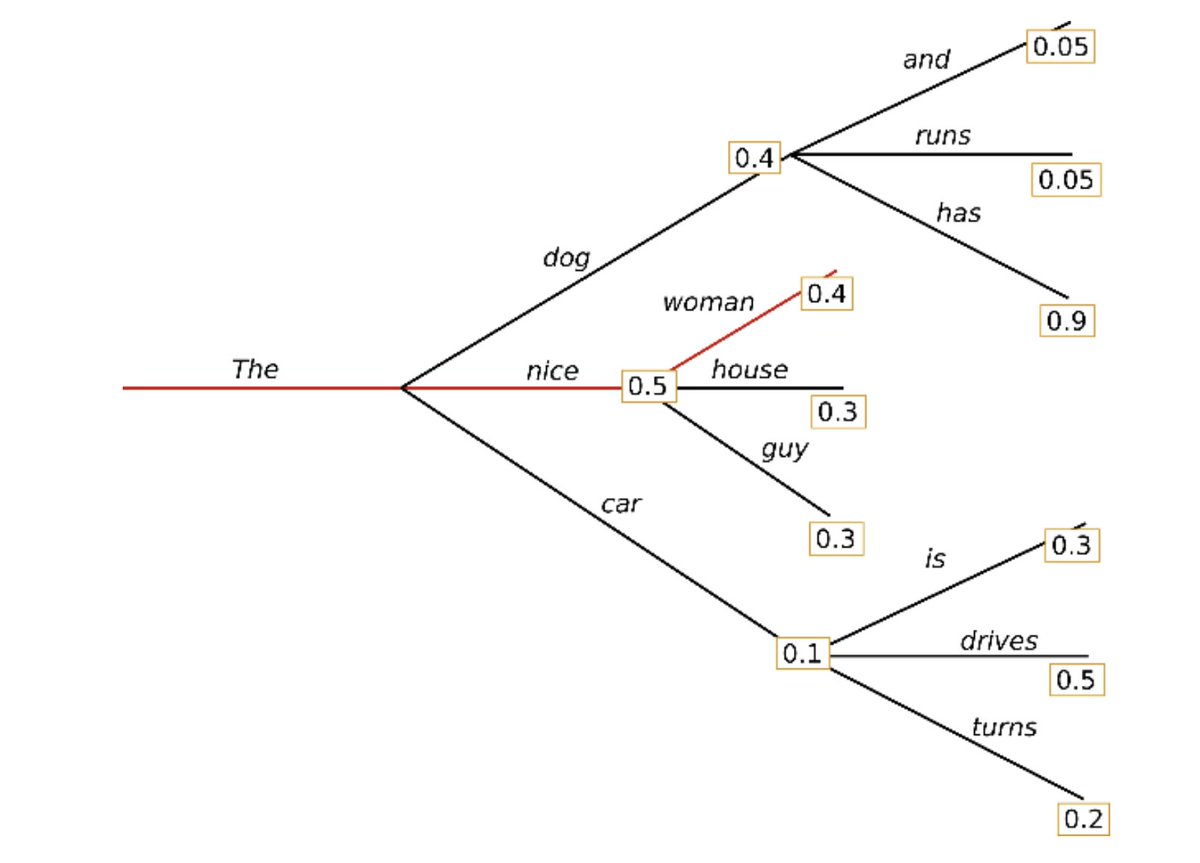

The 101 for text generation! 💪💪💪 This is an overview of the main decoding methods and how to use them super easily in Transformers with GPT2, XLNet, Bart, T5, ... It includes greedy decoding, beam search, top-k/nucleus sampling, ...: by @PatrickPlaten

11

202

676
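The decoding methods named in the text-generation post above (greedy decoding, top-k sampling, nucleus sampling) can be illustrated on a toy next-token distribution. This is a minimal pure-Python sketch, not the Transformers API: the function names (`softmax`, `greedy`, `top_k_filter`, `top_p_filter`, `sample`) and the example logits are hypothetical and chosen only for illustration. Beam search is left out to keep the sketch short.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(probs):
    """Greedy decoding: always pick the most probable token id."""
    return max(range(len(probs)), key=lambda i: probs[i])


def top_k_filter(probs, k):
    """Top-k: keep the k most probable token ids and renormalize."""
    keep = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def top_p_filter(probs, p):
    """Nucleus (top-p): keep the smallest set of most probable token ids
    whose cumulative probability reaches p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def sample(dist, rng):
    """Draw one token id from a {token_id: probability} mapping."""
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


# Hypothetical logits over a 4-token vocabulary.
probs = softmax([2.0, 1.0, 0.5, -1.0])
rng = random.Random(0)
next_greedy = greedy(probs)
next_top_k = sample(top_k_filter(probs, k=2), rng)
next_top_p = sample(top_p_filter(probs, p=0.9), rng)
```

Greedy decoding is deterministic, while the two sampling variants trade some likelihood for diversity by restricting the candidate set before drawing a token.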

Transformers 2.4.0 is out 🤗
- Training transformers from scratch is now supported
- New models, including *FlauBERT*, Dutch BERT, *UmBERTo*
- Revamped documentation
- First multi-modal model, MMBT from @facebookai, text & images
Bye bye Python 2 🙃

7

163

659

Fine-tuning a *3-billion* parameter model on a single GPU? Now possible in transformers, thanks to the DeepSpeed/Fairscale integrations! Thank you @StasBekman for the seamless integration, and thanks to @microsoft and @facebookai teams for their support!

8

176

653

You can now visualize Transformers training performance with a seamless @weights_biases integration. Compare hyperparameters, output metrics, and system stats like GPU utilization across your models! Step-by-step guide: Colab:

8

173

630

💃PyTorch-Transformers 1.1.0 is live💃 It includes RoBERTa, the transformer model from @facebookai, current state-of-the-art on the SuperGLUE leaderboard! Thanks to @myleott @julien_c @LysandreJik and all the 100+ contributors!

6

190

632

🔥Fine-Tuning @facebookai's Wav2Vec2 for Speech Recognition is now possible in Transformers🔥 Not only for English but for 53 Languages 🤯 Check out the tutorials:
👉 Train Wav2Vec2 on TIMIT
👉 Train XLSR-Wav2Vec2 on Common Voice

6

151

617

The Technology Behind BLOOM Training 🌸 Discover how @BigscienceW used @MSFTResearch DeepSpeed + @nvidia Megatron-LM technologies to train the World's Largest Open Multilingual Language Model (BLOOM):

8

148

619

💫 Perceiver IO by @DeepMind is now available in 🤗 Transformers! A general purpose deep learning model that works on any modality and combinations thereof:
📜 text
🖼️ images
🎥 video
🔊 audio
☁️ point clouds
Read more in our blog post:

4

112

592

🖌️ Stable Diffusion meets 🧨Diffusers! Releasing diffusers==0.2.2 with full support of @StabilityAI's Stable Diffusion & schedulers 🔥 Google colab: 👉 Code snippet 👇

7

120

575

Hugging Face 🫶 @GoogleColab With the latest release of huggingface_hub, you don't need to manually log in anymore. Create a secret once and share it with every notebook you run. 🤗
pip install --upgrade huggingface_hub
Check it out! 👇

5

107

563

Today we're happy to release four new official notebook tutorials available in our documentation and in colab thanks to @MorganFunto to get started with tokenizers and transformer models in just seconds! (1/6)

10

151

551

The 1.5 billion parameter GPT-2 (aka gpt2-xl) is up:
✅ in the transformers repo:
✅ try it out live in Write With Transformer 🦄
Coming next:
🔘 Detector model based on RoBERTa
Thanks @OpenAI @Miles_Brundage @jackclarkSF and all.

9

149

546

@deepseek_ai Congratulations on the stellar release! 🤩 The model checkpoints and a detailed report - truly Christmas is here!

4

17

550

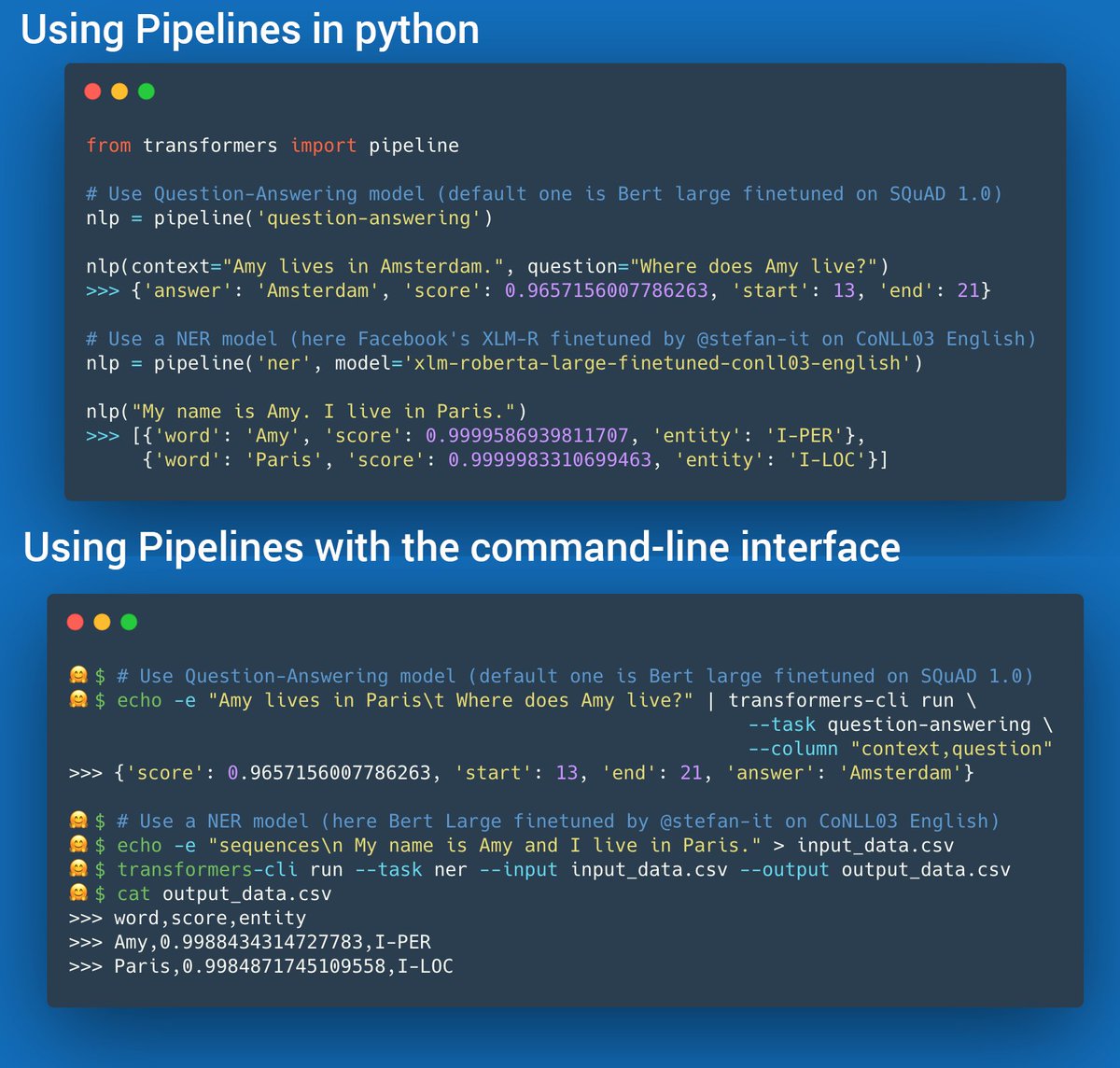

TRL 🤗 Hugging Face. Excited to announce that we're doubling down on our efforts to democratize RLHF and reinforcement learning with TRL, new addition to the @huggingface family, developed and led by team member @lvwerra 🎉🎉. Train your first RLHF model 👉

7

121

520

🔥JAX meets Transformers🔥 @GoogleAI's JAX/Flax library can now be used as Transformers' backbone ML library. JAX/Flax makes distributed training on TPU effortless and highly efficient! 👉 Google Colab: 👉 Runtime evaluation:

3

109

519

The new SOTA is in Transformers! DeBERTa-v2 beats the human baseline on SuperGLUE and reaches up to a crazy 91.7% dev accuracy on the MNLI task. Beats T5 while 10x smaller! DeBERTa-v2 contributed by @Pengcheng2020 from @MSFTResearch. Try it directly on the hub:

5

110

523

1/4. Four NLP tutorials are now available on @kaggle! It's now easier than ever to leverage tokenizers and transformer models like BERT, GPT2, RoBERTa, XLNet, DistilBERT, ... for your next competition! 💪💪💪 #NLProc #NLP #DataScience #kaggle

3

146

514

We've heard your requests! Over the past few months we've been working on a Hugging Face Course! The release is imminent. Sign up for the newsletter to know when it comes out: Sneak peek: Transfer Learning with @GuggerSylvain:

9

122

509

GPT-Neo, the #OpenSource cousin of GPT3, can do practically anything in #NLP from sentiment analysis to writing SQL queries: just tell it what to do, in your own words. 🤯 How does it work? 🧐 Want to try it out? 🎮 👉

8

146

494

Thanks to @srush_nlp, we now have an example of a training module for NER leveraging transformers. It is under 300 lines of code and supports GPUs and TPUs thanks to @PyTorchLightnin! Colab: Example:

4

132

495

🔥Transformers' first-ever end-2-end multimodal demo was just released, leveraging LXMERT, SOTA model for visual Q&A! Model by @HaoTan5, @mohitban47, with an impressive implementation in Transformers by @avalmendoz (@UNCnlp). Notebook available here: 🤗

6

128

502

TODAY'S A BIG DAY. Spaces are now publicly available. Build, host, and share your ML apps on @huggingface in just a few minutes. There's no limit to what you can build. Be creative, and share what you make with the community. 🙏 @streamlit and @gradio

4

135

491

👋 To all JS lovers: NLP is more accessible than ever! You can now leverage the power of DistilBERT-cased for Question Answering w/ just 3 lines of code!!! 🤗 You can even run the model remotely w/ the built-in @TensorFlow Serving compatibility 🚀

10

125

493

Happy to officially include DialoGPT from @MSFTResearch in 🤗transformers (see docs: ). DialoGPT is the first conversational response model added to the library. Now you can build a state-of-the-art chatbot in just 10 lines of code 👇👇👇

5

117

472

Last week, @MetaAI introduced NLLB-200: a massive translation model supporting 200 languages. Models are now available through the Hugging Face Hub, using 🤗Transformers' main branch. Models on the Hub: Learn about NLLB-200:

6

140

458

Our Distilbert paper just got accepted at NeurIPS 2019's ECM2 workshop!
- 40% smaller, 60% faster than BERT
- 97% of the performance on GLUE
We also distilled GPT2 in an 82M params model 💥 All the weights are available in TF2.0 @tensorflow here:

4

97

463

Machine learning demos are increasingly a vital part of releasing a model. Demos allow anyone, not just ML engineers, to try a model, give feedback on predictions, and build trust. That's why we are thrilled to announce @Gradio 3.0: a ground-up redesign of the Gradio library 🥳

7

92

455

🤗Transformers are starting to work with structured databases! We just released 🤗Transformers v4.1.1 with TAPAS, a multi-modal model for question answering on tabular data from @googleAI. Try it out through transformers or our inference API:

5

92

453

🚨Exciting news! Next week, we’ll be launching a brand-new Audio Course! 🤗 Sign up today and join us for a LIVE course launch event featuring amazing guests like @DynamicWebPaige, Seokhwan Kim, and @functiontelechy! ⚡️

4

96

445

Happy to announce we partnered with @onnxai @onnxruntime @microsoft to make state-of-the-art inference up to 5x faster 🚀 NLP for all people and organizations! #msbuild

3

102

441

🤗We are going to invest more in @tensorflow in 2021! If you want to take part in building the fastest growing NLP open-source library, join us:

9

78

422

🧨Diffusers supports Stable Diffusion 2! Run @StabilityAI's Stable Diffusion 2 with zero changes to your code using your familiar diffusers API. Everything is supported: attention optimizations, fp16, img2image, swappable schedulers, and more 🤗

5

82

434

We're excited to collaborate with the European Space Agency for the release of MajorTOM, the largest ML-ready Sentinel-2 images dataset! 🚀 It covers 50% of the Earth. 2.5 trillion pixels of open source! 🤗👐🌌🚀🌏

Our @ESA_EO Φ-lab has released, in partnership with @huggingface, the first dataset of 'MajorTOM', or the Terrestrial Observation Metaset, the largest community-oriented and machine-learning-ready collection of @CopernicusEU #Sentinel2 images ever published and covering over 50%

6

68

413