Soumith Chintala

@soumithchintala

Followers: 186,905

Following: 887

Media: 180

Statuses: 3,474

Cofounded and lead @PyTorch at Meta. Also dabble in robotics at NYU. AI is delicious when it is accessible and open-source.

New York City

Joined September 2009



No More GIL!

the Python team has officially accepted the proposal.

Congrats @colesbury on his multi-year brilliant effort to remove the GIL, and a heartfelt thanks to the Python Steering Council and Core team for a thoughtful plan to make this a reality.


apparently Google laid off their entire Python Foundations team, WTF!

(@SkyLi0n, who is one of the pybind11 maintainers, just informed me, asking how they can re-fund pybind11)

The team seems to have done substantial work that seems critical for Google internally as well.…


If you have questions about why Meta open-sources its AI, here's a clear answer in Meta's earnings call today from @finkd


It’s been 5 years since we launched @pytorch. It’s much bigger than we expected -- usage, contributors, funding. We’re blessed with success, but not perfect. A thread (mirrored at ) about some of the interesting decisions and pivots we’ve had to make 👇


i might have heard the same 😃 -- I guess info like this is passed around but no one wants to say it out loud.

GPT-4: 8 x 220B experts trained with different data/task distributions and 16-iter inference.

Glad that Geohot said it out loud.

Though, at this point, GPT-4 is…


The first full paper on @pytorch after 3 years of development.

It describes our goals, design principles, and technical details up to v0.4.

Catch the poster at #NeurIPS2019

Authored by @apaszke, @colesbury et al.


PyTorch co-author Sam Gross (@colesbury) has been working on removing the GIL from Python.

Like...we can start using threads again instead of multiprocessing hacks!

This was a multi-year project by Sam.

Great article summarizing it:

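The "threads instead of multiprocessing hacks" point can be illustrated with a minimal sketch, assuming a free-threaded CPython build (PEP 703, shipped experimentally as `python3.13t` in CPython 3.13). On a regular build the code still runs and returns the same results, but the GIL makes the threads take turns. The prime-counting workload and the helper names (`count_primes`, `gil_enabled`, `run_threaded`) are hypothetical, chosen only to give the threads CPU-bound work.

```python
from __future__ import annotations

import sys
import time
from concurrent.futures import ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """CPU-bound toy workload: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def gil_enabled() -> bool:
    """Report whether the GIL is active in this interpreter."""
    # sys._is_gil_enabled() only exists on CPython 3.13+ (a private API);
    # older interpreters always run with the GIL.
    check = getattr(sys, "_is_gil_enabled", None)
    return True if check is None else bool(check())


def run_threaded(limits: list[int], workers: int = 4) -> list[int]:
    """Run count_primes over `limits` on plain threads, no multiprocessing."""
    # On a free-threaded build these calls can run on several cores at once;
    # with the GIL enabled they are serialized. Results are identical either way.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    start = time.perf_counter()
    results = run_threaded([20_000] * 4)
    elapsed = time.perf_counter() - start
    print(f"GIL enabled: {gil_enabled()}; results: {results}; {elapsed:.2f}s")
```

Timing the same script on a standard build and on a free-threaded build is the quickest way to see the difference. With `multiprocessing`, by contrast, each worker is a separate process, so arguments and results have to be pickled across process boundaries.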

Based on all the user-request videos that @sama's been posting, it looks like sora is powered by a Game Engine, and generates artifacts and parameters for the Game Engine. 🤔

here is sora, our video generation model:

today we are starting red-teaming and offering access to a limited number of creators.

@_tim_brooks @billpeeb @model_mechanic are really incredible; amazing work by them and the team.

remarkable moment.


this weekend has been very sad.

My friends at @OpenAI swore that it had become a magical place, with the talent density, velocity, research focus and (yet) a product fit that is really generational.

For such a place to break down in the cringiest way possible is doubly sad.


* In 2016, I thought OpenAI was just shady, with highly unrealistic statements

* In 2020, I thought OpenAI was doing awesome work, but a bit too hypey, and the AGI bonds were weird

* In 2022, I fully changed my opinion and I think OpenAI is just phenomenal for changing the world.…


LLaMa-2 from @MetaAI is here!

Open weights, free for research and commercial use. Pre-trained on 2T tokens.

Fine-tuned too (unlike v1).

🔥🔥🔥

Let's gooo....

The paper lists the amazing authors who worked to make this happen night and day. Be sure to thank…


Tensor Comprehensions: an Einstein-notation-like language that transpiles to CUDA and is autotuned via evolutionary search to maximize perf.

Know nothing about GPU programming? You can still write high-performance deep learning code.

@PyTorch integration coming in <3 weeks.


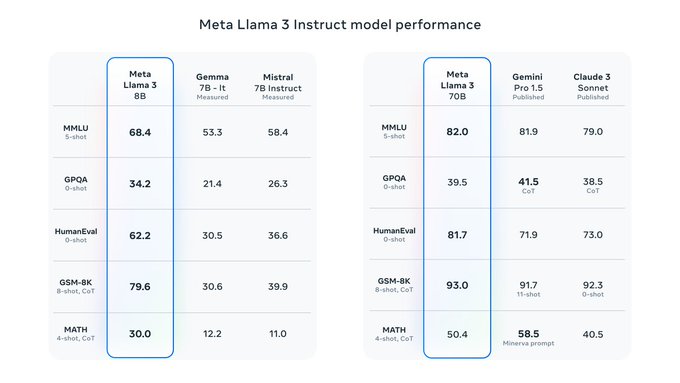

Seems to solidly compete with GPT-4 on benchmarks.

Google has existing customers and surfaces to start the feedback loop, without worrying about adoption.

And Google will use TPUs for inference, so it doesn't have to pay NVIDIA their 70% margins (like @OpenAI and @Microsoft have to…

We’re excited to announce 𝗚𝗲𝗺𝗶𝗻𝗶: @Google’s largest and most capable AI model.

Built to be natively multimodal, it can understand and operate across text, code, audio, image and video - and achieves state-of-the-art performance across many tasks. 🧵


People getting mad about @OpenAI not releasing GPT-4's research details....

the only tangible way to get back is to surpass GPT-4's results and release the details of how it was done.

literally any other kind of criticism is a mere expression of anger and a big distraction.


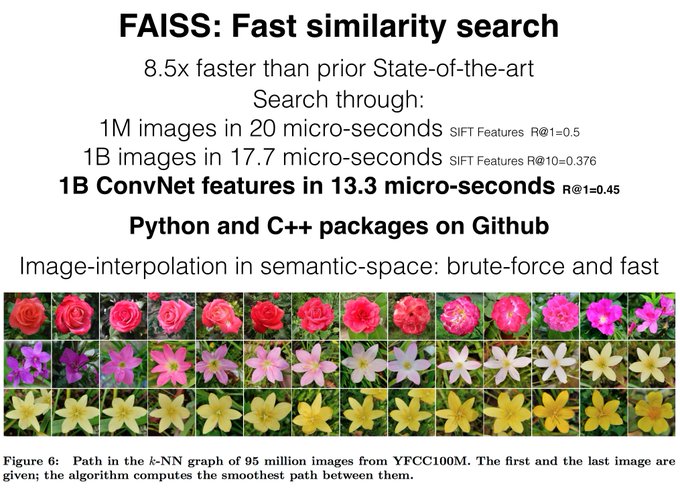

10 crazy years -- pytorch, detectron, segment-anything, llama, faiss, wav2vec, biggraph, fasttext, the Cake below the cherry, and so much more.

Can't say we didn't change AI and to an extent the world.


Perplexity has become my most used AI app by the end of 2023. I use it for fact-seeking questions -- including recent news / facts, summarizing opinions and recommendations on products and much more.

ChatGPT + Browsing can do similar stuff, but it's like 100x slower, and is often…


Getting @satyanadella to talk about PyTorch...✅

(Satya and I went to the same high school, Hyderabad Public School)


We just released AITemplate -- a high-performance Inference Engine -- similar to TensorRT but open-source.

It is really fast!

On StableDiffusion, it is 2.5x faster than the XLA-based version released last week.


Llama3 8B and 70B are out, with pretty exciting results!

* The ~400B is still training but results already look promising.

* Meta's own Chat interface is also live at

* TorchTune integration is shortly going live:


to my researcher friends (at @openai and those who watched this saga unfold) -- if you want to focus on doing solid *open* and published research and have access to lots of hardware, there are a few options: Meta, Mistral, etc.

don't let your work get lost in corporate sagas!…


Llama3-70B has settled at #5. With 405B still to come next...

I remember when GPT-4 was released in March 2023, it looked nearly impossible to reach the same performance.

Since then, I've seen @Ahmad_Al_Dahle and the rest of the GenAI org in a chaotic rise to focus,…


ML Code Completeness Checklist: consistent and structured information in the README makes your code more popular and usable.

Sensible advice, backed by data.

Proposed by @paperswithcode and now part of the NeurIPS Code Submission process.

Read more:


Super excited to welcome the PFN team to the @PyTorch community. With Chainer, CuPy, Optuna, MNCore, their innovations need no introduction. The community is going to get even more fun! :)


Small perks of joining the Linux Foundation!

We spoke about ML Accelerators and Linux driver-land issues :D

Two creators of passion projects that transformed the landscape of how we code today, Linus Torvalds and @soumithchintala, meet for the first time, sharing a smile and a love for the open source community.

#PyTorchFoundation


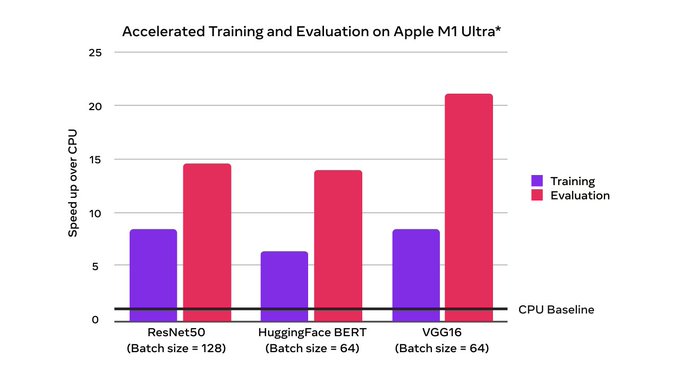

Everyone's been waiting for it!

Thanks to Apple, working in close collaboration with the core team, for making this happen!


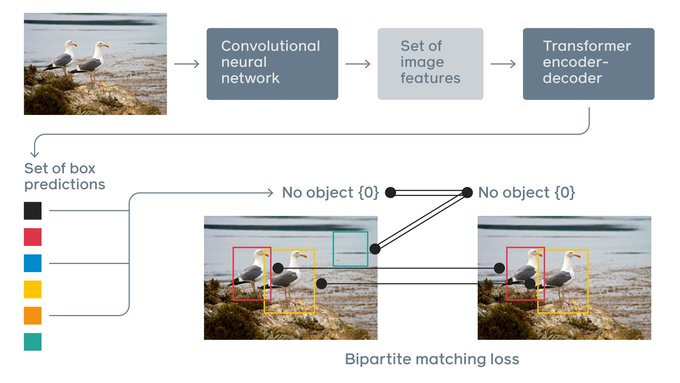

- Take FasterRCNN

- Remove clunky NMS, Proposals, ROIAlign, Refinement and their gazillion hyperparameters

- Replace with Transformer

- Win!

Simplifies code and improves performance.

Nice work from @fvsmassa (torchvision maintainer) and his collaborators at FAIR.


Open LLMs need to get organized and co-ordinated about sharing human feedback. It's the weakest link with Open LLMs right now. They don't have 100m+ people giving feedback like in the case of OpenAI/Anthropic/Bard.

They can always progress with a Terms-of-Service arbitrage, but…


Cloud TPUs are out; we'll start sketching out @PyTorch integration. The cost is $6.50 per TPU-hour right now. Hopefully, by the time they become affordable, we will be ready with PyTorch support :)

Thanks @googleresearch, who have been very open to the conversation of @PyTorch integration.


This is not a research paper, this is a real-world product. Wow!

Been following @runwayml from their early days (and visited their offices last year). Great set of people, strong creative and product sense. Watch out for them.


very excited about the @GoogleAI office in Bangalore!

At #GoogleForIndia today, we announced Google Research India - a new AI research team in Bangalore that will focus on advancing computer science & applying AI research to solve big problems in healthcare, agriculture, education, and more.

#GoogleAI


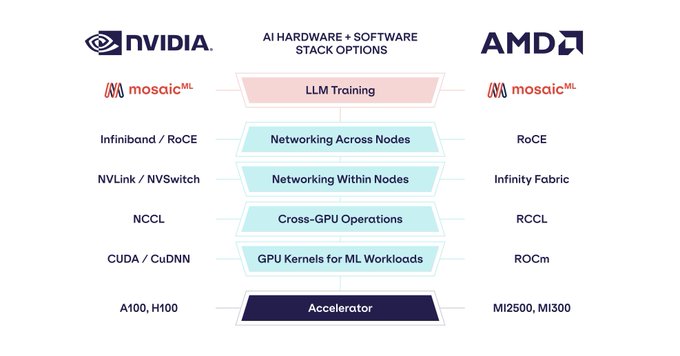

Here's @MosaicML showcasing their results with @PyTorch 2.0 + AMD for LLM training.

They made "Zero Code Changes" to run on AMD.

MI250 is already trending well, and IMO MI300X will be very competitive.


Two pieces of exciting news from our robotics research today. 1/

DIGIT: a vision-based touch sensor.

It projects light into a gel in the "fingertip".

A camera + model rapidly estimates the changes in the image to compute localized pressure.

Announcement: it is commercially available now!


this take is so bad, it's hard to comprehend where to start taking it apart!

For one, it starts with academic peer-review pathology: "paper too simple, so can't be innovative -- reject".

It equates "fundamental innovations" to "architectural innovations" which is like ughhh...


@OpenAI showed a cool code generation demo at #MSBuild2020 of a big language model trained on lots of GitHub repositories.

The demo does some non-trivial codegen specific to the context.

Eagerly waiting for more details!

Video: starting at 28:45


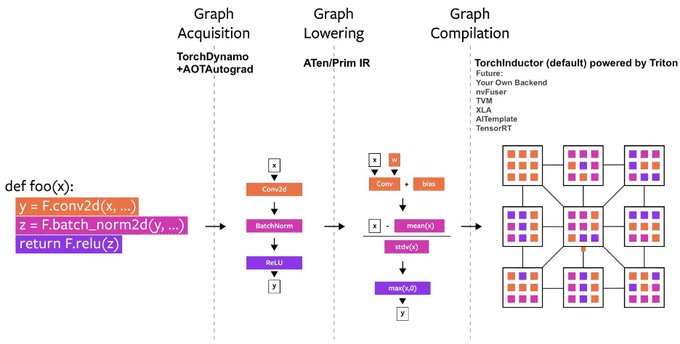

so excited to introduce @PyTorch 2.0, a year in the works.

Still early, be gentle :)

We just introduced PyTorch 2.0 at the #PyTorchConference, introducing torch.compile!

Available in the nightlies today, stable release early March 2023.

Read the full post:

🧵below!

1/5


thanks to @JeffDean and @SingularMattrix for their great leadership today, and to @fchollet, @dwarak and many others at @GoogleDeepMind for quickly charting a good and aligned path forward together.

We can go back to focusing on the unlimited amounts of good work ahead of us.

(Jeff,…


it pains me to see a poorly constructed benchmark coming from a credible source. I hope @anyscalecompute fixes things, and also consults other stakeholders before publishing such benchmarks. If I didn't know Anyscale closely, I would have attributed it to bad faith.

Let's dive into…


It's incredible to see how far @pytorch has come as a community, while preserving our core values of pushing for simplicity and innovation. (1/x)


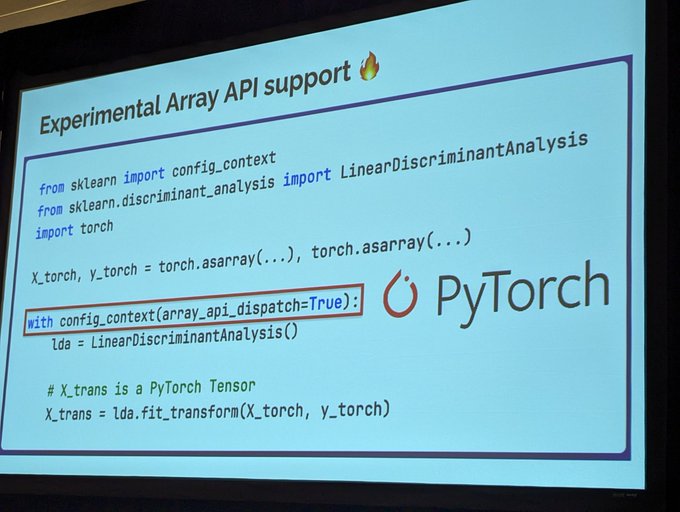

wow didn't know this was happening. this is huge!

scikit added support for pytorch and GPUs via array dispatch

New in @scikit_learn -- experimental support for building models on GPUs with @PyTorch - @thomasjpfan at #SciPy2023


Congratulations Jeff and team!

In 2015 TensorFlow pushed framework engineering up a level and pushed everyone forward.

JAX seems to be doing the same in 2020, so thanks for continually funding great frameworks out of @GoogleAI.

When we released @TensorFlow as an open source project in Nov. 2015, we hoped external machine learning researchers & practitioners would find it as useful as we had internally at @GoogleAI. Very proud to see us hit 100M downloads!

2015 blog post:


the Cerebras chip is a technological marvel -- a real, working full-wafer chip with 18GB of register file!

It's probably one of the first chips where data "feed" will become the bottleneck, even for fairly modern networks. Congrats @CerebrasSystems!


nothing short of mind-blowing!

holy shit, the future is getting crazy!



@OpenAI

Join the folks in the business of pushing the limits of open science: @MetaAI, @Stanford, @StabilityAI, @huggingface and others.

Help make this happen.


this Nature Machine Intelligence thing is fine exploitation of researchers. Please let's not make it a thing.

It's a decade when Sci-Hub has to exist hush-hush and Aaron Swartz was legally ambushed because he downloaded a bunch of research. Let that sink in. Signed.


Spot pricing on TPUs is getting good. We have prototyped TPU-PyTorch support (w. Google engineers) and are hammering on coverage and performance now. Promising times....
