Cody Blakeney

@code_star

Followers: 3,409

Following: 860

Media: 788

Statuses: 11,878

Head of Data Research @MosaicML / @databricks | Formerly Visiting Researcher @ Facebook | Ph.D | #TXSTFOOTBALL fan |

Brooklyn, NY

Joined August 2011



@gdequeiroz

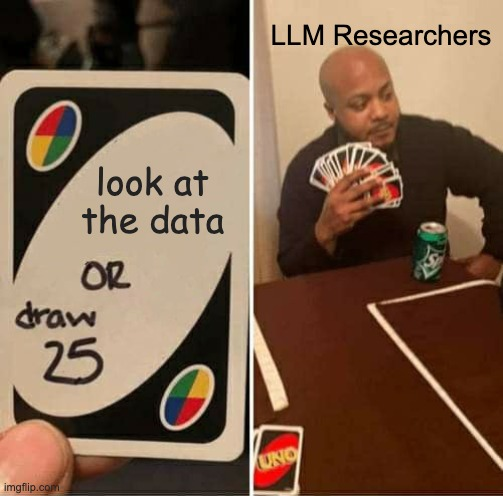

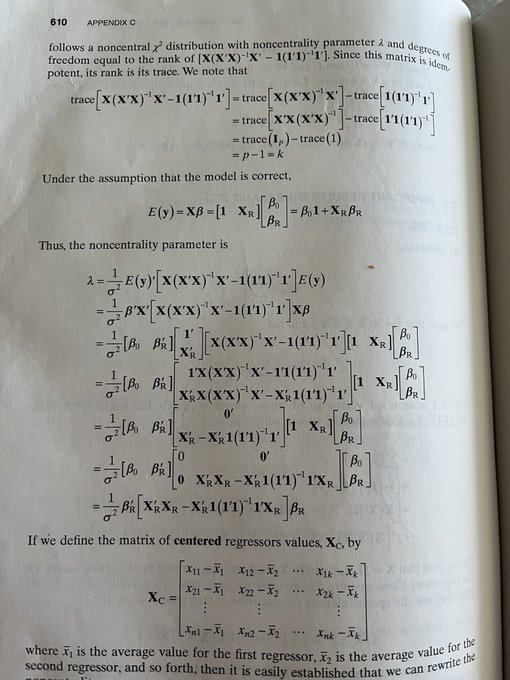

The best way I can articulate it is they care deeply about (or have worked hard at) proofs of things DL people just throw away. After several pages proving whether you have an unbiased estimator of a parameter, it's pretty annoying to see someone just doing a hyperparameter sweep.

6

5

349

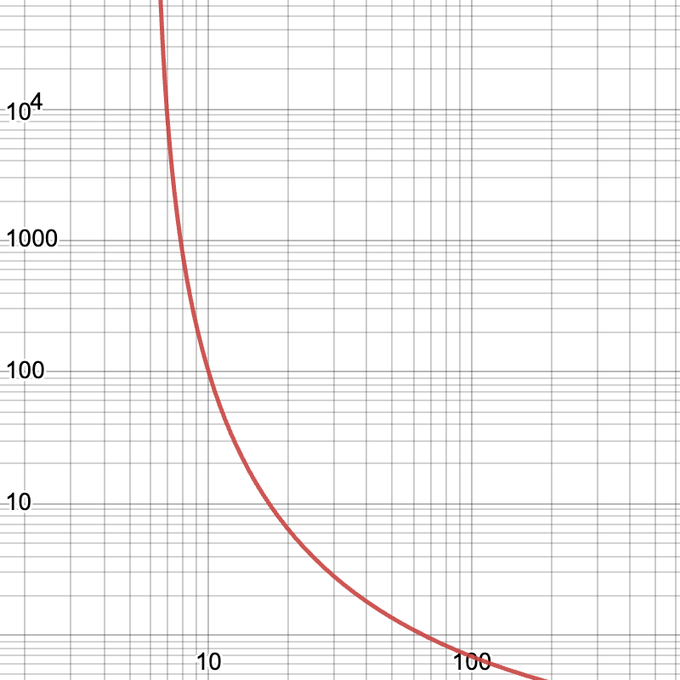

Ok, but hear me out. A 7B model with the same performance as a 67B model is worth 7837x as much.

15

14

226

@maxisawesome538

@TylerAlterman

Alpha meme -> zoomer news (duet) -> boomer news (actual news) -> millennial news (twitter)

2

5

192

That’s not entirely true. We released an open source 30B model, described in great detail the data used to train it, and the framework to train it.

Just add GPUs.

Of course if you pay us, we make dealing with the infra much easier 😉

7

10

166

One of my favorite genres of tweets is public radio hosts clapping back at people who asked them to do/not do exactly what they already did/didn’t

5

7

123

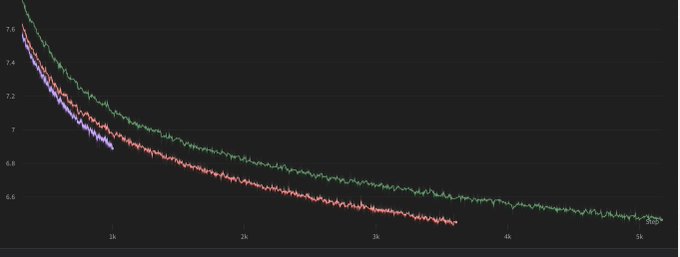

@AlbalakAlon

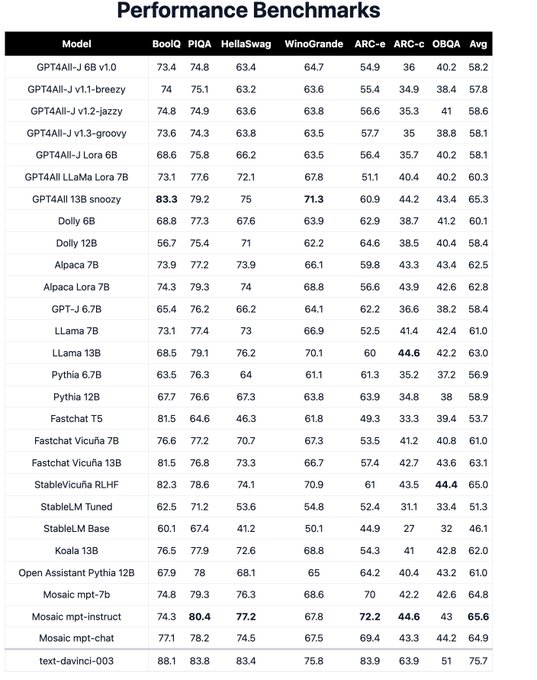

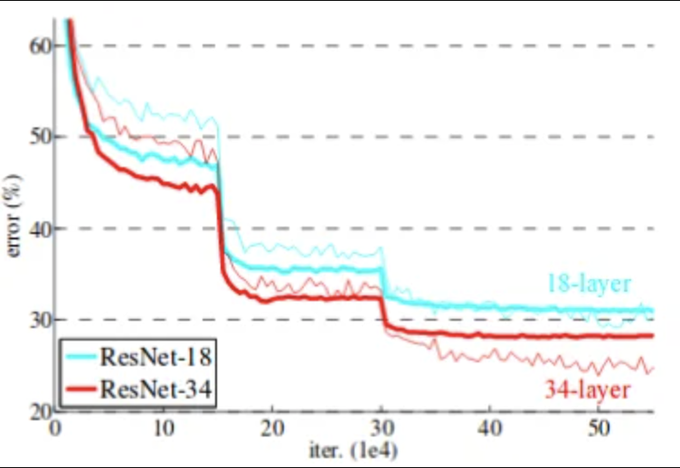

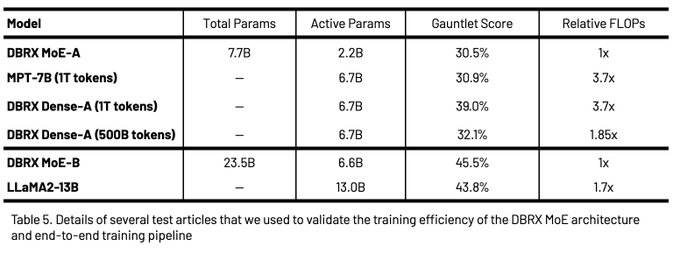

Yes! We trained a *new* MPT-7B. Exact same arch and code. We were able to hit the same quality with half the number of tokens / training. It’s not quite a 2x reduction in training (larger tokenizer), but pretty dang close. We evaluated it on our newest version of Gauntlet.

6

11

118
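One plausible reading of the "not quite 2x" remark: under the common 6 · N · D rule of thumb for training FLOPs, halving the token count D halves compute only if the parameter count N stays fixed, and a larger tokenizer enlarges the output projection (d_model × vocab), so each token costs slightly more. The sketch below assumes that reading. The function name and every number in it (non-embedding parameters, d_model, both vocabulary sizes, both token counts) are hypothetical placeholders, not the actual MPT-7B configurations.

```python
def approx_train_flops(non_embedding_params: float, d_model: int,
                       vocab_size: int, tokens: float) -> float:
    """Rough training cost via the 6 * N * D rule of thumb.

    N includes the output (unembedding) projection, d_model * vocab_size,
    because that matmul runs for every token; the input embedding lookup
    is treated as free.
    """
    n = non_embedding_params + d_model * vocab_size
    return 6.0 * n * tokens


# Hypothetical placeholder values, chosen for round numbers.
old_run = approx_train_flops(6.5e9, 4096, 50_000, 1.0e12)   # smaller vocab, full token budget
new_run = approx_train_flops(6.5e9, 4096, 100_000, 0.5e12)  # larger vocab, half the tokens

speedup = old_run / new_run
print(f"compute reduction: {speedup:.2f}x")
```

With these placeholder numbers the script reports a reduction of about 1.94x: close to, but short of, 2x, which matches the shape of the claim without reproducing the real figures.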

@bartbing71

This isn’t the right takeaway, but I hate the hassle the most when I catch cheating. Like … can you cheat better so I can enjoy my evening?

0

0

74

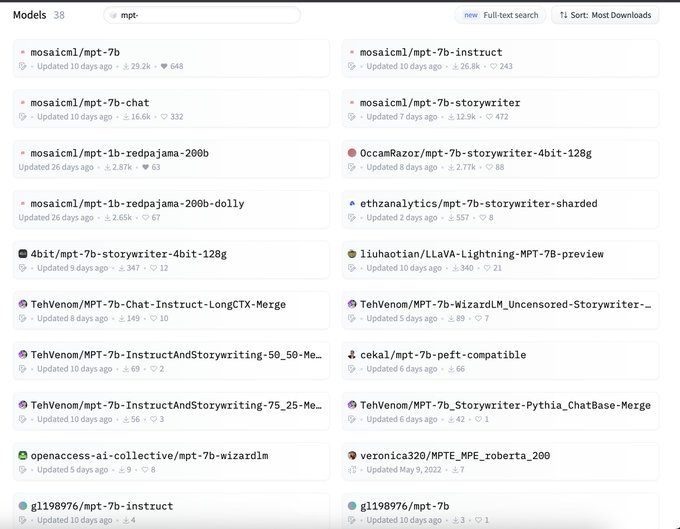

I'm absolutely floored by all the community-driven projects around MPT-7B 🤯. Are you using it for something? Tell us (@MosaicML), we would love to hear it!

3

5

70

@kairyssdal

How much do I need to donate to APM or Marketplace to have you start the show off on a Wednesday saying "In Los Angeles, I am Kai Ryssdal, it is Wednesday, my dudes!"

3

0

68

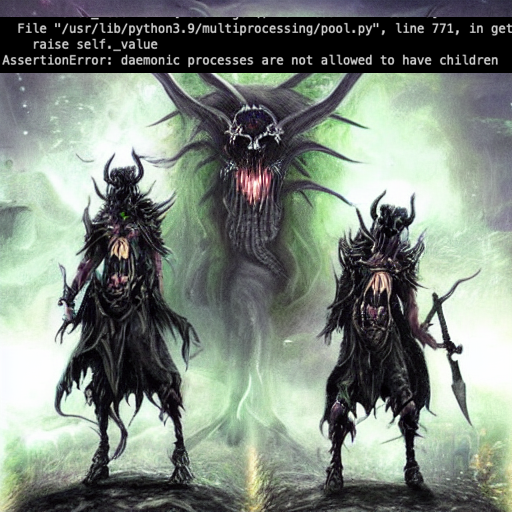

@chrisalbon

Docker is the solution. Fuck it, just send the whole OS and all the packages. I don't trust anyone.

5

2

61

I have to thank my amazing team (the @DbrxMosaicAI Data team: @mansiege, @_BrettLarsen, @ZackAnkner, Sean Owen and Tessa Barton) for their outstanding work. We have truly made a generational improvement in our data. Token for token, our data is twice as good as MPT-7B's was.

2

4

58

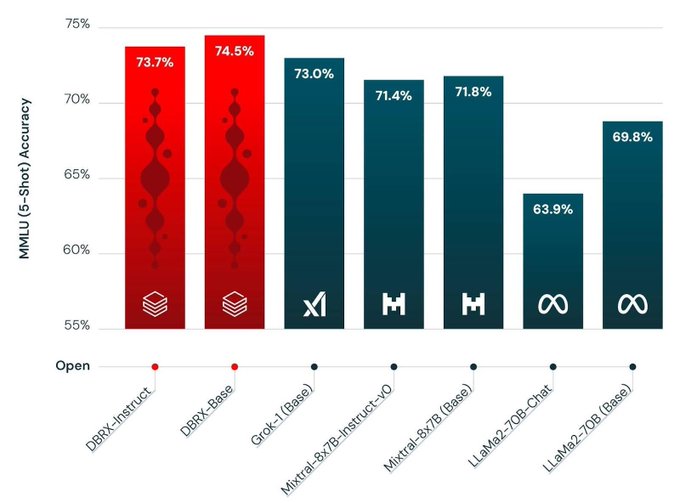

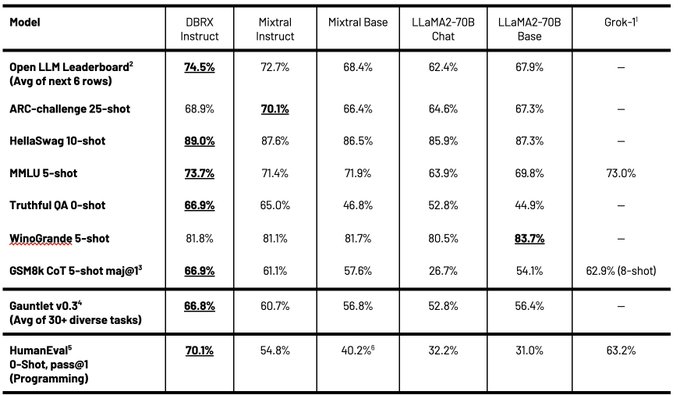

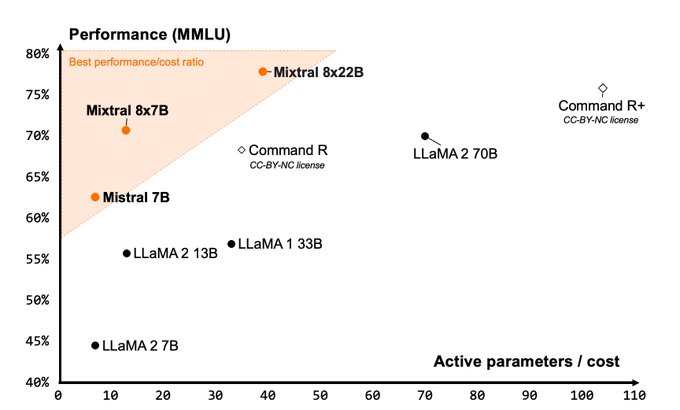

Feels like there is a model missing from this triangle. 🤔

Incredible performance and efficiency, all Apache 2.0 open, from the amazing @MistralAI team!!!

I’m most excited for the SOTA OSS function calling, code and math reasoning capabilities!!

Cc @GuillaumeLample @tlacroix6 @dchaplot @mjmj1oo @sophiamyang

3

4

71

4

0

57

wow! you got into that *fast*. Yup that all looks right!

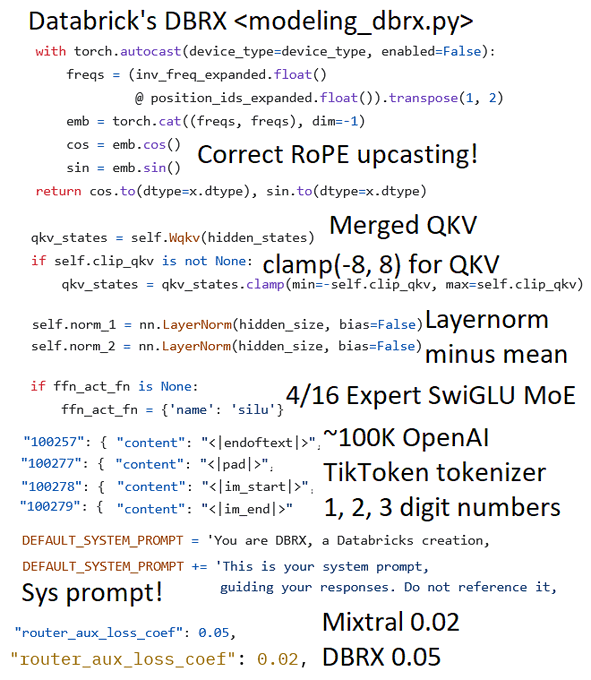

Took a look at @databricks's new open source 132 billion parameter model called DBRX!

1) Merged attention QKV clamped between (-8, 8)

2) Not RMS LayerNorm: now has mean removal, unlike Llama

3) 4 active experts out of 16 (Mixtral: 2 of 8 experts)

4) @OpenAI's TikToken tokenizer (100K vocab). Llama splits…

25

174

1K

2

1

53
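The first three architecture notes in the quoted thread can be illustrated with a minimal pure-Python sketch: clamping the fused QKV activations to [-8, 8], standard LayerNorm (which subtracts the mean) versus Llama-style RMSNorm (which does not), and top-k expert routing with 4 of 16 experts active. Everything here is an assumption for illustration: the function names are hypothetical, vectors are plain lists, both norms omit their learned scale and bias, and the router softmax-normalizes over the selected experts only. This is not DBRX's actual implementation.

```python
import math
from typing import List, Tuple


def clamp_qkv(values: List[float], clip: float = 8.0) -> List[float]:
    """Clamp each entry of a fused QKV projection output to [-clip, clip]."""
    return [max(-clip, min(clip, v)) for v in values]


def layer_norm(x: List[float], eps: float = 1e-5) -> List[float]:
    """LayerNorm without learned gain/bias: subtract the mean, divide by the std."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def rms_norm(x: List[float], eps: float = 1e-5) -> List[float]:
    """RMSNorm without learned gain: divide by the root mean square, no mean removal."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]


def top_k_route(logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and softmax their scores into weights."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


x = [1.0, 2.0, 3.0, 4.0]
print(clamp_qkv([-12.0, 3.5, 9.0]))  # [-8.0, 3.5, 8.0]
print(sum(layer_norm(x)))            # approximately 0: the mean was removed
print(rms_norm(x))                   # all positive: the mean offset survives
router_logits = [math.sin(i) for i in range(16)]  # 16 hypothetical expert scores
print(top_k_route(router_logits, k=4))            # 4 (expert, weight) pairs
```

The contrast the thread draws shows up in the two norms: LayerNorm output is centered on zero, while RMSNorm only rescales, so an all-positive input stays all-positive.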

Today is the first day of my big boy job. I'm excited to finally be full-time at @MosaicML! 🥳 (now excuse me while I go flood our cluster with new experiments)

7

2

48

@ItsMePCandi

@caradaze

Just a guess. Spots are a zero-sum game. Once too many tourists find out, it’s harder for locals to go. 🤷‍♂️

2

0

43

@johnwil80428495

@UniversityStar

Well, a lot of us love people who are old or have compromised immune systems. If we do the right things, we can save lives.

0

0

43

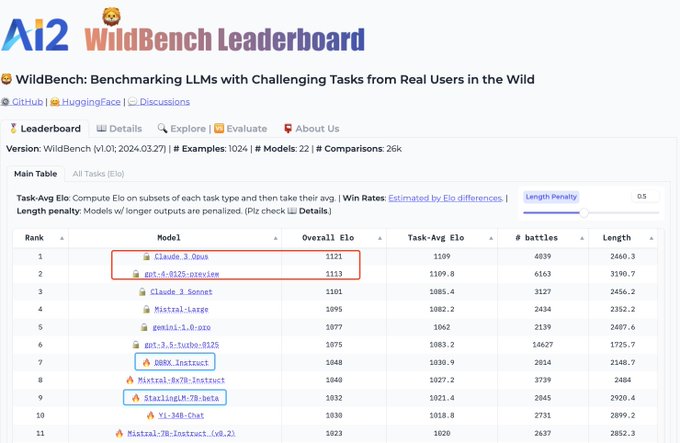

DBRX is the best open model on AI2 WildBench! 😀

🆕 Check out the recent update of 𝕎𝕚𝕝𝕕𝔹𝕖𝕟𝕔𝕙! We have included a few more models including DBRX-Instruct @databricks and StarlingLM-beta (7B) @NexusflowX which are both super powerful! DBRX-Instruct is indeed the best open LLM; Starling-LM 7B outperforms a lot of even…

3

32

127

3

5

42

@Tim_Dettmers

Truly the shame should go further up the author list.

That being said, I think like 30-50% of deep learning papers of the last decade wouldn’t have been published if they had properly tuned baselines.

0

0

42

It’s coming back! The @jefrankle lost a bet with the unbelievably talented @mansiege and has been subjected to being rad. What an unfortunate turn of events.

5

4

39

I think some people (not necessarily Jesse) misunderstood why there is a lack of transparency. Meta isn’t afraid of transparency, or of giving up secret sauce. Big players will not disclose their data until case law over copyright/fair use is better defined. That doesn’t mean…

5

3

40

Words cannot express how excited I am about this. @lilac_ai is *the* best user experience I have found for exploring, cleaning, and understanding data for LLMs. I can’t wait to work with them to build the future of data!

Incredibly excited to announce that @lilac_ai is joining @databricks!

With Lilac in Databricks, data curation for LLMs will be elevated to the next level: Enterprise AI 🚀🚀

A huge huge thank you to everyone who’s supported us on this journey ❤️

44

14

221

1

1

40

Ok, I just got around to taking the time to learn how to use @weights_biases. Wow, what a game changer. I can't believe I put it off this long.

1

4

39

MI250s run out of the box with ZERO CODE CHANGES on llm-foundry 👀👀👀 huge shout out to @abhi_venigalla and @vitaliychiley for this one!

2

6

39

If you are hiring anything ML/NN related reach out to my boy. We were in the same PhD cohort. Half of my good ideas in my dissertation he helped me brainstorm. One of the best python programmers I know. Immigration laws in this country are bs and have him scrambling.

1

20

36

@NaveenGRao

I feel like a python -> c/c++ translator would get you most of the way to what you want.

2

0

37

I cannot say enough how much I ❤️ love ❤️ our model gauntlet. Both for the speed at which it evaluates on its many tasks, and the thoughtfulness that went into organizing the tasks. It’s been a godsend for us for selecting pre-training data and making modeling decisions.

2

4

35

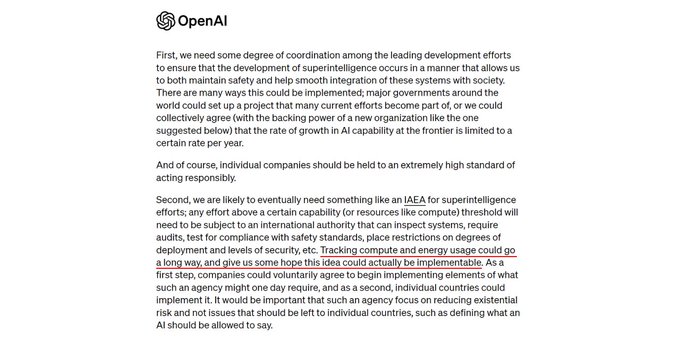

It’s hard for me to read the statements by OpenAI as anything other than a cynical advertisement for how powerful their products are, and to scare people off from throwing their hat in the ring.

2

2

35

Kind of genius if this is what happened. Drop the big expensive model, let people analyze it and be amazed, then distill it to save costs.

*If* that is what occurred *and if* it has regressed, this seems like a case where metrics didn’t capture the effects of compression.

5

1

34

Fwiw I currently light piles of cash on fire for @MosaicML so that we can learn how to light smaller piles of cash on fire 🔥 (when we have to)

0

0

33