Alexis Conneau

@alex_conneau

Followers: 26,271

Following: 143

Media: 31

Statuses: 341

Audio AGI Research Lead @OpenAI

San Francisco

Joined September 2016

Pinned Tweet

@OpenAI

#GPT4o

#Audio

Extremely excited to share the results of what I've been working on for 2 years

GPT models now natively understand audio: you can talk to the Transformer itself!

The feeling is hard to describe so I can't wait for people to speak to it

#HearTheAGI

🧵1/N

35

53

458

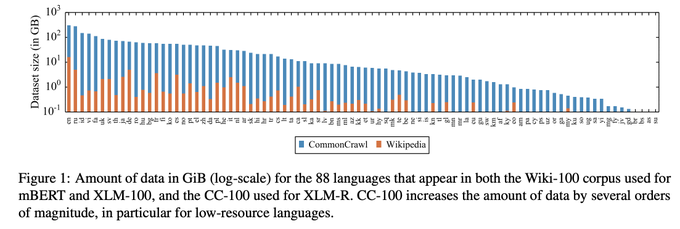

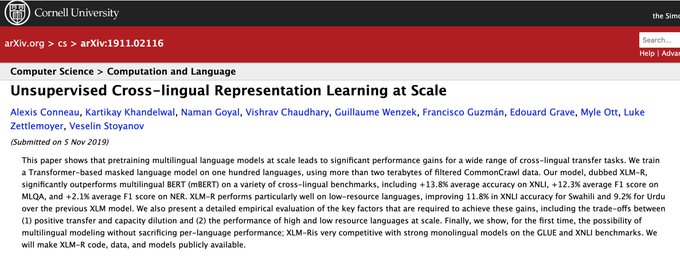

Just released our new XLM/mBERT pytorch model in 100 languages. Significantly outperforms the TensorFlow mBERT OSS model while trained on the same Wikipedia data.

@GuillaumeLample

@Thom_Wolf

@PyTorch

2

196

676

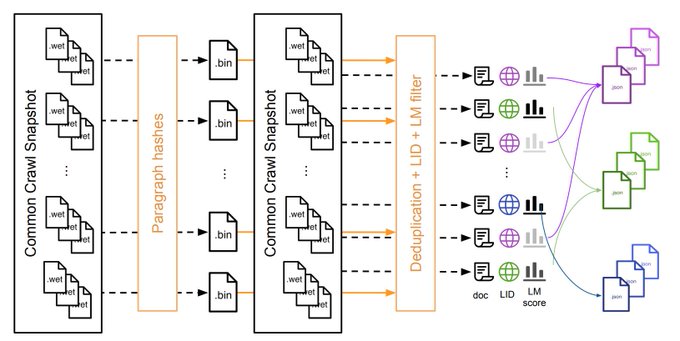

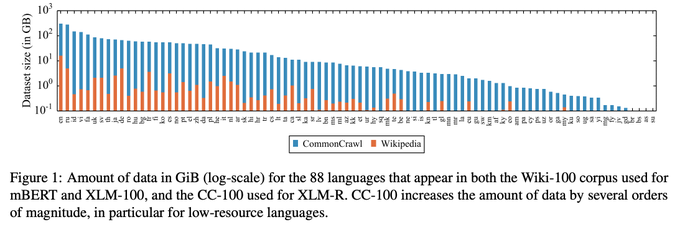

DATASET RELEASE: "CC100", the CommonCrawl dataset of 2.5TB of clean unsupervised text from 100 languages (used to train XLM-R) is now publicly available.

You can find below the

Data:

Script:

By

@VishravC

et al.

2

184

682

👨‍🔬 Life update: Happy to share that I recently joined

@GoogleAI

Language as a research scientist 👨‍🏫

I will continue my research on building neural networks that can learn with little to no supervision

14

2

540

New work: "Unsupervised speech recognition"

TL;DR: it's possible for a neural network to transcribe speech into text with very strong performance, without being given any labeled data.

Paper:

Blog:

Code:

3

96

468

Career update: A month ago, I re-joined FAIR at

@MetaAI

as a research scientist.

I am continuing my work on self-supervised learning for Language.

12

6

322

This video clip should appear at the beginning of any AI movie in the classic flashbacks

A demo from 1993 of 32-year-old Yann LeCun showing off the world's first convolutional network for text recognition.

#tbt

#ML

#neuralnetworks

#CNNs

#MachineLearning

64

2K

8K

0

40

258

Would be cool to be able to say "

#ChatGPT

sucks" ... "it can't answer my <insert_weirdest_question>", and sound clever 🤓

But let's be honest, it has almost no flaws. It can answer most questions so well. Even tricking it is hard

Plus, the path for improvement is very clear

11

7

188

⚙️Release: CCNet is our new tool for extracting high-quality and large-scale monolingual corpora from CommonCrawl in more than a hundred languages.

Paper:

Tool:

By G. Wenzek, M-A Lachaux,

@EXGRV

,

@armandjoulin

0

57

162

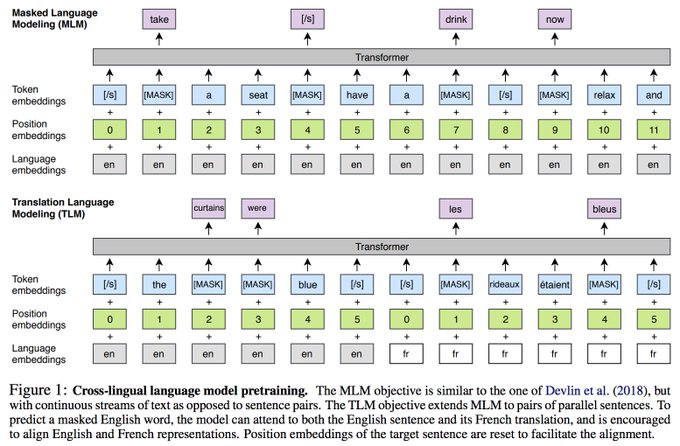

1/4 XLM: Cross-lingual language model pretraining. We extend BERT to the cross-lingual setting. New state of the art on XNLI, unsupervised machine translation and supervised machine translation.

Joint work with

@GuillaumeLample

6

64

151

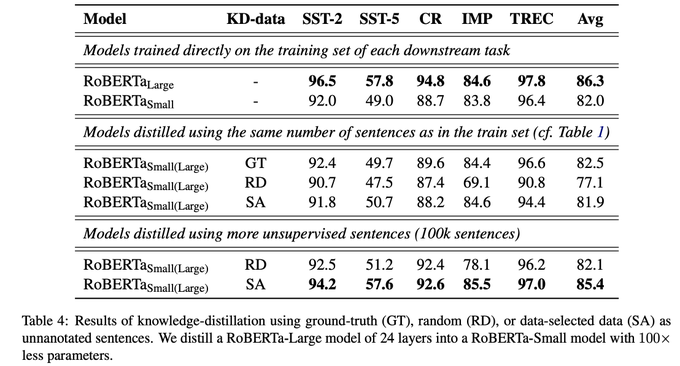

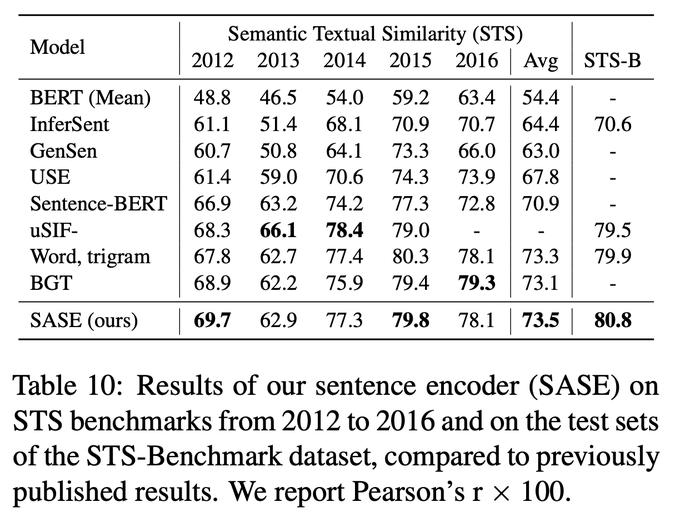

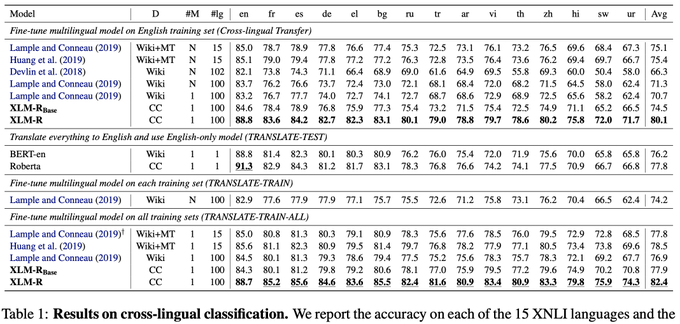

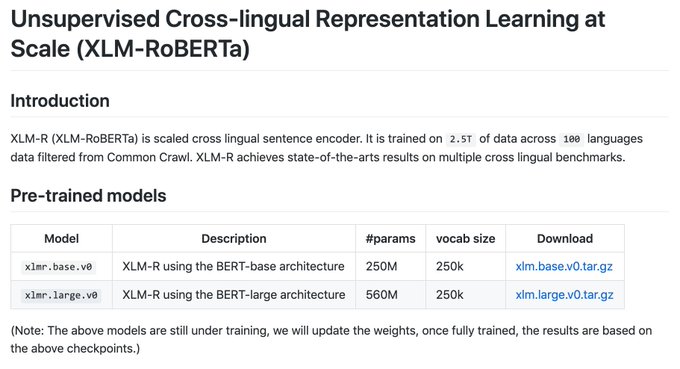

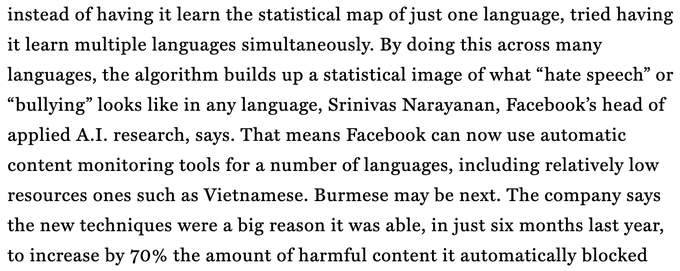

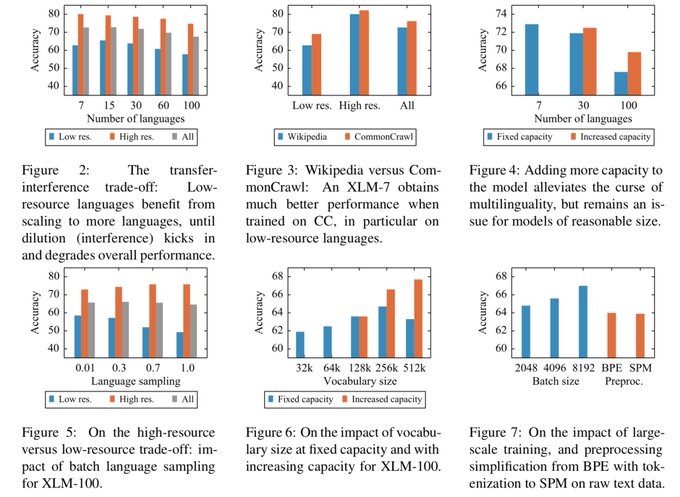

Two papers accepted this year at

#ACL2020

:)

The first one on Unsupervised Cross-lingual Representation Learning at Scale (XLM-R) is a new SOTA on XLU benchmarks; and shows that multilinguality doesn't imply losing monolingual performance. (1/3)

1

27

148

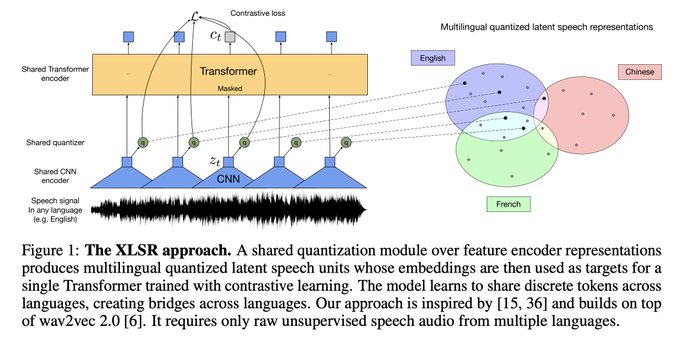

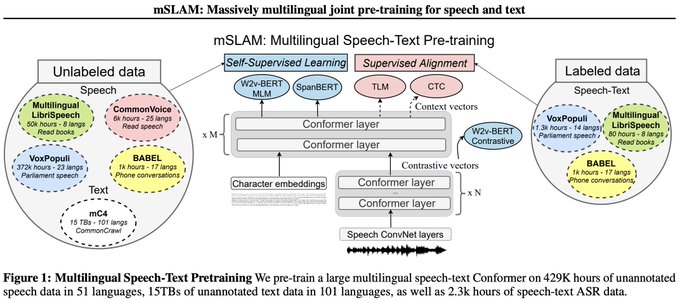

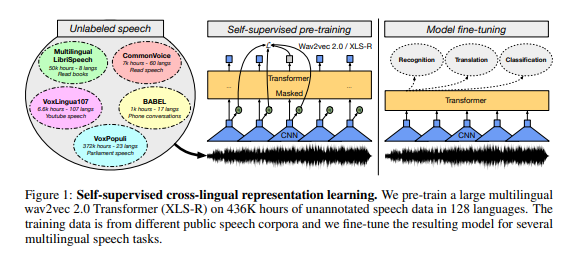

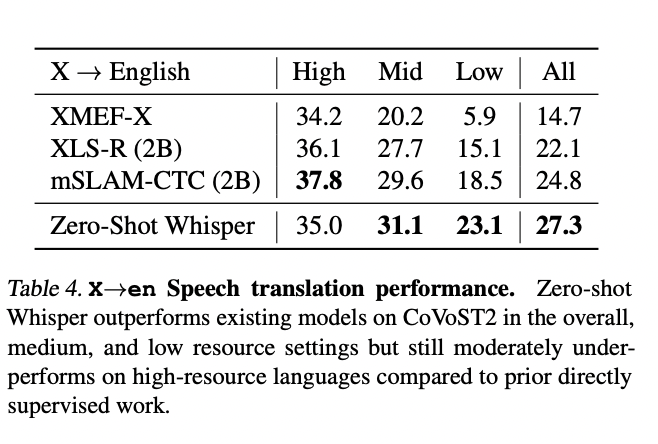

📢 mSLAM: Massively multilingual joint pre-training for speech and text 📢

Imagine if we could unify speech and text understanding within a single model + handle all languages?

This is the direction we're taking with mSLAM (~=XLM-R+XLS-R?)

New SOTAs on AST(+2.7BLEU) and ASR...

6

24

130

Out of the 4 most downloaded models on

@huggingface

, one is XLM-R and one is XLS-R

made it possible. HuggingFace is an incredible partner to both researchers & practitioners

No wonder

@ClementDelangue

can organize AI parties with 5,000 people in a week

0

15

117

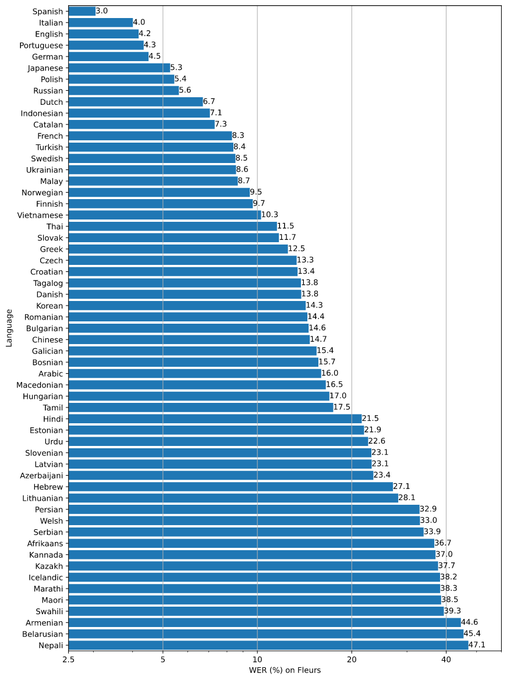

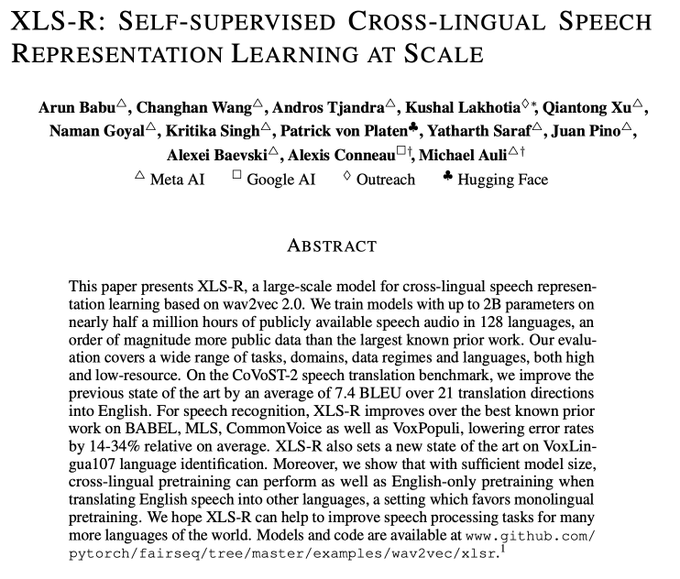

🎉 We had 3 papers accepted at

#Interspeech2022

+ 1 new paper 🎉

1) XLS-R: SSL for Speech

2) XTREME-S: Benchmark for Speech SSL

3) FLEURS: 100-way parallel speech data based on FLoRes MT data + Speech "bitext" mining

4) SSL for Speech2speech translation

<Thread>

2

12

121

Our FLEURS paper won the best paper award at SLT 2022!

@ieee_slt

SLT:

arXiv:

Thanks to the organizers! Grateful for the collaboration with many great colleagues 🙂

6

19

116

Glad to announce that our paper on Cross-lingual Language Model Pretraining has been accepted as a spotlight presentation at

@NeurIPSConf

.

Code:

Paper:

3

14

101

It's not surprising

@OpenAI

focused on mining supervised data, it's the right thing to do and it's been so under-explored in Speech

Think about how MT is done: pretty much only bitext mining. Check out LASER for speech (Meta) or FLEURS (Google); more work needs to be done there

3

4

96

Check out the open-source code for SOTA Unsupervised Machine Translation at . Gives 28 BLEU on WMT English-French without a single parallel sentence!

2/2 papers accepted at

@emnlp2018

! One is our paper on Unsupervised MT: for which we also open-sourced the code:

Other one will come soon :)

@alex_conneau

@LudovicDenoyer

1

39

175

0

18

81

We are releasing the checkpoints of XLSR-53 fine-tuned on each language of the MLS dataset

Thanks to

@PatrickPlaten

and

@huggingface

we can now easily understand and look at the common mistakes made by these models. So far, at least the French model seems to work decently :)

1

8

74

Great event incoming to build ASR systems in many languages using

@huggingface

. Will involve the new XLS-R models, as well as many tricks (fine-tuning, self-training, LM-decoding).

Jan 24th to Feb 7th

🎙️Speech community event incoming! 📨

The Robust Speech Challenge will be held from January 24th to February 7th in collaboration with

@OVHcloud

🔥

Come and join us to build robust speech recognition systems in 70+ languages🤗🌍

To participate:

6

58

192

0

6

65

Very cool work by

@PatrickPlaten

@Thom_Wolf

and the

@huggingface

team integrating wav2vec 2.0 into HuggingFace.

The amazing Transformers library is now also a speech recognition library!

🚨Transformers is expanding to Speech!🚨

🤗Transformers v4.3.0 is out and we are excited to welcome

@facebookai

's Wav2Vec2 as the first Automatic Speech Recognition model to our library!

👉Now, you can transcribe your audio files directly on the hub:

17

317

1K

0

10

63

The "Jigsaw Multilingual Toxic Comment Classification"

@kaggle

competition from Jigsaw/

@GoogleAI

is now over.

Happy to see winning solutions using XLM-R as their main model

Thanks to

@huggingface

for making the model very easy to use for participants

0

14

63

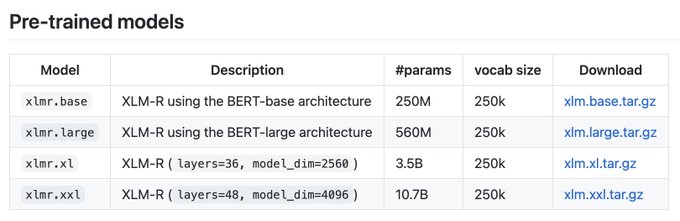

We just released the XLM-R XL (3.5 Billion parameters) and the XLM-R XXL (10.7 Billion parameters) models.

Link:

1

8

61

I cannot believe this is real

Big congrats to the magicians who made Sora:

@_tim_brooks

&

@billpeeb

,

@model_mechanic

and team

3

2

45

Research on retrieval-augmented LLMs is nicely complementary to research on dense models like GPT-3 or PaLM.

Very excited to introduce Atlas, a new retrieval augmented language model which is competitive with larger models on few-shot tasks such as question answering or fact checking.

Work led by

@gizacard

and

@PSH_Lewis

.

Paper:

2

11

74

2

3

43

Join the live stream of

@OpenAI

DevDay on Monday 10am!

Some great new stuff to be announced there

3

3

42

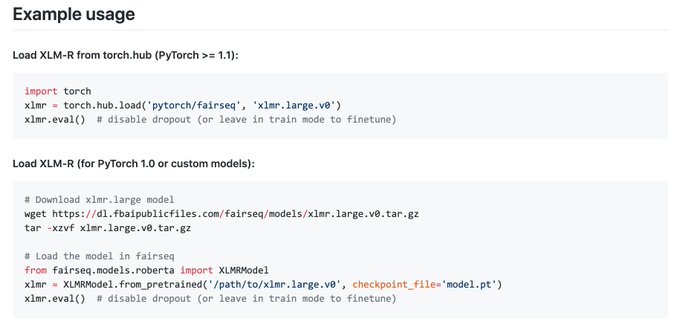

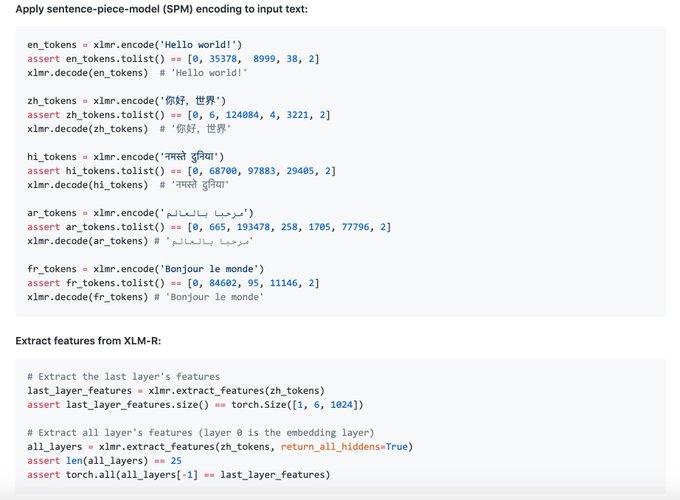

Play with our XLM-R and XLM-R_Base with

@Pytorch

hub: . Available on FairSeq, Pytext and XLM. Soon on

@HuggingFace

Transformer repo and

@TensorFlow

hub. [5/6]

4

12

42

I spent a great year at

@GoogleAI

, working on multimodal self-supervised learning, for speech and text.

Thanks to all my amazing colleagues there for the ride.

1

0

39

We're working hard to provide the community with high-quality

#CommonCrawl

corpora to enable representation learning for low-resource languages. The reign of Wikipedia corpora is over =). The CC100 corpus of XLM-R will be released soon at

#LREC2020

. (3/3)

1

3

39

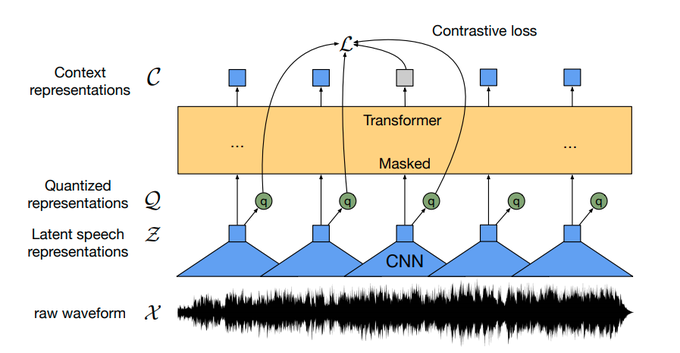

Self-supervised pretraining for speech enables automatic speech recognition with only *10 minutes* 🕐 of transcribed speech data. Works very well cross-lingually too.

Wav2vec 2.0:

XLSR:

By

@ZloiAlexei

and colleagues from Facebook

0

4

34

Congrats, Google DeepMind folks!

3

0

30

We have a new paper: "Scaling laws for generative mixed-modal LMs"

Check it out!

Although I have contributed only a little I am proud to be on that work with this set of amazing colleagues

1

2

32

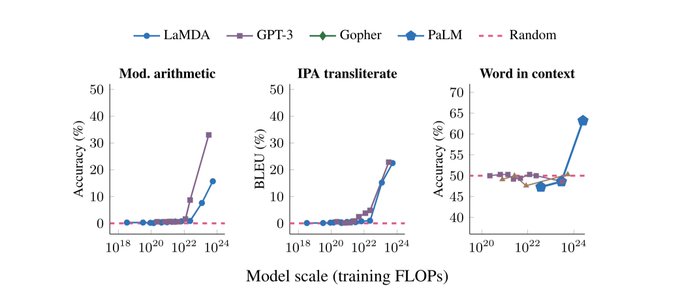

These "phase transitions" in LLMs are quite incredible

1

1

28

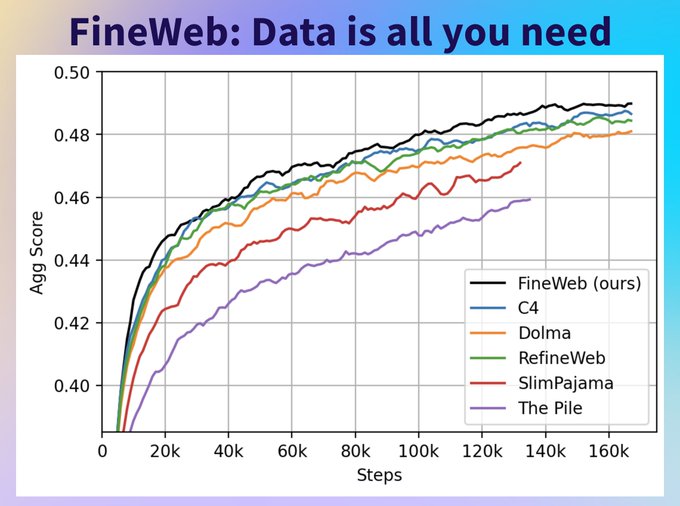

Wow!

@huggingface

Data is all we need! 👑 Not only since Llama 3 have we known that data is all we need. Excited to share 🍷 FineWeb, a 15T token open-source dataset! Fineweb is a deduplicated English web dataset derived from CommonCrawl created at

@huggingface

! 🌐

TL;DR:

🌐 15T tokens of cleaned

14

87

395

0

5

30

Congrats to the

@AIatMeta

team working hard for that cool release!

Congrats too to the former FAIRies who initiated the Llama train, wherever they are now!

1

0

25

@colinraffel

Thanks!

In the github we release four 100M/1B-sentences FAISS indexes () that fit on a single GPU (16/32GB mem) for simplicity. Embeddings are quite compressed but these still work quite well for similarity search. I'll add the github link to arxiv! :)

1

4

20

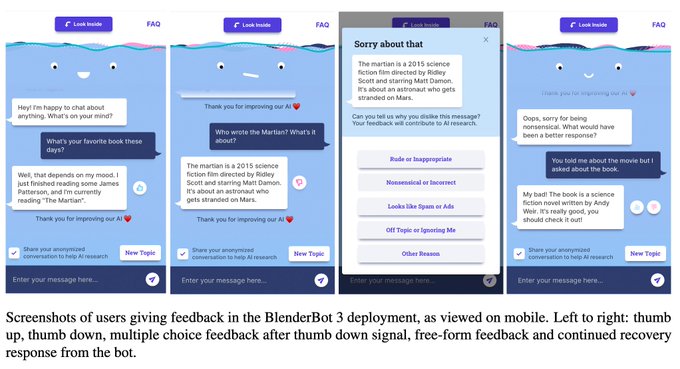

Really impressive and fun. Conversations are sometimes quite impressive. Also the Demo is well designed and easy to use.

Check it out here:

0

0

18

@pmddomingos

@sama

@ilyasut

AlexNet / Seq2seq / GPTs (i.e. LLMs) / Rebirth of self-supervised learning (GPT-1/Sentiment-neuron). Among other things

0

0

16

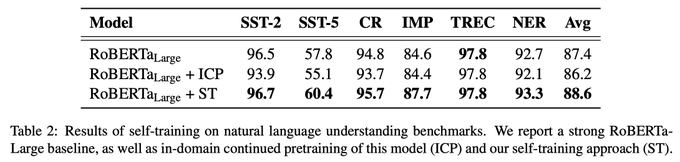

Really great trying it for generation

But a lot of downstream results reported for BLOOM & OPT () are basically close to random (e.g. SST, MNLI)

Does it make sense to report those scores? "Few-shot" doesn't mean you *don't* have to provide good results.

2

1

16

What a legend

0

0

13

@ParcolletT

Knowledge distillation (+ quantization) of large fine-tuned models to smaller models works quite well though. Big companies use that as they also need very small models for prod. What do you think about this approach?

1

0

13

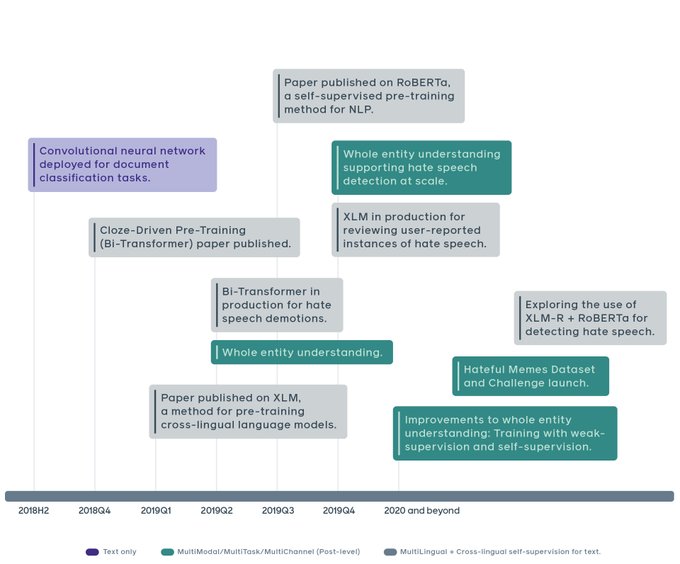

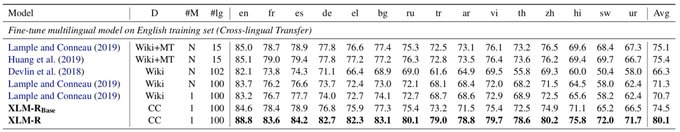

Nice article from

@FortuneMagazine

that mentions our work on hate speech:

"[Facebook] says the new techniques [XLM(-R)] were a big reason it was able [..] to increase by 70% the amount of harmful content it automatically blocked from being posted."

1

1

12

Amazing work that takes one more significant step towards the unification of neural nets for all modalities

Now open-sourced and available on

@huggingface

0

1

12

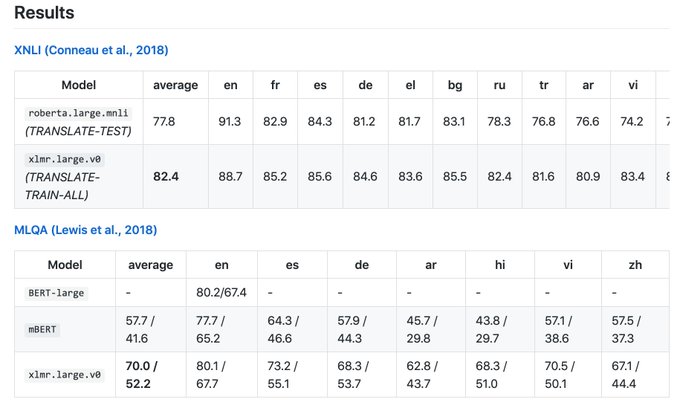

XLM-R especially outperforms mBERT and XLM-100 on low-resource languages, for which CommonCrawl data (see

#CCNet

) enables representation learning: +13.7% and +9.3% for Urdu, +21.6% and +13.8% accuracy for Swahili on XNLI. [4/6]

1

2

11

"Model downloads of XLS-R (300m,1B,2B) spiked at 3,000 downloads per day during event"

Thank you

@huggingface

@PatrickPlaten

🤗

Speech technology for everyone in all languages will become a reality, not just for research, but also for concrete products.

0

2

11

@ashkamath20

@seb_ruder

@NYUDataScience

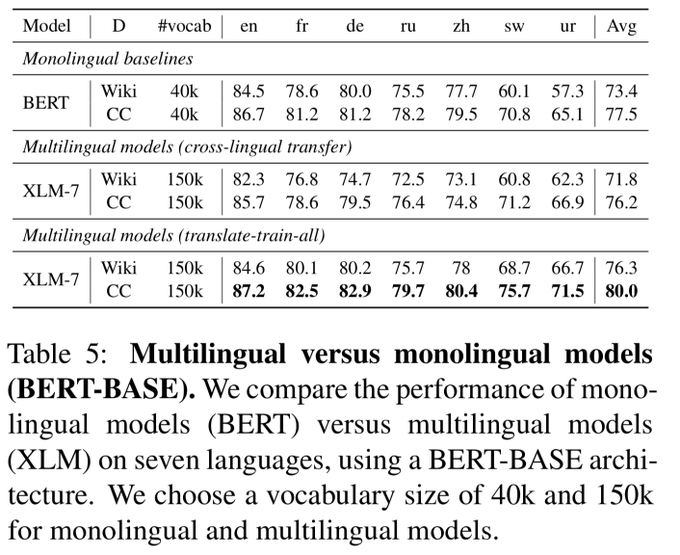

I'm not sure about monolingual transfer performing as well as joint learning though. Results from perform around 70% on XNLI which is more than 5% average accuracy below the state of the art.

2

1

9

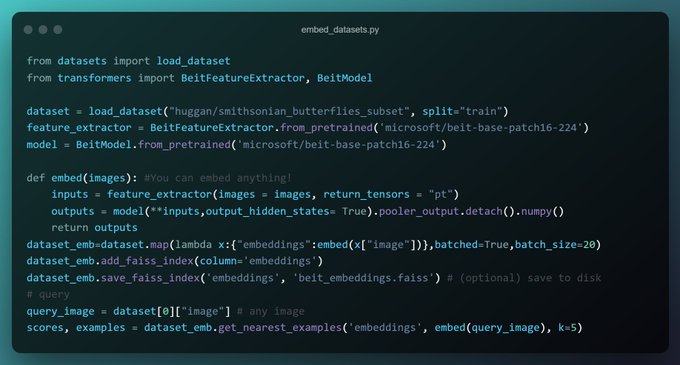

This is really awesome

Fast similarity search made simple with FAISS integration in

@huggingface

, for any embedding dataset

Underrated

@huggingface

datasets feature🧡

Adding an embedding faiss index to your dataset is this easy ⤵️

8

45

308

0

1

8

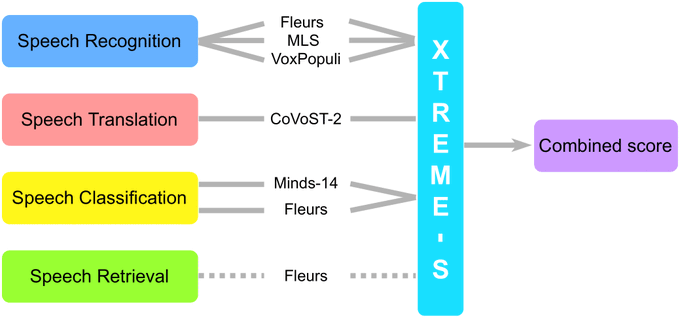

This is the result of work done by many amazing folks across

@GoogleAI

,

@huggingface

, and

@MetaAI

.

Please consider using XTREME-S so that as a community, we can accelerate progress on speech technology for the benefit of all.

📊4 challenging speech tasks, 102 spoken languages: can one model solve them all? 🤯

Introducing

@GoogleAI

's XTREME-S🏂 - the first multilingual speech benchmark that is both diverse, fully accessible, and reproducible!

👉

1/9

2

80

260

0

3

7