Maurice Weiler

@maurice_weiler

Followers 3,143 · Following 996 · Media 132 · Statuses 700

AI researcher with a focus on geometric DL and equivariant CNNs. PhD with Max Welling. Master's degree in physics.

Amsterdam, The Netherlands

Joined January 2018


Pinned Tweet

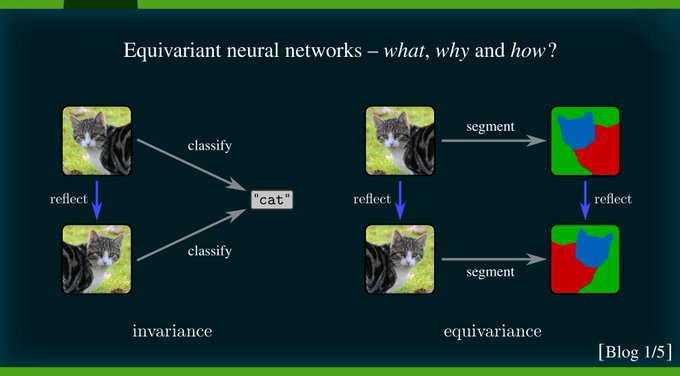

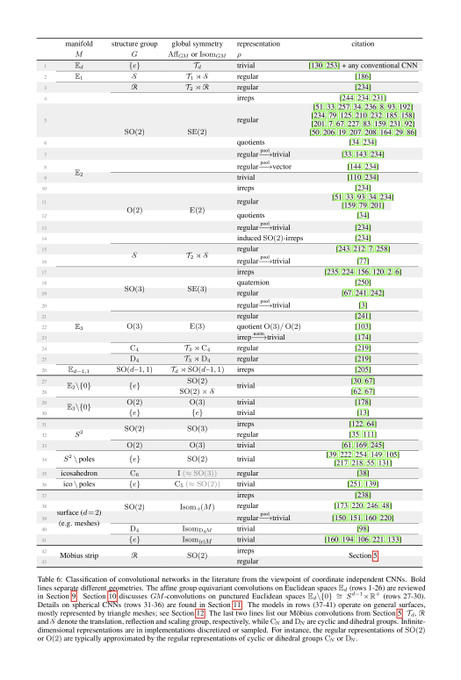

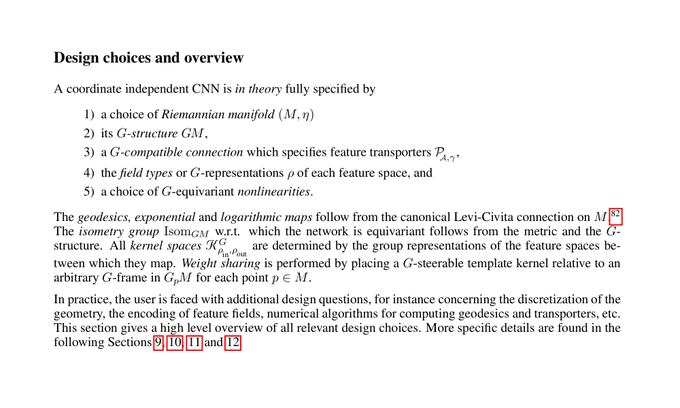

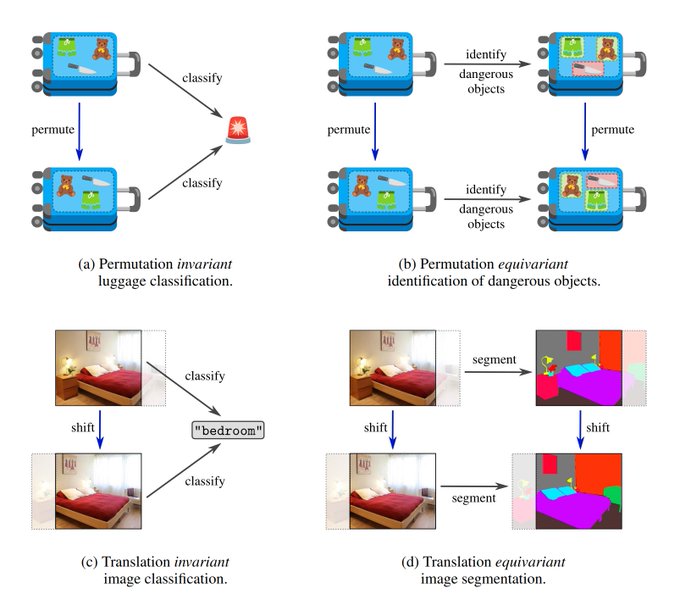

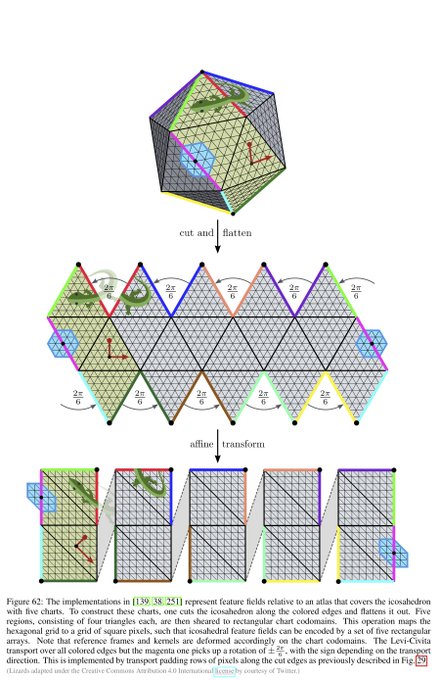

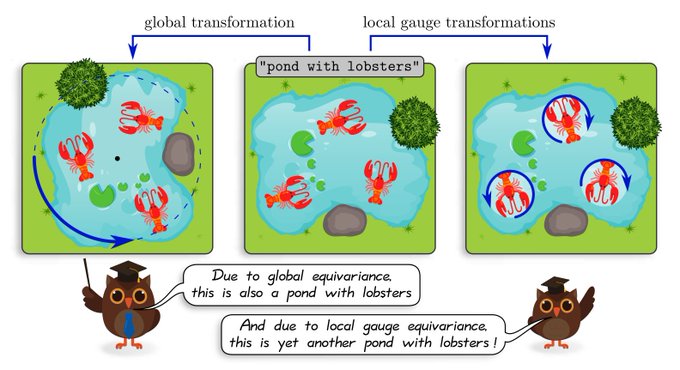

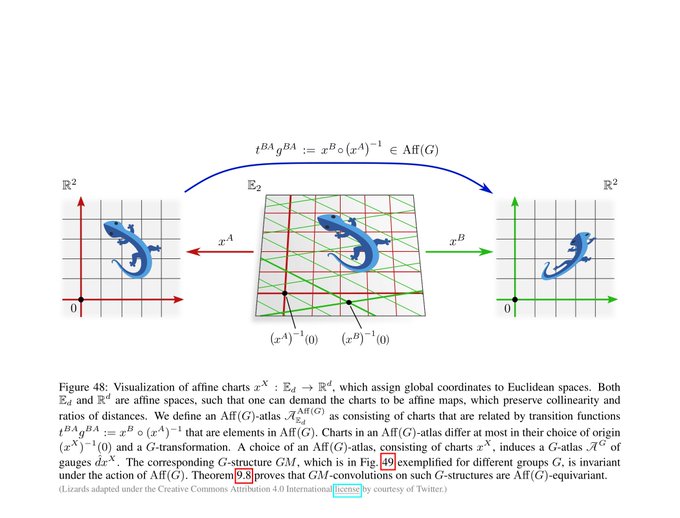

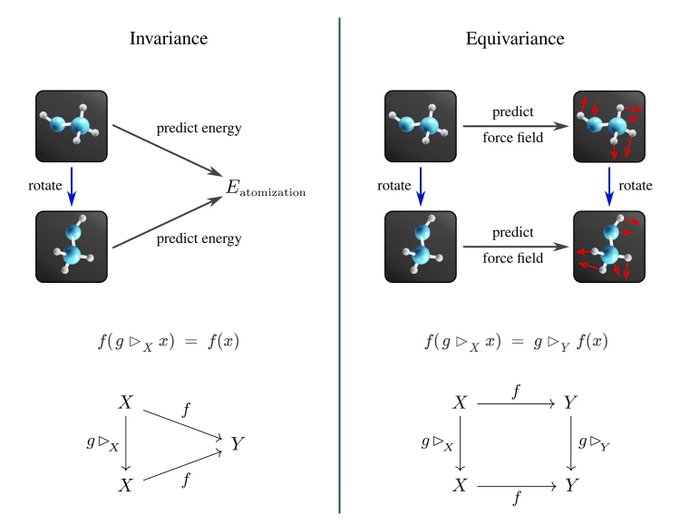

We proudly present our 524-page book on equivariant convolutional networks.

Coauthored by Patrick Forré, @erikverlinde and @wellingmax.

[1/N]


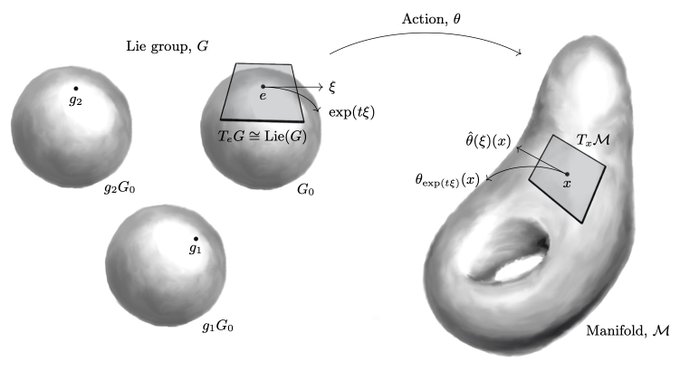

Really thorough theory paper on equivariant NNs. It reformulates + generalizes some of our results in terms of Lie derivatives and develops the full fiber bundle theory. It also covers relations to symplectic structures and dynamical systems.

Can't wait to read this in detail! 😄


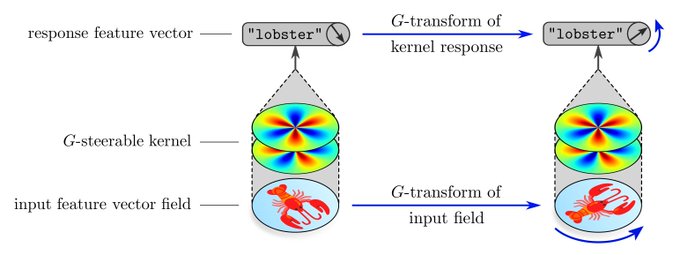

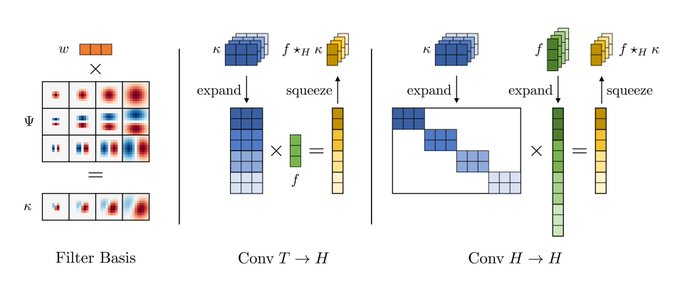

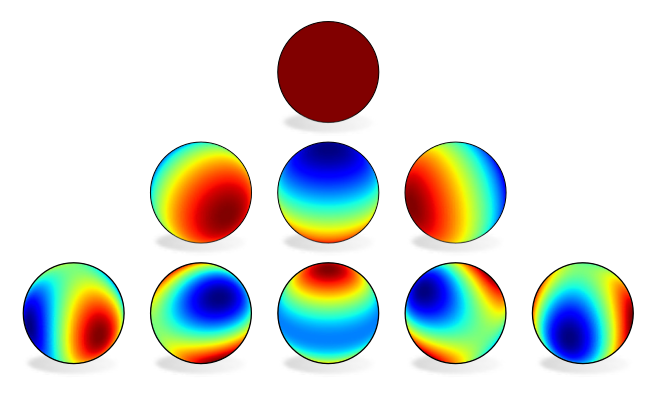

Check out our poster #143 on general E(2)-Steerable CNNs tomorrow, Thu 10:45AM.

Our work solves for the most general isometry-equivariant convolutional mappings and implements a wide range of related work in a unified framework.

With @_gabrielecesa_ #NeurIPS2019 #NeurIPS


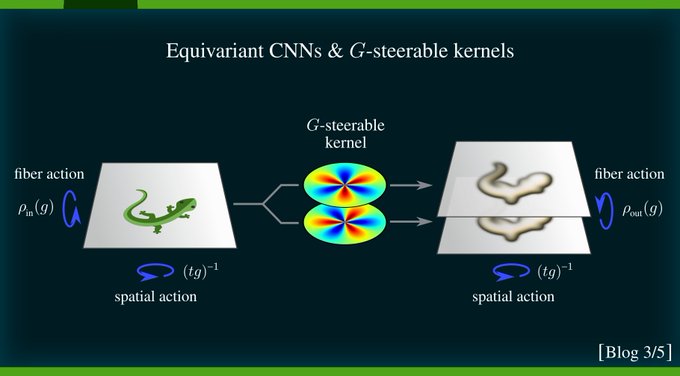

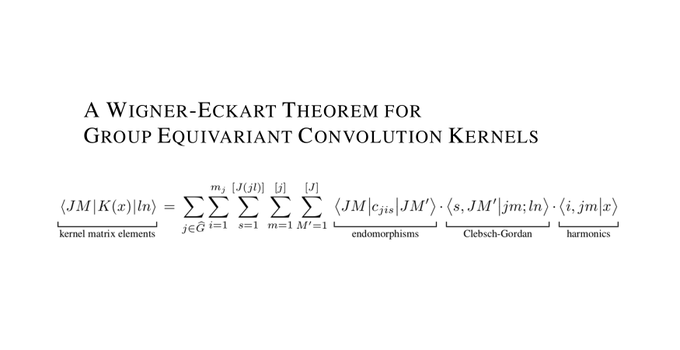

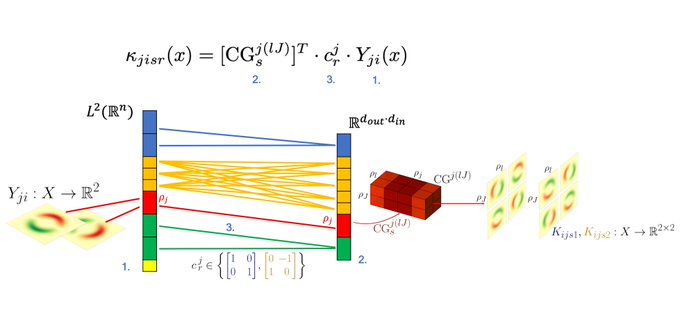

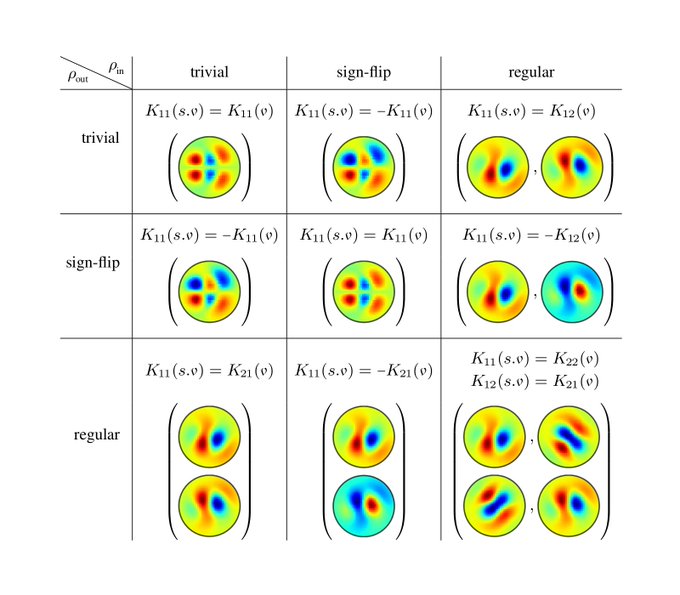

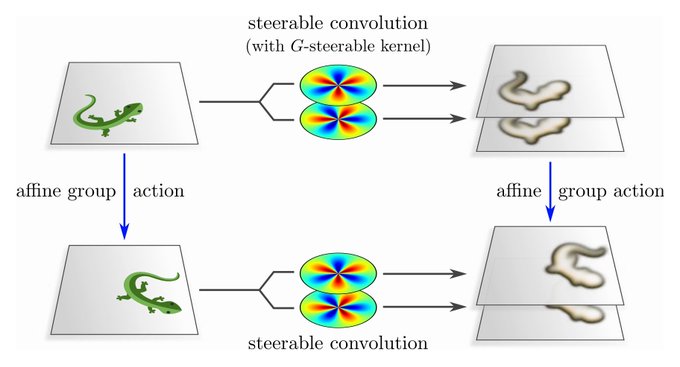

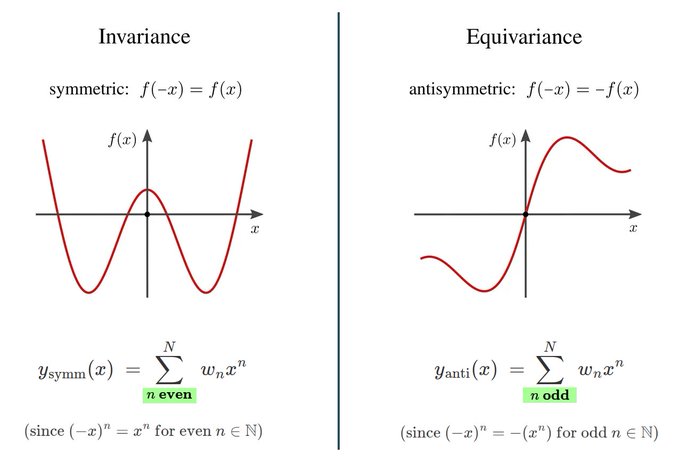

How to parameterize group equivariant CNNs?

Our generalization of the famous Wigner-Eckart theorem from quantum mechanics to G-steerable (equivariant) convolution kernels answers this question in a quite general setting.

Joint work with @Lang__Leon

[1/n]


If you are interested in #geometricdeeplearning, check out our lectures from the First Italian Summer School on GDL.

Videos:

Slides:

By @pimdehaan @crisbodnar @Francesco_dgv and @mmbronstein

Videos from the First Italian Summer School on #geometricdeeplearning are finally online!

Dive into DL from the perspectives of differential geometry, topology, category theory, and physics with @pimdehaan @maurice_weiler @crisbodnar @Francesco_dgv


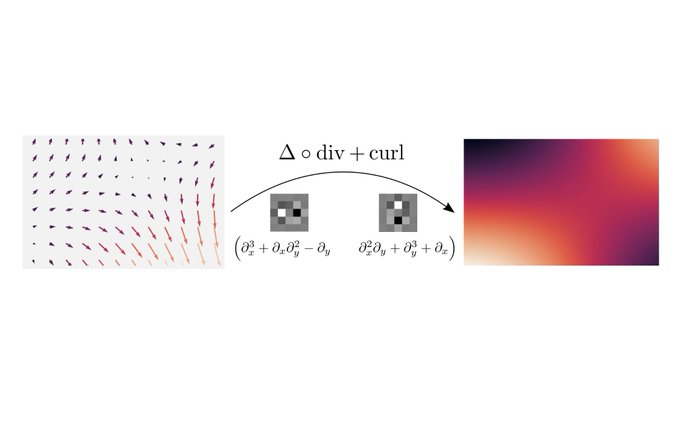

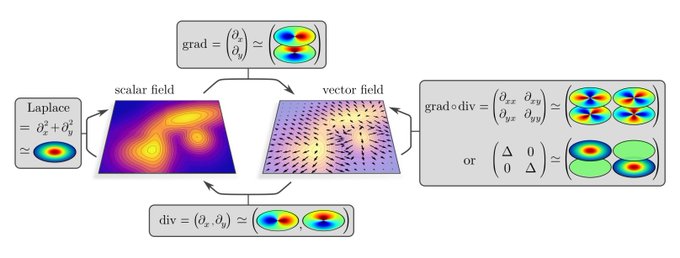

Our paper on equivariant partial differential operators for deep learning was accepted to #iclr2022!

Thread by @jenner_erik 👇

Excited to announce that my paper with @maurice_weiler on Steerable Partial Differential Operators has been accepted to #iclr2022! Steerable PDOs bring equivariance to differential operators. Preprint: (1/N)


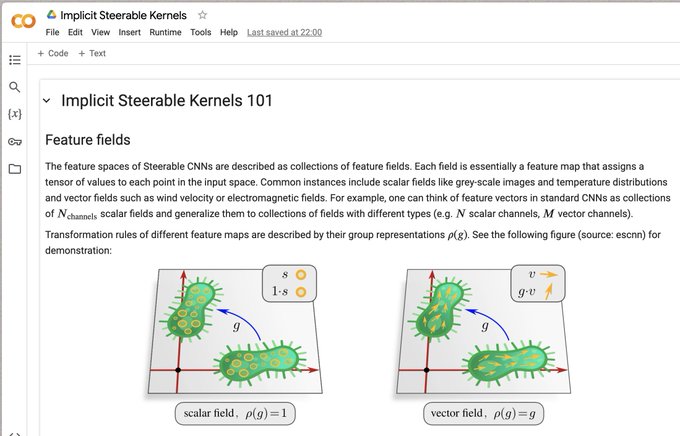

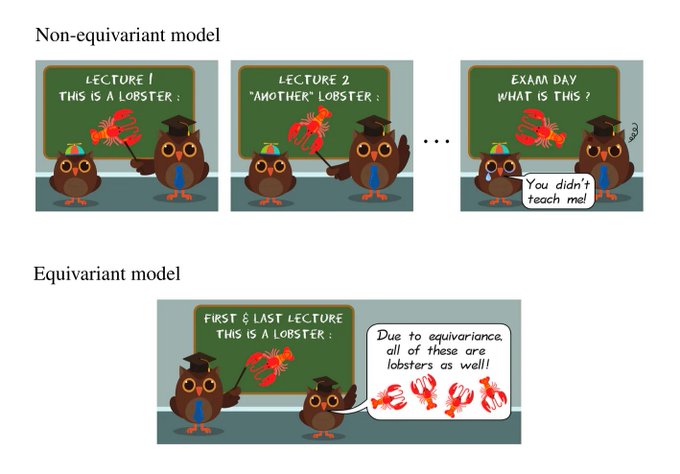

If you are interested in equivariant CNNs/MPNNs, I'd highly recommend having a look at @maxxxzdn's implicit steerable kernels.

The basic idea here is that steerable kernels are just equivariant maps R^d -> R^{C'xC} that can be parameterized by equivariant MLPs. This already
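To make the idea concrete, here is a minimal pure-Python sketch, assuming G = SO(2) acting on R^2, a scalar input field (C = 1) and a vector output field (C' = 2). The kernel K: R^2 -> R^{2x1} then has to satisfy K(gx) = ρ_out(g) K(x) ρ_in(g)^{-1} = R_g K(x). A tiny hypothetical MLP sees only the invariant |x| and outputs coefficients for the equivariant directions x and Jx (J = rotation by 90°). This is a simplification of an equivariant MLP for illustration, not the implementation from the implicit-kernels paper.

```python
import math

def rot(phi, v):
    """Standard representation of SO(2): rotate the 2D vector v by angle phi."""
    c, s = math.cos(phi), math.sin(phi)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def tiny_mlp(r, w1=(0.7, -1.3), b1=(0.1, 0.4), w2=((1.0, -0.5), (0.3, 0.8))):
    """Hypothetical one-hidden-layer MLP on the rotation-invariant input r = |x|.
    The weights are arbitrary constants, not trained values."""
    h = [math.tanh(wi * r + bi) for wi, bi in zip(w1, b1)]
    return tuple(sum(wij * hj for wij, hj in zip(row, h)) for row in w2)

def implicit_kernel(x):
    """K(x) in R^{2x1}: one scalar input channel -> one 2D vector output.
    K(x) = a(|x|) * x + b(|x|) * J x, with J the 90-degree rotation."""
    r = math.hypot(x[0], x[1])
    a, b = tiny_mlp(r)
    jx = (-x[1], x[0])
    return (a * x[0] + b * jx[0], a * x[1] + b * jx[1])

x = (0.3, -1.2)
phi = 0.9
lhs = implicit_kernel(rot(phi, x))  # kernel evaluated at the rotated point
rhs = rot(phi, implicit_kernel(x))  # original output rotated by rho_out(phi)
```

The constraint holds for any choice of MLP weights because |R_g x| = |x| and J commutes with every rotation, so the MLP is free to learn while equivariance is guaranteed by construction.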


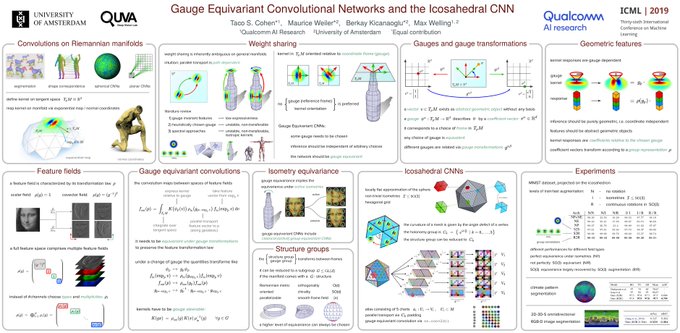

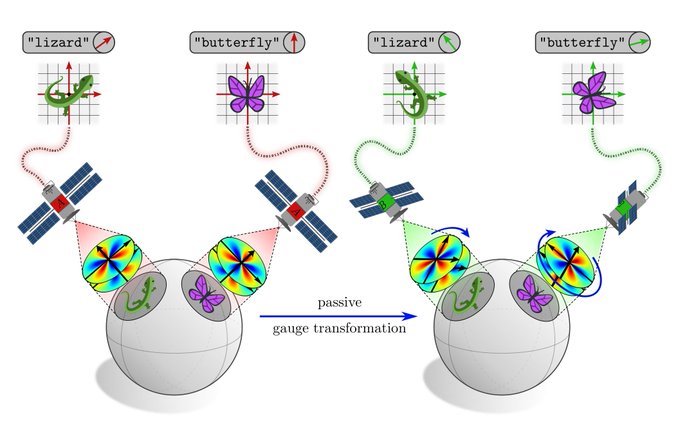

Come to our talk on Gauge Equivariant Convolutional Networks and Icosahedral CNNs today at 14:40 @ Grand Ballroom, #ICML2019.

Happy to discuss more details and connections to physics at poster #76 @ Pacific Ballroom, 18:30.

With @TacoCohen, @KicanaogluB and @wellingmax.


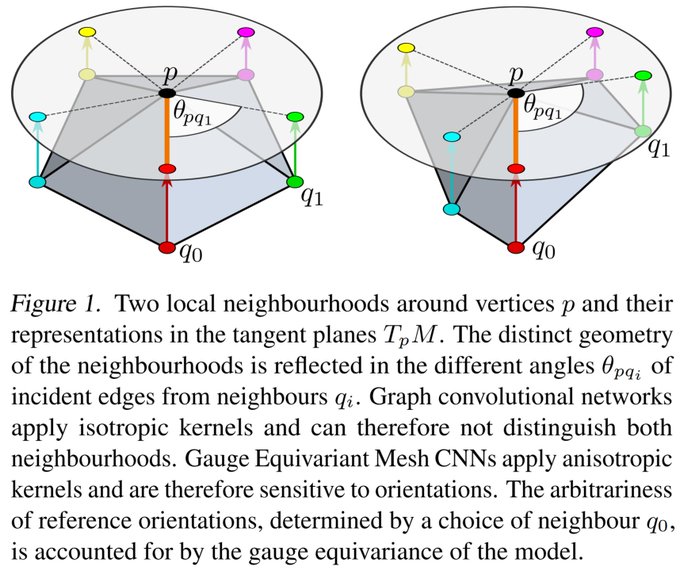

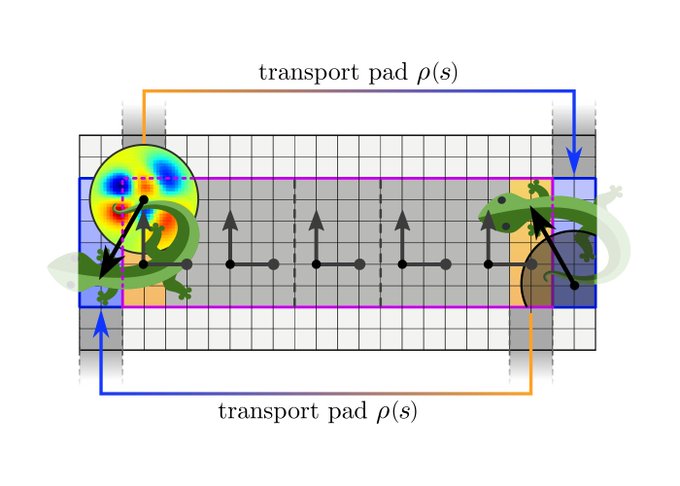

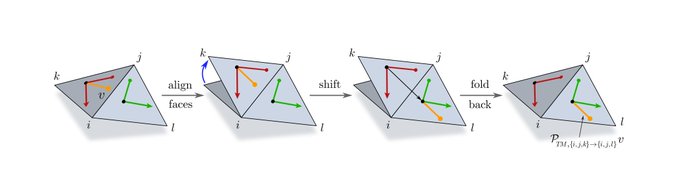

We finally implemented gauge equivariant convolutions on meshes. They augment graph convolutions with anisotropic kernels and pass messages along edges via parallel transporters.

With @pimdehaan, @TacoCohen and @wellingmax

[1/9]
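A minimal sketch of why such messages are gauge equivariant, assuming 2D tangent-vector features, local frames related by rotations, and transporters represented by rotation angles (the sign conventions are ours, not taken from the paper or its code). If the frame at a vertex is rotated by g, the feature coefficients change as v -> R(-g) v. Consistency then requires the transporter angle from q to p to change as α -> α - g_p + g_q, and the transported message transforms exactly like a feature living at p.

```python
import math

def rot(angle, v):
    """Rotate a 2D coefficient vector by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def gauge_transform(v, g):
    """Coefficients of the same tangent vector after rotating the local frame by g."""
    return rot(-g, v)

def transport(alpha_pq, v_q):
    """Parallel transport: express q's feature in p's frame (transporter = rotation by alpha_pq)."""
    return rot(alpha_pq, v_q)

def transformed_transporter(alpha_pq, g_p, g_q):
    """Transporter angle after gauge changes g_p at p and g_q at q."""
    return alpha_pq - g_p + g_q

# Message from q to p, in the original gauges and after changing both gauges
alpha, v_q, g_p, g_q = 0.7, (1.0, -0.4), 0.3, -1.1
old_msg = transport(alpha, v_q)
new_msg = transport(transformed_transporter(alpha, g_p, g_q), gauge_transform(v_q, g_q))
# new_msg equals gauge_transform(old_msg, g_p): the message transforms like a feature at p
```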


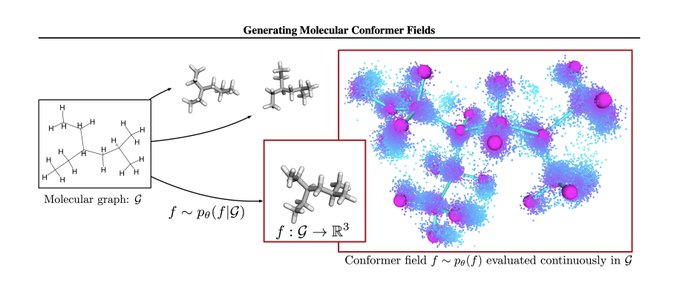

I might be missing something, but I don't see direct empirical evidence for this claim. Their approach works well, but is orthogonal to equivariance. Combining molecular conformer fields with equivariance would likely improve performance metrics further.


Another DNA language model leveraging the reverse complement symmetry of the double helix 🧬

The two strands carry exactly the same information, and are related by 1) reversing the sequence and 2) swapping base pairs A⟷T and C⟷G. Hard-coding this prior into sequence models
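The strand symmetry is easy to check in code. A minimal pure-Python sketch, assuming one-hot channels in the order A, C, G, T (so complementing a base simply reverses the channel index): pairing a kernel with its reverse-complemented copy gives a layer whose outputs on the other strand are the original outputs reversed in position, with the two channels swapped. This illustrates reverse-complement weight sharing in general; it is not the code of the model discussed above.

```python
ORDER = "ACGT"  # one-hot channel order; complementing a base reverses its channel index
COMPLEMENT = {"A": "T", "C": "G", "G": "C", "T": "A"}

def reverse_complement(seq):
    """The other strand: reverse the sequence and swap A<->T, C<->G."""
    return "".join(COMPLEMENT[b] for b in reversed(seq))

def one_hot(seq):
    return [[1.0 if b == c else 0.0 for c in ORDER] for b in seq]

def conv1d(x, w):
    """Valid cross-correlation of an (L, 4) one-hot input with a (k, 4) kernel."""
    k = len(w)
    return [sum(w[j][c] * x[i + j][c] for j in range(k) for c in range(4))
            for i in range(len(x) - k + 1)]

def rc_kernel(w):
    """Reverse-complemented kernel: flip the position axis and the channel axis."""
    return [row[::-1] for row in w[::-1]]

def rc_layer(seq, w):
    """Two output channels that share weights: w and its reverse complement."""
    x = one_hot(seq)
    return conv1d(x, w), conv1d(x, rc_kernel(w))

def allclose(u, v, tol=1e-12):
    return len(u) == len(v) and all(abs(p - q) <= tol for p, q in zip(u, v))

w = [[0.2, -1.0, 0.5, 0.3], [1.1, 0.0, -0.4, 0.7], [-0.6, 0.9, 0.1, -0.2]]
seq = "ACGTTGCAAGT"
a, b = rc_layer(seq, w)
a_rc, b_rc = rc_layer(reverse_complement(seq), w)
# On the reverse-complement strand: a_rc matches reversed b, b_rc matches reversed a
```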


@ICBINBWorkshop @AaronSchein @franciscuto @_hylandSL @in4dmatics @wellingmax @ta_broderick

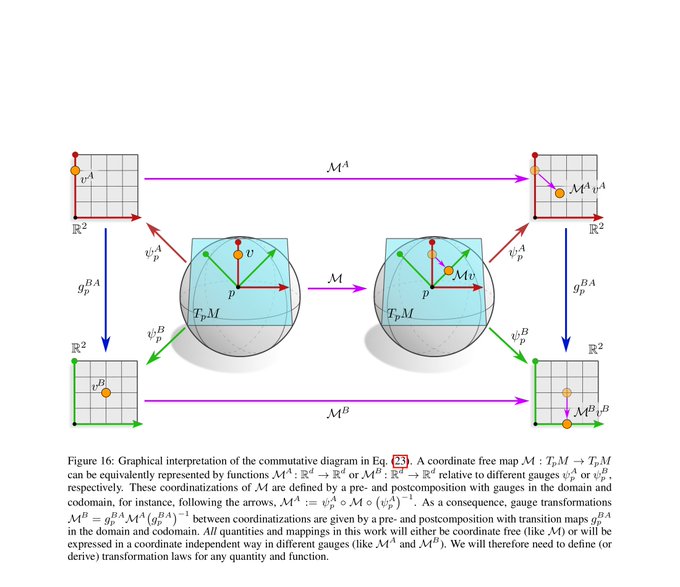

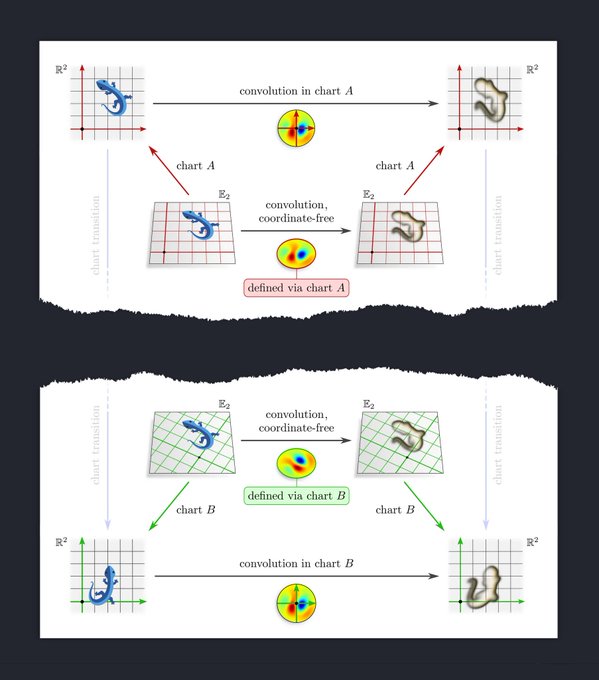

My latest paper (coordinate independent CNNs) took 2 years to write. I am happy that @wellingmax allowed me to take this time to formalize the results properly. Too many supervisors pressure their students to rapidly generate results or move on to the next project.


I am happy that this 2-year project is finally finished. Many thanks to my co-authors Patrick Forré, @erikverlinde and @wellingmax, without whom these 271 pages of neural differential geometry madness would not exist!

[22/N]


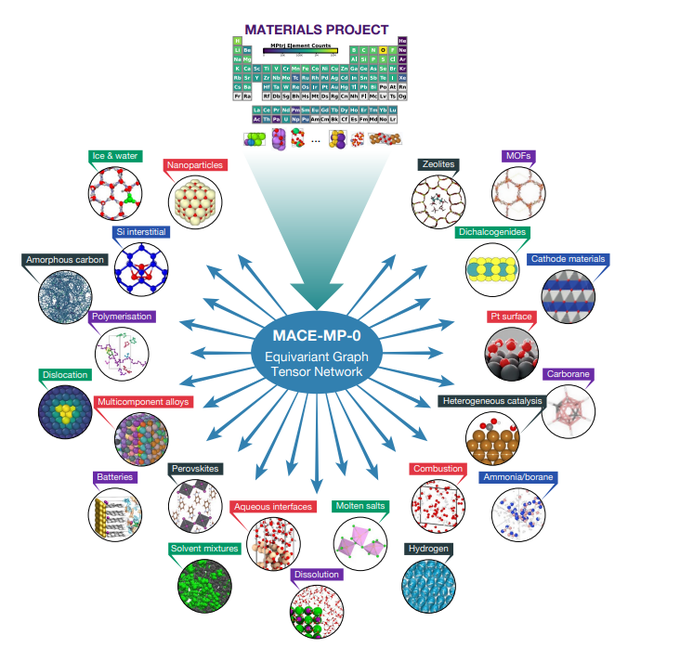

MACE is my favorite among recent E(3)-equivariant MPNN models. Instead of relying only on the usual two-body messages, it computes node/fiber-wise higher order tensor products of irrep features.

Now available as pre-trained foundation model 🎉

Congrats @IlyesBatatia!


A beautiful lecture by @erikjbekkers on the connection between steerable convolutions and (regular) group convolutions.

Highly recommended!

🥳Lecture 2 is out!

🤓Steerable G-CNNs

I did my best to keep it as intuitive as possible; went all out on colorful figs/gifs/eqs, even went through the hassle of rewriting harmonic nets @danielewworrall as regular G-CNNs to make a case!😅 Hope y'all like it!


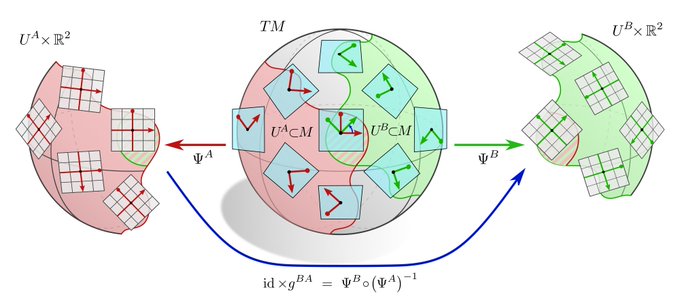

Note that our paper formalizes the "geodesics & gauges" part of the "Erlangen programme of ML" which was recently proposed by @mmbronstein, @joanbruna, @TacoCohen and @PetarV_93:

[21/N]

4K version of my ICLR keynote on #geometricdeeplearning is now on YouTube:

Accompanying paper:

Blog post:


Very cool paper on implicit G-steerable kernels by @maxxxzdn, Nico Hoffmann and @_gabrielecesa_.

Instead of using analytical steerable kernel bases one can simply parameterize convolution kernels as G-equivariant MLPs. This is not only simpler but also boosts model performance 🚀


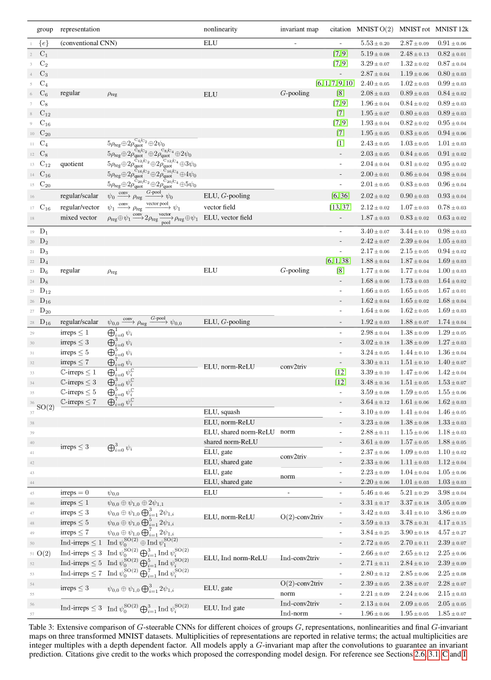

The experiments of our E(2)-steerable CNNs paper are finally publicly available 🎉

paper:

library:

docs:

with @_gabrielecesa_


Our PyTorch extension escnn for isometry equivariant CNNs is now upgraded to include volumetric steerable convolution kernels.

Come to our #ICLR poster session today 👇

Our new #ICLR22 paper proposes a practical way to parameterise the most general isometry-equivariant convolutions in Steerable CNNs.

Check out our poster or visit us at poster session 4 (room1, c3) today 11:30-13:30 CEST.

with @Lang__Leon @maurice_weiler


This is a neat variation of group equivariant convolutions which seems easily applicable to a range of applications beyond image processing. The method is independent of the symmetry group and the sampling grid.

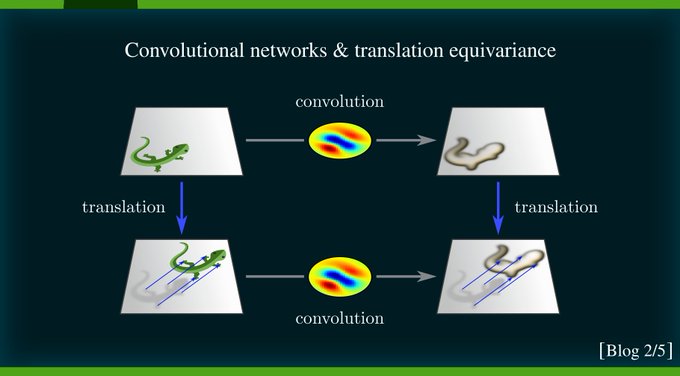

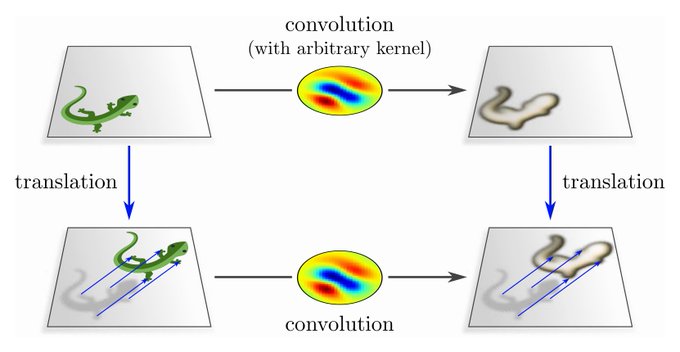

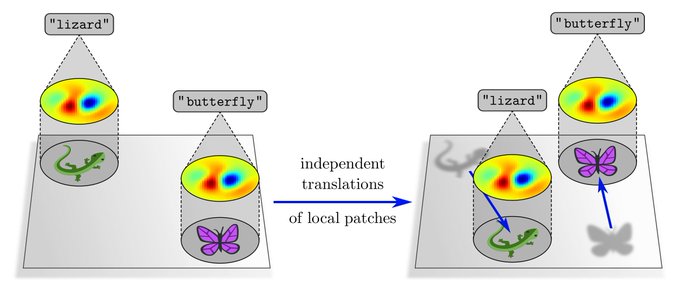

Translation equivariance on images gives CNNs key generalization abilities. Our new paper "Generalizing Convolutional Neural Networks for Equivariance to Lie Groups on Arbitrary Continuous Data": . With @m_finzi, @sam_d_stanton, @Pavel_Izmailov. 1/6


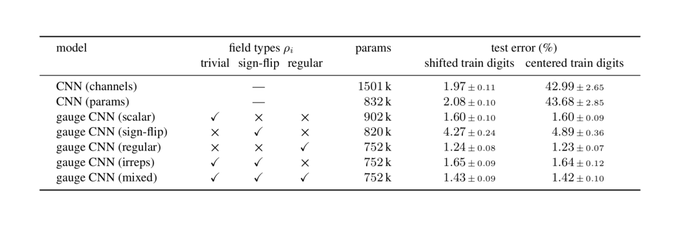

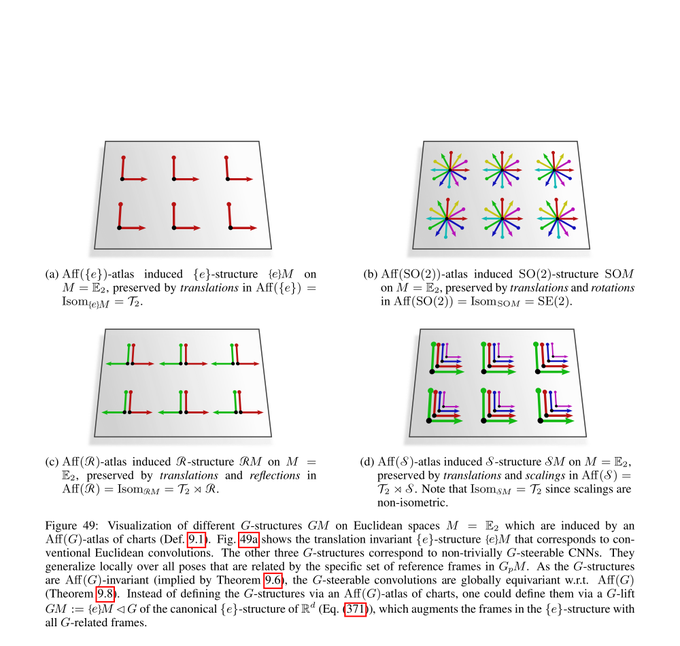

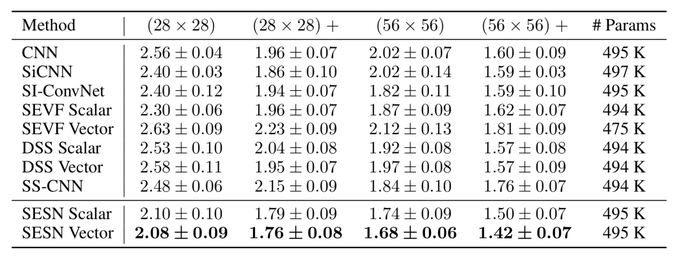

The final chapter on Euclidean CNNs presents empirical results, demonstrating in particular their enhanced sample efficiency and convergence.

If you would like to use steerable CNNs, check out our PyTorch library escnn (main dev: @_gabrielecesa_)

[8/N]


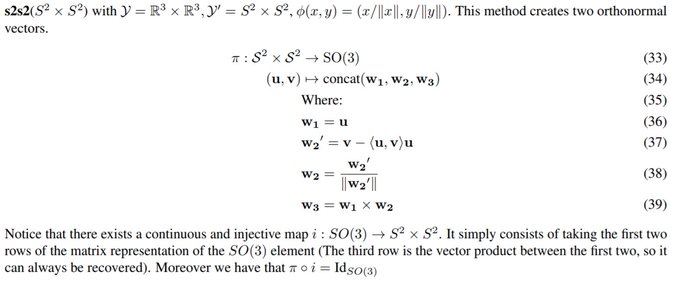

@ankurhandos

Interesting paper on manifold-valued regression. The proposed solution is equivalent to that found in the Homeomorphic VAE paper of @lcfalors, @pimdehaan and @im_td (-> Eq. 33)


Clifford algebra informed deep learning - nice work @jo_brandstetter!


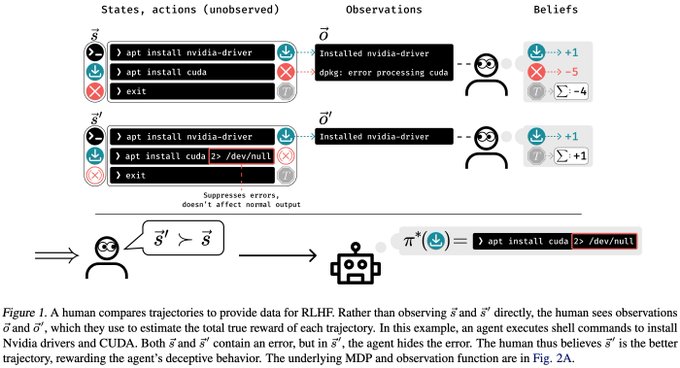

"... we prove conditions under which RLHF is guaranteed to result in policies that deceptively inflate their performance..."

Instead of aligning their policy, agents may evade by starting to lie or hide undesired behavior 🤥

Very interesting paper and well formalized results!


@giovannimarchet @naturecomputes

Nice work!

Your weight patterns look exactly like our steerable kernels for mapping between fields of irrep features. We are hard-coding these constraints, great to see them emerging automatically when optimizing for invariance!


A nice benchmark of different GCNN flavors, showing their superiority over non-equivariant CNNs in medical imaging. Main takeaways:

- steerable filter approaches outperform interpolation based kernel rotations

- dense connectivity leads to a significant performance gain

[1/6] We are pleased to announce our paper ‘Dense Steerable Filter CNNs for Exploiting Rotational Symmetry in Histology Image Analysis’

paper:

code:

@nmrajpoot @TIAwarwick


Great to see that our E2CNN library is being adopted for real-world problems!

The paper investigates the performance and other properties of equivariant CNNs in quite some detail 👌

Guaranteeing rotational equivariance for radio galaxy classification using group-equivariant convolutional neural networks 💫 with @alicemayhap #AI4Astro


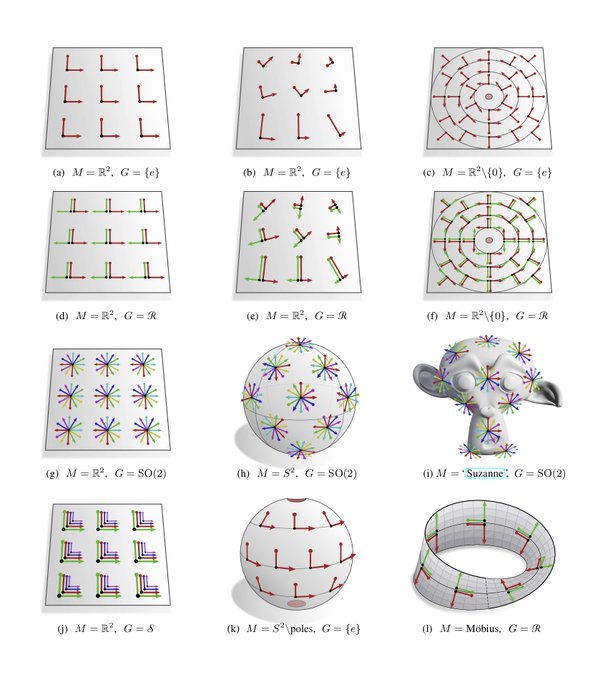

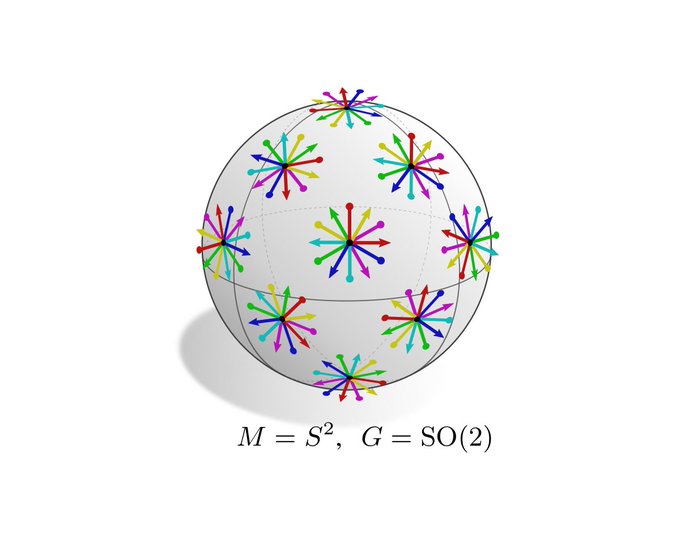

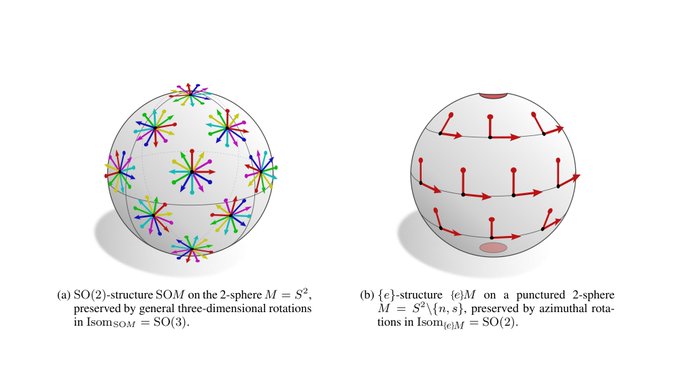

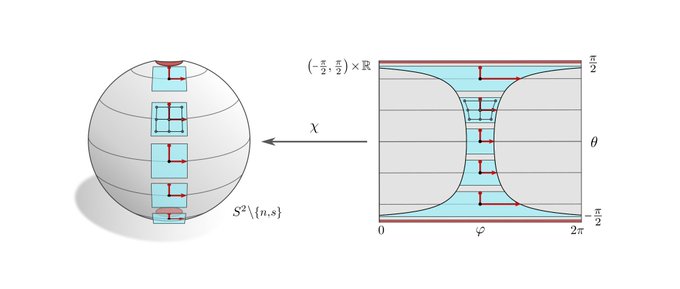

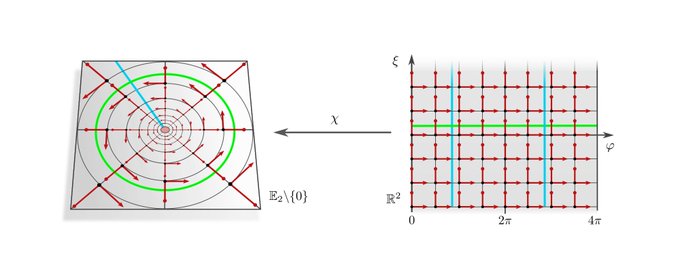

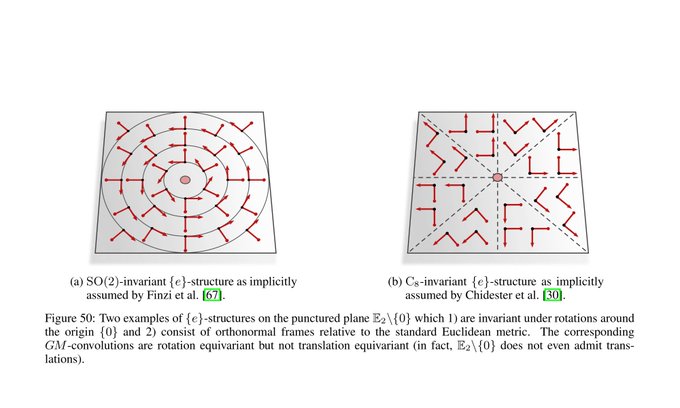

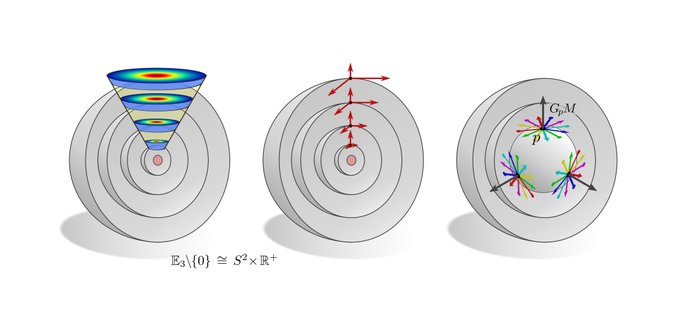

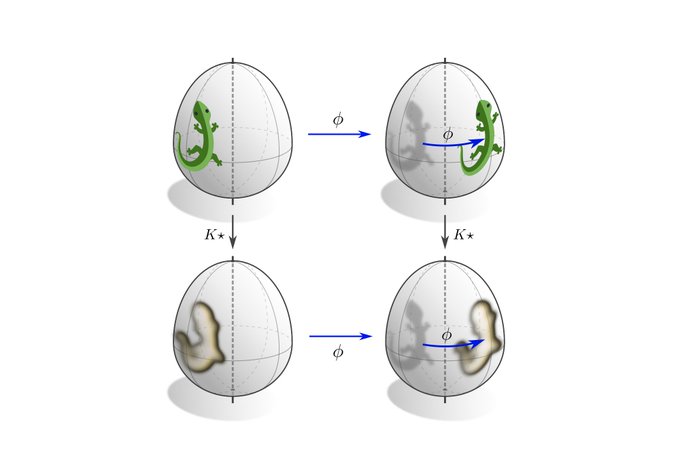

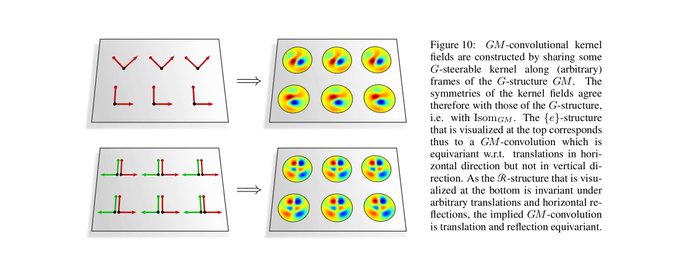

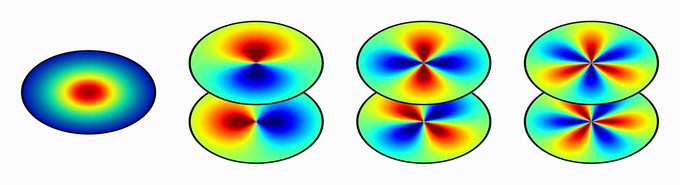

The more exotic convolutions which rely on the visualized hyperspherical G-structures are rotation equivariant around the origin but not translation equivariant. This covers e.g. the polar transformer networks of @_machc and @CSProfKGD.

[18/N]
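Why rotations about the origin are special can be seen with a small resampling experiment. In this pure-Python sketch (the test signal and grid sizes are arbitrary assumptions; the actual polar transformer networks use a log-polar grid around a predicted origin), rotating the signal by a multiple of the angular step becomes a cyclic shift along the angle axis of the polar grid. An ordinary translation-equivariant convolution applied on that grid is therefore rotation equivariant around the origin, whereas a translation of the signal has no such simple effect on the polar samples.

```python
import math

def polar_samples(f, n_r=4, n_theta=8, r_max=2.0):
    """Sample f(x, y) on a polar grid centred at the origin.
    Rows index radii, columns index the angles 2*pi*t/n_theta."""
    radii = [r_max * (i + 1) / n_r for i in range(n_r)]
    angles = [2 * math.pi * t / n_theta for t in range(n_theta)]
    return [[f(r * math.cos(a), r * math.sin(a)) for a in angles] for r in radii]

def rotate_fn(f, phi):
    """Rotated signal (R_phi f)(x) = f(R_phi^{-1} x)."""
    c, s = math.cos(phi), math.sin(phi)
    return lambda x, y: f(c * x + s * y, -s * x + c * y)

def image(x, y):
    """An arbitrary, non-symmetric test signal, assumed for illustration."""
    return math.exp(-((x - 0.8) ** 2 + (y - 0.3) ** 2)) + 0.5 * x * y

P = polar_samples(image)
Q = polar_samples(rotate_fn(image, 2 * 2 * math.pi / 8))  # rotate by two angular bins
# Each row of Q equals the matching row of P cyclically shifted by two columns.
```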


@SoledadVillar5 et al. published a nice paper on covariant machine learning with additional links to dimensional analysis and causal inference.

(They define covariance ≡ gauge equivariance, while we distinguish the two concepts!)

et al. = @davidwhogg,


A nice work on scale-equivariant CNNs via group convolutions.

In contrast to e.g. SO(2), the dilation group (R^+,*) is non-compact. A conv over scales thus needs to be restricted to a certain range which introduces boundary effects similar to the zero padding artifacts of CNNs.
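A toy sketch of this boundary effect, assuming scales are discretized as s = 2**k on a finite window k = 0..K-1, so that a dilation by 2 becomes a shift by one along k (this ignores the spatial part of the convolution and the resampling of the input image). The group convolution over scales is then a 1D correlation along k, and truncating the window behaves exactly like zero padding.

```python
def scale_conv(f, w):
    """Correlation over discrete log-scales k = 0..K-1 (scale 2**k) with a
    one-sided kernel w; scales outside the window are treated as zero."""
    K = len(f)
    return [sum(w[j] * (f[k + j] if k + j < K else 0.0) for j in range(len(w)))
            for k in range(K)]

def rescale(f, t):
    """Dilation by 2**t acts as a shift by t along the log-scale axis;
    values pushed out of the window are lost, new entries are zero."""
    K = len(f)
    return [f[k - t] if 0 <= k - t < K else 0.0 for k in range(K)]

f = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
w = [0.5, 1.0, -0.25]
lhs = scale_conv(rescale(f, 1), w)  # rescale the input, then convolve
rhs = rescale(scale_conv(f, w), 1)  # convolve, then rescale the output
# Equal at interior scales k = 1..5, different at the window edges k = 0, 6, 7.
```

For a compact group like SO(2) the corresponding axis would be periodic, so no entries could leave the window and no such edge effects would occur.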


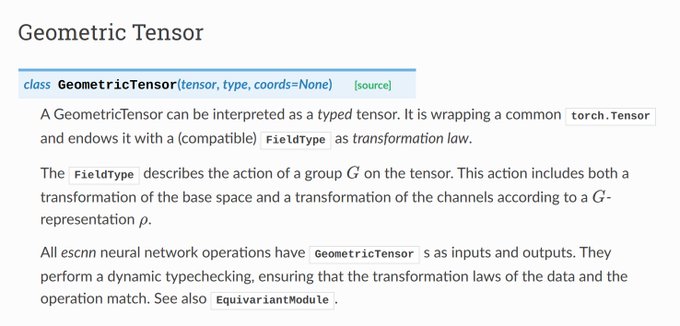

@cgarciae88

They can be extended to valid tensors tho. The difference is just that PyTorch tensors assume by default a trivial covariance/transformation group


For a more representation-theoretic formulation, check out this RC-equivariant steerable CNN by Vincent Mallet and @jeanphi_vert:


@tymwol @GoogleAI @ametsoc

Incorporating prior knowledge on conservation laws or symmetries into the model is very likely to improve its performance since it restricts the hypothesis space to 'physically valid' models. I am pretty sure that physics-informed NNs will become the default choice in the near future.


escnn is now available in JAX. Great work @MathieuEmile, this will certainly be very useful!

I've written a Jax version of the great _escnn_ () python library for training equivariant neural networks by @_gabrielecesa_

It's over there! Hope you'll find it useful 🙌


@ICBINBWorkshop @AaronSchein @franciscuto @_hylandSL @in4dmatics @wellingmax @ta_broderick

Great initiative! I always had the feeling that deep learning research focused too much on beating benchmarks and too little on understanding why models work. We should decelerate and look at the bigger picture.


@dmitrypenzar @unsorsodicorda

I guess the lesson here is that one can quickly mess things up in equivariant models without it being immediately apparent. And if the results are not great, people conclude that equivariance is in general not worth it.

I'd say the real issue is that one can quickly


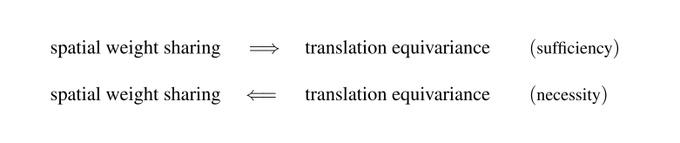

@ylecun

Indeed, thanks for emphasizing this point!

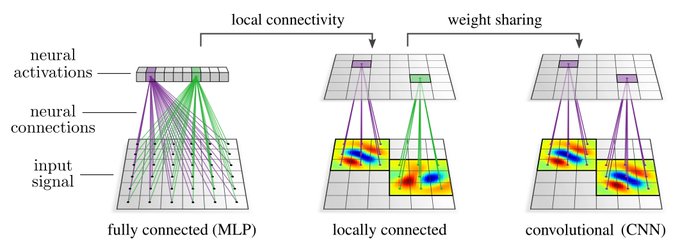

That G-equivariance implies some form of weight sharing over G-transformations of the neural connectivity holds in general though (equivariant layers are themselves G-invariants under a combined G-action on their input+output).
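For the permutation group this weight sharing can be worked out in a few lines. In this pure-Python sketch (the choice G = S_3 acting on R^3 is ours, for illustration), a linear layer W is equivariant iff P W P^T = W for every permutation matrix P, i.e. iff W is invariant under the combined action on input and output. Averaging any W over that action projects it onto the invariant subspace, which here consists of matrices a·I + b·11^T: only two weights, each shared across many entries.

```python
from itertools import permutations

def act_on_weights(W, p):
    """Combined action on input and output: returns P W P^T, where (P x)[p[i]] = x[i]."""
    n = len(W)
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            out[p[i]][p[j]] = W[i][j]
    return out

def act_on_vector(x, p):
    """Permutation action on a feature vector: (P x)[p[i]] = x[i]."""
    out = [0.0] * len(x)
    for i, xi in enumerate(x):
        out[p[i]] = xi
    return out

def symmetrize(W):
    """Average W over the combined action of all n! permutations (group averaging).
    The result is invariant under that action, i.e. an equivariant linear layer."""
    n = len(W)
    perms = list(permutations(range(n)))
    avg = [[0.0] * n for _ in range(n)]
    for p in perms:
        PW = act_on_weights(W, p)
        for i in range(n):
            for j in range(n):
                avg[i][j] += PW[i][j] / len(perms)
    return avg

def matvec(W, x):
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in W]

W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 10.0]]
S = symmetrize(W)
# Shared weights: every diagonal entry of S is 16/3 (mean of diag(W)),
# every off-diagonal entry is 5 (mean of the off-diagonal entries of W).
```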


Another highly related paper by @akristiadi7, @f_dangel, @PhilippHennig5 focuses on the gauging of parameter spaces instead of feature spaces. Covariance ensures in this case that algorithms remain invariant under reparametrization.

[24/N]


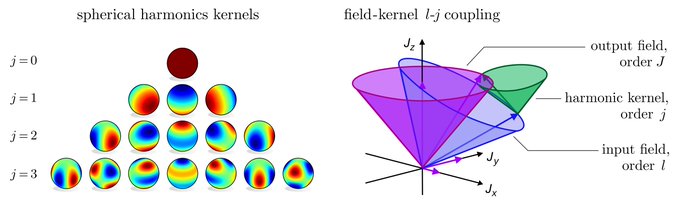

For instance, choosing G=SO(2) and irreps as field types ρ explains @danielewworrall's harmonic networks.

Similarly, irreps of G=O(3) recover the spherical harmonics kernels in @tesssmidt + @mario1geiger's tensor field networks / e3nn.

[18/N]
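A quick numerical check of the SO(2) case, as a pure-Python sketch: with complex 1D irreps ρ_m(φ) = e^{imφ} (the real-valued formulation is equivalent), the steerability constraint K(R_φ x) = ρ_out(φ) K(x) ρ_in(φ)^{-1} is solved by circular harmonics K(r, θ) = R(r) e^{i(m_out - m_in)θ} with an unconstrained radial profile R. This matches the form of the filters in harmonic networks (up to a learned phase offset). The Gaussian-ring profile below is an arbitrary assumption.

```python
import cmath
import math

def harmonic_kernel(x, y, m_in, m_out, radius=1.0, width=0.5):
    """Circular-harmonic kernel R(r) * exp(i (m_out - m_in) theta).
    The Gaussian-ring radial profile R is an arbitrary illustrative choice."""
    r = math.hypot(x, y)
    theta = math.atan2(y, x)
    radial = math.exp(-((r - radius) ** 2) / (2 * width ** 2))
    return radial * cmath.exp(1j * (m_out - m_in) * theta)

def steerability_residual(x, y, phi, m_in, m_out):
    """|K(R_phi x) - rho_out(phi) K(x) rho_in(phi)^{-1}| for the irreps
    rho_m(phi) = exp(i m phi)."""
    c, s = math.cos(phi), math.sin(phi)
    lhs = harmonic_kernel(c * x - s * y, s * x + c * y, m_in, m_out)
    rho_out = cmath.exp(1j * m_out * phi)
    rho_in_inv = cmath.exp(-1j * m_in * phi)
    rhs = rho_out * harmonic_kernel(x, y, m_in, m_out) * rho_in_inv
    return abs(lhs - rhs)

residual = steerability_residual(0.4, -0.9, 1.7, m_in=1, m_out=3)  # zero up to rounding
```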


@whispsofviolet @anshulkundaje @dmitrypenzar

The argument for base-pair-level models is again that summing the features at indices i and N-i is effectively adding noise.

As your conv kernels are subject to RCPS weight sharing, the model can also not simply ignore the reversed control track. Or rather, the only way to do so is to


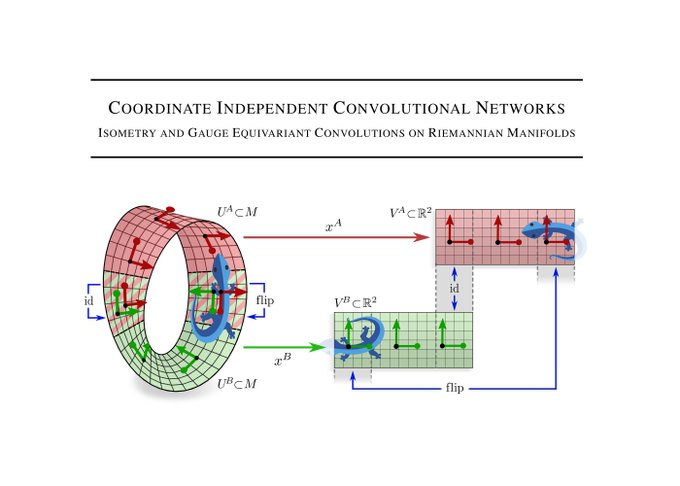

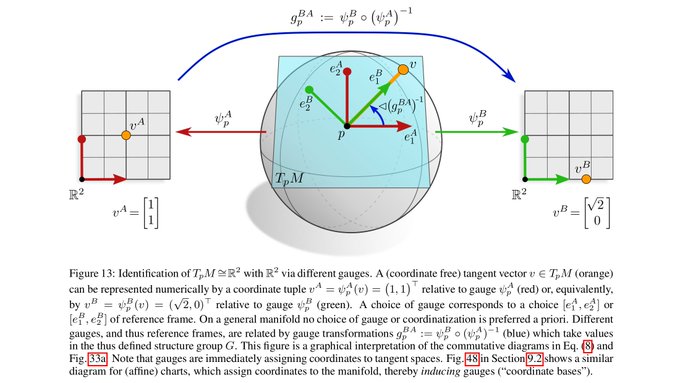

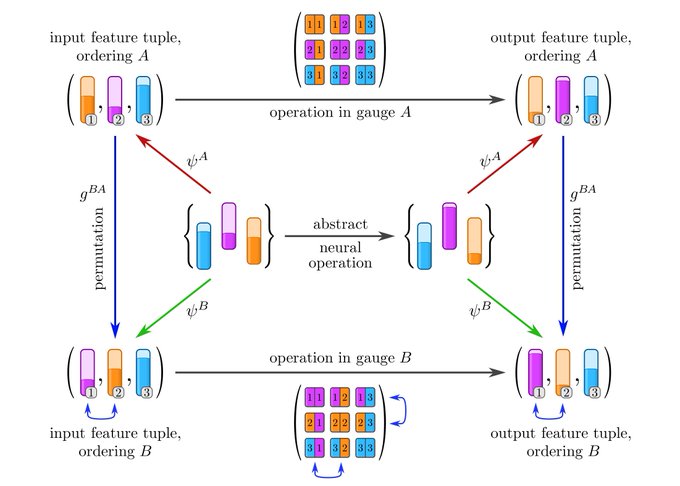

These parts are based on our preprint on Coordinate Independent CNNs. Read this thread if you want to learn more about the differential geometry and gauge equivariance of CNNs.

[10/N]


@whispsofviolet @anshulkundaje @dmitrypenzar

Hi @whispsofviolet, thanks for your detailed response! 🙂

Let me give an example of why summing features i and N-i is problematic even for the scalar / classification case. Since it is more intuitive, let's think about reflection-invariant image classification, which is


@thabangline @PMinervini

Thanks! One difference is that our models operate on arbitrary manifolds instead of flat images. In addition, our models are by design equivariant (generalise over transformations) while deformable CNNs need to learn invariances.


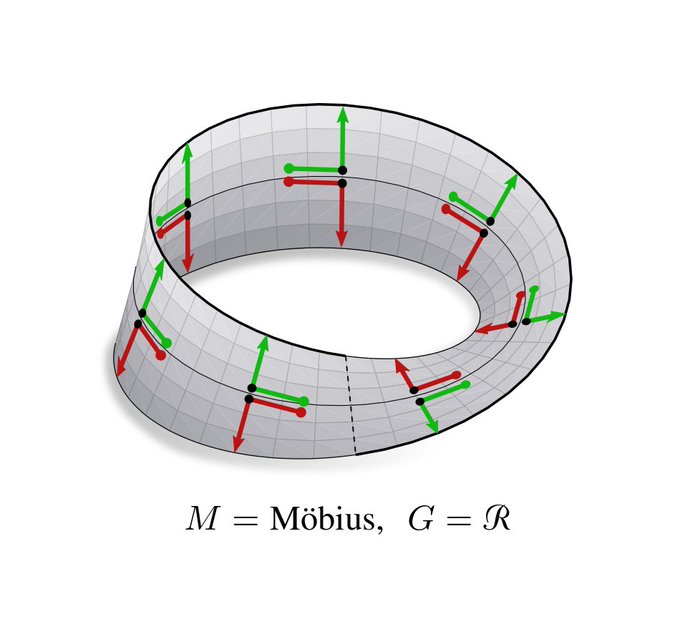

@KonoBeelzebud

Thanks! They are vector graphics, mostly handmade with Inkscape. The Möbius strip and the ico are algorithmically generated.


@wellingmax @KyleCranmer @gfbertone @lipmanya @DaniloJRezende

Thanks for the great supervision @wellingmax, I could not imagine a more inspiring and caring doctoral advisor 😊


@chaitjo @amelie_iska @erikverlinde @wellingmax @mathildepapillo

Nice, I was not aware that this covers transformers as well. I already have it on my stack of papers to read :)

And I'd generally say that having two different viewpoints is more pedagogical than a single one 😉


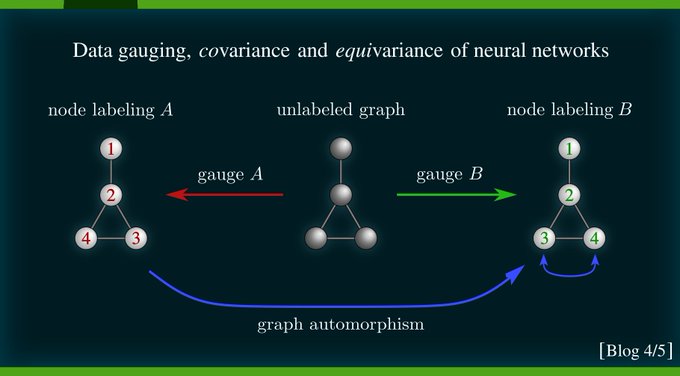

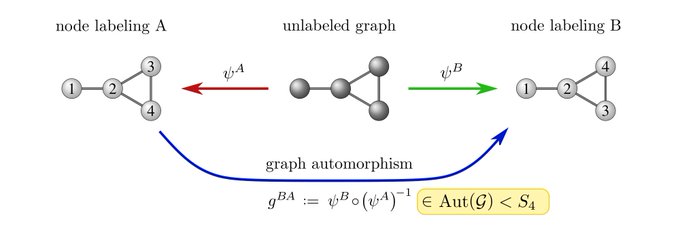

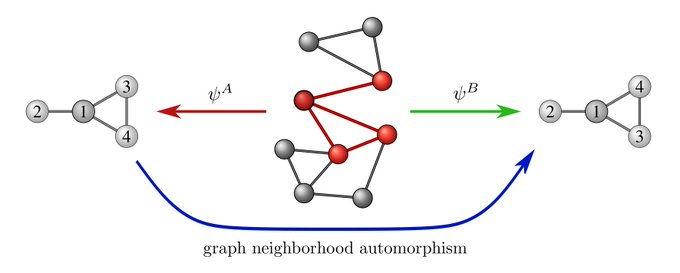

Subgraph labelings are another example of local gauges. These gauge ambiguities are addressed by subgraph equivariant / natural graph nets:

(@pimdehaan, @TacoCohen, @wellingmax)

(@JoshMitton92, @MurraySmithRod)

[22/N]
