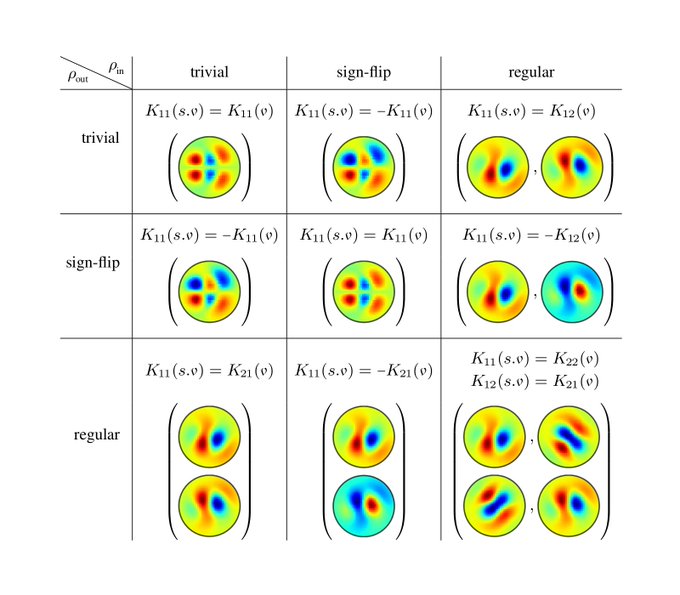

The fig. shows reflection-steerable kernels for different input/output field types of a convolution layer. Each combination results in some reflection symmetry of the kernels.

[11/N]

Happy to announce our work on Coordinate Independent Convolutional Networks.

It develops a theory of CNNs on Riemannian manifolds and clarifies the interplay of the kernels' local gauge equivariance and the networks' global isometry equivariance.

[1/N]

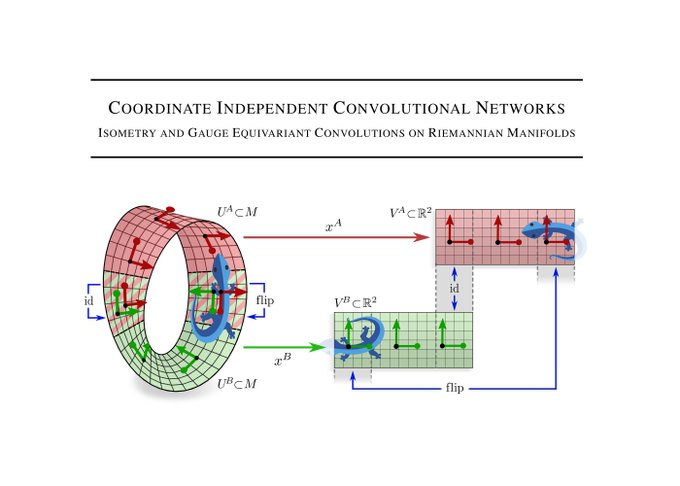

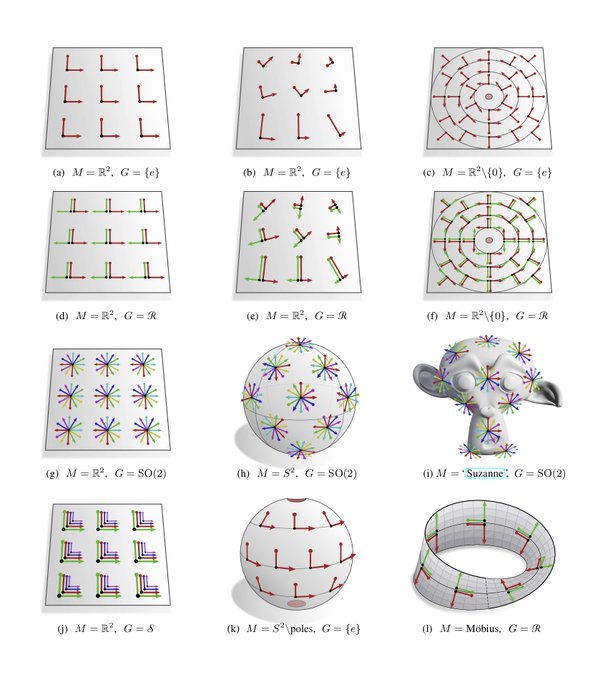

Why *coordinate independent* networks? In contrast to Euclidean spaces R^d, general manifolds do not come with a canonical choice of reference frames. This implies in particular that the alignment of a shared convolution kernel is inherently ambiguous.

[2/N]

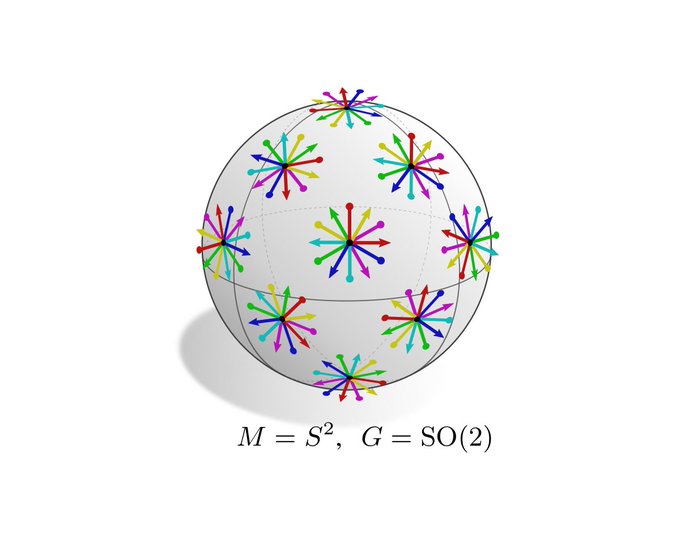

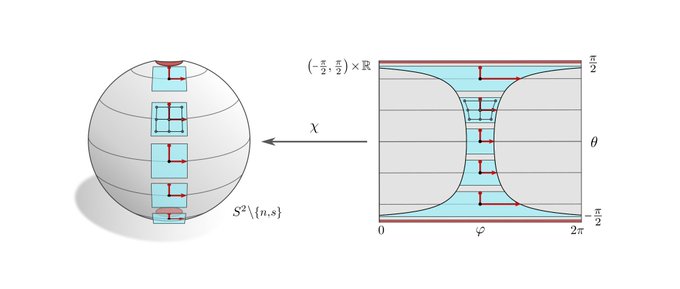

Consider for instance the 2-sphere S^2, where no particular rotation of kernels is mathematically preferred.

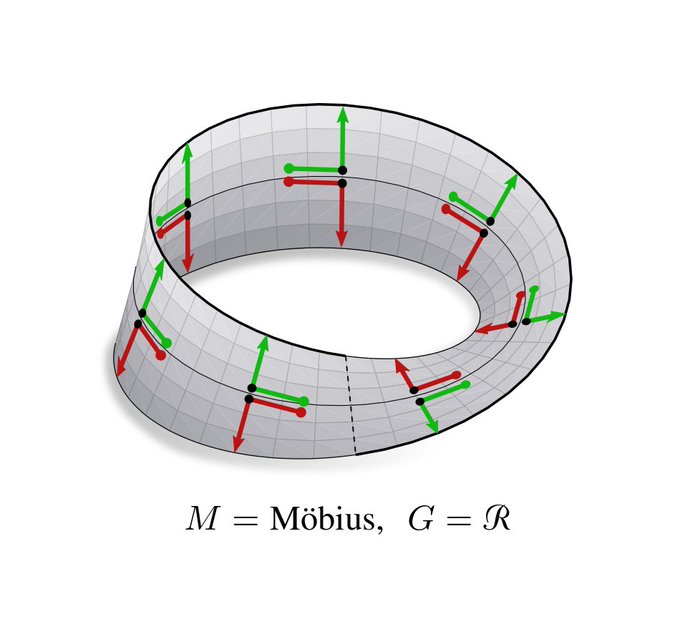

Another example is the Möbius strip. Kernels can be aligned along the strip; however, since the strip is non-orientable, the reflection of kernels remains ambiguous.

[3/N]

The ambiguity of frames (kernel alignments) is formalized by a G-structure. Its structure group G ≤ GL(d) specifies the range of transition functions (gauge trafos) between frames of the same tangent space, and thus the required level of coordinate independence.

[4/N]

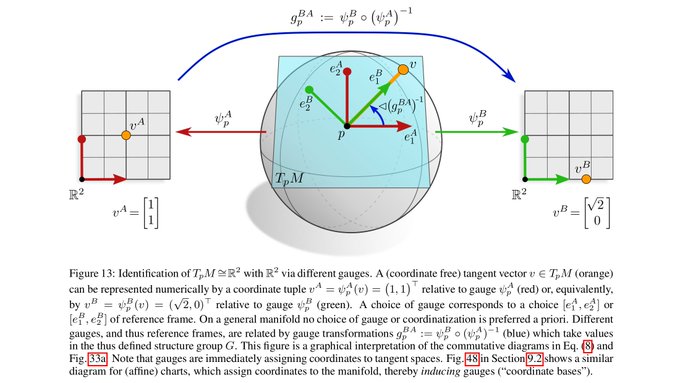

The concept of coordinate independence is best explained using the example of tangent vectors: a vector v in T_pM is a *coordinate free* object. In an implementation, it may be represented by its numerical coefficients v^A or v^B relative to some choice of frame (gauge) A or B.

[5/N]

Both choices encode exactly the same information. Gauge transformations (a change of basis) g^BA in G translate between the two numerical representations of the geometric object.

[6/N]
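
A minimal sketch of [5/N] and [6/N] in plain Python, assuming d = 2 and a reflection as the gauge transformation. The names `frame_A`, `frame_B`, `g_BA` and `assemble` are made up for this illustration, and the frames are written in ambient R^2 coordinates only so that the "same geometric vector" claim can be checked numerically.

```python
# Two frames of the same tangent space, given as lists of basis vectors.
# Writing them in ambient coordinates is an assumption of this sketch.
frame_A = [(1.0, 0.0), (0.0, 1.0)]
frame_B = [(1.0, 0.0), (0.0, -1.0)]   # second axis reflected

# Gauge transformation g^BA relating the two frames (a reflection).
g_BA = [[1.0, 0.0],
        [0.0, -1.0]]

def matvec(m, v):
    """Matrix-vector product for nested lists."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

def assemble(frame, coeffs):
    """Rebuild the geometric vector sum_i coeffs[i] * frame[i]."""
    return [sum(c * e[k] for c, e in zip(coeffs, frame)) for k in range(2)]

v_A = [2.0, 3.0]           # coefficients relative to frame A
v_B = matvec(g_BA, v_A)    # coefficients relative to frame B

print(v_B)                                                # [2.0, -3.0]
print(assemble(frame_A, v_A) == assemble(frame_B, v_B))   # True
```

The coefficients differ, but both pairs (frame, coefficients) assemble to the same vector.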

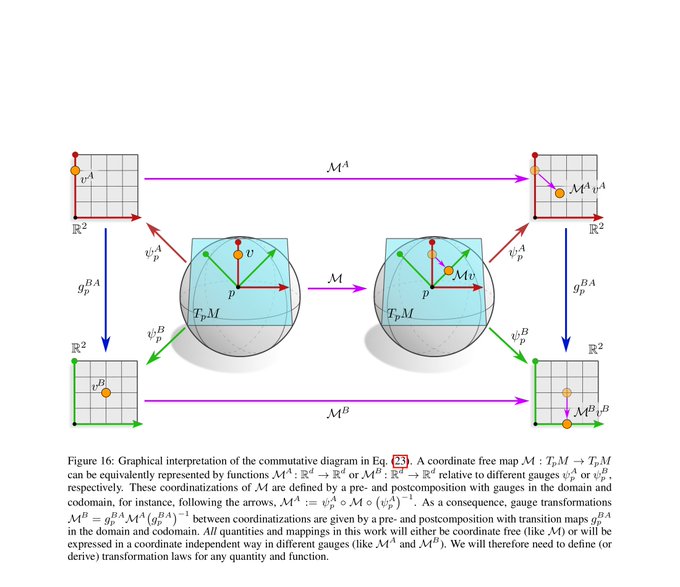

Not only geometric quantities but also functions between them can be represented in different coordinates. The gauge trafo of a function is defined by the requirement that it maps gauge transformed inputs to gauge transformed outputs.

[7/N]
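
To make the requirement in [7/N] concrete, here is a small sketch for a linear map, assuming input and output are both tangent-vector coefficients and the gauge transformation is a reflection `g` (so g^-1 = g). The matrices `M_A`, `M_B` and the helper functions are hypothetical names for this example only.

```python
def matmul(a, b):
    """Matrix-matrix product for nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def matvec(m, v):
    """Matrix-vector product for nested lists."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

g = [[1.0, 0.0],
     [0.0, -1.0]]            # reflection, its own inverse

M_A = [[1.0, 2.0],
       [3.0, 4.0]]           # the function expressed in gauge A

# Gauge transformed function: M_B = g M_A g^-1 (here g^-1 = g).
M_B = matmul(matmul(g, M_A), g)

v_A = [2.0, 3.0]
lhs = matvec(M_B, matvec(g, v_A))   # apply M_B to the transformed input
rhs = matvec(g, matvec(M_A, v_A))   # transform the output of M_A

print(M_B)          # [[1.0, -2.0], [-3.0, 4.0]]
print(lhs == rhs)   # True
```

Transformed inputs are mapped to transformed outputs, which is exactly the defining requirement.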

Feature vector fields generalize this idea: they are fields of coord. free geom. quantities, which may be expressed relative to any frames of the G-structure.

They are characterized by a group repr. rho of G, i.e. their numerical coeffs. transform according to rho(g^BA).

[8/N]
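
A hedged sketch of what "characterized by a group repr. rho" means in practice, assuming G is the reflection group {e, s} and using three common field types (trivial, sign-flip, regular). The dictionary `RHO` and the function `gauge_transform` are illustrative names, not an existing API.

```python
# Representation matrices of the reflection group G = {e, s}.
RHO = {
    "trivial":   {"e": [[1]],            "s": [[1]]},
    "sign_flip": {"e": [[1]],            "s": [[-1]]},
    "regular":   {"e": [[1, 0], [0, 1]], "s": [[0, 1], [1, 0]]},
}

def matvec(m, v):
    """Matrix-vector product for nested lists."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

def matmul(a, b):
    """Matrix-matrix product for nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def gauge_transform(field_type, g, coeffs):
    """Numerical coefficients transform according to rho(g)."""
    return matvec(RHO[field_type][g], coeffs)

print(gauge_transform("trivial", "s", [5]))       # [5]
print(gauge_transform("sign_flip", "s", [5]))     # [-5]
print(gauge_transform("regular", "s", [5, 7]))    # [7, 5]

# Each rho is a homomorphism: s * s = e implies rho(s) rho(s) = rho(e).
is_rep = all(matmul(r["s"], r["s"]) == r["e"] for r in RHO.values())
print(is_rep)                                     # True
```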

Any neural network layer is required to respect these transformation laws of feature fields - they need to be coordinate independent!

We argue that this requirement does not by itself constrain the network connectivity.

[9/N]

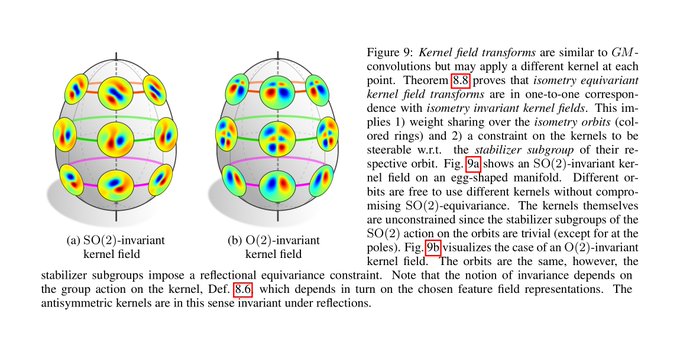

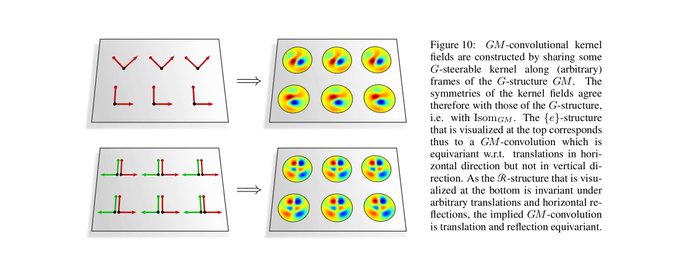

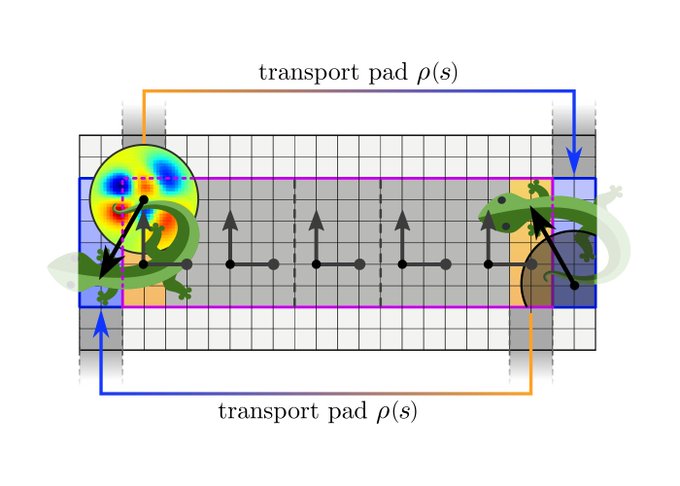

However, we show that the *weight sharing* of a convolution kernel (or bias/nonlinearity) is coord. independent only if this kernel is G-steerable (equivariant under gauge trafos). Coordinate independent convolutions are parameterized by such G-steerable kernels.

[10/N]
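
As a rough illustration of G-steerability for the reflection group, the sketch below projects an arbitrary sampled kernel onto the kernels satisfying K(s·x) = rho_out(s) K(x) rho_in(s)^-1. It assumes one-dimensional field types (trivial = +1, sign-flip = -1) and that the reflection s reverses the row axis of the sample grid; the function names are hypothetical and this is not the paper's implementation.

```python
def reflect(kernel):
    """Reflect a sampled kernel by reversing its row order (assumed action of s)."""
    return kernel[::-1]

def project_to_steerable(kernel, sign_in, sign_out):
    """Average over {e, s}: K_steer(x) = (K(x) + sign_out * sign_in * K(s x)) / 2."""
    factor = sign_out * sign_in
    return [[0.5 * (a + factor * b) for a, b in zip(row, frow)]
            for row, frow in zip(kernel, reflect(kernel))]

def is_steerable(kernel, sign_in, sign_out):
    """Check K(s x) == sign_out * K(x) * sign_in on the sample grid."""
    factor = sign_out * sign_in
    return reflect(kernel) == [[factor * a for a in row] for row in kernel]

raw = [[1.0, 2.0, 3.0],
       [4.0, 5.0, 6.0],
       [7.0, 8.0, 10.0]]

sym = project_to_steerable(raw, +1, +1)    # scalar -> scalar: symmetric kernel
anti = project_to_steerable(raw, +1, -1)   # scalar -> sign-flip: antisymmetric kernel

print(sym)    # [[4.0, 5.0, 6.5], [4.0, 5.0, 6.0], [4.0, 5.0, 6.5]]
print(anti)   # [[-3.0, -3.0, -3.5], [0.0, 0.0, 0.0], [3.0, 3.0, 3.5]]
print(is_steerable(raw, +1, +1), is_steerable(sym, +1, +1), is_steerable(anti, +1, -1))
```

Different combinations of input and output field types thus lead to different reflection symmetries of the kernel, while an unconstrained kernel like `raw` satisfies none of them.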

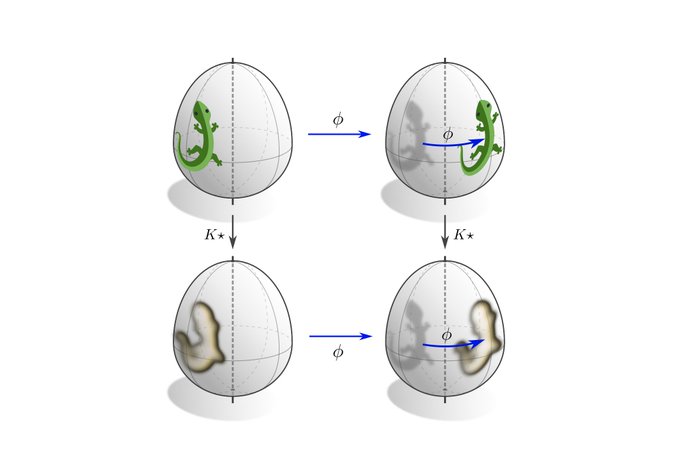

Besides being equivariant under local gauge transformations, coordinate independent CNNs are equivariant under the global action of isometries. This means that an isometry-transformed input feature field results in an accordingly transformed output feature field.

[12/N]
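
A toy stand-in for this statement (not the paper's Möbius model): a scalar field on a periodic 1D grid, i.e. a discretized circle, whose isometries are shifts and reflections. The sketch assumes an odd-length kernel and uses made-up helper names. A reflection-symmetric kernel commutes with both isometries, while an asymmetric kernel only commutes with shifts.

```python
def circular_conv(signal, kernel):
    """Cross-correlate a periodic scalar signal with an odd-length kernel."""
    n, r = len(signal), len(kernel) // 2
    return [sum(kernel[j + r] * signal[(i + j) % n] for j in range(-r, r + 1))
            for i in range(n)]

def reflect(signal):
    """Isometry of the discretized circle: i -> -i (mod n)."""
    n = len(signal)
    return [signal[(-i) % n] for i in range(n)]

def shift(signal, t):
    """Isometry of the discretized circle: i -> i + t (mod n)."""
    n = len(signal)
    return [signal[(i - t) % n] for i in range(n)]

f = [1, 4, 2, 8, 5, 7]
symmetric = [1, 2, 1]     # invariant under reflection
asymmetric = [1, 2, 3]    # not invariant under reflection

def commutes(transform, kernel):
    """Does transforming the input equal transforming the output?"""
    return circular_conv(transform(f), kernel) == transform(circular_conv(f, kernel))

print(commutes(reflect, symmetric))                    # True
print(commutes(reflect, asymmetric))                   # False
print(commutes(lambda s: shift(s, 2), asymmetric))     # True
```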

We find that isometry equivariance is in one-to-one correspondence with the invariance of the kernel field (neural connectivity) under the considered isometry (sub)group. Note that this only requires weight sharing over the isometry orbits, not over the whole manifold.

[13/N]

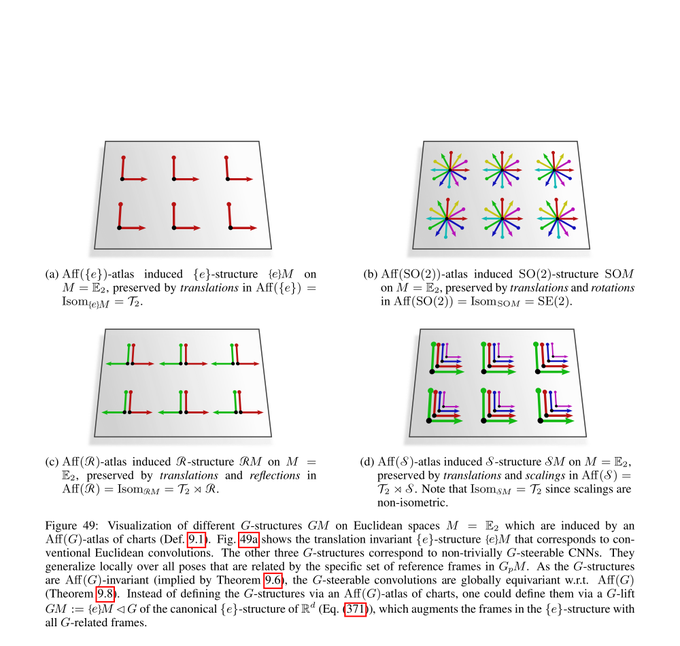

Coordinate independent CNNs apply G-steerable kernels relative to (arbitrary) frames of the G-structure, such that both have the same symmetries (invariances). It follows that our convolutions are equivariant w.r.t. the symmetries of the G-structure! (cf. figs in [3/N])

[14/N]

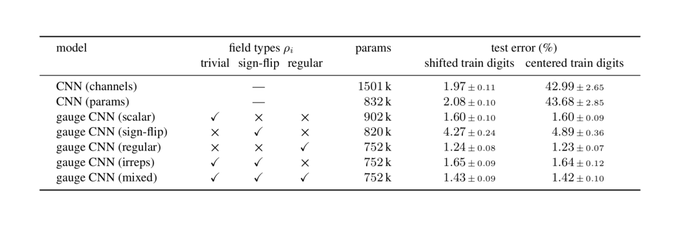

We implement and evaluate our theory with a toy model on the Möbius strip, which relies on reflection-steerable kernels. The code is publicly available at .

[15/N]

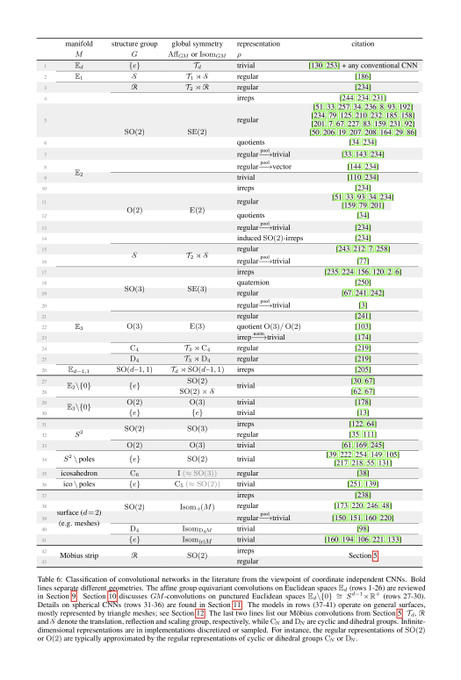

To demonstrate the generality of our differential geometric formulation of convolutional networks, we provide an extensive literature review of >70 pages. This review shows that existing models can be characterized in terms of manifolds, G-structures, group reprs. etc.

[16/N]

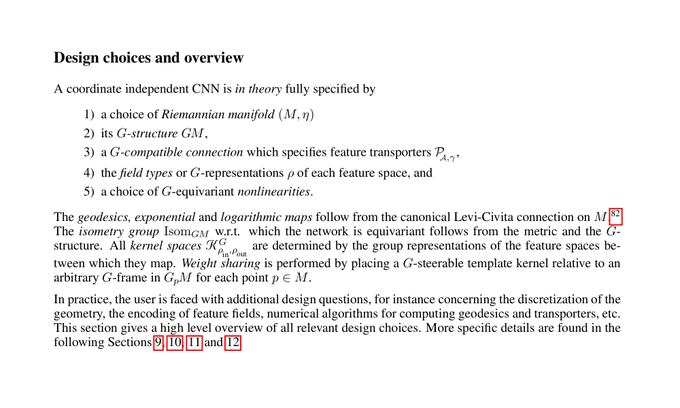

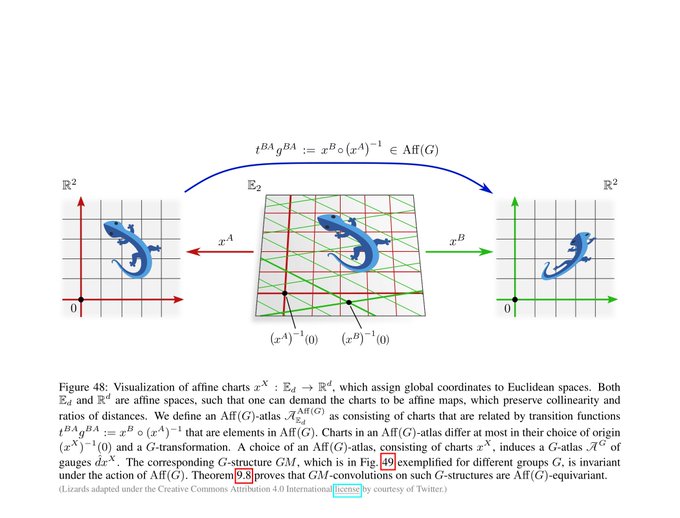

We first cover Euclidean coordinate independent CNNs. This is a special case where we can prove the models' equivariance under affine groups Aff(G) instead of mere isometry equivariance.

[17/N]

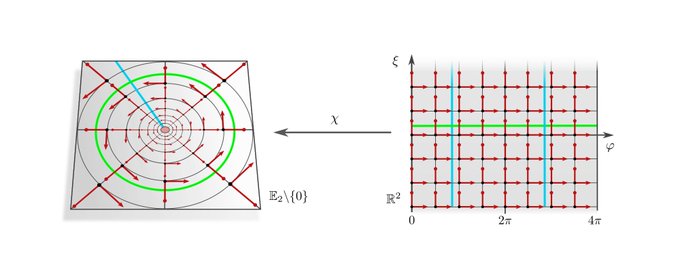

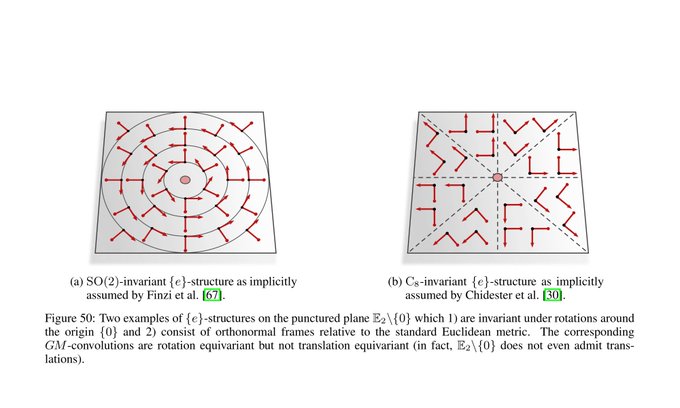

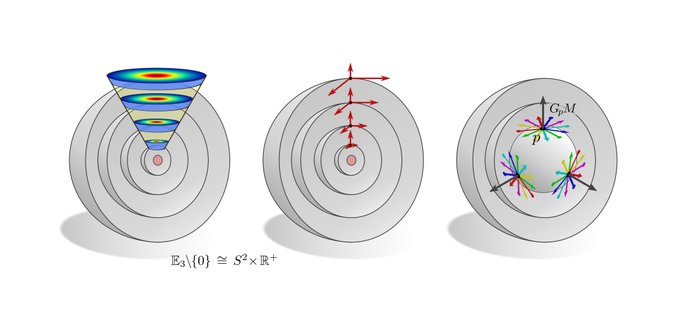

The more exotic convolutions which rely on the visualized hyperspherical G-structures are rotation equivariant around the origin but not translation equivariant. This covers e.g. the polar transformer networks of @_machc and @CSProfKGD.

[18/N]

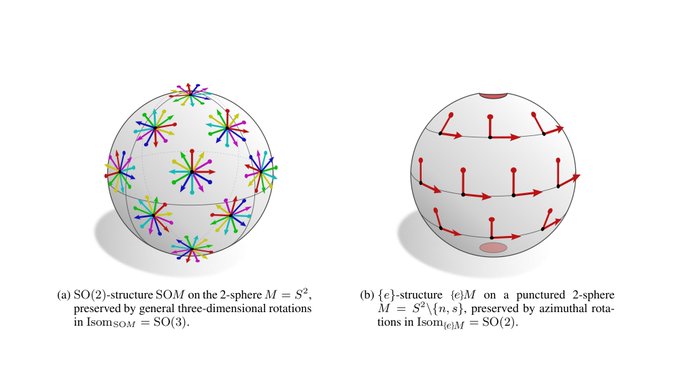

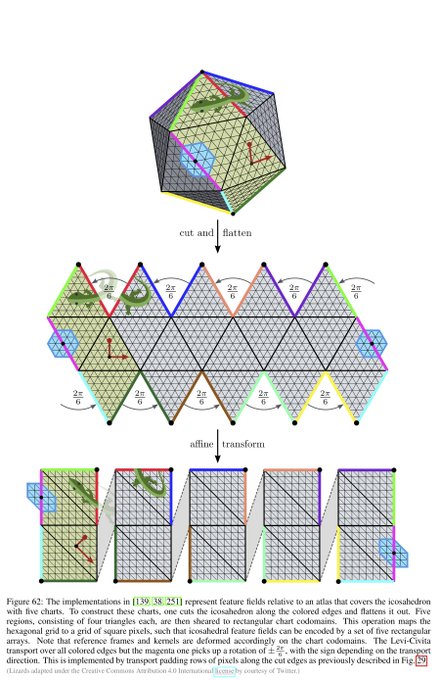

Spherical CNNs are usually either fully SO(3) equivariant or only equivariant w.r.t. SO(2) rotations around a fixed axis. They correspond in our theory to the visualized SO(2)-structure and {e}-structure, respectively. Icosahedral approximations are an alternative.

[19/N]

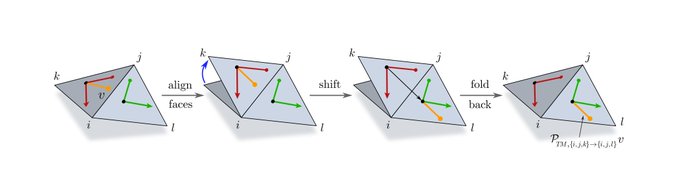

Finally, we cover CNNs on general surfaces, which are most commonly represented by triangle meshes. Once again, we can distinguish between SO(2)-steerable models and {e}-steerable models.

[20/N]

Note that our paper formalizes the "geodesics & gauges" part of the "Erlangen programme of ML" which was recently proposed by @mmbronstein, @joanbruna, @TacoCohen and @PetarV_93:

[21/N]

4K version of my ICLR keynote on #geometricdeeplearning is now on YouTube:

Accompanying paper:

Blog post:

I am happy that this 2-year project is finally finished. Many thanks to my co-authors Patrick Forré, @erikverlinde and @wellingmax, without whom these 271 pages of neural differential geometry madness would not exist!

[22/N]
