Rather, all the empirical evidence suggests that equivariance unlocks significant gains.

That holds at least when you use the right type of architecture. With the wrong choices, the networks end up over-constrained. Regular GCNNs essentially always work better, even though they are computationally expensive.

Replies

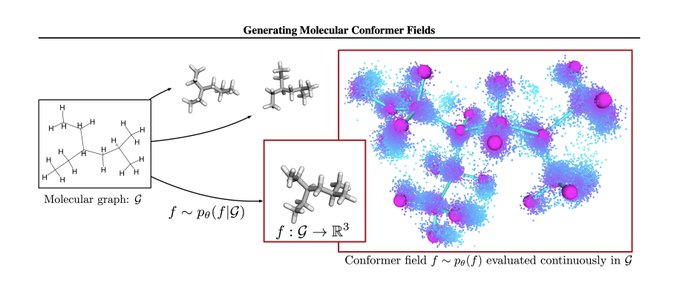

I might be missing something, but I don't see direct empirical evidence for this claim. Their approach works well, but it is orthogonal to equivariance. Combining molecular conformer fields with equivariance would likely improve performance further.