Max Zhdanov

@maxxxzdn

Followers: 2K · Following: 3K · Media: 104 · Statuses: 532

busy scaling on two GPUs at @amlabuva with @wellingmax and @jwvdm

Amsterdam, Netherlands

Joined March 2022

🤹 New blog post! I write about our recent work on using hierarchical trees to enable sparse attention over irregular data (point clouds, meshes) - Erwin Transformer. blog: https://t.co/dClrZ4tOoz paper: https://t.co/EKUH9gJ7o3 Compressed version in the thread below:
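
A minimal sketch of the general idea of tree-based sparse attention. It assumes a ball-tree-style partition built by recursive median splits, which is our choice and not the Erwin implementation. Points are reordered so that consecutive blocks form spatially compact "balls", and attention is computed only inside each ball. `ball_tree_order` and `ball_attention` are hypothetical names. The point count and ball size are assumed to be powers of two, and learned Q/K/V projections are omitted.

```python
import numpy as np
from typing import Optional


def ball_tree_order(points: np.ndarray, idx: Optional[np.ndarray] = None) -> np.ndarray:
    """Order point indices so that aligned blocks of 2^k consecutive entries are tree nodes ("balls").

    Recursively splits each node at the median of its widest coordinate axis.
    Assumes the number of points is a power of two, so every split is even.
    """
    if idx is None:
        idx = np.arange(len(points))
    if len(idx) <= 1:
        return idx
    sub = points[idx]
    axis = int(np.argmax(sub.max(axis=0) - sub.min(axis=0)))  # widest axis of this node
    order = idx[np.argsort(sub[:, axis], kind="stable")]
    half = len(order) // 2
    left = ball_tree_order(points, order[:half])
    right = ball_tree_order(points, order[half:])
    return np.concatenate([left, right])


def ball_attention(x: np.ndarray, points: np.ndarray, ball_size: int) -> np.ndarray:
    """Single-head self-attention restricted to balls of `ball_size` points.

    Hypothetical simplification: features act directly as queries, keys and values.
    Cost is O(n * ball_size * d) instead of O(n^2 * d) for full attention.
    """
    n, d = x.shape
    assert n % ball_size == 0, "n must be a multiple of ball_size"
    perm = ball_tree_order(points)
    xb = x[perm].reshape(n // ball_size, ball_size, d)   # (num_balls, ball_size, d)
    scores = xb @ xb.transpose(0, 2, 1) / np.sqrt(d)      # attention only inside each ball
    scores -= scores.max(axis=-1, keepdims=True)          # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    out_sorted = (weights @ xb).reshape(n, d)
    out = np.empty_like(out_sorted)
    out[perm] = out_sorted                                # undo the tree permutation
    return out


rng = np.random.default_rng(0)
points = rng.normal(size=(16, 3))
feats = rng.normal(size=(16, 8))
print(ball_attention(feats, points, ball_size=4).shape)  # (16, 8)
```

Each point only sees the other points in its ball, so for a fixed ball size the cost grows linearly with the number of points. Passing information between balls (for example through coarser tree levels) is left out of this sketch.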


We’re heading to #NeurIPS2025 in San Diego with 9 accepted papers🌴 Check out the full list below and come say hi at the posters! 🧵 0 / 9


A lot has been said about the deteriorating effect of modern architecture on the human psyche, and we should start having the same discussion about AI-generated images.


Weather affects everything and everyone. Our latest AI model developed with @GoogleResearch is helping us better predict it. ⛅ WeatherNext 2 is our most advanced system yet, able to generate more accurate and higher-resolution global forecasts. Here’s what it can do - and why


The variance across ICLR scores is wild, OpenAI should decrease the temperature


Olga is cooking MOFs: check it out for an elegant model for material generation.

Cool news: our extended Riemannian Gaussian VFM paper is out! 🔮 We define and study a variational objective for probability flows 🌀 on manifolds with closed-form geodesics. @FEijkelboom @a_ppln @CongLiu202212 @wellingmax @jwvdm @erikjbekkers 🔥 📜 https://t.co/PE6I6YcoTn
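
The paper's variational objective is not reproduced here. This sketch only illustrates what "closed-form geodesics" means on one such manifold, the unit sphere, which is our choice of example. The helper names `sphere_log`, `sphere_exp` and `geodesic` are hypothetical.

```python
import numpy as np


def sphere_log(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Log map on the unit sphere: tangent vector at x pointing to y, with length = geodesic distance."""
    cos_theta = np.clip(np.dot(x, y), -1.0, 1.0)
    theta = np.arccos(cos_theta)
    v = y - cos_theta * x                # component of y orthogonal to x
    norm_v = np.linalg.norm(v)
    if norm_v < 1e-12:                   # y == x, or antipodal (no unique geodesic): returns zero
        return np.zeros_like(x)
    return theta * v / norm_v


def sphere_exp(x: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Exp map on the unit sphere: follow the great circle from x in direction v for length |v|."""
    t = np.linalg.norm(v)
    if t < 1e-12:
        return x.copy()
    return np.cos(t) * x + np.sin(t) * v / t


def geodesic(x0: np.ndarray, x1: np.ndarray, t: float) -> np.ndarray:
    """Closed-form geodesic interpolation x_t = exp_{x0}(t * log_{x0}(x1))."""
    return sphere_exp(x0, t * sphere_log(x0, x1))


rng = np.random.default_rng(0)
a = rng.normal(size=3)
a /= np.linalg.norm(a)
b = rng.normal(size=3)
b /= np.linalg.norm(b)
midpoint = geodesic(a, b, 0.5)  # stays on the sphere, halfway along the great circle
```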


[Book][Open Access]🧵 Lectures on Generalized Global Symmetries Principles and Applications Nabil Iqbal https://t.co/CXU2CfX3lO


✨CAMERA READY UPDATE✨ with new cool plots in which we show how we can use our Equivariant Neural Eikonal Solver for path planning in Riemannian manifolds Check our paper here https://t.co/3QnB40p3tE And see you at NeurIPS 🥰

🌍 From earthquake prediction to robot navigation - what connects them? Eikonal equations! We developed E-NES: a neural network that leverages geometric symmetries to solve entire families of velocity fields through group transformations. Grid-free and scalable! 🧵👇
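
A toy illustration, not E-NES itself. It assumes a homogeneous medium with constant speed `v`, where the eikonal equation |∇T| = 1/v has the closed-form solution T(x) = |x - s| / v. The sketch checks that equation with finite differences and shows the kind of symmetry the thread refers to: after a rigid motion of the source, the new solution is obtained by transforming the query points, with no new solve. All function names are hypothetical.

```python
import numpy as np


def travel_time(x: np.ndarray, source: np.ndarray, v: float) -> np.ndarray:
    """Closed-form eikonal solution in a homogeneous medium: T(x) = |x - s| / v."""
    return np.linalg.norm(x - source, axis=-1) / v


def eikonal_residual(x: np.ndarray, source: np.ndarray, v: float, h: float = 1e-5) -> np.ndarray:
    """Central-difference estimate of |grad T(x)| * v - 1 (zero where the eikonal equation holds)."""
    dim = x.shape[-1]
    grad = np.empty(x.shape)
    for i in range(dim):
        step = np.zeros(dim)
        step[i] = h
        grad[..., i] = (travel_time(x + step, source, v) - travel_time(x - step, source, v)) / (2 * h)
    return np.linalg.norm(grad, axis=-1) * v - 1.0


def rotation_2d(angle: float) -> np.ndarray:
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s], [s, c]])


rng = np.random.default_rng(0)
pts = rng.uniform(-1.0, 1.0, size=(100, 2))
src = np.array([2.0, 0.5])                 # outside the sampled square, so T is smooth there
R, t = rotation_2d(0.7), np.array([0.3, -1.2])
# Rigid motion g(x) = R x + t: the solution for source g(s), evaluated at g(x), equals T_s(x).
same = np.allclose(travel_time(pts @ R.T + t, R @ src + t, 1.5), travel_time(pts, src, 1.5))
print(same)  # True
```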


If you would like to use flash-clifford for your application, or are generally curious about Clifford algebra neural networks, please do not hesitate to reach out :) For more details, see:

github.com

⚡ Triton implementation of Clifford algebra neural networks. - maxxxzdn/flash-clifford


The computational advantage of flash-clifford holds at scale, which suggests that Clifford algebra NNs can be useful in data-intensive applications.


Additionally, we employ a (MV_DIM, BATCH_SIZE, NUM_FEATURES) memory layout, which allows expressing the multivector linear layer as a batch matmul, further boosting the performance.
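
A minimal NumPy sketch of why a component-major layout turns the layer into one batched matmul. It assumes Cl(3,0), a component ordering of 1, e1, e2, e3, e12, e13, e23, e123, and one channel-mixing weight matrix per grade. That weight-sharing scheme is a common choice for equivariant multivector layers and may not match flash-clifford's exact parameterization. `mv_linear` and `mv_linear_loop` are hypothetical names.

```python
import numpy as np

# Grade of each of the 8 blade components (assumed ordering: 1, e1, e2, e3, e12, e13, e23, e123).
GRADE = np.array([0, 1, 1, 1, 2, 2, 2, 3])
MV_DIM = len(GRADE)


def mv_linear(x: np.ndarray, w_per_grade: np.ndarray) -> np.ndarray:
    """Grade-wise multivector linear layer as a single batched matmul.

    x:           (MV_DIM, BATCH_SIZE, F_IN)   component-major layout
    w_per_grade: (4, F_IN, F_OUT)             one weight matrix per grade (assumption)
    returns:     (MV_DIM, BATCH_SIZE, F_OUT)
    """
    w = w_per_grade[GRADE]      # (MV_DIM, F_IN, F_OUT): components of one grade share weights
    return np.matmul(x, w)      # batched over the leading MV_DIM axis


def mv_linear_loop(x: np.ndarray, w_per_grade: np.ndarray) -> np.ndarray:
    """Reference implementation: one small matmul per blade component."""
    return np.stack([x[i] @ w_per_grade[GRADE[i]] for i in range(MV_DIM)])


rng = np.random.default_rng(0)
x = rng.normal(size=(MV_DIM, 32, 16))
w = rng.normal(size=(4, 16, 24))
print(mv_linear(x, w).shape)  # (8, 32, 24)
```

Sharing one matrix across all components of a grade means the layer only mixes feature channels and never mixes components within a grade. Under this assumption, that is what keeps it compatible with rotations acting on each grade.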


Since the geometric product is usually wrapped in other operations, such as activations and normalization, we can fuse those into a single kernel, achieving a significant speedup.
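
A sketch of the fusion dataflow only. NumPy will not show the speedup, which comes from a GPU kernel keeping intermediates in registers or shared memory instead of writing them back to global memory. The elementwise product is a stand-in for the bilinear geometric product, and SiLU plus RMS-style normalization are assumed examples, not necessarily the operations flash-clifford fuses.

```python
import numpy as np


def silu(z: np.ndarray) -> np.ndarray:
    return z / (1.0 + np.exp(-z))


def unfused(a: np.ndarray, b: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Three separate passes; each materializes a full-size intermediate array."""
    p = a * b                                                        # stand-in for a bilinear product
    h = silu(p)                                                      # activation
    return h / np.sqrt((h * h).mean(axis=-1, keepdims=True) + eps)   # RMS-style normalization


def fused(a: np.ndarray, b: np.ndarray, tile: int = 64, eps: float = 1e-6) -> np.ndarray:
    """Same math in one pass over row tiles: intermediates exist only for the current tile,
    mimicking how a fused kernel processes one block of rows end to end."""
    out = np.empty_like(a)
    for start in range(0, a.shape[0], tile):
        rows = slice(start, start + tile)
        h = silu(a[rows] * b[rows])
        out[rows] = h / np.sqrt((h * h).mean(axis=-1, keepdims=True) + eps)
    return out
```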


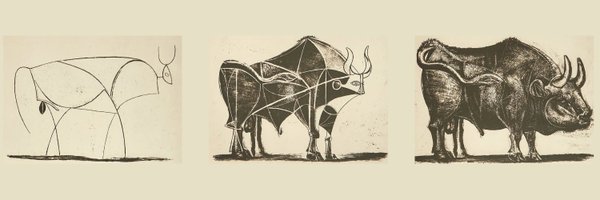

The geometric product is a bilinear operation that takes two multivectors and returns a multivector, essentially mixing information between grades equivariantly.
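
A dense, unoptimized reference for Cl(3,0) using the standard bitmask-and-sign-counting construction. It is not flash-clifford's Triton kernel. It checks that the product is bilinear, and that it commutes with a rotation applied through a rotor sandwich R x R̃, which is our choice of equivariance test. All names are hypothetical.

```python
import numpy as np

# Cl(3,0), Euclidean metric (e_i * e_i = +1). Blades are indexed by bitmask:
# bit k set <=> e_{k+1} is a factor. Index order: 1, e1, e2, e12, e3, e13, e23, e123.
N = 3
DIM = 1 << N
GRADE = np.array([bin(i).count("1") for i in range(DIM)])


def reorder_sign(a: int, b: int) -> int:
    """Sign picked up when the factors of blade a * blade b are sorted into canonical order."""
    a >>= 1
    swaps = 0
    while a:
        swaps += bin(a & b).count("1")
        a >>= 1
    return -1 if swaps % 2 else 1


def geometric_product(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Bilinear product of two multivectors (length-8 arrays); blade i * blade j lands on blade i XOR j."""
    out = np.zeros(DIM)
    for i in range(DIM):
        for j in range(DIM):
            out[i ^ j] += reorder_sign(i, j) * x[i] * y[j]
    return out


def reverse(x: np.ndarray) -> np.ndarray:
    """Reversion: multiplies grade k by (-1)^(k(k-1)/2), i.e. flips grades 2 and 3."""
    return x * np.where(GRADE % 4 >= 2, -1.0, 1.0)


def rotor_e12(angle: float) -> np.ndarray:
    """Rotor R = cos(angle/2) - sin(angle/2) e12, rotating e1 towards e2 by `angle`."""
    r = np.zeros(DIM)
    r[0] = np.cos(angle / 2)
    r[0b011] = -np.sin(angle / 2)
    return r


def rotate(r: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Sandwich product R x R~, which acts on every grade of x at once."""
    return geometric_product(geometric_product(r, x), reverse(r))


e1, e2 = np.eye(DIM)[0b001], np.eye(DIM)[0b010]
rng = np.random.default_rng(0)
a, b = rng.normal(size=DIM), rng.normal(size=DIM)
R = rotor_e12(0.9)
lhs = rotate(R, geometric_product(a, b))                    # rotate the product
rhs = geometric_product(rotate(R, a), rotate(R, b))         # product of rotated inputs
print(np.allclose(lhs, rhs))  # True
```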


Elements of Clifford algebra are multivectors - stacks of basis components (scalar, vector, etc.). Those components correspond 1:1 to irreducible representations of O(n); for n = 3:
- grade 0 (scalar) <-> 0e
- grade 1 (vector) <-> 1o
- grade 2 (bivector) <-> 1e
- grade 3 (trivector) <-> 0o
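
A small sketch that reproduces the grade-to-irrep table for n = 3 only. It assumes two rules we state ourselves. First, the degree is l = min(k, 3 - k), because Hodge duality pairs bivectors with vectors and the trivector with the scalar. Second, the parity is (-1)^k, because inversion x → -x flips the sign of each of a blade's k vector factors. `blade_grades` and `irrep_label` are hypothetical helpers.

```python
from typing import List

N = 3  # Cl(3,0); these e3nn-style labels are specific to O(3)


def blade_grades(n: int) -> List[int]:
    """Grade of every basis blade, indexed by bitmask (bit k set <=> e_{k+1} present)."""
    return [bin(i).count("1") for i in range(1 << n)]


def irrep_label(grade: int) -> str:
    """O(3) irrep of a grade: degree l = min(k, 3 - k), parity 'e' if (-1)^k = +1 else 'o'."""
    l = min(grade, 3 - grade)
    parity = "e" if grade % 2 == 0 else "o"
    return f"{l}{parity}"


grades = blade_grades(N)
for k in range(N + 1):
    # The number of blades of grade k, C(3, k), equals the irrep dimension 2l + 1.
    print(k, grades.count(k), irrep_label(k))
```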


Clifford algebra is tightly connected to the Euclidean group E(n) and can be used to parameterize E(n)-equivariant NNs:
1) GNNs (@djjruhe): https://t.co/5OGxqC2JwO
2) CNNs: https://t.co/KCI7XZY8z0
3) Transformers (@johannbrehmer, @pimdehaan):

arxiv.org

Problems involving geometric data arise in physics, chemistry, robotics, computer vision, and many other fields. Such data can take numerous forms, for instance points, direction vectors,...


Clifford Algebra Neural Networks are undeservedly dismissed for being too slow, but they don't have to be! 🚀Introducing **flash-clifford**: a hardware-efficient implementation of Clifford Algebra NNs in Triton, featuring the fastest equivariant primitives that scale.


Choudhury and Kim et al., "Accelerating Vision Transformers With Adaptive Patch Sizes" Transformer patches don't need to be of uniform size -- choose sizes based on entropy --> faster training/inference. Are scale-spaces gonna make a comeback?
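
This is not the method of Choudhury and Kim et al. It is a hypothetical quadtree heuristic showing how "choose sizes based on entropy" could work. A square patch is kept whole when the entropy of its intensity histogram is low, and is otherwise split into four. The names, the histogram entropy, and the threshold are all our assumptions. Image sides are assumed to be multiples of `max_size`, and patch sizes are assumed to be powers of two.

```python
import numpy as np
from typing import List, Tuple


def patch_entropy(patch: np.ndarray, bins: int = 16) -> float:
    """Shannon entropy (bits) of the intensity histogram of a patch with values in [0, 1]."""
    hist, _ = np.histogram(patch, bins=bins, range=(0.0, 1.0))
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def adaptive_patches(img: np.ndarray, max_size: int, min_size: int,
                     threshold: float) -> List[Tuple[int, int, int]]:
    """Quadtree split: keep a patch if its entropy is below `threshold`, otherwise split it
    into four until `min_size` is reached. Returns (row, col, size) for every kept patch."""
    patches: List[Tuple[int, int, int]] = []

    def visit(r: int, c: int, size: int) -> None:
        patch = img[r:r + size, c:c + size]
        if size <= min_size or patch_entropy(patch) < threshold:
            patches.append((r, c, size))
            return
        half = size // 2
        for dr in (0, half):
            for dc in (0, half):
                visit(r + dr, c + dc, half)

    h, w = img.shape
    for r in range(0, h, max_size):
        for c in range(0, w, max_size):
            visit(r, c, max_size)
    return patches


rng = np.random.default_rng(0)
img = np.zeros((64, 64))
img[:, 32:] = rng.uniform(size=(64, 32))   # left half flat, right half noise
patches = adaptive_patches(img, max_size=32, min_size=8, threshold=2.0)
# Flat half -> two 32x32 patches (fewer tokens); noisy half -> 8x8 patches.
```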


I am looking for an internship, EU-based or remote (I have a work permit for the EU). I am interested in efficient computation architectures and deep learning for physical simulations. I know and have contributed a bit to Bayesian deep learning, tensor networks, MCMC methods, SSMs & transformers,


As promised after our great discussion, @chaitanyakjoshi! Your inspiring post led to our formal rejoinder: the Platonic Transformer. What if the "Equivariance vs. Scale" debate is a false premise? Our paper shows you can have both. 📄 Preprint: https://t.co/kd8MFiOmuG 1/9

After a long hiatus, I've started blogging again! My first post was a difficult one to write, because I don't want to keep repeating what's already in papers. I tried to give some nuanced and (hopefully) fresh takes on equivariance and geometry in molecular modelling.


I’m turning 41, but I don’t feel like celebrating. Our generation is running out of time to save the free Internet built for us by our fathers. What was once the promise of the free exchange of information is being turned into the ultimate tool of control. Once-free countries
