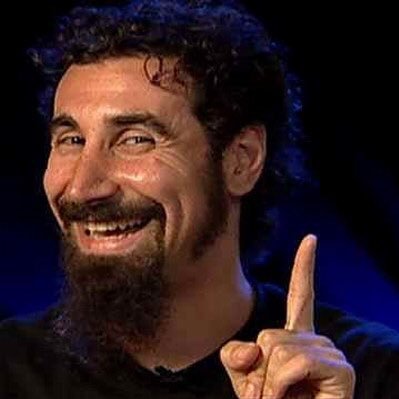

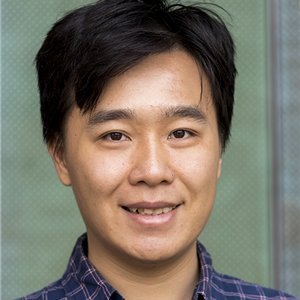

Taco Cohen

@TacoCohen

Followers

28K

Following

6K

Media

48

Statuses

1K

Post-trainologer at FAIR. Into codegen, RL, equivariance, generative models. Spent time at Qualcomm, Scyfer (acquired), UvA, DeepMind, OpenAI.

Joined March 2013

New essay on AI doom. The central arguments lack empirical grounding, relying on unfalsifiable theories and analogies rather than evidence from actual systems. We think iterative safety improvement, the process that worked for other technologies, should work for AI too.

Our critics say our work will destroy the world, and many now point to "If Anyone Builds It, Everyone Dies" as the canonical case for AI doom. Yet we find the book's arguments extremely weak. The book might make for interesting fiction, but it never presents any evidence.

5

8

59

Exactly. I learned a ton of math during my PhD, and it was fun and easy *because I had a goal* to use it in my research. Coding it up is also a great way to detect gaps in your understanding. Totally different from learning in class. Another common fallacy is that you need to

This is empirically incorrect. Hundreds of thousands of https://t.co/GEOZunWoXj students have learned the required math for ML as they go. By *far* the biggest problem we've seen is from people who try to learn the math first. They learn the wrong stuff & have no context.

22

67

1K

Worth reflecting on the fact that over-precaution about nuclear power and GMOs increased catastrophic risk to humanity.

6

35

123

Cannot recommend more

🚨 Attention aspiring PhD students 🚨 Meta / FAIR is looking for candidates for a joint academic/industry PhD! Keywords: AI for Math & Code. LLMs, RL, formal and informal reasoning. You will be co-advised by prof. @Amaury_Hayat from ecole des ponts and yours truly. You'll have

0

1

10

Andrej has such a well-regularized and calibrated world model, and his speech decoder is not excessively RL-trained, allowing for high mutual information with the WM. Very cool

The @karpathy interview 0:00:00 – AGI is still a decade away 0:30:33 – LLM cognitive deficits 0:40:53 – RL is terrible 0:50:26 – How do humans learn? 1:07:13 – AGI will blend into 2% GDP growth 1:18:24 – ASI 1:33:38 – Evolution of intelligence & culture 1:43:43 - Why self

6

5

192

Sneak peek from a paper about scaling RL compute for LLMs: probably the most compute-expensive paper I've worked on, but hoping that others can run experiments cheaply for the science of scaling RL. Coincidentally, this is similar motivation to what we had for the NeurIPS best

11

37

418

"Equivariance matters even more at larger scales" ~ https://t.co/hSdOxIP3UD All the more reason we need scalable architectures with symmetry awareness. I know this is an obvious ask but I'm still confident that scaling and inductive bias need not be at odds. This paper

1

23

182

Replicate IMO-Gold in less than 500 lines: https://t.co/XHQXDaJ452 The prover-verifier workflow from Huang & Yang: Winning Gold at IMO 2025 with a Model-Agnostic Verification-and-Refinement Pipeline ( https://t.co/MD4ZNZeRPF), original code at https://t.co/MJhU5BLEDJ

4

21

158

For the aspiring PhD students among you :) I cannot emphasise enough what exceptional conditions these are for a PhD!

🚨 Attention aspiring PhD students 🚨 Meta / FAIR is looking for candidates for a joint academic/industry PhD! Keywords: AI for Math & Code. LLMs, RL, formal and informal reasoning. You will be co-advised by prof. @Amaury_Hayat from ecole des ponts and yours truly. You'll have

2

2

32

It’s the best PhD program: you get the best of both worlds, academic freedom and a LOT of GPUs. Even better, it’s with @TacoCohen and @Amaury_Hayat. They are truly legends.

🚨 Attention aspiring PhD students 🚨 Meta / FAIR is looking for candidates for a joint academic/industry PhD! Keywords: AI for Math & Code. LLMs, RL, formal and informal reasoning. You will be co-advised by prof. @Amaury_Hayat from ecole des ponts and yours truly. You'll have

1

7

89

https://t.co/HZuAsF64M8 (Ignore the fact that I'm not listed as hiring manager)

1

1

46

🚨 Attention aspiring PhD students 🚨 Meta / FAIR is looking for candidates for a joint academic/industry PhD! Keywords: AI for Math & Code. LLMs, RL, formal and informal reasoning. You will be co-advised by prof. @Amaury_Hayat from ecole des ponts and yours truly. You'll have

24

121

897

I'm probably one of the very few who still cover PSRs in my course! With some cute matrix multiplication animations in the slides. It has been treated as a very obscure topic but really it's just low-rank + Hankelness... slides: https://t.co/ZjVTyXEIc2 video:

This week's #PaperILike is "Predictive Representations of State" (Littman et al., 2001). A lesser known classic that is overdue for a revival. Fans of POMDPs will enjoy. PDF:

4

12

88

The act of writing reveals to you, in quite a brutal way, just how many of your thoughts are merely feelings

39

368

3K

🚀 Excited to share our new paper on scaling laws for xLSTMs vs. Transformers. Key result: xLSTM models Pareto-dominate Transformers in cross-entropy loss. - At fixed FLOP budgets → xLSTMs perform better - At fixed validation loss → xLSTMs need fewer FLOPs 🧵 Details in thread

13

40

212

Today, I am launching @axiommathai At Axiom, we are building a self-improving superintelligent reasoner, starting with an AI mathematician.

182

263

2K

Even with full-batch gradients, DL optimizers defy classical optimization theory, as they operate at the *edge of stability.* With @alex_damian_, we introduce "central flows": a theoretical tool to analyze these dynamics that makes accurate quantitative predictions on real NNs.

20

213

1K

The two main issues with GRPO: 1) No credit assignment, unless you do rollouts from each state (VinePPO-style), which is super expensive. 2) Doing multiple rollouts from the same state requires state resetting / copying capabilities. This is fine for question answering, and

12

20

291
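A minimal sketch of the first GRPO issue mentioned above (no credit assignment), assuming the commonly described group-relative advantage: each rollout's scalar reward is standardized within its group and the resulting value is applied to every token of that rollout. The function names, the example rewards and lengths, and the use of the population standard deviation are illustrative assumptions, not taken from the post.

```python
from statistics import mean, pstdev


def grpo_advantages(rewards, eps=1e-8):
    """Standardize scalar rewards within one group of rollouts.

    Assumption: advantage_i = (r_i - mean) / (std + eps), using the
    population standard deviation of the group.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def per_token_advantages(rewards, lengths):
    """Broadcast each rollout's single advantage to all of its tokens.

    Every token in a rollout receives the same value, so nothing
    distinguishes the steps that helped from the ones that hurt.
    """
    advantages = grpo_advantages(rewards)
    return [[a] * n for a, n in zip(advantages, lengths)]


# Hypothetical group: four rollouts from the same prompt, binary rewards.
rewards = [1.0, 0.0, 0.0, 1.0]
lengths = [3, 5, 2, 4]  # token counts per rollout (made up for illustration)
token_advs = per_token_advantages(rewards, lengths)

for reward, row in zip(rewards, token_advs):
    print(reward, row)
```

Finer-grained credit would need a separate value estimate per intermediate state, for example from extra rollouts started at that state, which is where the cost noted in the post comes from.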