Morgan McGuire (Hack @ W&B Sep 21/22)

@morgymcg

Followers

2,912

Following

3,928

Media

545

Statuses

5,965

Lead Growth ML @weights_biases | ex-Facebook Safety | | 🇮🇪 | Came for the bants, stayed for the rants

Ireland

Joined October 2010

Pinned Tweet

Participants at our LLM Judges hackathon in SF next weekend will get o1 access 🎉

📅 Sep 21st & 22nd

(human) guest judges:

@GregKamradt

@eugeneyan

@charles_irl

@sh_reya

🎟️

0

8

16

😍 This

@huggingface

tip to prevent colab from disconnecting

`
function ConnectButton(){
    console.log("Connect pushed");
    document.querySelector("#top-toolbar > colab-connect-button").shadowRoot.querySelector("#connect").click()
}
setInterval(ConnectButton,60000);
`

6

107

516

The Llama 2 Getting Started guide from

@AIatMeta

is really comprehensive, with plenty of code examples for fine-tuning and inference

Delighted to see

@weights_biases

added there as the logger of choice 🤩 See the guide from Meta here:

From what I can

7

78

386

I put together a quick fastai demo implementing

@karpathy

's notebook training minGPT to generate Shakespeare

Code:

It's a quick demo, follow along on the fastai forum to see how it progresses:

1

42

184

This talk from

@colinraffel

at the

@SimonsInstitute

highlighting the advantages of an ecosystem of specialist models/adapters was great - good starting place if you’re curious about how to combine/hot-swap LLM adapters

3

32

167

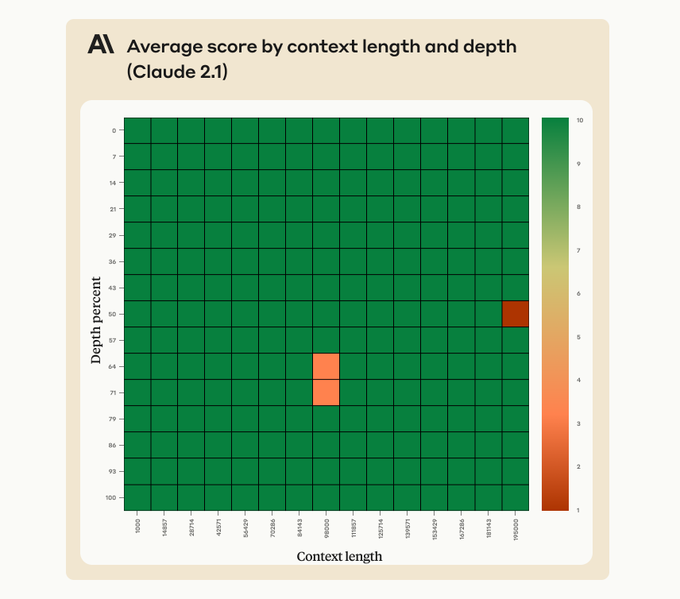

Given there's no sign of being able to use Ultra any time soon, this

@AnthropicAI

post in response to

@GregKamradt

's Claude 2.1 evaluation is probably the more useful tweet for your day today 😃

5

5

140

Anyone else struggling with `gpt-4-1106-preview`?

Using

@jxnlco

's Instructor and I'm finding `gpt-4-1106-preview` to be really bad at following instructions - only successfully generating examples for 2 out of 7 examples vs consistently 7 out of 7 for `gpt-4-0613`

Same issue

32

11

138

This is a great blog post, while it points out the strong performers in time to first token and token generation rate, it also highlights other practical considerations when choosing between inference libraries:

2

23

128

Once you get done with the latest

@huggingface

newsletter released today, come on over and check out the first in a 2-part series of how to *comprehensively* set up and train a language model using HuggingFace Datasets and Trainer

1

23

126

A user yesterday just casually dropped a mobile client for

@weights_biases

in a GitHub issue🔥🔥🔥

Includes all metrics plus system metrics

I think this could be really useful to keep an eye on long-running training runs while you're on the move

Long-requested, delivered by

7

23

128

A couple

@weights_biases

releases from last week I'm excited about:

🪄 W&B Prompts and our new

@OpenAI

API integration

1. W&B Prompts

Track inputs & outputs to your LLM chain, inspect and debug LLM chain elements...plus a bonus

@LangChainAI

1-liner!

👉

2

23

121

Anyone want to suggest some Mixtral 8x7b fine-tuning configs to try?

Myself and

@capetorch

have 8 x H100s (thanks to

@CoreWeave

🙇) for 5 more days and are doing some explorations to find a decent Mixtral fine-tuning recipe using axolotl that we can share.

What configs should we

7

20

117

Props to the

@StabilityAI

team and

@EMostaque

for sharing so much of their LLM training procedure, configs and metrics 🙌

It’s a great example of sharing from their own

@weights_biases

instance for open research

1

17

107

PyTorch stepping into the LLM-fine-tuning arena feels huge, looking forward to seeing torchtune evolve in the coming months

Tutorials and docs are really nicely built with a blend of education (eg LoRA explainer) as well as how-tos

wandb integration the cherry on top

👇

2

24

104

⚡️⚡️ Super stoked to say I've joined

@weights_biases

as a Growth ML Engineer! Looking forward to doing some fun ML with

@lavanyaai

and the team! Drop me a line here if you have any Weights & Biases questions, if I can't help I'll try find someone who can ☺️

16

4

102

I've been a huge fan of Instructor for quite a while for getting consistent structured outputs out of LLMs...

So I'm delighted to see

@weights_biases

course with its creator,

@jxnlco

finally released!

4

17

100

I'm hiring an AI Engineer for my team at

@weights_biases

If you're living in SF, enamoured by building and sharing your LLM-powered creations and would like to help take our AI developer tools to every software developer out there, my DMs are open

11

15

99

Ran a quick repro expanded to GPT-4-Turbo and Mixtral-8x7B on this non-deterministic MoE idea using modified code from

@maksym_andr

Unique sequences generated at temperature = 0, from 30 calls:

GPT-4-Turbo : 30 👀

GPT-4-0613: 10

Mixtral-8x7B-Instruct-v1.0 : 3

GPT-3.5-Turbo : 2

GPT-4 is inherently not reproducible, most likely due to batched inference with MoEs (h/t

@patrickrchao

for the ref!):

interestingly, GPT-3.5 Turbo seems _weirdly_ bimodal wrt logprobs (my own exp below): seems like extra evidence that it's also a MoE 🤔

3

28

256

3

8

94
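The "unique sequences from 30 calls" measurement above can be sketched roughly as follows. This is a minimal illustration, not the code used in the tweet: `count_unique_completions` and `make_fake_model` are hypothetical names, and the fake model stands in for a real temperature = 0 API call.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Iterable


def count_unique_completions(
    generate: Callable[[str], str], prompt: str, n_calls: int = 30
) -> Counter:
    """Send the same prompt n_calls times and tally each distinct completion."""
    return Counter(generate(prompt) for _ in range(n_calls))


def make_fake_model(outputs: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM call: cycles through canned outputs."""
    canned = cycle(outputs)

    def generate(prompt: str) -> str:
        # A real implementation would call a model API with temperature=0 here.
        return next(canned)

    return generate


# A fully deterministic model would yield exactly one unique sequence;
# this fake one rotates through three, mimicking non-determinism.
counts = count_unique_completions(make_fake_model(["a", "b", "c"]), "Tell me a story")
unique_sequences = len(counts)
```

Swapping `make_fake_model(...)` for a function that calls a real model gives the per-model numbers reported in the tweet.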

Before ICLR craziness overtook things I wrote up a post on how to pre-train or fine-tune a RoBERTa

@huggingface

model with

#fastai

v2

With it you can train RoBERTa from scratch or else fine-tune it on your data before your main downstream task

4

20

85

Fastai was my first deep learning course that stuck and the place that gave me the confidence to start writing about ML

I owe most of where I am to this special course and community ♥️

After 2 years, Practical Deep Learning for Coders v5 is finally ready! 🎊

This is a from-scratch rewrite of our most popular course. It has a focus on interactive explorations, & covers

@PyTorch

,

@huggingface

, DeBERTa, ConvNeXt,

@Gradio

& other goodies 🧵

116

1K

5K

2

5

87

🚀 Hiring - ML Engineer, Generative AI 🚀

Our Growth ML team at

@weights_biases

is hiring!

We're looking for someone to join us in creating engaging technical content for all things Generative AI (primarily LLM-focussed) to help educate the AI community and showcase how W&B's

8

11

70

Article updated: Demo applying

@huggingface

"normalizers" from tokenizers library to your Datasets for preprocessing

Informative article update 🤓 or opportunity to add another gif to the post 🥳? You decide...

(thanks

@GuggerSylvain

for highlighting)

Once you get done with the latest

@huggingface

newsletter released today, come on over and check out the first in a 2-part series of how to *comprehensively* set up and train a language model using HuggingFace Datasets and Trainer

1

23

126

0

19

70

"I hope someone can build a really valuable business using this course, because that would be a real RAG to Riches story" -

@AndrewYNg

Well well Andrew, update on our in-production support bot (powered by

@llama_index

) coming tomorrow 😜

New short course on sophisticated RAG (Retrieval Augmented Generation) techniques is out! Taught by

@jerryjliu0

and

@datta_cs

of

@llama_index

and

@truera_ai

, this teaches advanced techniques that help your LLM generate good answers.

Topics include:

- Sentence-window retrieval,

70

494

3K

0

11

69

First time looking at Gemini caching

32k : minimum cache input token count required

**forever** : how long you can keep things cached if you like

caching only saves costs : no latency wins (for now), h/t

@johnowhitaker

Would love a lower minimum token count and latency

Great news for

@Google

developers:

Context caching for the Gemini API is here, supports both 1.5 Flash and 1.5 Pro, is 2x cheaper than we previously announced, and is available to everyone right now. 🤯

30

159

1K

6

8

64

Working with Opus within Cursor was a decent enough experience to turn all my Instructor code for a particular task into a nice mermaid diagram - makes explaining the validators + retries much clearer.

Being able to ask for corrections while easily referencing

@Teknium1

Was messing around with the below.

Examples seemed to help with diagramming more complex fns, but the system prompt alone worked well enough most of the time.

Including "- Use quotes around the text in every node." helped w/ the invalid nodes

0

0

20

2

2

54
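The "validators + retries" flow mentioned above can be sketched in plain Python. This is a rough illustration under stated assumptions and does not use Instructor's actual API: `validate_person`, `generate_with_retries` and `fake_generate` are hypothetical names, and the canned responses stand in for real model output.

```python
import json
from typing import Callable


def validate_person(raw: str) -> dict:
    """Parse model output and raise ValueError with a readable reason if invalid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("name"), str):
        raise ValueError("'name' must be a string")
    age = data.get("age")
    if not isinstance(age, int) or isinstance(age, bool):
        raise ValueError("'age' must be an integer")
    return data


def generate_with_retries(
    generate: Callable[[str], str],
    prompt: str,
    validate: Callable[[str], dict],
    max_retries: int = 2,
) -> dict:
    """Call the model, validate, and re-ask with the error message on failure."""
    attempt_prompt = prompt
    last_error = None
    for _ in range(max_retries + 1):
        raw = generate(attempt_prompt)
        try:
            return validate(raw)
        except ValueError as exc:
            last_error = exc
            # Feed the validation error back so the model can correct itself.
            attempt_prompt = f"{prompt}\n\nYour previous answer was rejected: {exc}"
    raise ValueError(f"no valid output after {max_retries + 1} attempts: {last_error}")


# Hypothetical model: the first answer is missing a field, the second is valid.
_responses = iter(['{"name": "Ada"}', '{"name": "Ada", "age": 36}'])


def fake_generate(prompt: str) -> str:
    return next(_responses)


result = generate_with_retries(fake_generate, "Extract the person.", validate_person)
```

Passing the validation error back into the next prompt is the design choice that makes retries useful rather than a blind re-roll.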

.

@Noahpinion

said it better than I could here, this was a really fantastic interview with Sarah Paine

4

7

54

2 months and 2 weeks since our first code commit, 14

@weights_biases

training runs are going right now for our

@fastdotai

community submission to

@paperswithcode

Reproducibility Challenge. This project has been so great to work on, 6 days to go until the deadline 🚀

1

5

54

Working at

@weights_biases

ticks the 2 main boxes I look for in a job:

Do interesting & challenging work ✅

Shape the future of the company ✅

Feel free to DM me if you'd like a quick, 100% confidential chat about applying to the team!

My team is hiring!

👩‍🔬 ML Engineers

👨‍🔬 Project Managers

Perks include: working on insanely exciting/challenging problems, on a product ML engineers love, with the smartest/kindest/most fun folks in the world

DM me!

#deeplearning

6

102

334

0

7

53

RIP RAG

“I think long context is definitely the future rather than RAG”

On domain specialisation:

“If you want a model for medical domain, legal domain…it (finetuning) definitely makes sense…finetuning can also be an alternative to RAG”

Great episode, had to listen 0.75x 😂

I'm back! and super proud to be the first podcast to feature

@YiTayML

senpai

with special shoutouts to

@quocleix

,

@_jasonwei

,

@hwchung27

,

@teortaxesTex

!

Special interest callouts:

- why encoder-decoder is not actually that different than decoder-only cc

@teortaxesTex

@eugeneyan

5

13

139

5

7

51

I brought fastai’s activation stats visualisation into weights and biases using custom charts, helps compare across multiple models/runs a little easier

Inspecting your activations can be a useful way to debug model training! Today's featured report uses

@fastdotai

's ActivationStats callback to debug a GPT model for text generation by visualizing its "colorful" dimension. 1/2

📝:

1

22

71

3

7

49

Paper Presentation 🗣️ - AdaHessian Optimizer

Come join the AdaHessian authors

@yao_zhewei

and A. Gholami for an explanation of the AdaHessian paper, learn about second-order methods

Thurs, Aug 27, 2020 09:00 AM Pacific Time

Zoom details on the forum:

1

15

47

Love how the

@llm360

team share their

@weights_biases

workspaces publicly in the Metrics section for both Amber and Crystal Coder 😍

44 loss and eval charts logged during training, all publicly browsable

2

10

37

Put together a quick colab to fine-tune

@OpenAI

ChatGPT-3.5 on the huggingface api code from the gorilla dataset

Idea being to see if something like this can help improve ChatGPT-3.5's use of tools and mimic GPT-4's `functions` capability

5

9

43

Wowza, performant 1-bit LLMs (from 3B up) are here... what's the catch? Their models have to be pre-trained from scratch at this precision, don't think it mentions trying to quantize existing pre-trained models

6

4

43

1⃣1⃣0⃣days: From initial post to

@paperswithcode

Reproducibility Challenge submission

Recruit interest -> pick a paper (Reformer) -> push, push push -> submit

💯 Team effort

Reflections on our journey and what we would do differently next time:

1/2

1

6

43

The Apple research team behind MM1 giving a shoutout to the wandb crew supporting their work, love to see it 😍

🍎 + 🪄🐝

1

6

43

In 12 short minutes

@emilymbender

&

@alkoller

's

#acl2020

Best Theme Paper, rapidly deflates hype around how latest NLP models "understand" language, especially relevant given GPT-3 hype, recommended!!

Vid:

Climbing towards NLU...:

2

11

40

Stoked to work with

@weights_biases

to help the

@huggingface

community fine-tune models in 60+ languages!

💻 Fully instrumented XLSR colab with W&B:

We have language-specific W&B Projects AND a W&B feature (still in beta) I am really excited about... 1/4

Today, we are starting the XLSR-Wav2Vec2 Fine-Tuning week with over 250 participants and more joining by the hour🤯

We want to thank

@JiliJeanlouis

and

@OVHcloud

for providing 32GB V100s to all participants🔥

There are still plenty of free spots to join👉

2

20

98

1

3

38

Our in-house wizard & intern

@vwxyzjn

built our Stable Baselines 3

@weights_biases

integration last year

It makes me very happy to see it in Harrison's latest vid 🔥🔥🔥

1

6

38

If you're excited to come join us and work on LLMs and Generative AI more broadly at

@weights_biases

, I have 2 pointers after reviewing a few 100 resumes for this role:

1⃣ LLMs experience

We're looking for people who have been captivated by the power and potential of LLMs, to

🚀 Hiring - ML Engineer, Generative AI 🚀

Our Growth ML team at

@weights_biases

is hiring!

We're looking for someone to join us in creating engaging technical content for all things Generative AI (primarily LLM-focussed) to help educate the AI community and showcase how W&B's

8

11

70

1

7

36

Quick (basic)

@weights_biases

note on normalisation for the unsupervised

@kaggle

July Tabular Playground series

0

8

36

This is the start of exploration I'll be running on the pipeline settings for StableDiffusion from

@StabilityAI

, using

@weights_biases

Tables for visualisation

📘 Findings:

🖥️ Colab: (based on the excellent release Colab)

Delighted to see the StableDiffusion weights released publicly!

Like

@craiyonAI

before it, it's great to be able to generate your own images on demand on your own machine

Cooking up a

@weights_biases

example right now

🎨

1

1

16

0

6

36

@thebadbucket

@citnaj

And this guess that the burst pipes were some kind of wild exotic plant can't be blamed really 😂

0

3

33

⚡️ AI Hacker Cup Lightning Comp

Today we're kicking off a ⚡️ 7-day competition to solve all 5 of the 2023 practice Hacker Cup challenges with

@MistralAI

models

Our current baseline is 2/5 with the starter RAG agent (with reflection)

@MistralAI

api access provided

Details👇

2

12

37

We (

@weights_biases

) love the Instructor library so we created a course, "LLM Engineering: Structured Outputs" with its creator,

@jxnlco

, who charts a mental map for how to get more consistent outputs from LLMs using function calling, validation + more

1

6

34

"An unhelpful error message is a bug" 😍 - part of the Flax Ecosystem Philosophy

Taken from the Jax/Flax intro session this evening as part of the

@huggingface

community effort kicking off

0

2

33

Delighted to see the

@harmonai_org

discord going public 🎶

I spoke to Zach and

@drscotthawley

last week about:

🏗️ what they've been building

🎚️ working with artists

🐝 how they used

@weights_biases

👯 how the community can get involved

Get involved!

1

16

32

Fine-tuning

@OpenAI

's GPT-3.5 is a great way to eke out more performance - it might even outperform GPT-4 for your use case 🔥

I took a quick look at GPT-3.5 fine-tuning and logged the results with the

@weights_biases

openai-python integration

Lots of improvements to fine-tuning over the past month

- gpt3.5 Turbo

- Fine-tuning UI

- Continuous fine-tuning (fine-tune a fine-tune)

-

@weights_biases

support in latest SDK

It's important that we simultaneously ship amazing new stuff AND improve core foundations

4

8

52

3

4

31

whoa, 21% -> 51% accuracy on hugging face gorilla api eval set after fine-tuning GPT-3.5!

Re-running eval generations again to make sure this is legit

Put together a quick colab to fine-tune

@OpenAI

ChatGPT-3.5 on the huggingface api code from the gorilla dataset

Idea being to see if something like this can help improve ChatGPT-3.5's use of tools and mimic GPT-4's `functions` capability

5

9

43

4

6

32

We've added results from the

@YouSearchEngine

to our latest wandbot release (thanks to

@ParamBharat

), we've definitely seen it answer questions it would have otherwise struggled with, e.g. finding solved github/stackoverflow issues for some gnarlier support questions that aren't

Bard/Perplexity are showing that having an “online LLM” is now table stakes

Inspired by this

@SebastienBubeck

talk, I think the next frontier of embedding models is to go beyond space (precision/recall) and into time (permanent vs contingent facts, perhaps as proxied by

11

15

185

1

3

30

Can't decide which algorithm to use for your tabular data modelling? 😱

Ease your mind and come join me in ~8 hours (6pm GMT / 10am PT) to take a spin through the PyCaret library 😊

It's not always obvious which model & hyperparams work best for your tabular dataset. This Thu

@morgymcg

takes a look at how to compare performance between different traditional ML algorithms.

💡 Comparing

@XGBoostProject

, LightGBM & more w/

#pycaret

👉

0

7

40

0

14

29

🎁 🎁 Feedback Requested 🎁 🎁

Our

@fastdotai

community team would ♥️ feedback on our

@paperswithcode

Reproducibility Challenge

@weights_biases

report (1 day before the deadline 😬), reproducing the Reformer paper

📕:

Reply, DM... 1/2

1

10

29

Delighted to see

@ParamBharat

's work being shared here, evaluation report coming out soon thanks to

@ayushthakur0

!

Props also to the team at

@Replit

for their help getting wandbot running on Replit Deployments, more coming soon on that :)

Want to see a real-world RAG app in production? Check out wandbot 🤖 - chat over

@weights_biases

documentation, integrated with

@Discord

and

@SlackHQ

! (Full credits to

@ParamBharat

et al.)

It contains the following key features that every user should consider:

✅ Periodic data

5

82

339

2

6

28

Vision Transformer was so last week, ImageNet SOTA of 84.8% (no additional data used) with LambdaResNets

(Vision Transformer achieved 77.9% on ImageNet-only data, only starts to shine with huge data)

1

6

28

Our

@weights_biases

Keras callback gives you A LOT more than just experiment tracking, let

@ayushthakur0

take you on a journey of code, gifs and

@kaggle

notebooks…

Keras has played a big role in my DL journey. It turned 7 a few days back and I would like to thank

@fchollet

and the community for this great tool. 🎉

Here's a thread to share how I get more out of my Keras pipeline using

@weights_biases

.

🧵👇

4

28

195

0

3

28

Looking forward to visiting ETH Zurich next Thursday 12th to host a mega

@weights_biases

event with the GDSC crew there!

If you're in the neighbourhood feel free to drop in 👇

1

1

28

We've had a lot of fun and learned a lot about building LLM systems while working on wandbot, our

@weights_biases

technical support bot

Delighted to see our v1.0 release in the wild,

@ParamBharat

has a technical update on its new microservices architecture here:

🚀 Exciting announcement! Introducing Wandbot-v1 from

@weights_biases

- Running on

@OpenAI

's GPT-4-Turbo &

@llama_index

.

- Multilingual support with

@cohere

rerank

- Chat threads in Slack, Discord, Zendesk, and ChatGPT

-

@replit

Deployments

Full report:

2

28

73

0

5

27

I did this same research for our company offsite a few weeks ago

👉 6 of the 8 Transformers authors also use

@weights_biases

today 🤩

The 2 who don't either:

- can't (Google) or

- don't need to (crypto) 🔥

What have the eight ex-Google Brain authors of the Transformers paper been doing since December 2017? 🧐

Let’s find out together! 🧵

Paper:

Sources: Google, Pitchbook, LinkedIn

//

@ashVaswani

@NoamShazeer

@aidangomezzz

@nikiparmar09

@YesThisIsLion

66

637

4K

0

6

28

Tek out here going through the pain so you don’t have to 😍

(

@weights_biases

links galore in case you want to see the details of the training config & metrics)

2

6

27

The real value of using

@weights_biases

struck me when I started using it with some teammates as part of a paper reproducibility challenge.

Watching 15 different experiment runs from 4 different teammates train in realtime was magic :D

Collaborate with your classmates easily this semester using W&B!

I’m excited to share this article on how you can collaborate with your peers on your Machine Learning assignments using

@weights_biases

for FREE.🤠

Why is W&B useful for ML projects👇 1/6

1

8

37

0

5

25

Consistent quality of life improvements to

@weights_biases

, often implemented within days of user feedback, is one of the things I love about working with the team here 😍

1

2

25

Finetuning ChatGPT-3.5 brought it up from 22% -> 47% on the Gorilla hugging face api evaluation dataset, cool!

Full details and code here:

Still not indicative that finetuning can make it as useful as GPT-4's `funcs` for tool use, but it's promising!

2

7

26

Since I'm just back from Mexico I'm having fun fine-tuning stable diffusion using Diffusion from the hugging face Dreambooth hackathon on tortas!

Ongoing

@weights_biases

training journal here:

🎄 Advent of DreamBooth Hackathon 🎄

Today

@johnowhitaker

and I are kicking off a 1-month virtual hackathon to personalise Stable Diffusion models with a powerful technique from

@GoogleAI

called DreamBooth 🔮

Details 👉:

1

19

84

1

7

25

It was SO GREAT catching up with

@borisdayma

,

@iScienceLuvr

,

@shah_bu_land

&

@capetorch

at Fully Connected 23

Zooms from Dublin are better than nothing, but you can't beat in-person chat!

2

0

25

Deployments from

@Replit

really feels like the right level of performance vs complexity for a huge chunk of use cases - it's been great to serve our LLM support bot, wandbot, on it!

@weights_biases

“Replit Deployments is a really great feature. It is easy to use, has professionalized our WandBot deployment, and made it much more stable.” -

@morgymcg

To create your own RAG bot for your platform, learn more:

1

5

15

0

4

23

Really 👌 interview with

@neuraltheory

and

@jeremyphoward

on where AI is going in the short-medium term, worth 45min

0

9

24

I'm hiring!

I'm looking for a growth-driven Project Manager to join my team and help us fuel the

@weights_biases

rocketship 🚀

If you are passionate about growing a business 📈 and have a growth mindset 🧘, shoot me a DM

0

7

23

"...test everything for yourself, don't believe it just because someone else said it, and don't believe anything I say today just because I said it - test it for yourself"

-

@jefrankle

in our free LLM fine-tuning course 🧠

When building AI systems

📣 Back by popular demand! Join our free "Training and Fine-tuning LLMs" course with

@MosaicML

!

💡 LLM Evaluation

💡 Dataset Curation

💡 Distributed Training

💡 Practical Tips from top industry experts

Enroll now 🔗

2

37

316

0

5

24

Really enjoyed this 15min talk from

@_inesmontani

on Practical NLP, real feels for the ole "hmmm let's say 90% accuracy" goal 😂

0

3

24

Currently reviewing a post for the

@weights_biases

blog before it's released, sometimes I have to pinch myself when I remember I'm getting paid for this.

Couldn't have imagined this 18 months ago, it's been some ride 🙏

1

0

24

Delighted to see the

@weights_biases

Prompts Tracer is now also added to

@LangChainAI

's Tracing Walkthrough section!

Capture inputs, intermediate results, outputs, token usage and more with 1 line:

os.environ["LANGCHAIN_WANDB_TRACING"] = "true"

🎉

@LangChainAI

🦜🔗 JS/TS 0.0.88 is live with:

🦁 New

@brave

private search API tool

✂️💻 15 different code text splitters

📗

@supabase

advanced metadata filtering

🔍 Self-query retriever improvements

📦

@vespaengine

retriever

Let's dive in 🧵

1

7

57

1

13

24
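The one-line tracing switch quoted above can be wrapped in a small helper. This is only a sketch: `enable_wandb_tracing` is a hypothetical name, the `LANGCHAIN_WANDB_TRACING` flag is taken verbatim from the tweet and may have changed in later LangChain releases, and the project name is made up for illustration.

```python
import os
from typing import Optional


def enable_wandb_tracing(project: Optional[str] = None) -> None:
    """Set the environment flag from the tweet before any chains are run."""
    # Flag name as quoted in the tweet; treat it as version-dependent.
    os.environ["LANGCHAIN_WANDB_TRACING"] = "true"
    if project is not None:
        # WANDB_PROJECT is the standard W&B variable for choosing a project.
        os.environ["WANDB_PROJECT"] = project


# Hypothetical project name, used only for this example.
enable_wandb_tracing(project="langchain-tracing-demo")
```

Setting the variables at the top of a script, before any chain is constructed or run, is the safest ordering.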

Flying in from 🇮🇪 to SF this week for

@aiDotEngineer

, until Monday July 1st - who's around for coffee?

Anyone want to ride the post-conference glow with a hack the weekend after the conference? I can see if

@l2k

will let me open the

@weights_biases

office for the weekend 😉

3

2

24

🇰🇷 Did you know that

@weights_biases

's blog, Fully Connected, has articles in Korean?

Given the glut of English-focussed ML resources today, hopefully these articles help bridge the language barrier between English speakers and non-English speakers

1/3

1

7

23

Kinda nuts

@Replit

are able to serve their LLMs on spot instances, great example of lots of small optimization wins adding up

1

1

23

I very much enjoy running through the courses

@dk21

and our Courses team produce - clear, sharp & concise.

Their latest work, "Building LLM-Powered Applications" is no different - give it a spin, we'd love to hear what you think!

🎉 I'm thrilled to announce we're launching a free online course at

@weights_biases

, titled "Building LLM-Powered Applications." This course is designed for Machine Learning practitioners and Software Engineers who are interested in understanding LLMs and wish to use them in

4

51

197

0

5

22

DALLE-Playground from the

@theaievangelist

is a really slick experience to generate images from DALL-E Mini from

@borisdayma

via a colab and a local webapp, highly recommend giving it a spin!

📓

🤖

2

3

22

Lots of goodness here in

@capetorch

's latest LLM fine-tuning report which shows you how to make the most out of all of

@weights_biases

's logging features in the HF Trainer

Fine-tuning LLMs can be daunting as there are many parameters to play with; thankfully, libraries like the

@huggingface

transformers lib make this much easier. Integration with

@weights_biases

is as simple as setting report_to="wandb".

1

13

68

0

4

22

CrewAI &

@joaomdmoura

in the house, 10.6M agents kicked off in the last 30 days - announcements

- Code execution, let your crew run code

- Bring your own agents - llamaindex, autogen & more now supported

- Ship an api for your agents

Plus a delightful musical interlude from

Turns out agents are a thing

Not even standing room left at the Agents track at

@aiDotEngineer

😂😭

1

3

8

1

5

22