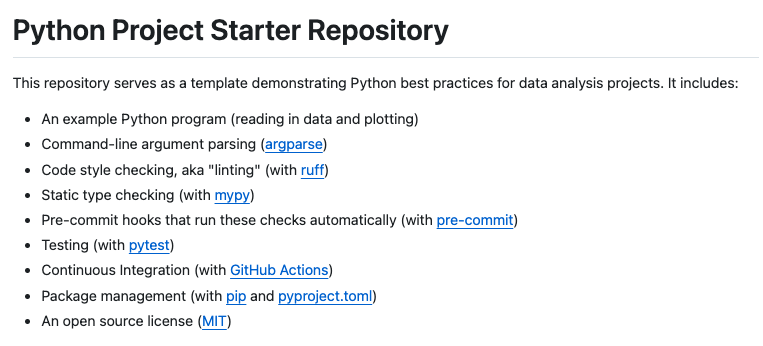

Graham Neubig

@gneubig

Followers: 38K · Following: 4K · Media: 453 · Statuses: 4K

Associate professor @LTIatCMU. Co-founder/chief scientist @allhands_ai. I mostly work on modeling language.

Joined September 2010

I had to travel 26 hours and spend $2000+ to join #ICLR2023 in Rwanda. But people in Africa have to do this every time a conference is held in the US. What happens when we make it easier to participate? 1530% higher registrations from Africa. This is important and must continue.

17

185

1K

Announcement: @rbren_dev, @xingyaow_, and I have formed a company! Our name is All Hands AI 🙌 And our mission is to build the world’s best AI software development agents, for everyone, in the open. Here’s why I think this mission is important 🧵

32

95

707

The semester is now over, and all of the videos for Neural Networks for NLP are now online! We feature new classes/sections on probing language models, sequence-to-sequence pre-training, and bias in NLP models by the wonderful TAs. Check them out:

2021 version of CMU "Neural Networks for NLP" slides and videos are being posted in real time! Check it out for a comprehensive graduate-level class on NLP! New this year: assignment on implementing parts of your own NN toolkit.

2

128

498

I was a bit short on research ideas, so I decided to ask @chrmanning (as simulated by @huggingface's BLOOM) for some inspiration. The advice was...

18

49

465

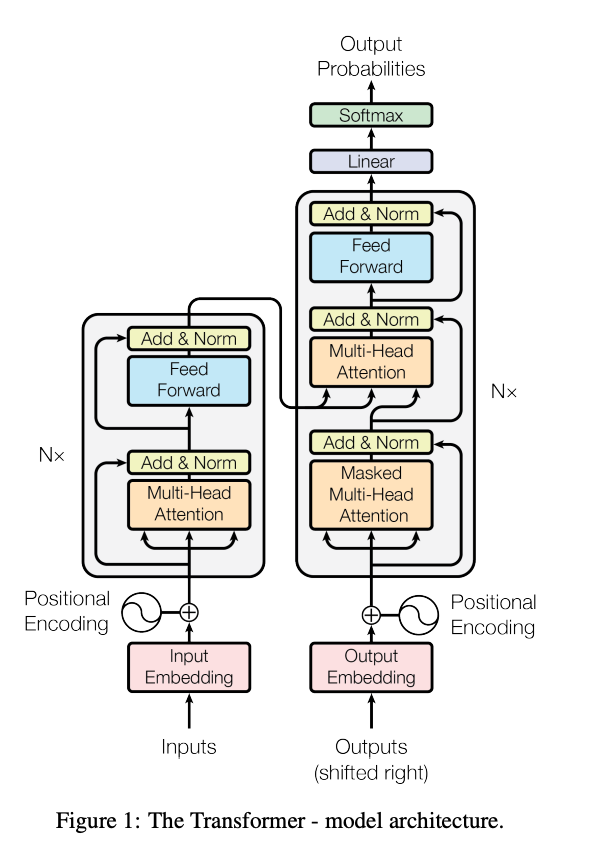

We have started posting CMU Advanced NLP lecture videos on YouTube. Check out the first 7!
1. Overview of NLP
2. Word Representation
3. Language Modeling
4. Sequence Modeling
5. Transformers
6. Generation Algorithms (by @abertsch72)
7. Prompting

I'm excited to be back in the classroom for CMU 11-711 Advanced NLP this semester! We revamped the curriculum to take into account recent advances in LLMs, and we have a new assignment "build-your-own-LLaMa". We'll be posting slides/videos going forward.

6

90

443

Happy to announce that I've formed a company, Inspired Cognition, together with @stefan_fee and @odashi_en! Our goal is to make it easier and more efficient to build AI systems (particularly NLP) through our tools and expertise. 1/2

14

48

442

CMU Advanced NLP is done for 2022! Check the videos on YouTube 😃. I also overhauled our assignments to reflect important skills in NLP for 2022. If you're teaching/learning NLP, see the 🧵 and doc for more!

We've started the Fall 2022 edition of 🎓CMU CS11-711 Advanced NLP!🎓 Follow along for:
* An intro of core topics
* Timely content: prompting, retrieval, bias/fairness
* Content on NLP research methodology

9

103

419

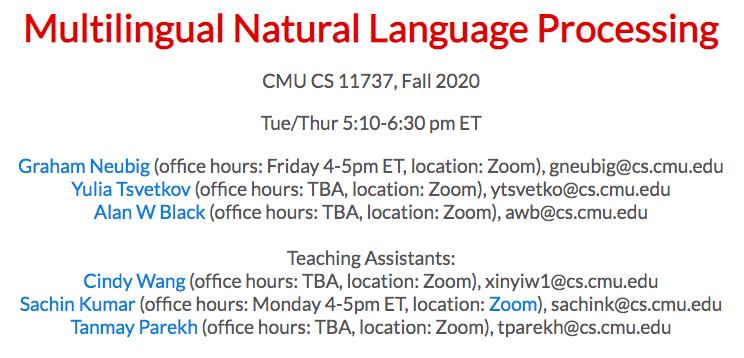

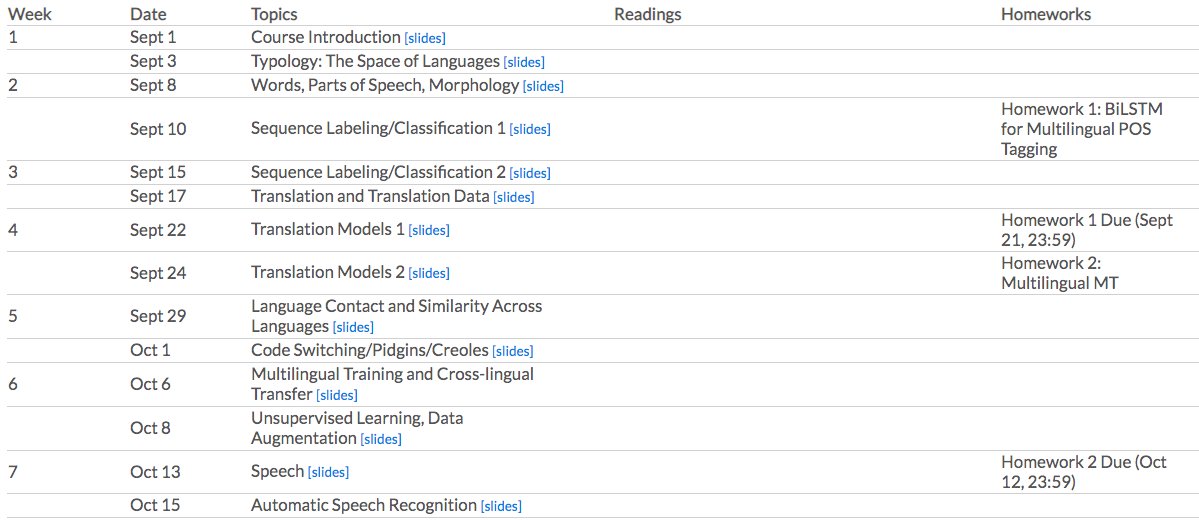

We have finished uploading our 23 class videos on Multilingual NLP, including two really great guest lectures:
* NLP for Indigenous Languages (by Pat Littell, CNRC)
* Universal NMT (by Orhan Firat, Google)

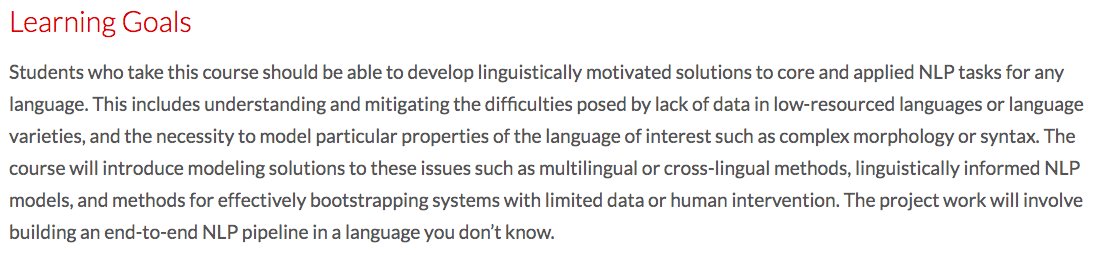

Looking forward to our *brand new class*, CMU CS11-737 "Multilingual Natural Language Processing" this semester with Yulia Tsvetkov and Alan Black! We're covering the linguistics, modeling, and data that you need to build NLP systems in new languages: 1/2

4

124

414

I wrote a more efficient/robust OpenAI querying wrapper:
1. Parallel execution with adjustable rate limits
2. Automatic retries on failure
3. Interface to Huggingface/Cohere for comparison

This finished 33k completions in ≈1 hour!

OpenAI recently added a method to make asynchronous calls, which is good if you want many calls quickly. But it’s not super-well-documented, so I wrote a quick demo of how to make many calls at once, e.g. 100+ in a few seconds. Hope it's helpful!

8

63

375
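The pattern described in the two posts above (many concurrent calls, a cap on parallelism, automatic retries) can be sketched with Python's standard `asyncio`. This is a minimal illustration, not the wrapper or demo from the posts: `fake_completion` is a hypothetical stand-in for a real API call, and the semaphore caps concurrency rather than enforcing a true requests-per-minute limit.

```python
import asyncio
import random


async def fake_completion(prompt: str) -> str:
    """Hypothetical stand-in for a network call to an LLM API."""
    await asyncio.sleep(0.01)
    if random.random() < 0.2:  # simulate a transient failure
        raise RuntimeError("transient error")
    return f"echo: {prompt}"


async def call_with_retries(prompt, semaphore, max_retries=5, base_delay=0.01):
    """Run one request under a concurrency cap, retrying with backoff."""
    async with semaphore:
        for attempt in range(max_retries):
            try:
                return await fake_completion(prompt)
            except RuntimeError:
                # Exponential backoff before the next attempt.
                await asyncio.sleep(base_delay * 2 ** attempt)
    return None  # gave up after max_retries attempts


async def run_all(prompts, max_concurrent=20):
    """Launch every request at once; the semaphore limits how many run together."""
    semaphore = asyncio.Semaphore(max_concurrent)
    tasks = [call_with_retries(p, semaphore) for p in prompts]
    return await asyncio.gather(*tasks)  # results come back in input order


def complete_many(prompts, max_concurrent=20):
    """Synchronous entry point for scripts."""
    return asyncio.run(run_all(prompts, max_concurrent))
```

Calling `complete_many([f"prompt {i}" for i in range(100)])` returns one result per prompt, with `None` for any request that exhausted its retries.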

Here are the slides for my kick-off talk, a high level overview of the exciting promise and current issues with large language models:

Exciting energy for the @LTIatCMU large language model event! Come on out this weekend if you're around Pittsburgh and interested in LLMs

3

71

323

Happy to release NN4NLP-concepts! It's a typology of important concepts that you should know to implement SOTA NLP models using neural nets. We'll reference this in CMU CS11-747 this year, trying to maximize coverage. 1/3

2020 edition of CMU CS11-747 "Neural Networks for NLP", is starting tomorrow! We (co-teacher @stefan_fee and 6 wonderful TAs) restructured it a bit to be more focused on "core concepts" used across a wide variety of applications. 1/2

2

108

315

The videos for the spring semester of CMU 11-711 Advanced NLP are now all available 📺. Thanks to the TAs, students in the class, and everyone who followed along. We're doing it again in the Fall!

I'm excited to be back in the classroom for CMU 11-711 Advanced NLP this semester! We revamped the curriculum to take into account recent advances in LLMs, and we have a new assignment "build-your-own-LLaMa". We'll be posting slides/videos going forward.

4

79

313

We're excited about all the interest in our Gemini report and working to make it even better! This week we made major improvements, switching to the @MistralAI instruct model, and working with the Gemini team to reproduce their results. Updates below.

Google’s Gemini recently made waves as a major competitor to OpenAI’s GPT. Exciting! But we wondered: How good is Gemini really? At CMU, we performed an impartial, in-depth, and reproducible study comparing Gemini, GPT, and Mixtral. 🧵

8

39

304

I've started to upload the videos for the Neural Nets for NLP class. We'll be uploading the videos regularly throughout the rest of the semester, so please follow the playlist if you're interested.

2020 edition of CMU CS11-747 "Neural Networks for NLP", is starting tomorrow! We (co-teacher @stefan_fee and 6 wonderful TAs) restructured it a bit to be more focused on "core concepts" used across a wide variety of applications. 1/2

3

69

292

2020 edition of CMU CS11-747 "Neural Networks for NLP", is starting tomorrow! We (co-teacher @stefan_fee and 6 wonderful TAs) restructured it a bit to be more focused on "core concepts" used across a wide variety of applications. 1/2

9

72

294

I've seen quite a few #NAACL2022 papers that say "our code is available at [link]" but the code is not available at "[link]". Everyone, let's release our research code! It's better for everyone, and hey, messy code is better than no code.

8

28

293

One major weakness of open-source multimodal models was document and UI understanding. Not anymore! We trained a model on 7.3M web examples for grounding, OCR, and action outcome prediction, with great results. It's MultiUI, code/data/model are all open:

Working on multimodal instruction tuning and finding it hard to scale? Building Web/GUI agents but data is too narrow? Introducing 🚀MultiUI: 7.3M multimodal instructions from 1M webpage UIs, offering diverse data to boost text-rich visual understanding. Key takeaways:

5

40

292

Excited to announce @allhands_ai has fundraised $5M to accelerate development of open-source AI agents for developers! I'm looking forward to further building out the software, the community, and making AI developers accessible for all 🚀

We are proud to announce that All Hands has raised $5M to build the world’s best software development agents, and do it in the open 🙌. Thank you to @MenloVentures and our wonderful slate of investors for believing in the mission!

19

33

280

Recently there were some great results from the new Mamba architecture by @_albertgu and @tri_dao. We did a bit of third-party validation, and:
1. The results are reproducible
2. Mamba 2.8B is competitive w/ some 7B models (!)
3. Mistral is still strong

Since some of you might be wondering whether Mamba 2.8B can serve as a drop-in replacement of some of the larger models, we've compared the Mamba model family to some of the most popular 7B models in @try_zeno. 🧵 1/5

3

31

266

Super-excited for the official release of ExplainaBoard, a new concept in leaderboards for NLP. It covers *9* tasks with *7* functionalities to analyze, explore, and combine results. Please try it out, submit systems, and help improve evaluation for NLP!

What's your system good/bad at? Where can your model outperform others? What are the mistakes that the top-10 systems make? We are always struggling with these questions. A new academic tool can help us answer them in a one-click fashion and many more:

3

74

261

"Paraphrastic representations at scale" is a strong, blazing fast package for sentence embeddings by @johnwieting2. It beats Sentence-BERT, LASER, and USE on STS tasks, works multilingually, and is up to 6,000 times faster 😯

3

45

259

CMU 11-711 Advanced NLP has drawn to a close! You can now access all class materials (slides and videos) online. Hope it's useful, and stay tuned for "11-737 Multilingual NLP" next semester!

In Fall 2021, CMU is updating its NLP curriculum, and 11-747 "Neural Networks for NLP" is being repurposed into 11-711 "Advanced NLP", the flagship research-based NLP class 😃. More NLP fundamentals, still neural network methods. Stay tuned! (CMU students, please register!)

3

55

255

Nice! Our paper on differentiable beam search (@kartik_goyal_, me, @redpony, and Taylor BK) was accepted to AAAI! Read to learn how to backprop through your search algorithm:

1

57

254

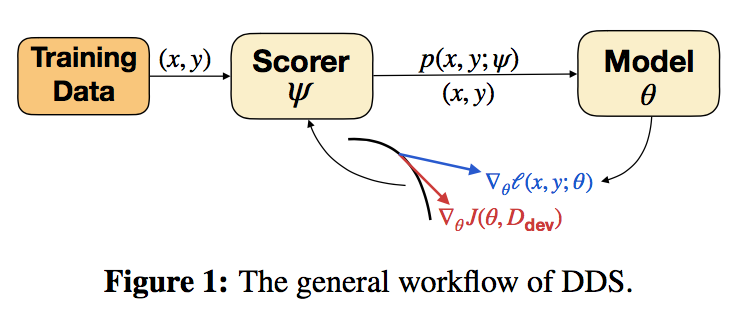

Really happy our paper on Differentiable Data Selection will appear at #ICML2020! The method is a *principled* way to choose which data goes into models and it's super-broadly applicable. We've already used it in multilingual models at #acl2020nlp too

Not all training data are equal, but how can we identify the good data efficiently at different stages of model training? We propose to train a data selection agent by up-weighting data whose gradient is similar to the gradient of the dev set:

1

43

247
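The core intuition in the quoted post, up-weighting training examples whose gradient points the same way as the dev-set gradient, can be illustrated in a few lines. This is a simplified sketch under stated assumptions and not the paper's method: it skips the trained selection agent entirely and turns cosine similarities directly into weights with a softmax. The function names and the `temperature` parameter are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two gradient vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def selection_weights(example_grads, dev_grad, temperature=1.0):
    """Softmax over gradient similarities: aligned examples get more weight."""
    sims = [cosine(g, dev_grad) for g in example_grads]
    max_sim = max(sims)  # subtract the max for numerical stability
    exps = [math.exp((s - max_sim) / temperature) for s in sims]
    total = sum(exps)
    return [e / total for e in exps]
```

With a dev gradient of `[1, 0]`, an example with gradient `[1, 0]` receives the largest weight, an orthogonal one `[0, 1]` a middling weight, and an opposing one `[-1, 0]` the smallest.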

We have started uploading the lecture videos for CS11-737 to YouTube now! You can see the first two on the class intro, and typology.

Looking forward to our *brand new class*, CMU CS11-737 "Multilingual Natural Language Processing" this semester with Yulia Tsvetkov and Alan Black! We're covering the linguistics, modeling, and data that you need to build NLP systems in new languages: 1/2

6

71

244

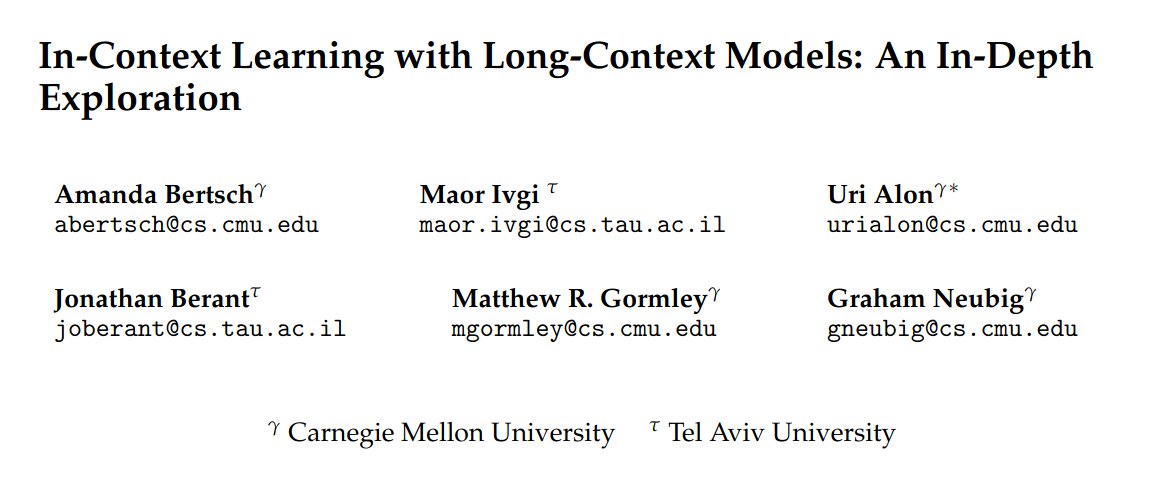

With long-context LMs, we can now fit *thousands* of training examples in context! We perform an in-depth exploration of many-shot in-context learning, finding it surprisingly effective, providing huge increases over few-shot prompting, and competitive with fine-tuning!

In-context learning provides an LLM with a few examples to improve accuracy. But with long-context LLMs, we can now use *thousands* of examples in-context. We find that this long-context ICL paradigm is surprisingly effective, and differs in behavior from short-context ICL! 🧵

4

31

242

New #acl2020nlp paper on "Generalizing Natural Language Analysis through Span-relation Representations"! We show how to solve 10 very different natural language analysis tasks with a single general-purpose method -- span/relation representations! 1/

2

51

233

MEGA is a new method for modeling long sequences based on the surprisingly simple technique of taking the moving average of embeddings. Excellent results, outperforming strong competitors such as S4 on most tasks! Strongly recommend that you check it out:

I'm excited to share our work on a new sequence modeling architecture called Mega: Moving Average Equipped Gated Attention. Mega achieves SOTA results on multiple benchmarks, including NMT, Long Range Arena, language modeling, ImageNet and raw speech classification.

2

32

233
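To make "taking the moving average of embeddings" concrete, here is a plain exponential moving average over a sequence of embedding vectors. It is only an illustration of the basic operation: the actual Mega layer combines a moving average with gated attention, as the name in the quoted post indicates, and is more involved than this. The fixed `alpha` and the function name are assumptions for the example.

```python
def moving_average_embeddings(embeddings, alpha=0.5):
    """Exponential moving average along the sequence dimension.

    embeddings: list of equal-length vectors, one per position.
    alpha: weight on the current position (1.0 means no smoothing).
    """
    smoothed = []
    prev = None
    for vec in embeddings:
        if prev is None:
            cur = list(vec)  # the first position has no history
        else:
            cur = [alpha * x + (1 - alpha) * p for x, p in zip(vec, prev)]
        smoothed.append(cur)
        prev = cur
    return smoothed
```

Each output position mixes the current embedding with a decaying summary of everything before it, which is how a moving average carries information across long sequences at linear cost.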

In Fall 2021, CMU is updating its NLP curriculum, and 11-747 "Neural Networks for NLP" is being repurposed into 11-711 "Advanced NLP", the flagship research-based NLP class 😃. More NLP fundamentals, still neural network methods. Stay tuned! (CMU students, please register!)

2021 version of CMU "Neural Networks for NLP" slides and videos are being posted in real time! Check it out for a comprehensive graduate-level class on NLP! New this year: assignment on implementing parts of your own NN toolkit.

1

19

222

This is a well-written overview! Also, for a higher-level, more philosophical take on search in generation models see my recent class (slides and video). I discuss the relationship between model, search, and output quality.

The 101 for text generation! 💪💪💪 This is an overview of the main decoding methods and how to use them super easily in Transformers with GPT2, XLNet, Bart, T5, ... It includes greedy decoding, beam search, top-k/nucleus sampling, ... By @PatrickPlaten

0

48

223
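Three of the decoding methods named above (greedy decoding, top-k sampling, and nucleus sampling) differ only in how they pick from the model's next-token distribution. The sketch below operates on a plain dictionary of token probabilities and is independent of any library; it is not the Transformers API, and all function names are hypothetical.

```python
import random


def greedy(probs):
    """Greedy decoding: always take the most probable token."""
    return max(probs, key=probs.get)


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def nucleus_filter(probs, top_p):
    """Keep the smallest set of top tokens whose mass reaches top_p, then renormalize."""
    kept, cumulative = [], 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def sample(probs, rng=random):
    """Draw one token according to the (filtered) distribution."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]
```

Top-k sampling is then `sample(top_k_filter(probs, k))` and nucleus sampling is `sample(nucleus_filter(probs, top_p))`, applied once per generated token.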

#acl2019nlp paper on "Beyond BLEU: Training NMT with Semantic Similarity" by Wieting et al. I like this because it shows 1) a nice use case for semantic similarity, 2) that we can/should optimize seq2seq models for something other than likelihood or BLEU!

5

58

220

Just released a new survey on prompting methods, which use language models to solve prediction tasks by providing them with a "prompt" like: "CMU is located in __". We worked really hard to make this well-organized and educational for both NLP experts and beginners, check it out!

What is prompt-based learning, and what challenges are there? Will it be a new paradigm or a way for human-PLMs communication? How does it connect with other research and how to position it in the evolution of the NLP research paradigm? We released a systematic survey and beyond

1

55

217