Costa Huang

@vwxyzjn

7K Followers · 9K Following · 403 Media · 2K Statuses

Prev: RL @allen_ai @huggingface. Built @cleanrl_lib.

Philadelphia, PA

Joined March 2013

🚀 Happy to share Tülu 3! We trained the model with actual RL: the model only receives rewards if its generations are verified to be correct (e.g., a correct math solution). ❤️ Check out our beautiful RL curves. Code is also available: a ~single-file PPO implementation that scales to 70B.
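A minimal sketch of what "rewards only if verified correct" can look like for math: a binary reward computed by comparing an extracted final answer against a reference answer. The function names and the extraction heuristic (take the last number in the generation) are hypothetical illustrations, not the Tülu 3 code.

```python
import re
from typing import Optional


def extract_final_answer(generation: str) -> Optional[str]:
    # Hypothetical heuristic: treat the last number in the text as the answer.
    numbers = re.findall(r"-?\d+(?:\.\d+)?", generation.replace(",", ""))
    return numbers[-1] if numbers else None


def verifiable_reward(generation: str, ground_truth: str) -> float:
    # Binary reward: 1.0 only if the extracted answer matches the reference, else 0.0.
    answer = extract_final_answer(generation)
    if answer is None:
        return 0.0
    return 1.0 if float(answer) == float(ground_truth) else 0.0
```

For example, `verifiable_reward("3 + 15 = 18, so the answer is 18.", "18")` returns `1.0`, while a generation ending in 17 gets `0.0`. Since the reward comes from a check rather than a learned reward model, the policy can only raise it by producing answers the checker accepts (so the checker's robustness matters).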

🤩 @astral `uv` installing deep-ep and deep-gemm together! Thanks @charliermarsh! Code in the thread.

Nice! If you don't own the PyPI-level server infra, then there is not much you can do about its limitations.

With pyx, we can solve these problems. And for me, that's the most exciting thing about it. By providing our own end-to-end infrastructure we can solve _so_ many more problems for users that used to be out-of-scope.

Check out Jason’s new work on RL tricks. Lots of ablation studies comparing popular techniques like Clip Higher 🤩.

Excited to share our #RL_for_LLM paper: "Part I: Tricks or Traps? A Deep Dive into RL for LLM Reasoning". We conducted a comprehensive analysis of RL techniques in the LLM domain! 🥳 Surprisingly, we found that using only 2 techniques can unlock the learning capability of LLMs. 😮
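For context on Clip Higher: as proposed in the DAPO paper, it decouples the lower and upper clipping bounds on PPO's probability ratio and raises only the upper one, so tokens whose probability should go up are clipped later. Below is a per-token sketch of the clipped surrogate in plain Python; the defaults `eps_low=0.2` and `eps_high=0.28` are illustrative assumptions.

```python
def clipped_surrogate(ratio: float, advantage: float,
                      eps_low: float = 0.2, eps_high: float = 0.28) -> float:
    """Per-token PPO clipped surrogate objective (to be maximized).

    ratio     = pi_new(token) / pi_old(token)
    advantage = estimated advantage for that token
    Standard PPO uses eps_low == eps_high; Clip Higher sets eps_high > eps_low.
    """
    clipped_ratio = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    # Pessimistic bound: take the smaller of the unclipped and clipped terms.
    return min(ratio * advantage, clipped_ratio * advantage)


# Symmetric clipping caps the objective at ratio 1.2 ...
print(clipped_surrogate(1.25, 1.0, eps_low=0.2, eps_high=0.2))
# ... while the raised upper bound still lets ratio 1.25 count.
print(clipped_surrogate(1.25, 1.0))
```

With a negative advantage it is the lower bound `1 - eps_low` that binds, which is why Clip Higher can leave it unchanged.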

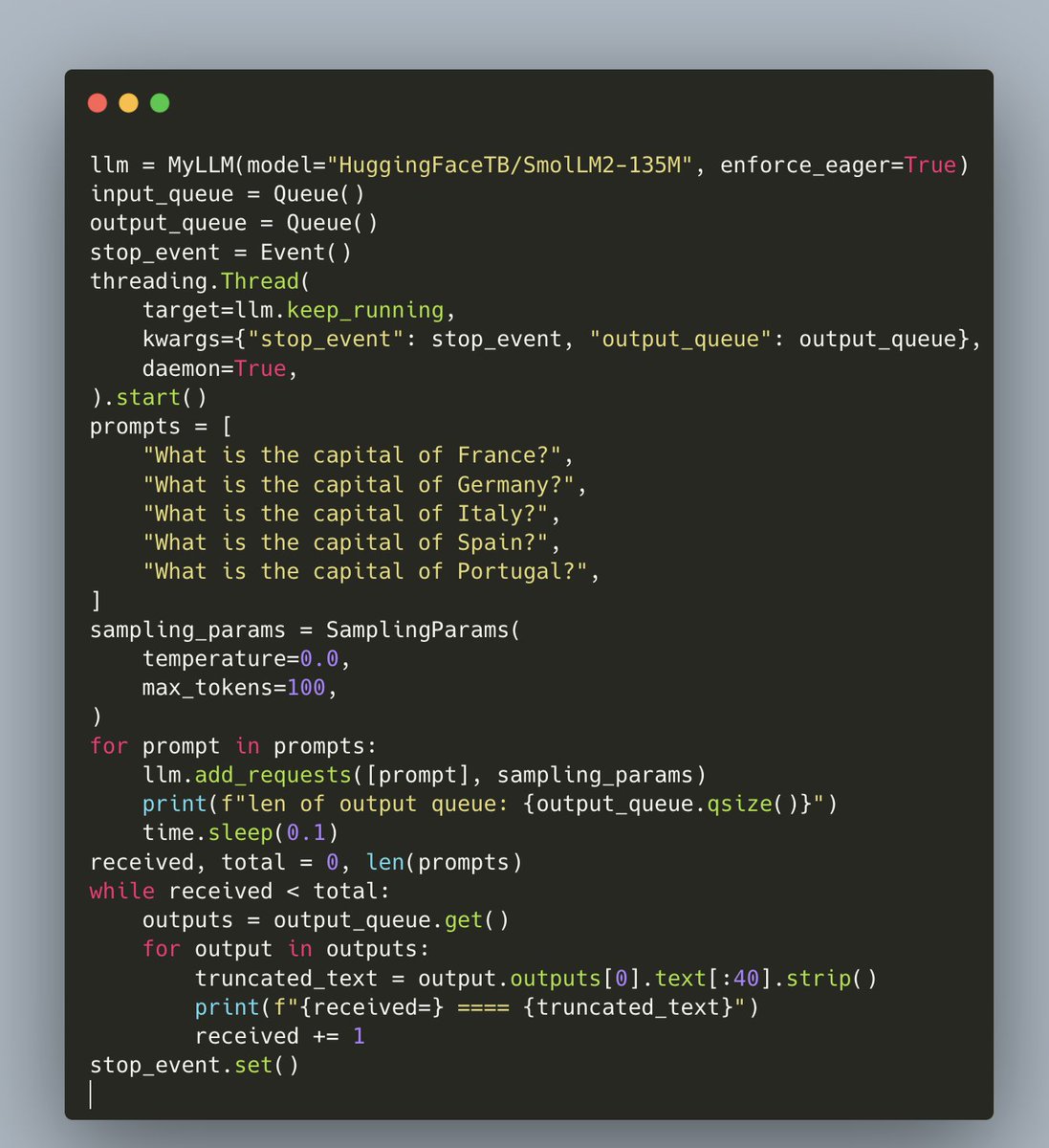

To demonstrate that @vllm_project is a hackable for-loop: you *can* add requests in the middle of generation while still batching properly.
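The idea can be illustrated with a toy, framework-free loop. This is not vLLM's API; `Request`, `fake_decode_step`, and `run` are hypothetical. Every iteration runs one batched decode step over all active requests, newly arrived requests join the batch between steps, and finished requests leave it immediately.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Request:
    request_id: str
    max_new_tokens: int
    generated: List[str] = field(default_factory=list)

    @property
    def finished(self) -> bool:
        return len(self.generated) >= self.max_new_tokens


def fake_decode_step(batch: List[Request]) -> List[str]:
    # Stand-in for one batched forward pass: one dummy token per active request.
    return [f"{r.request_id}-tok{len(r.generated)}" for r in batch]


def run(initial: List[Request], arrivals: Dict[int, List[Request]]) -> List[str]:
    """Decode until all requests finish; `arrivals` maps step -> requests arriving then."""
    active = list(initial)
    finished_order: List[str] = []
    step = 0
    while active or any(s >= step for s in arrivals):
        # New requests join the running batch between decode steps.
        active.extend(arrivals.get(step, []))
        if active:
            for req, token in zip(active, fake_decode_step(active)):
                req.generated.append(token)
            # Finished requests leave right away; the rest keep decoding together.
            finished_order += [r.request_id for r in active if r.finished]
            active = [r for r in active if not r.finished]
        step += 1
    return finished_order
```

Here request `b` arrives at step 1, joins the batch while `a` is still decoding, and finishes first: `run([Request("a", 3)], {1: [Request("b", 1)]})` returns `["b", "a"]`.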

RT @ChangJonathanC: While we wait for GPT-5 to drop, here is a FlexAttention tutorial for building a <1000 LoC vLLM from scratch. https://…

jonathanc.net

PyTorch FlexAttention tutorial: Building a minimal vLLM-style inference engine from scratch with paged attention
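For readers new to paged attention, the core bookkeeping fits in a few lines: the KV cache is carved into fixed-size physical blocks, and each sequence keeps a block table mapping its logical blocks to whichever physical blocks were free. This toy allocator is a hypothetical sketch of that idea only; it does not use FlexAttention and is not the tutorial's code.

```python
from typing import Dict, List, Tuple


class PagedKVAllocator:
    """Toy paged KV-cache bookkeeping (hypothetical class, for illustration)."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size                      # tokens per physical block
        self.free_blocks: List[int] = list(range(num_blocks))
        self.block_tables: Dict[str, List[int]] = {}      # seq_id -> physical block ids
        self.seq_lens: Dict[str, int] = {}                # seq_id -> tokens stored

    def append_token(self, seq_id: str) -> Tuple[int, int]:
        """Reserve a KV slot for one new token; return (physical_block, offset)."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.seq_lens.get(seq_id, 0)
        if length % self.block_size == 0:                 # last block full, or none yet
            if not self.free_blocks:
                raise RuntimeError("out of KV-cache blocks")
            table.append(self.free_blocks.pop(0))
        self.seq_lens[seq_id] = length + 1
        return table[length // self.block_size], length % self.block_size

    def free(self, seq_id: str) -> None:
        """Return every block of a finished sequence to the free list."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)
```

With `block_size=2`, a sequence's third token lands in a second physical block, and `free()` hands a finished sequence's blocks back for reuse, so no sequence needs a contiguous region reserved for its maximum length.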

RT @iScienceLuvr: Announcement 📢 We're hiring at @SophontAI for a variety of positions! We're looking for exceptional, high-agency ML res…

RT @valentina__py: 💡Beyond math/code, instruction following with verifiable constraints is suitable to be learned with RLVR. But the set of….
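The RT is cut off, but the idea of a "verifiable constraint" is easy to make concrete: each constraint is a programmatic check on the response, and the RLVR reward is granted only when all checks pass. The three checkers below are hypothetical examples chosen for illustration, not the constraint set from the work being announced.

```python
from typing import Callable, List

Check = Callable[[str], bool]


def max_words(limit: int) -> Check:
    # Hypothetical constraint: at most `limit` whitespace-separated words.
    return lambda response: len(response.split()) <= limit


def includes_keyword(keyword: str) -> Check:
    # Hypothetical constraint: the keyword appears somewhere (case-insensitive).
    return lambda response: keyword.lower() in response.lower()


def all_lowercase() -> Check:
    # Hypothetical constraint: the response contains no uppercase letters.
    return lambda response: response == response.lower()


def constraint_reward(response: str, checks: List[Check]) -> float:
    # Binary reward: 1.0 only if every constraint is verified, else 0.0.
    return 1.0 if all(check(response) for check in checks) else 0.0


checks = [max_words(5), includes_keyword("rl"), all_lowercase()]
print(constraint_reward("rl is fun", checks))
print(constraint_reward("RL is fun", checks))
```

The first call returns `1.0`; the second returns `0.0` because it fails the lowercase check.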

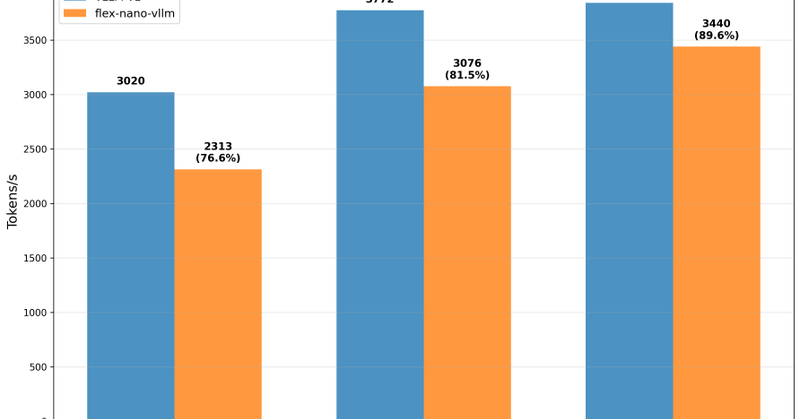

I am pretty amazed that pure Python code can match vLLM's performance 🤯.

github.com

Nano vLLM (GeeeekExplorer/nano-vllm)

Weixun's team at Alibaba is presenting a new RL framework for LLM training. It's super nice that they included training curves on Qwen3-30B-A3B-base! The roll looks yummy 😋

🚀 Introducing ROLL: An Efficient and User-Friendly RL Training Framework for Large-Scale Learning! 🔥 Efficient, Scalable & Flexible – Train 200B+ models with 5D parallelism (TP/PP/CP/EP/DP), seamless vLLM/SGLang switching, async multi-env rollout for maximum RL throughput!

RT @interconnectsai: How I Write. And therein how I think. And how AI impacts it.

interconnects.ai

Therein how I think, how AI impacts it, and how writing reflects upon AI progress.

RT @cursor_ai: A conversation on the optimal reward for coding agents, infinite context models, and real-time RL

RT @PeterHndrsn: The next ~1-4 years will be taking the 2017-2020 years of Deep RL and scaling up: exploration, generalization, long-horizo….
