Quoc Le

@quocleix

Followers

54K

Following

806

Media

70

Statuses

451

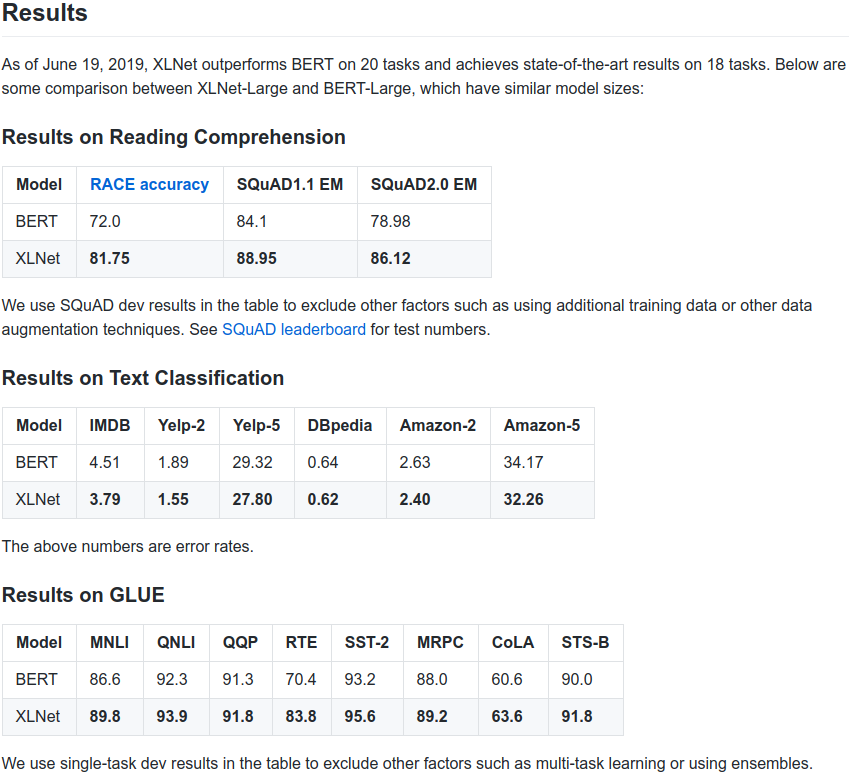

XLNet: a new pretraining method for NLP that significantly improves upon BERT on 20 tasks (e.g., SQuAD, GLUE, RACE). arxiv: github (code + pretrained models): with Zhilin Yang, @ZihangDai, Yiming Yang, Jaime Carbonell, @rsalakhu

19

680

2K

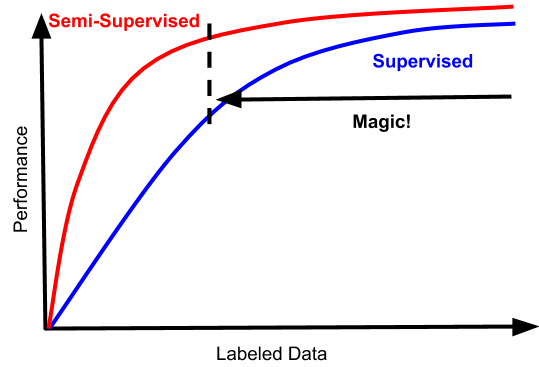

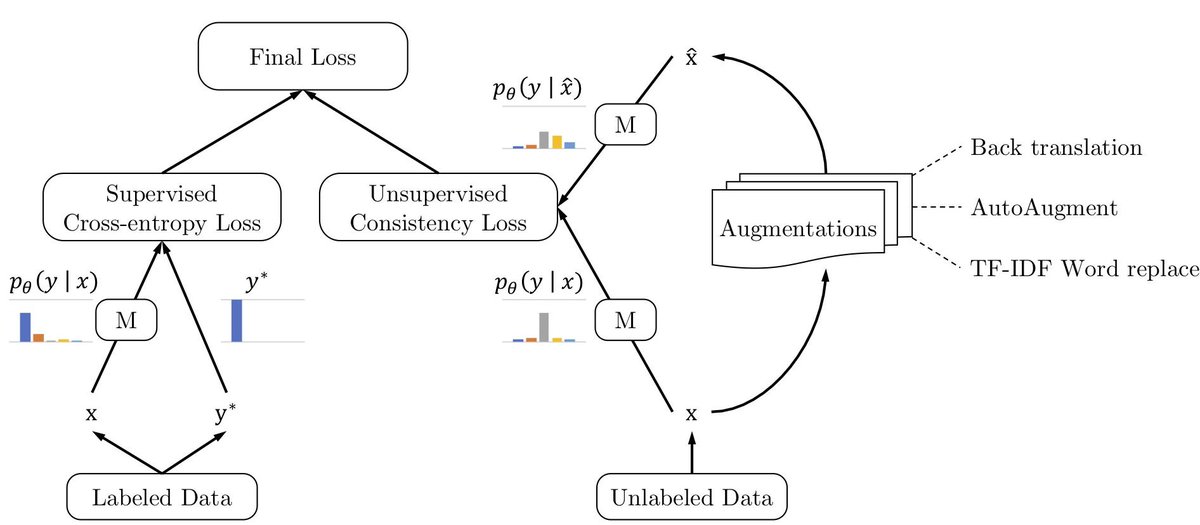

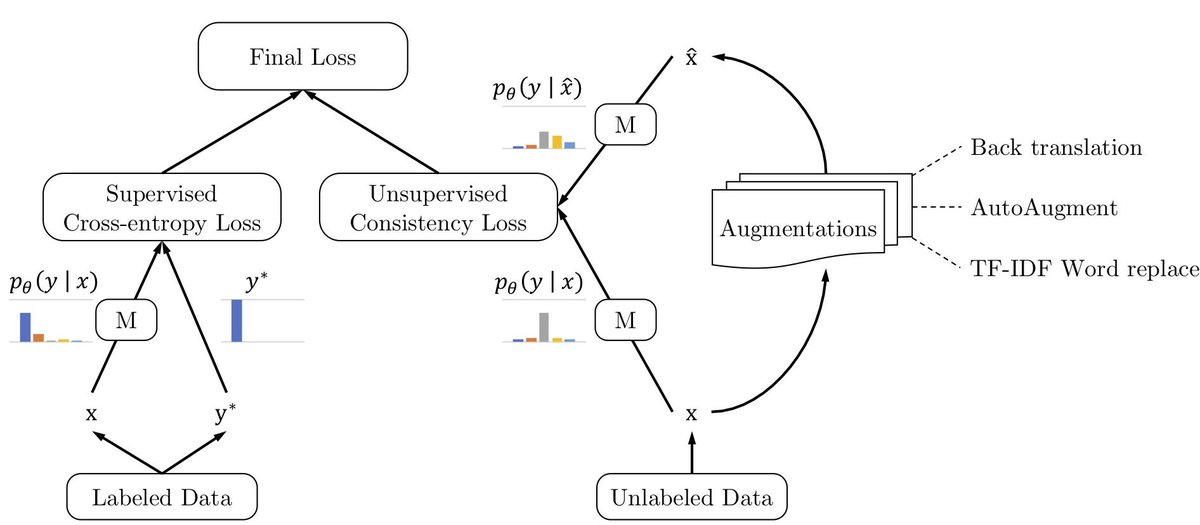

Data augmentation is often associated with supervised learning. We find *unsupervised* data augmentation works better. It combines well with transfer learning (e.g. BERT) and improves everything when datasets have a small number of labeled examples. Link:

Introducing UDA, our new work on "Unsupervised data augmentation" for semi-supervised learning (SSL) with Qizhe Xie, Zihang Dai, Eduard Hovy, & @quocleix. SOTA results on IMDB (with just 20 labeled examples!), SSL Cifar10 & SVHN (30% error reduction)!

3

153

605

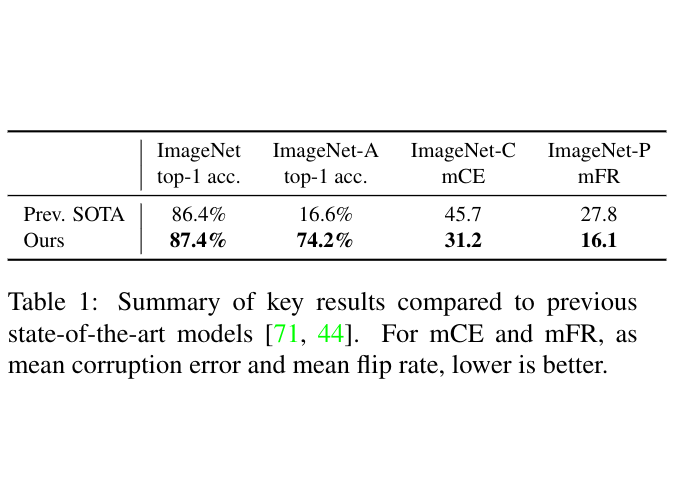

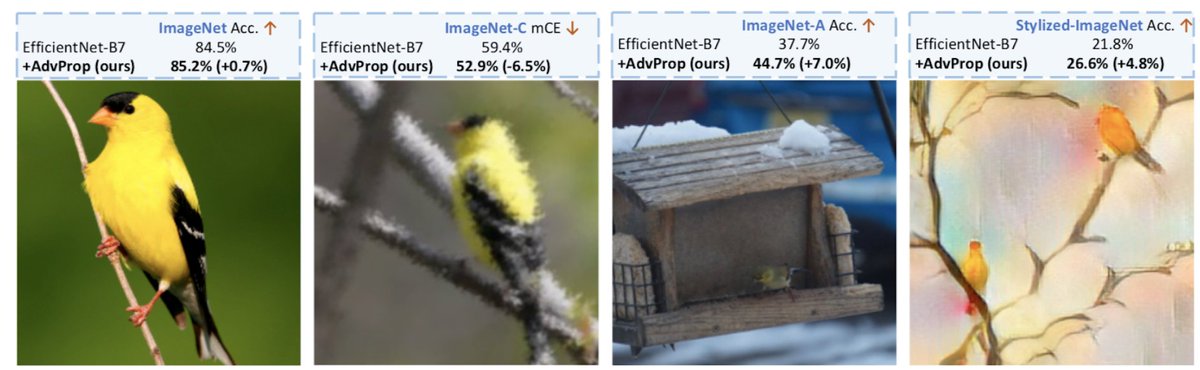

AdvProp: One weird trick to use adversarial examples to reduce overfitting. Key idea is to use two BatchNorms, one for normal examples and another one for adversarial examples. Significant gains on ImageNet and other test sets.

Can adversarial examples improve image recognition? Check out our recent work: AdvProp, achieving ImageNet top-1 accuracy 85.5% (no extra data) with adversarial examples! Arxiv: Checkpoints:

2

146

529
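The "two BatchNorms" idea in the post above can be illustrated with a small sketch. This is a toy in plain Python, not the AdvProp authors' implementation: the class names `BatchNorm1D` and `DualBatchNorm`, the `adversarial` flag, and the momentum value are all assumptions made for illustration. It only shows how clean and adversarial batches can keep separate normalization statistics.

```python
import math


class BatchNorm1D:
    """Minimal batch normalization over a list of scalars (no learned scale or shift)."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum = momentum
        self.eps = eps
        self.running_mean = 0.0
        self.running_var = 1.0

    def __call__(self, batch):
        mean = sum(batch) / len(batch)
        var = sum((x - mean) ** 2 for x in batch) / len(batch)
        # Track running statistics separately for each instance.
        self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
        self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        return [(x - mean) / math.sqrt(var + self.eps) for x in batch]


class DualBatchNorm:
    """Hypothetical wrapper: one BatchNorm for clean batches, another for adversarial ones."""

    def __init__(self):
        self.clean_bn = BatchNorm1D()
        self.adv_bn = BatchNorm1D()

    def __call__(self, batch, adversarial):
        bn = self.adv_bn if adversarial else self.clean_bn
        return bn(batch)


layer = DualBatchNorm()
clean_out = layer([1.0, 2.0, 3.0], adversarial=False)
adv_out = layer([10.0, 20.0, 30.0], adversarial=True)
```

Because each branch updates only its own running mean and variance, statistics from adversarial batches never mix into the ones accumulated from clean batches.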

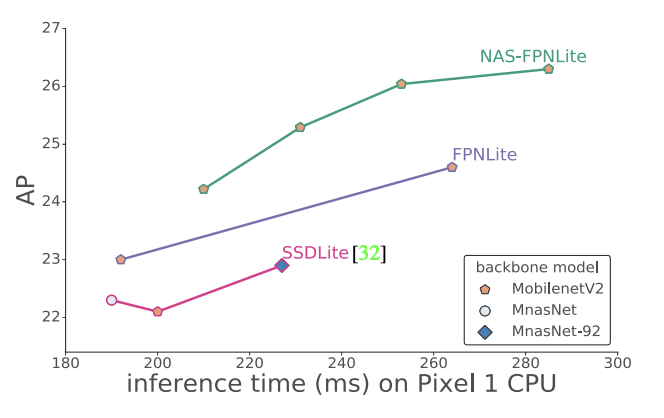

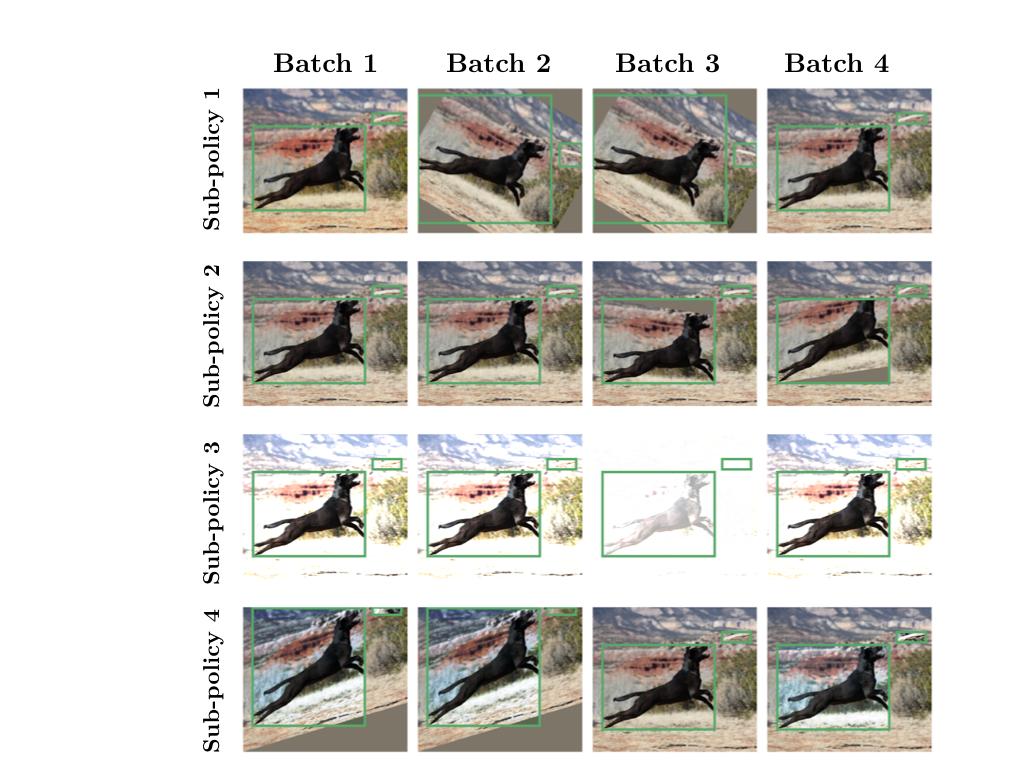

We opensourced AutoAugment strategy for object detection. This strategy significantly improves detection models in our benchmarks. Please try it on your problems. Code: Paper: More details & results 👇

Data augmentation is even more crucial for detection. We present AutoAugment for object detection, achieving SOTA on COCO validation set (50.7 mAP). Policy transfers to different models & datasets. Paper: Code: details in thread.

4

144

454

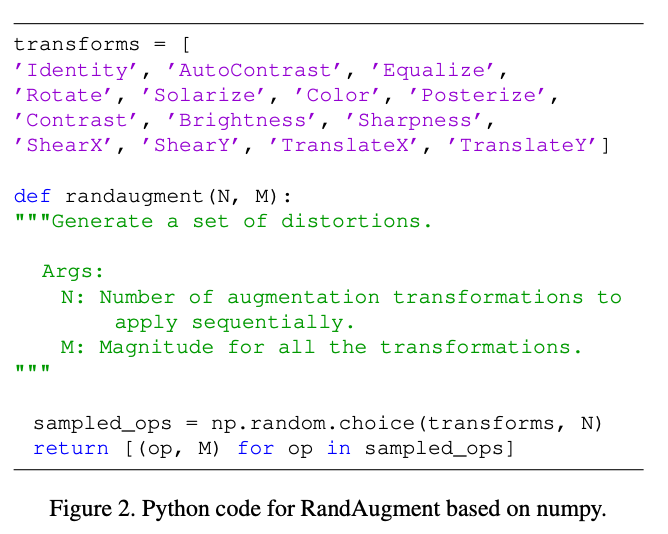

RandAugment was one of the secret sauces behind Noisy Student that I tweeted last week. Code for RandAugment is now opensourced.

*New paper* RandAugment: a new data augmentation. Better & simpler than AutoAugment. Main idea is to select transformations at random, and tune their magnitude. It achieves 85.0% top-1 on ImageNet. Paper: Code:

3

77

412
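The main idea stated in the RandAugment post (select transformations at random, and tune their magnitude) can be sketched as follows. This is a toy under stated assumptions, not the released RandAugment code: the "image" is a flat list of pixel intensities, and the transformations `brighten`, `darken`, `invert`, and `identity`, along with the parameter names `num_ops` and `magnitude`, are hypothetical stand-ins.

```python
import random


# Hypothetical toy transformations on a list of pixel intensities in [0, 255].
def brighten(pixels, magnitude):
    return [min(255, p + 10 * magnitude) for p in pixels]


def darken(pixels, magnitude):
    return [max(0, p - 10 * magnitude) for p in pixels]


def invert(pixels, magnitude):
    # This transformation has no strength setting, so magnitude is ignored.
    return [255 - p for p in pixels]


def identity(pixels, magnitude):
    return list(pixels)


TRANSFORMS = [brighten, darken, invert, identity]


def rand_augment(pixels, num_ops, magnitude, rng):
    """Apply num_ops transformations chosen uniformly at random, all sharing one magnitude."""
    out = list(pixels)
    for _ in range(num_ops):
        op = rng.choice(TRANSFORMS)
        out = op(out, magnitude)
    return out


rng = random.Random(0)
augmented = rand_augment([0, 64, 128, 255], num_ops=2, magnitude=3, rng=rng)
```

In this sketch the whole policy is controlled by two numbers, `num_ops` and `magnitude`, which is what makes tuning it cheap compared with searching over a learned policy.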

Congratulations @geoffreyhinton. The celebration today brought back many fond memories of learning from and collaborating with Geoff. His passion for research is another level and has made a great impact on the career of researchers around him, including myself. Every time he.

Attending @geoffreyhinton’s retirement celebration at Google with old friends. Thank you for everything you’ve done for AI! @JeffDean @quocleix

5

12

359

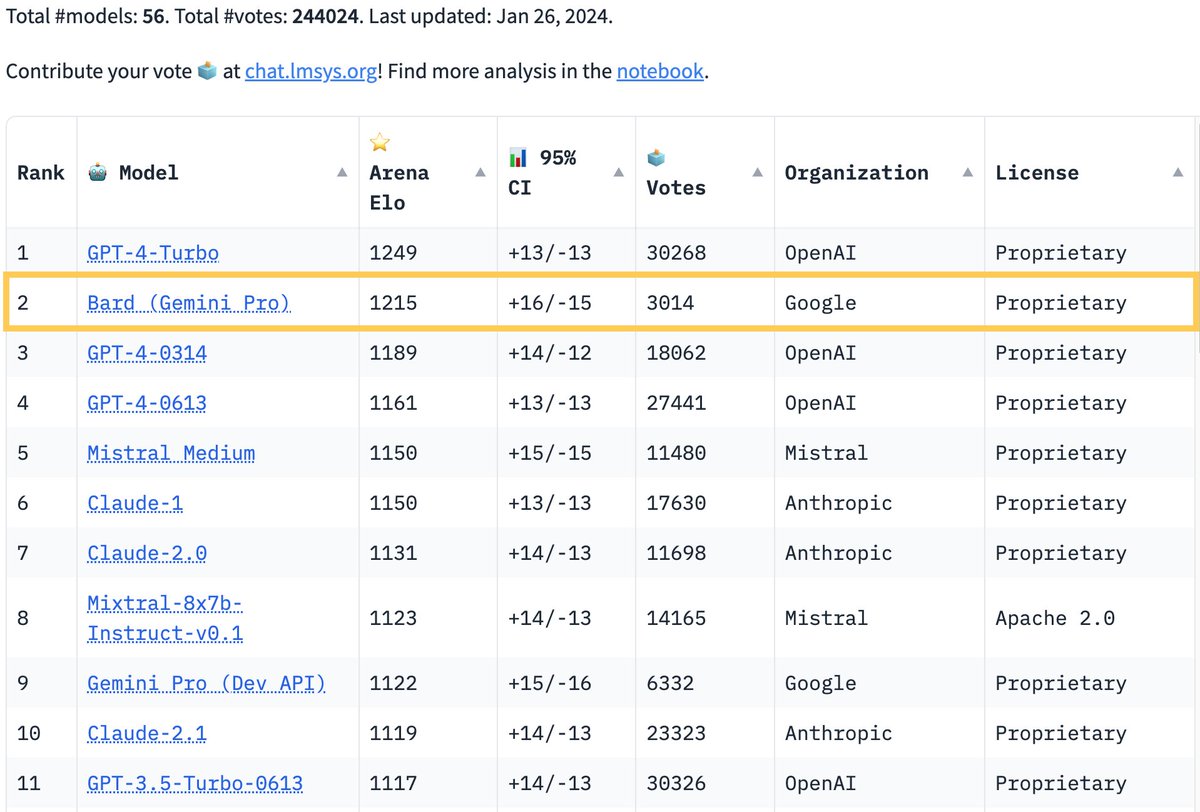

Bard (free) with Gemini Pro is now #2 on lmsys chatbot arena, surpassing GPT-4. I use it a lot for email and writing purposes and its responses are much better since the first launch. Give it a try at

🔥Breaking News from Arena. Google's Bard has just made a stunning leap, surpassing GPT-4 to the SECOND SPOT on the leaderboard! Big congrats to @Google for the remarkable achievement! The race is heating up like never before! Super excited to see what's next for Bard + Gemini

14

32

308

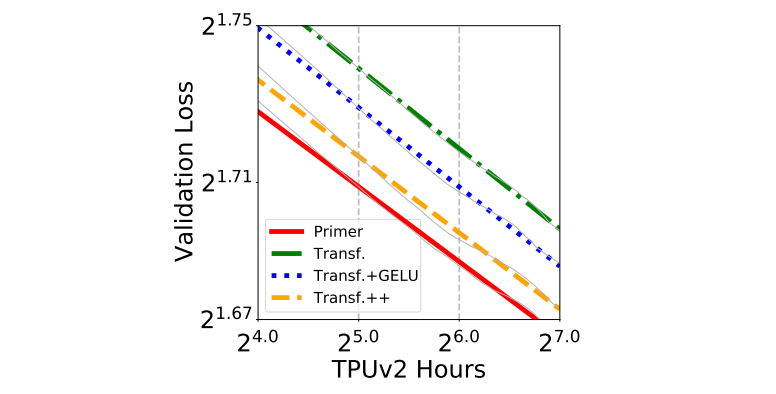

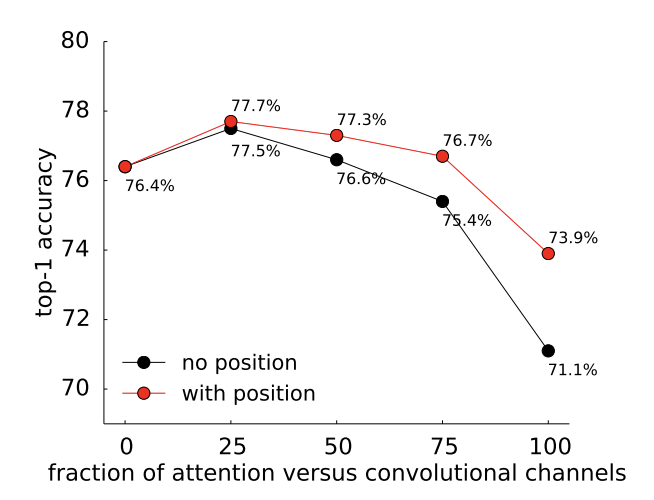

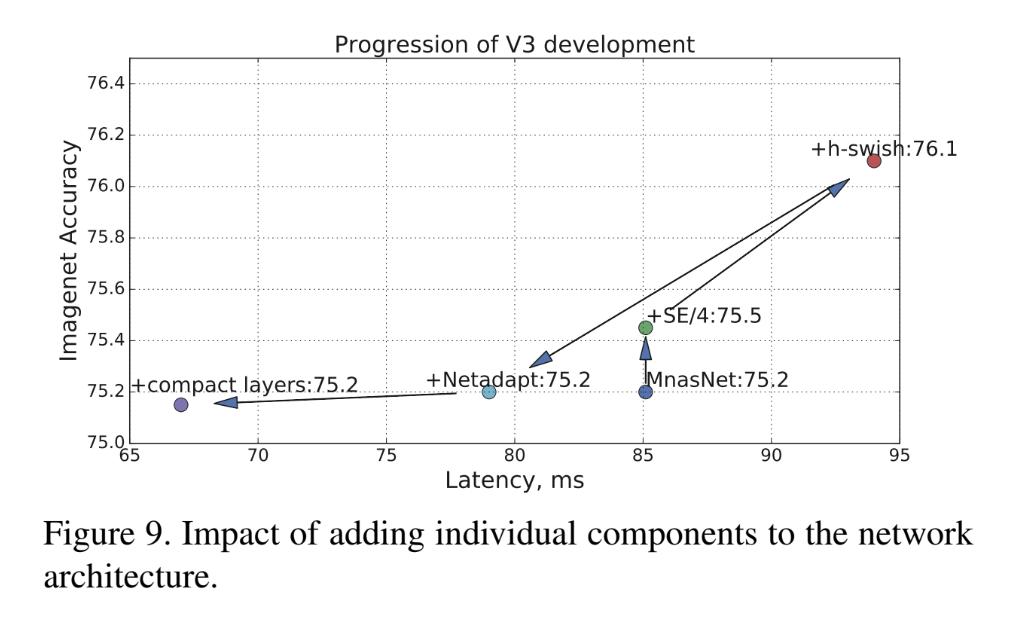

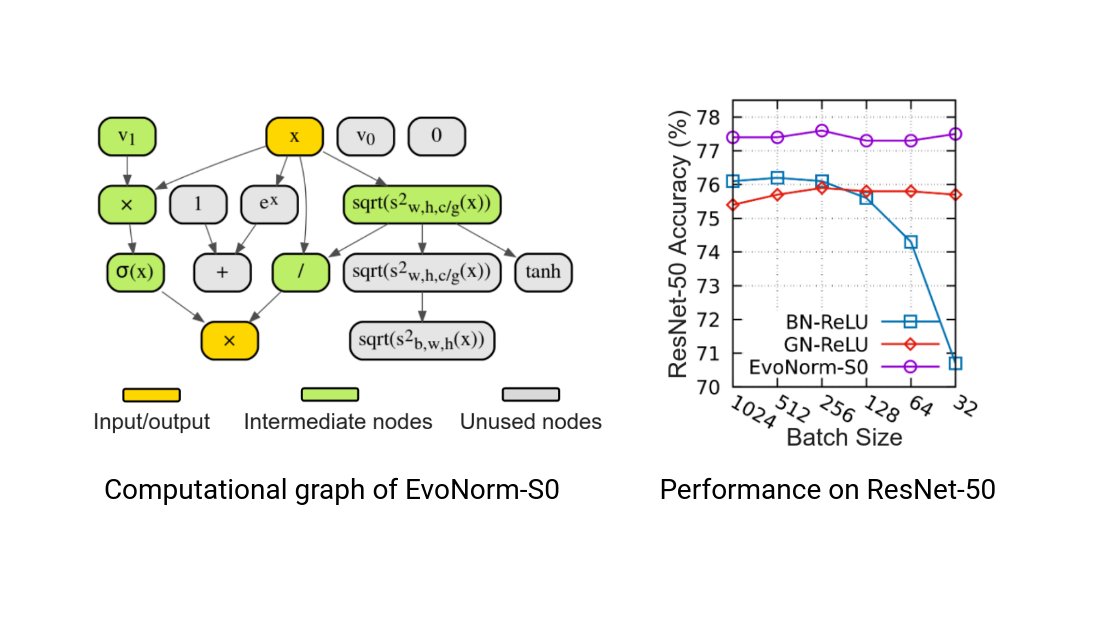

Cool results from our collaboration with colleagues at @DeepMind on searching for new layers as alternatives for BatchNorm-ReLU. Excited with the potential use of AutoML for discovering novel ML concepts from low level primitives.

New paper: Evolving Normalization-Activation Layers. We use evolution to design new layers called EvoNorms, which outperform BatchNorm-ReLU on many tasks. A promising use of AutoML to discover fundamental ML building blocks. Joint work with @DeepMind

2

77

309

Amazing achievement: Our AI system at Google DeepMind just achieved the equivalent of a silver medal at the International Mathematical Olympiad (#IMO2024). This is a major milestone for AI in mathematics. Very proud to have been a part of it!

We’re presenting the first AI to solve International Mathematical Olympiad problems at a silver medalist level. 🥈 It combines AlphaProof, a new breakthrough model for formal reasoning, and AlphaGeometry 2, an improved version of our previous system. 🧵

5

32

275

Evolved Transformer is now opensourced in Tensor2Tensor:

We used architecture search to improve Transformer architecture. Key is to use evolution and seed initial population with Transformer itself. The found architecture, Evolved Transformer, is better and more efficient, especially for small size models. Link:

3

73

280

Waymo's blogpost about the use of automated data augmentation methods for self-driving cars (AutoAugment, RandAugment, Progressive Population Based Augmentation - PPBA). PPBA is "... up to 10 times more data efficient than training nets without augmentation".

Our newest research in collaboration with our @googleAI colleagues will allow us to train better machine learning models with less data and improve perception tasks for the Waymo Driver.

0

58

273

Exciting new AutoML lineup for @googlecloud: AutoML Tables & Mobile Vision. Collaboration between Google Brain + AI + Cloud. Some benchmark data are below. Google Brain AutoML team will also participate in a Kaggle competition tomorrow. This will be fun!

9

67

254

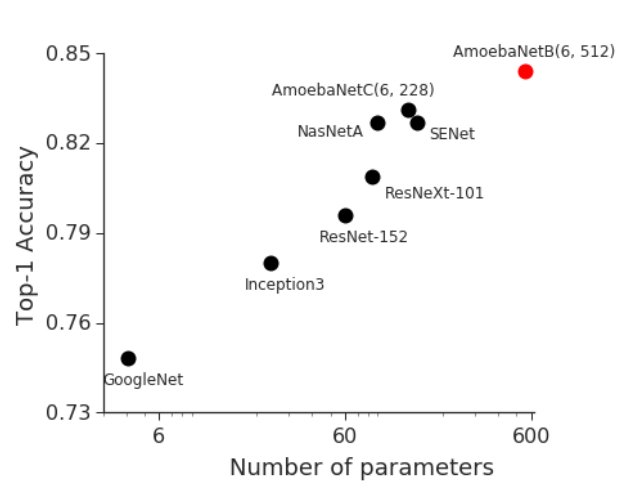

We studied transfer learning from ImageNet to other datasets. Finding: better ImageNet architectures tend to work better on other datasets too. Surprise: pretraining on ImageNet dataset sometimes doesn't help very much. More info:

We updated our paper "Do Better ImageNet Models Transfer Better?" In v2, we show that regularization settings for ImageNet training matter a lot for transfer learning on fixed features. ImageNet accuracy now correlates with transfer acc in all settings.

3

55

228

Gemini 2.5 Pro is now #1 across all categories on Arena leaderboard. Been playing with it and it's a really amazing model.

BREAKING: Gemini 2.5 Pro is now #1 on the Arena leaderboard - the largest score jump ever (+40 pts vs Grok-3/GPT-4.5)! 🏆 Tested under codename "nebula"🌌, Gemini 2.5 Pro ranked #1🥇 across ALL categories and UNIQUELY #1 in Math, Creative Writing, Instruction Following, Longer

7

14

189

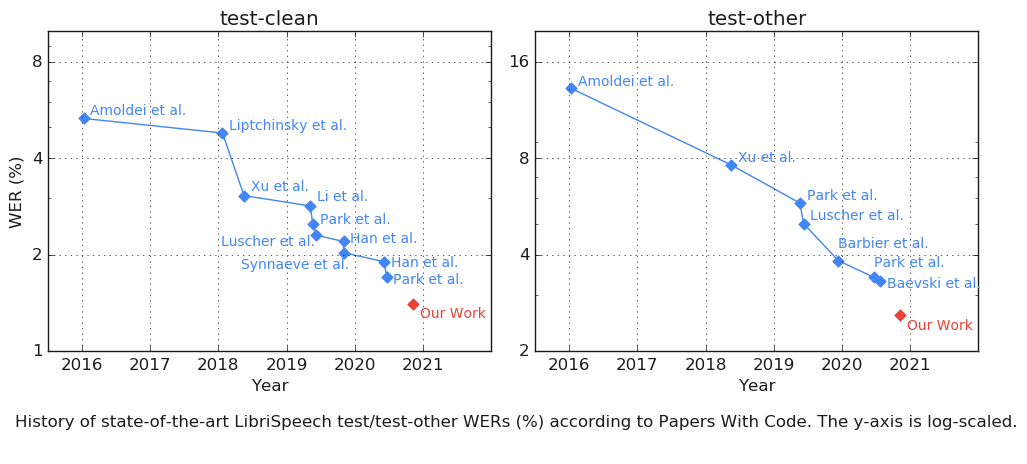

Wanted to apply AutoAugment to speech, but a handcrafted augmentation policy already improves SOTA. Idea: randomly drop out certain time & frequency blocks, and warp input spectrogram. Results: state-of-art on LibriSpeech 960h & Switchboard 300h. Link:

Automatic Speech Recognition (ASR) struggles in the absence of an extensive volume of training data. We present SpecAugment, a new approach to augmenting audio data that treats it as a visual problem rather than an audio one. Learn more at →

0

37

151
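The masking part of the idea in the SpecAugment post (randomly drop out certain time and frequency blocks) can be sketched in plain Python. This is an illustration only, not the published implementation: the spectrogram is represented as a list of frequency rows, the function name `mask_blocks` and its parameters are hypothetical, one block of each kind is masked, and the time-warping step mentioned in the post is left out.

```python
import random


def mask_blocks(spec, max_freq_width, max_time_width, rng):
    """Return a copy of spec with one random frequency band and one random time band zeroed.

    spec is a list of frequency rows, each a list of values over time.
    """
    n_freq = len(spec)
    n_time = len(spec[0])
    out = [row[:] for row in spec]

    # Frequency mask: zero a block of consecutive frequency rows.
    f_width = rng.randint(0, max_freq_width)
    f_start = rng.randint(0, n_freq - f_width)
    for f in range(f_start, f_start + f_width):
        out[f] = [0.0] * n_time

    # Time mask: zero a block of consecutive time steps in every row.
    t_width = rng.randint(0, max_time_width)
    t_start = rng.randint(0, n_time - t_width)
    for row in out:
        for t in range(t_start, t_start + t_width):
            row[t] = 0.0
    return out


rng = random.Random(0)
spec = [[1.0] * 10 for _ in range(8)]
masked = mask_blocks(spec, max_freq_width=3, max_time_width=4, rng=rng)
```

The sketch assumes `max_freq_width` and `max_time_width` do not exceed the spectrogram's dimensions; the input is copied, so the original spectrogram is left untouched.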

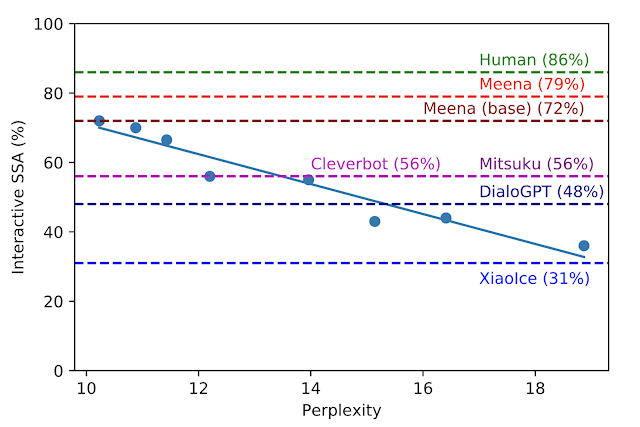

@xpearhead @lmthang My favorite conversation is below. The Hayvard pun was funny but I totally missed the steer joke at the end until it was pointed out today by @Blonkhart

5

20

142

For me, ELMo is my favorite work of 2018. Together with ULMFit, CoVE, GPT, BERT and others, they represent a breakthrough. I just mean that these ideas have a history, and want to bring up some history from my part. Also check out some comments in the thread for related works.

Peters et al 2017 and 2018 (ELMo) get the credit for the discoveries of 1) Using language models for transfer learning and 2) Embedding words through a language model ("contextualized word vectors"). ELMo is great, but these methods were proposed earlier. (1/3).

0

15

136

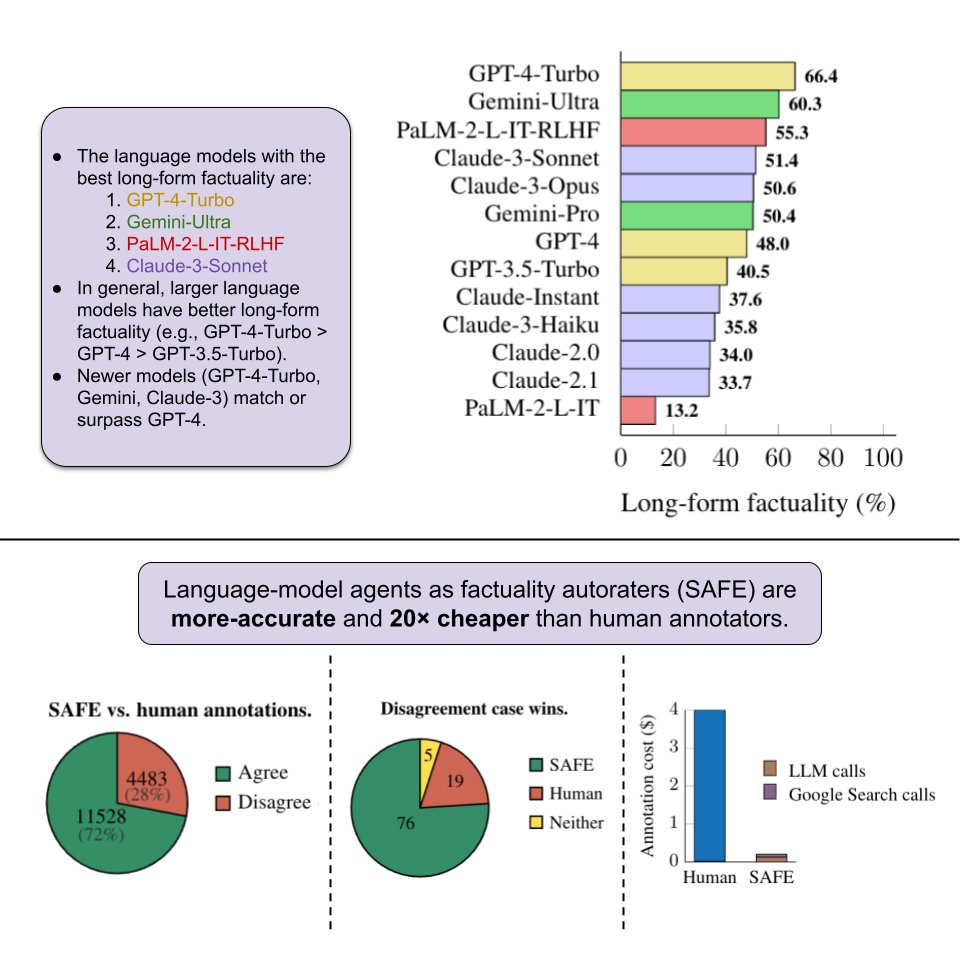

Our new work on evaluating and benchmarking long-form factuality. We provide a new dataset, an evaluation method, an aggregation metric that accounts for both precision and recall, and an analysis of thirteen popular LLMs (including Gemini, GPT, and Claude). We’re also.

New @GoogleDeepMind+@Stanford paper! 📜 How can we benchmark long-form factuality in language models? We show that LLMs can generate a large dataset and are better annotators than humans, and we use this to rank Gemini, GPT, Claude, and PaLM-2 models.

2

20

137

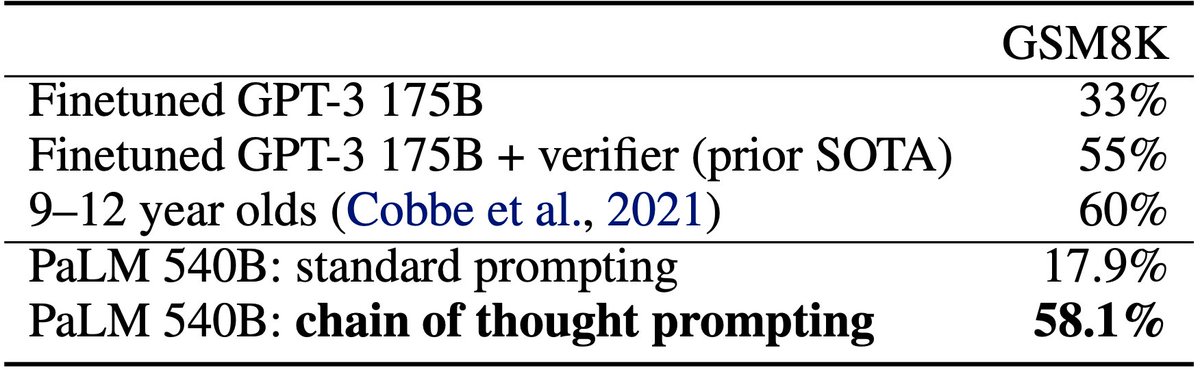

You should spend more compute at test time.

Do you like LLMs? Do you also like for loops? Then you’ll love our new paper! We scale inference compute through repeated sampling: we let models make hundreds or thousands of attempts when solving a problem, rather than just one. By simply sampling more, we can boost LLM

2

9

109
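The "for loop" in the repeated-sampling post above can be sketched as below. This is a minimal illustration, not the paper's code: `sample_fn` stands in for a call to a language model, `verify_fn` for some way of checking an attempt (an assumption, since the post does not say how attempts are selected), and `coverage` assumes attempts are independent with a fixed per-attempt success probability.

```python
import random


def solve_with_repeated_sampling(sample_fn, verify_fn, num_samples):
    """Draw up to num_samples attempts and return the first one that passes the check, else None."""
    for _ in range(num_samples):
        attempt = sample_fn()
        if verify_fn(attempt):
            return attempt
    return None


def coverage(p_single, k):
    """Probability that at least one of k independent attempts succeeds."""
    return 1.0 - (1.0 - p_single) ** k


# Hypothetical stand-in for a model: guesses an integer from 0 to 99.
rng = random.Random(0)


def toy_model():
    return rng.randrange(100)


def is_correct(answer):
    return answer == 42


result = solve_with_repeated_sampling(toy_model, is_correct, num_samples=1000)
```

Under the independence assumption, a per-attempt success rate of 1% gives a chance of at least one correct attempt of roughly 63% after 100 samples, which is the kind of gain that sampling more is meant to capture.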

Since our work on "Semi-supervised sequence learning", ELMo, BERT and others have shown changes in the algorithm give big accuracy gains. But now given these nice results with a vanilla language model, it's possible that a big factor for gains can come from scale. Exciting!

We've trained an unsupervised language model that can generate coherent paragraphs and perform rudimentary reading comprehension, machine translation, question answering, and summarization — all without task-specific training:

0

17

119

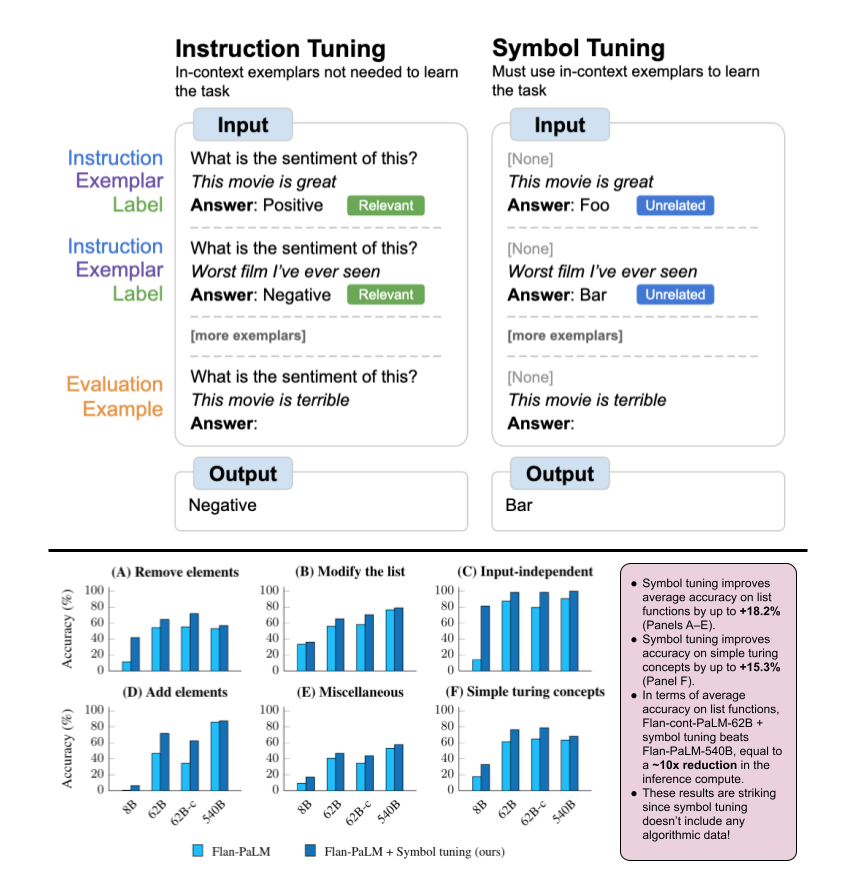

Cool new research from our team @Google! Symbol tuning is a new finetuning method that allows language models to better learn input–label mappings in-context.

New @GoogleAI+@Stanford paper! 📜 Symbol tuning is a simple method that improves in-context learning by emphasizing input–label mappings. It improves robustness to prompts without instructions/relevant labels and boosts performance on algorithmic tasks.

2

12

100

Introducing Natural Questions, a new dataset for Q&A research. Many questions in the dataset are not yet answered well by Google: where does the energy in a nuclear explosion come from? when are hops added to the brewing process? The dataset is still difficult for SoTA models.

Introducing Natural Questions, a new, large-scale corpus and challenge for training and evaluating open-domain question answering systems, and the first to replicate the end-to-end process in which people find answers to questions. Learn more at ↓

0

20

105

Introducing MAPO, a policy gradient method augmented with memory of good trajectories to make better updates. Well suited for generating programs to query databases. Good accuracies on WikiTableQuestions and WikiSQL. Video: #NeurIPS2018

1

27

97

Update from #KaggleDays , 5 hours into the competition and Google AutoML still maintains its lead. Three hours to go (five hours since I took the pictures).

4

11

94

Excited to see Gemini #1 on lmsys. Nice ELO of 1300 :-).

Exciting News from Chatbot Arena! @GoogleDeepMind's new Gemini 1.5 Pro (Experimental 0801) has been tested in Arena for the past week, gathering over 12K community votes. For the first time, Google Gemini has claimed the #1 spot, surpassing GPT-4o/Claude-3.5 with an impressive

1

2

81

Blog post about our collaboration to automate the design of machine learning models for @Waymo 's perception problems.

Our researchers have teamed up with @GoogleAI to put cutting-edge AutoML research into practice by automatically generating neural nets for our self-driving cars. The results? Faster and more accurate nets for our vehicles.

0

14

84

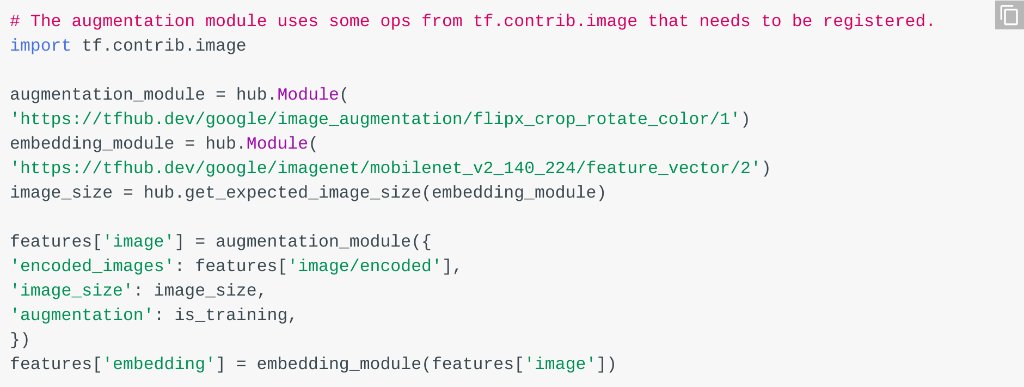

Auto-Augment policies are now opensourced in TF-Hub.

Image augmentation lets you get the most of your dataset. We released state-of-the-art AutoAugment Modules on #TFHub allowing you to train better image models with less data! #TensorFlowHub #GoogleAI. Check it out here →

0

15

83

@Miles_Brundage @emilymbender @timnitGebru Oops, thanks for pointing out. We had some text discussing Bender et al. in an earlier draft, but it looks like an editing pass to rework some text from a few weeks ago accidentally dropped that. We'll upload a new version soon with that discussion restored.

9

7

70

@deliprao We congratulate Tomas on winning the award. Regarding seq2seq, there are inaccuracies in his account. In particular, we all recall very specifically that he did not suggest the idea to us, and was in fact highly skeptical when we shared the end-to-end translation idea with him.

4

1

73

Asking LLMs to take a step back improves their ability to reason and answer highly specific questions.

Introducing 🔥 ‘Step back prompting’ 🔥 fueled by the power of abstraction. Joint work with the awesome collaborators at @GoogleDeepMind : @HuaixiuZheng , @xinyun_chen_ , @HengTze , @edchi , @quocleix , @denny_zhou. LLMs struggle to answer specific questions such as: “Estella

1

14

66

This work continues our efforts on semi-supervised learning such as UDA: MixMatch: FixMatch: Noisy Student: etc. Joint work with @hieupham789 @QizheXie @ZihangDai.

1

11

69

A weakness of LLMs is that they don’t know recent events well. This is nice work from Tu developing a benchmark (FreshQA) to measure factuality of recent events, and a simple method to improve search integration for better performance on the benchmark.

🚨 New @GoogleAI paper: 🤖 LLMs are game-changers, but can they help us navigate a constantly changing world? 🤔 As of now, our work shows that LLMs, no matter their size, struggle when it comes to fast-changing knowledge & false premises. 📰: 👇

1

7

48

@xpearhead @lmthang @Blonkhart I had another conversation with Meena just now. It's not as funny and I don't understand the first answer. But the replies to the next two questions are quite funny.

1

9

47