Matthew Leavitt

@leavittron

Followers: 2,232 | Following: 786 | Media: 185 | Statuses: 2,360

Chief Science Officer, Co-Founder @datologyai. Former: Head of Data Research @MosaicML; FAIR. 🧠 and 🤖 intelligence // views are from nowhere

The Bay

Joined March 2011


Pinned Tweet

The next 10x in deep learning efficiency gains are going to come from intelligent intervention on training data. But tools for automated data curation at scale didn’t exist, until now. I’m so excited to announce that I’ve co-founded @DatologyAI, with @arimorcos and @hurrycane


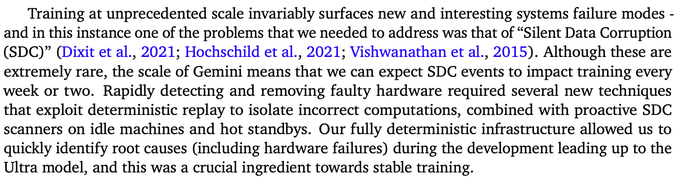

By now you may have seen some hubbub about

@MosaicML

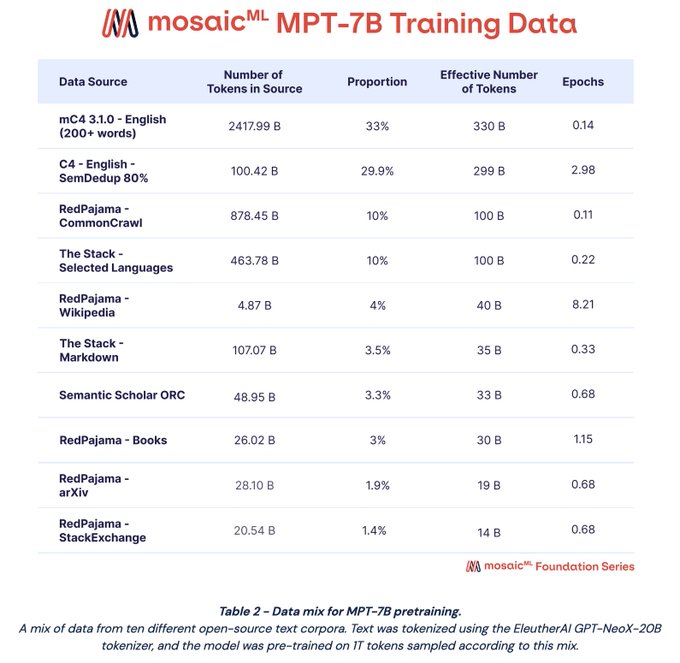

’s MPT-7B series of models: MPT-7B base, MPT-7B-Instruct, MPT-7B-Chat, and MPT-7B-StoryWriter-65k+. These models were pretrained on the same 1T token data mix. In this 🧵I break down the decisions behind our pretraining data mix


It seems likely to me that Mistral 7B's quality comes from its data. You know, the thing they provide exactly zero information about. The sliding window attention is a red herring.


s/o to @danielking36 for the exceptional title. We also considered "Training on the test set is all you need", "The Unreasonable Effectiveness of Training on the Test Set", and "Intriguing Properties of Training on Test Data"


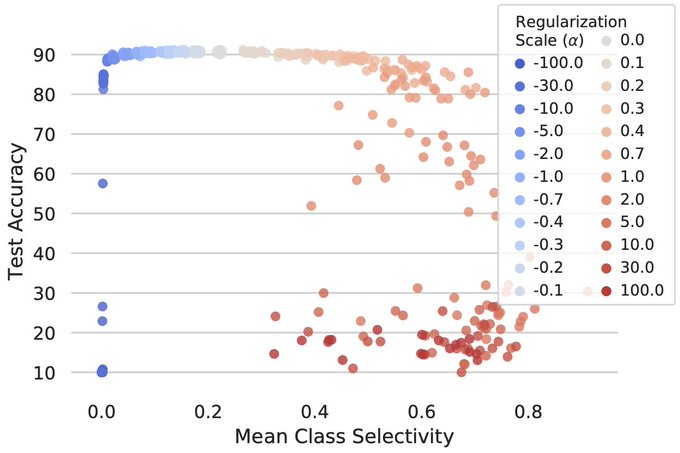

Class selectivity is often used to interpret the function of individual neurons. @arimorcos and I investigated whether it’s actually necessary and/or sufficient for deep networks to function properly. Spoiler: it’s mostly neither. (1/10)

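Class selectivity is commonly quantified per unit with a selectivity index. The index compares the unit's mean activation for its most-activating class (mu_max) with its mean activation averaged over all other classes (mu_rest), as (mu_max - mu_rest) / (mu_max + mu_rest + eps). Below is a minimal pure-Python sketch of that commonly used definition. It assumes non-negative (e.g. post-ReLU) activations for a single unit. The function name and the default eps are illustrative choices, not taken from the paper's code.

```python
from collections import defaultdict


def class_selectivity_index(activations, labels, eps=1e-7):
    """Selectivity of one unit: (mu_max - mu_rest) / (mu_max + mu_rest + eps).

    activations: non-negative (e.g. post-ReLU) activations of a single unit,
        one value per input example (an assumption of this sketch).
    labels: the class label of each example.
    eps: small constant guarding against division by zero (illustrative value).
    """
    if len(activations) != len(labels):
        raise ValueError("activations and labels must have the same length")
    # Accumulate per-class sums and counts to get class-conditional means.
    sums = defaultdict(float)
    counts = defaultdict(int)
    for a, y in zip(activations, labels):
        sums[y] += a
        counts[y] += 1
    class_means = sorted((sums[c] / counts[c] for c in sums), reverse=True)
    if len(class_means) < 2:
        raise ValueError("need at least two classes")
    mu_max = class_means[0]  # mean activation for the most-activating class
    mu_rest = sum(class_means[1:]) / (len(class_means) - 1)  # mean over other classes
    return (mu_max - mu_rest) / (mu_max + mu_rest + eps)
```

A unit that responds to only one class scores close to 1. A unit that responds equally to every class scores 0.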

This is a red herring, of course. What everyone really wants to know (and what W&B will certainly keep as a close secret) is the Best Seed. Publicizing this seed would not only give away their competitive advantage, but also violate US Arms Control Laws.


This was a huge headache in the early days of @MosaicML, so we built our tooling to seamlessly handle GPU failures. Our platform will detect a faulty node, pause training, cordon the node, sub in a spare, and resume from the most recent checkpoint. All w/o any human intervention


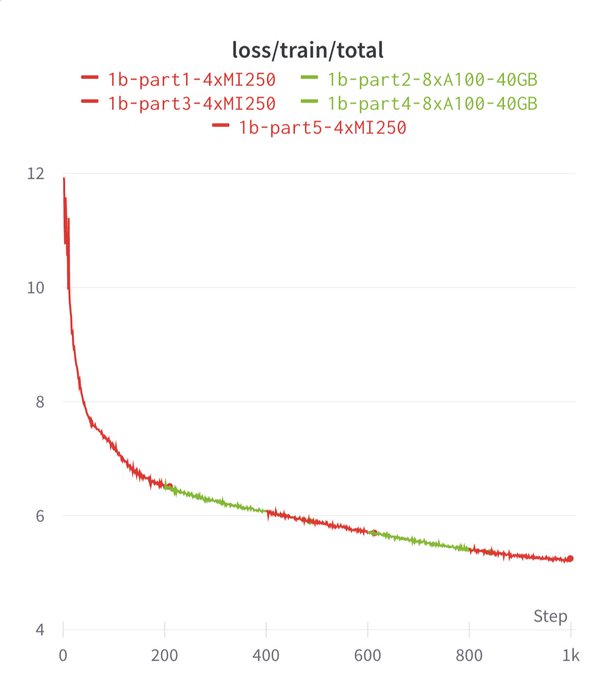

Celebrate GPU Independence Day! My colleagues at @MosaicML just showed how simple it is to train on AMD. The real kicker here is switching between AMD and NVIDIA in a single training run


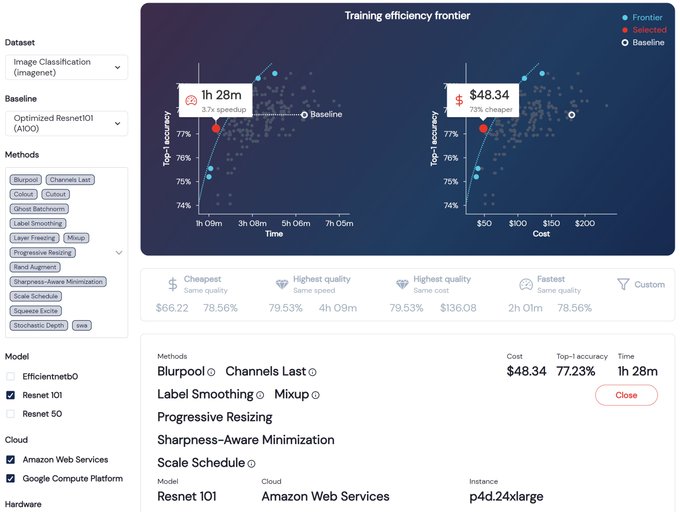

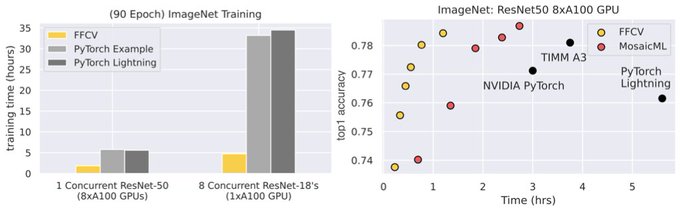

Me and my talented colleagues at @MosaicML made ResNet50 go brrrrrr. We devised three training recipes for a vanilla ResNet-50 architecture that are up to 7x faster than other baselines. We didn't even sweep hparams extensively. And it's plain PyTorch. @jefrankle has the scoop:


As Head of the Data Research Team at @MosaicML, I cannot think of an acquirer I'd be more excited about

Today we’re announcing plans for @MosaicML to join forces with @databricks! We are excited at the possibilities for this deal including serving the growing number of enterprises interested in LLMs and diffusion models.


I'm going to take this opportunity to recommend that everyone read Paul Cisek's 1999 paper "Beyond the computer metaphor: Behaviour as interaction", which presages many of the contemporary discussions about the necessity of embodiment for overcoming limitations in deep learning

@dileeplearning Nah man, see the tweet I quoted. Most people think it is a metaphor, cause they think computer == Von Neumann machine.


@arimorcos and I are excited to announce our position paper, Towards falsifiable interpretability research, is part of #NeurIPS2020 @MLRetrospective! We argue for the importance of concrete, falsifiable hypotheses in interpretability research. Paper: (1/8)


@marcbeaupre You need to generate 3-7T of magnetic field strength, which requires a large magnet, lots of power, and helium cooling. I dunno what the physical limits are on magnet size for field generation; also power consumption/dissipation seem like big issues


Now that we're out of stealth I'm very excited I can announce I'm a Research Scientist at @MosaicML.

We help the ML community burn less money by training models more efficiently. There's a lot of fascinating research and engineering that enables this.

And we're hiring 😀

Hello World! Today we come out of stealth to make ML training more efficient with a mosaic of methods that modify training to improve speed, reduce cost, and boost quality. Read our founders' blog by @NaveenGRao @hanlintang @mcarbin @jefrankle (1/4)


This is why I pushed @MosaicML to create a Data Research Team last year (and @jefrankle recognized the value and made it happen)


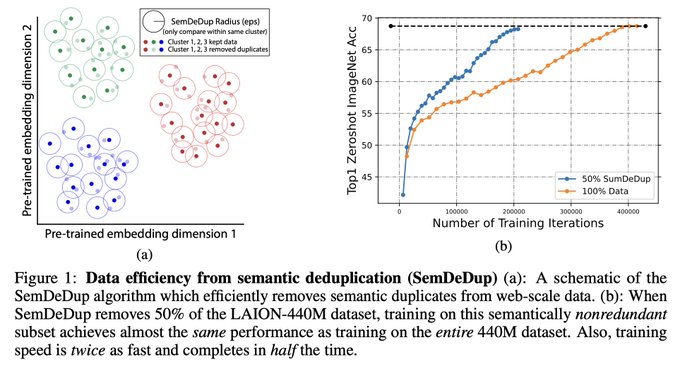

Very cool to see what is essentially SemDedup () work for fine-tuning data


Also, most importantly, these studies aren't decoding endogenously generated signals, they're reconstructing WHAT THEY ARE CURRENTLY SHOWING YOU


@marcbeaupre Overall, I suspect miniaturization would require a massive breakthrough in materials science. The tech has already been around for 30+ years. I'd sooner bet on a different brain imaging modality than fMRI miniaturization, but I'm also not an fMRI expert


Very excited to announce that I've joined @hanlintang and @NaveenGRao in their quest to make ML more efficient!


Related question that @KordingLab and I have: is there a literature or materials on how to build strong hypotheses, esp in neuroscience? Most philosophical work we're familiar with is too abstract/meta to feel practical, esp as part of a graduate curriculum

Research on interpreting units in artificial neural networks fails to be falsifiable. And just about everything that Matt Leavitt and @arimorcos say about the problem in ANNs is a problem in neuroscience.


@ItsMrMetaverse I actually escaped my neuroscience lock-up (they let us do that once in a while) and have been doing ML research for the last four years. But as a Metaverse Expert and t-shirt merchant you seem uniquely qualified to evaluate the trustworthiness of my statements about neuro and ML


@jbensnyder This is an excellent point. All the studies I'm familiar with require training data for each individual, which is another limitation


Congratulations to @tyrell_turing for winning the @CAN_ACN Young Investigator Award for 2019! It must have been very challenging to pick from all the amazing young Canadian PIs. Thanks to Blake & everyone who makes Canada's neuroscience community so wonderful to be a part of!


@finbarrtimbers At @MosaicML we did it with ALiBi + FlashAttention + 80GB A100s. No secret sauce, just well-vetted research. Shout-out to @OfirPress and @tri_dao for their great methods!

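ALiBi (credited above via @OfirPress) drops positional embeddings. Instead it adds a fixed, non-learned penalty to attention scores that grows linearly with the query-key distance, with a different slope per head. Below is a minimal sketch of how those biases can be built. It assumes a power-of-two number of heads, which is the case where the ALiBi paper's slopes form the geometric sequence 2^(-8/n), 2^(-16/n), and so on. The function names are hypothetical, and this is not MosaicML's implementation.

```python
def alibi_slopes(num_heads):
    """Head-specific slopes m_h = 2 ** (-8 * h / num_heads) for h = 1..num_heads.

    Only power-of-two head counts are handled here; the ALiBi paper describes
    a separate scheme for other head counts, which this sketch omits.
    """
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only supports power-of-two head counts")
    return [2 ** (-8 * h / num_heads) for h in range(1, num_heads + 1)]


def alibi_bias(num_heads, seq_len):
    """Additive bias[h][i][j] = -m_h * (i - j) for keys j <= query i.

    Future positions (j > i) get -inf, acting as a causal mask. The bias is
    meant to be added to the q.k^T / sqrt(d) scores before the softmax.
    """
    neg_inf = float("-inf")
    return [
        [[-m * (i - j) if j <= i else neg_inf for j in range(seq_len)]
         for i in range(seq_len)]
        for m in alibi_slopes(num_heads)
    ]
```

The bias depends only on the distance i - j, so the same function can produce biases for sequences longer than any seen in training. That property is what lets ALiBi models be run at longer context lengths than they were trained on.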

To those saying "but what about the inexorable march of technological progress"

@paulg I'm not saying it can never happen, just that it's probably not worth worrying about atm due to the logistics of generating the strength of magnetic field needed to do it.


Next up: C4. Our initial exps showed C4 just performed _really_ well. But we wanted to push it! We used SemDedup (ty @arimorcos' group) to remove the 20% most similar documents within C4, which was consistently 👍 in our exps

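The idea of dropping the "most similar" fraction of a corpus can be illustrated with a simplified stand-in for SemDedup. This is not the SemDedup implementation. The SemDedup paper first clusters document embeddings with k-means so that pairwise comparisons stay tractable, and this sketch skips that step and compares every pair directly. It assumes each document has already been embedded by some pretrained encoder. Each document is scored by its highest cosine similarity to any earlier document, and the highest-scoring fraction is removed. The function names are hypothetical.

```python
import math


def _cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def semantic_dedup(embeddings, remove_fraction=0.2):
    """Return indices of documents to keep, in their original order.

    Each document i is scored by its highest cosine similarity to any earlier
    document j < i. The earliest member of a near-duplicate group is only
    compared against earlier (typically unrelated) documents, so it tends to
    score low and survive, while its later near-copies score high.
    """
    n = len(embeddings)
    scores = [float("-inf")] * n  # document 0 has no earlier neighbour
    for i in range(1, n):
        scores[i] = max(_cosine(embeddings[i], embeddings[j]) for j in range(i))
    num_remove = int(round(n * remove_fraction))
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    removed = set(ranked[:num_remove])  # most redundant documents
    return [i for i in range(n) if i not in removed]
```

The all-pairs loop is quadratic in the number of documents, which is why clustering first (as SemDedup does) matters at corpus scale.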

How do easily interpretable neurons affect CNN performance? In a new blog post, @arimorcos and I summarize our recent work evaluating the causal role of selective neurons: easily interpretable neurons can actually impair performance!


Quarantine Dog #1: double dog, Tillamook cheddar, sauerkraut, avocado, pickled ginger, Japanese mayo, yuzu kosho.


One of my favorite parts of our blog post announcing the @MosaicML ResNet Recipes () is the recipe card, designed by the talented @ericajiyuen. BTW these times are for 8x A100


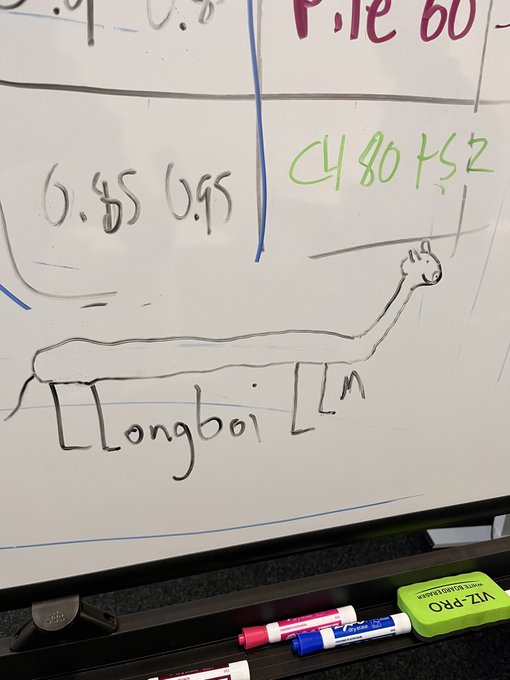

Gemini also continues the trend of training small models for looonger. As deep learning models transition from research artifact to production necessity, inference costs are going to increasingly dominate the economics. Llongboi just keeps getting llonger:

Ok, for those wondering about the origin of our nickname "Llongboi", here it is. (@jefrankle got mad at me for putting this in the wild. Once it's free, it's free!)


All this talk of neural coding and computation by @RomainBrette @tyrell_turing @andpru @Neuro_Skeptic et al. reminds me to remind everyone to read Paul Cisek's excellent (and imo overlooked) paper "Beyond the Computer Metaphor: Behavior as Interaction"


Good compute is a terrible thing to waste, so @abhi_venigalla and I assembled some best practices for efficient CNN training and put them into a blog post.

New blog post! Take a look at some best practices for efficient CNN training, and find out how you can apply them easily with our Composer library: #EfficientML


Like @OpenAI, @BuceesUSA offers employees PPUs instead of RSUs and has a capped profit model because the success of their mission will be so transformative to society that it would be unethical for them to capture all of the resulting value


Very excited to see @MosaicML used as a baseline, especially by work from @aleks_madry's lab. It showcases the massive speedups that can be achieved by combining thoughtful modifications to the training algorithm + well-applied systems knowledge. Eagerly anticipating the paper!


Excited for my #neuromatch2020 talk, today at 4pm PST / 7pm EST / 11pm GMT. It's a summary of my recent work with @arimorcos. If you miss free samples at the market, this is the next best thing. Come taste our work & if you like it read the paper!


Big thanks to @KordingLab, @bradpwyble, & @neuralreckoning for organizing #neuromatch2020, @DavideValeriani for moderating my talk, & everyone who asked questions (no idea who, plz say hi if you wish). The experience has been a potent salve for the Coronavirus Blues!


@code_star, @_BrettLarsen, @iamknighton, and @jefrankle (yes, our Chief Scientist gets his hands dirty) put in a TON, and we couldn’t be happier with how the MPT-7B series of models turned out. And we're just getting started.


~2yrs ago @nsaphra came to my poster & we discussed regularizing to ctrl interpretability. She mentioned a superstar grad student (@_angie_chen). Things really got wild when @ziv_ravid joined the party. And @kchonyc graced us w/ wisdom throughout. V excited to finally announce:

New work w/ @ziv_ravid @kchonyc @leavittron @nsaphra: We break the steepest MLM loss drop into *2* phase changes: first in internal grammatical structure, then external capabilities. Big implications for emergence, simplicity bias, and interpretability! 🧵


My mom had to cancel the education conference she was organizing 😭 but got v excited when she heard about #neuromatch2020 and wants to organize something similar. @bradpwyble @neuralreckoning @KordingLab @titipat_a et al., do you have resources or a "how-to"? ❤️❤️❤️


This is why I went to grad school

The original LLongboi (drawing by @leavittron) secretly meming this code name into existence is one of my proudest moments at @MosaicML


It's nuts how often I see slack notifications that we closed a new customer. Those three sales reps, @barrydauber, @mrdrjennings, and @stewartsherpa, are UNSTOPPABLE. Glad to see their hard work being recognized!


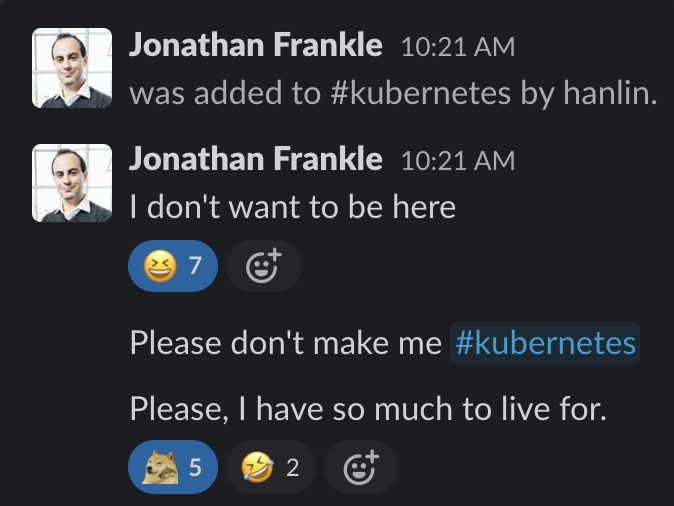

A haiku for the research scientists, at @hanlintang's suggestion:

Don't want to be here

Please don't, no kubernetes

So much to live for


I agree that not having experience training neural networks/not knowing the math underlying them shouldn't auto-invalidate one's AI takes. But "my AI takes are valid because deep learning doesn't use Real Math" is worse than wrong (more on that below) and weirdly fetishizes math


Very excited to announce that our work received a Spotlight Rejection at @NeurIPSConf #NeurIPS


"I want to see blood. We all want to see blood" - @KordingLab.

I've got to say, so far the worst part of #neuromatch2020 is that @KordingLab can't spice up the debate by sliding @tyrell_turing a folding chair when Cisek has his back turned.


Zack worked his ass off for this paper and the reviewer responses (like he works his ass off for everything). This is extremely disappointing and I think this policy causes more harm than good.


Thrilled to have contributed to this. And excited to see what the community does with it!

Meet MPT-30B, the latest member of @MosaicML's family of open-source, commercially usable models. It's trained on 1T tokens with up to 8k context (even more w/ALiBi) on A100s and *H100s* with big improvements to Instruct and Chat. Take it for a spin on HF!


@finbarrtimbers @MosaicML @OfirPress @tri_dao We pretrained at a 2048 context length then fine-tuned on 65k. We tried generation up to 84k. There are tricks we could use to push it further, but we wanted it to be simple for others to use. Dunno if you saw, but we used it to generate an epilogue to The Great Gatsby:



@andpru @KordingLab Most of what I learned in my PhD was conveyed implicitly, and even the explicit channels were typically code comments or oral history. I had a course on "research conduct", but that was basically "Retraction Watch's Greatest Hits".


My man was crazy close. Someone give him a prize. Real numbers are 340B and 7e24 FLOPs. @CNBC doesn't need to wait for leaks, they should just ask @abhi_venigalla.


Big shout out to @CerebrasSystems for building the tools and dataset and releasing both. Very glad that data work is getting the attention it needs. Though I don't see the tools anywhere on your github. Am I looking in the right place?


Next up: RedPajama is @togethercompute’s commendable attempt to recreate the LLaMA data. Many of their sources (e.g. Wikipedia, StackExchange, arXiv) are already available as ready-to-use datasets elsewhere, but RedPajama contains data through 2023; the freshness is appealing


Studying neuroscience doesn't make you a neuroscientist.

Actual neuroscientists...

- draw the Felleman and Van Essen diagram from memory

- compute XOR in single astrocytes

- reconstruct detailed biographies from c-Fos levels

- optogenetically induce consciousness in macaques


@SpiderMonkeyXYZ I'm familiar with the study. It's great research! What I'm calling bullshit on is the idea that "your thoughts aren't safe" or that you should be concerned about someone stealing your dreams


Would be great for someone to build some data curation tools suited to contemporary pretraining practices


@ruthhook_ Maybe "intelligent" people just introspect more, complain more, or have more medical care


I contributed to this and it feels good. Use it for SSL + transformers, then tag me in the github issue if you run into problems!


TFW a full research team at Google scoops your grad project. At least you know it was a good idea!


@PsychScientists Coffee is actually very high-dimensional and this is what happens when you project it into two dimensions. It actually goes quite nicely with the Swiss Roll problem. Some people recommend using tea-SNE, but it's just not the same.


I'd say Harvard won the lottery here, but I think the real beneficiaries are everyone who gets to work with you. Congratulations, Jonathan!
