Naveen Rao

@NaveenGRao

Followers

31K

Following

13K

Media

65

Statuses

4K

VP GenAI @Databricks. Former CEO/cofounder MosaicML & Nervana/IntelAI. Neuro + CS. I like to build stuff that will eventually learn how to build other stuff.

Joined February 2009

@LanaLokteff Ignorance alert. Indians are rarely, if ever, considered minorities in the US in terms of aid. Having only grown up in the US (but of Indian heritage) I can say there are many reasons Indian immigrants do well here. Hint: has nothing to do with nepotism.

12

126

2K

@LanaLokteff What Indian has ever cried that?? Those folks can do well in India. They can do better here. The aspect of our country that keeps it at the top is immigration of the best and brightest. If those people don’t want to come here, the country will lose its edge.

8

51

756

Today we’re announcing plans for @MosaicML to join forces with @databricks! We are excited at the possibilities for this deal including serving the growing number of enterprises interested in LLMs and diffusion models.

56

65

643

Would you rather invest $200m+ into a new computing arch and get maybe 2x perf, or <$5m and get 7x with better algos? We @MosaicML did just that! We released Mosaic ResNet and it achieves SOTA perf in just 27min on standard HW. You read that right👇

8

61

556

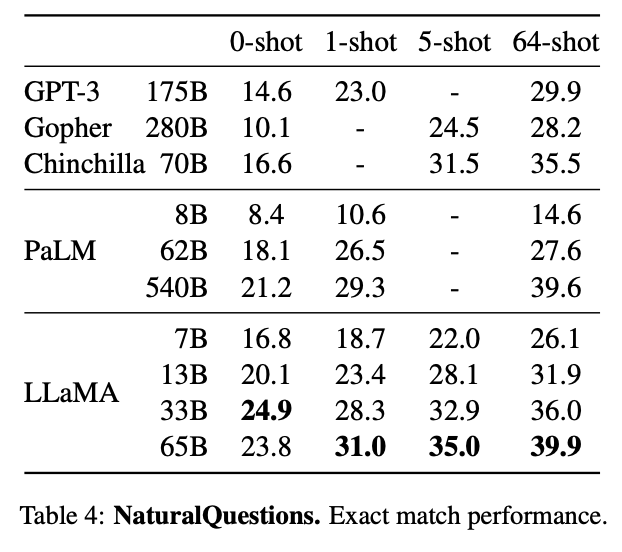

MPT-7B (aka Llongboi) released! The BEST open LLM on which to build!
- Apache 2.0 license suitable for commercial use
- Base 7B LLM trained on 1T tokens outperforms LLaMA and GPT3
- 64K+ context length
- $200k to train from scratch

📢 Introducing MPT: a new family of open-source commercially usable LLMs from @MosaicML. Trained on 1T tokens of text+code, MPT models match and - in many ways - surpass LLaMa-7B. This release includes 4 models: MPT-Base, Instruct, Chat, & StoryWriter (🧵).

22

91

543

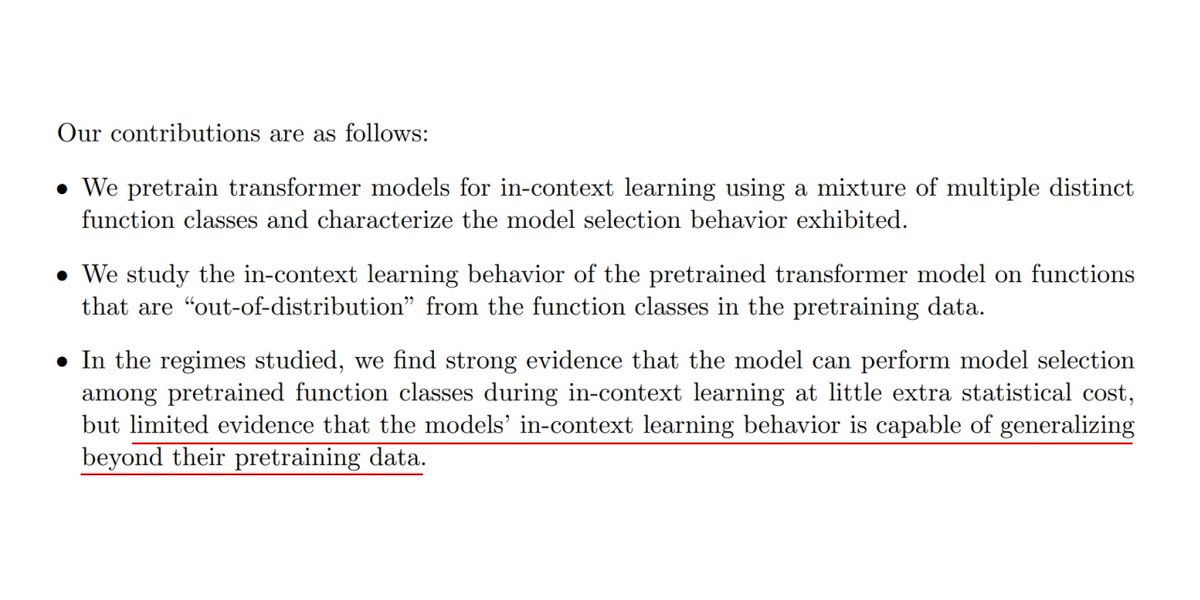

@martin_casado As much as I love my field, it's littered with anthropomorphizing results. We all want to believe that it's reasoning, and if I see evidence that looks like it's reasoning, we declare victory. It was always clear that these models estimate conditional probabilities and don't.

24

33

489

Ok, this site is getting really messed up now that @elonmusk has gone full wingnut politically. Out of morbid curiosity, I look at some of the crazy right posts. It's full of accounts like @VoteHarrisOut. It's an account that started in Aug 2023 and has sent nearly 44k tweets.

55

31

433

@okito 10x, yes. We and others are working on more efficiency. New hw will have features that help. We'll be 10x by year's end. Longer term, with the death of Moore's Law, it gets more difficult. Smaller models w/ some clever precomputing of results are needed. 100x might be much longer.

35

17

426

When we started @MosaicML we wanted to bring choice and cost reduction in AI to everyone. Today we've demonstrated that our stack fully supports @AMD Mi250! And it does it with good performance making it a viable alternative to Nvidia GPUs for cost/perf. We believe that, when.

Introducing training LLMs with AMD hardware! MosaicML + PyTorch 2.0 + ROCm 5.4+ = LLM training out of the box with zero code changes. With MosaicML, the ML community has additional hardware + software options to choose from. Read more:

10

59

418

This might be the dumbest thing I’ve read this month. Bravo.

The last century was defined by how quickly we could invent and deploy everything. This century will be defined by our ability to collectively say NO to certain technologies. This will be the hardest challenge we’ve ever faced as a species. It’s antithetical to everything that.

28

20

404

@karpathy Wow congrats Andrej! I hope you can continue doing some of your open-source stuff and tutorials. The world needs more of that!

6

1

385

Meet our new AI, #DBRX. DBRX is an advance in what language models can do per $. These economics will have profound impacts on how AI is used, and we've built this to democratize these capabilities! It's the best open model in the world. It closes the gap to closed models in a.

Meet #DBRX: a general-purpose LLM that sets a new standard for efficient open source models. Use the DBRX model in your RAG apps or use the DBRX design to build your own custom LLMs and improve the quality of your GenAI applications.

17

62

367

Contrarian view: @sama is actually doing the right things with OpenAI. He has the impossible job of taking an ideologically driven research group and trying to make it into a product company. Goals aside, if he didn't do this, investment would stop. Arguably, some of this issue.

28

17

308

Any AI researchers or engineers feeling uneasy about the future, we are hiring at @databricks / @MosaicML!

9

36

286

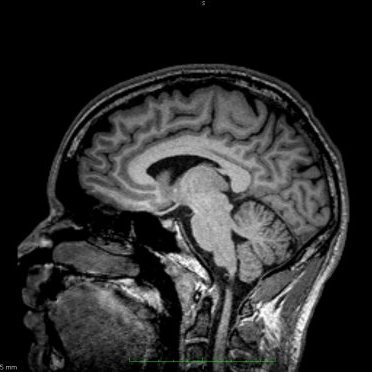

Well, that was fast! We (@MosaicML) released a SOTA result on MedQA just a few weeks ago and @GoogleAI now beat it soundly. It is incredible how quickly human performance is being commoditized. This essentially passes the USMLE. BTW, we did this with 1/200th of the parameters.

Delighted to share our new @GoogleHealth @GoogleAI @Deepmind paper at the intersection of LLMs + health. Our LLMs building on Flan-PaLM reach SOTA on multiple medical question answering datasets including 67.6% on MedQA USMLE (+17% over prior work).

6

25

287

@jack Honestly this makes a lot more sense than any of the web3 rhetoric. Decentralized serving of information is doable and solves a real problem. This is a technology solution and doesn't need to be portrayed as some savior to all the world's problems.

11

12

234

Wow. In just 4 days the MPT-7B series of LLMs from @MosaicML was downloaded nearly 60k times! And I've seen cool apps and things built on it. You guys are amazing! Keep building! We love it!❤️

4

28

234

I have massive respect for @vkhosla, but this is a short-term, inaccurate view. All the comments describe how China is already at the SOTA for LLMs. You cannot stop information flow. The only way to stay ahead is to create a social/political environment that encourages.

The reason to keep it behind is so the Chinese don’t access new breakthroughs and scale them. The risks in the techno-economic war with China outweigh any of the sentient AI risks by orders of magnitude. And they will be interfering in our elections in 2024 to influence voters with.

15

14

226

This. This is why we do what we do @MosaicML. Enabling a small team (@amasad mentioned it was 2 people!) to build a state-of-the-art model with our stack makes me so happy! Small teams using Mosaic ML to build AI is the future. @Replit and @MosaicML share the goal of enabling.

.@Replit just announced their own LLaMa style code LLM at their developer day!
replit-code-v1-3b
- 2.7b params
- 20 languages
- 525B tokens (“20x Chinchilla?”)
- beats all open source code models on HumanEval benchmark
- trained in 10 days with @NaveenGRao @MosaicML

4

14

225

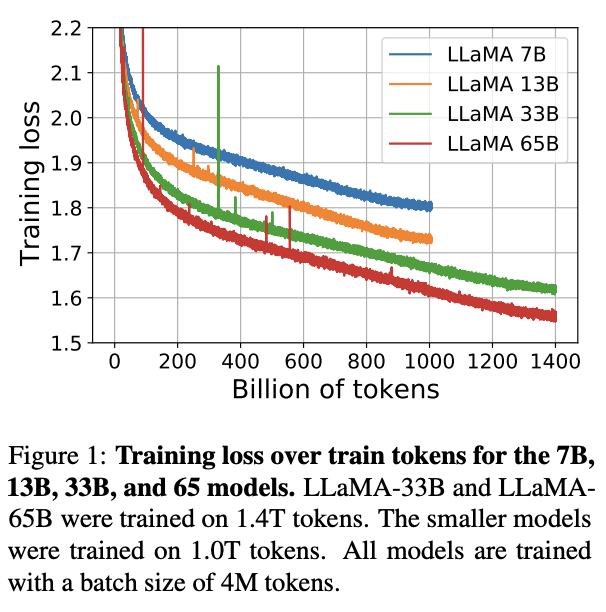

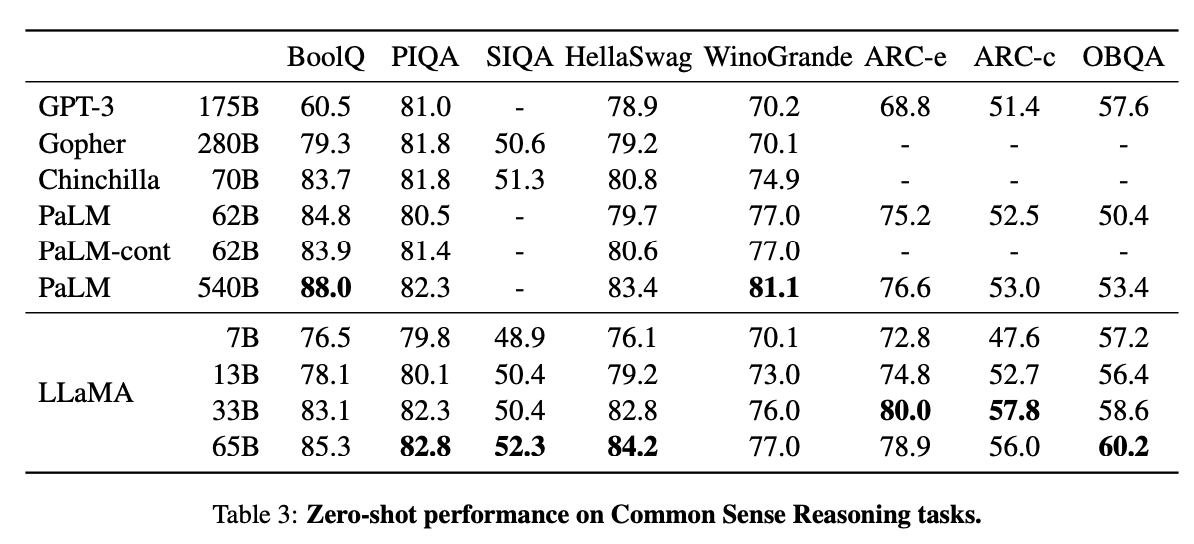

This is very cool! Thanks @MetaAI! From what I can tell, this is a GNU license, which means fine-tuned models all must be upstreamed. For corporates, this is challenging as fine-tuned models on proprietary data will need to stay with the company. @MetaAI any idea if there are.

Today we release LLaMA, 4 foundation models ranging from 7B to 65B parameters. LLaMA-13B outperforms OPT and GPT-3 175B on most benchmarks. LLaMA-65B is competitive with Chinchilla 70B and PaLM 540B. The weights for all models are open and available at 1/n

7

21

218

Can someone explain to me what "PhD level" questions are? A PhD is all about discovering new knowledge and the process therein. It's not some grade achieved by taking an exam.

NEW w/ @coryweinberg: OpenAI is doubling down on its application business. Execs have spoken with investors about three classes of future agent launches, ranging from $2K to $20K/month to do tasks like automating coding and PhD-level research:

32

5

222

Let's demystify LLM serving on GPUs! Here we did a thorough analysis of latency/throughput tradeoffs, time to first tok, relationship to model size, quantization, and general concepts to be aware of. Enjoy! @MosaicML @databricks

3

33

205

Agreed. There is no moat with any particular LLM. No one is more than a year ahead of others in the group of model builders. Open source does NOT threaten the technology ecosystem of the west. Let’s please stop with the straw man arguments.

Repeat after me: AI is not a weapon. With or without open source AI, China is not far behind. Like many other countries, they want control over AI technology and must develop their own homegrown stack.

6

28

189

Here's the (literal) money blog! @MosaicML is the FIRST company to publish costs for building Large Language models. These costs are available to our customers to build models on THEIR data. It IS within your reach: <$500k for GPT3!

5

39

190

While ChatGPT gets the headlines, I think @natfriedman and Copilot have had a bigger impact on startups. Everyone is pitching “copilot for X” (finance, doctors, etc). I haven’t seen that since everyone was pitching “Uber for X” 7-8 years ago.

12

11

192

@pentagoniac Agreed that search isn't free. It is delivered without cost to the user through other monetization. Very clever and amazing. But AI costs are much higher, so I'm doubtful the same monetization will work. I see a world where we pay differential value for better AI. It's not bad,.

16

6

186

@thegrahamcooke Sorry, this doesn't disrupt much. I think there's a fundamental misunderstanding of why the best/largest models are in the cloud. Building pared-down models for edge is a different market with some advantages. But it is a different buying pattern.

3

2

185

Startups, need model training and serving compute to get to those end-of-year goals? DM me! We ❤️ our startup community @MosaicML/@databricks and want to see all the great GenAI products accelerated! I'm willing to steal GPUs from our own research efforts (@jefrankle might hurt.

12

15

187

The official tweet thread and blog:

25

10

185

Sorry, there's just no way senior leaders didn't know about these separation terms at OAI. Those terms don't just appear there; they were requested. Esp with the non-standard PPU structure, none of those terms are just "standard". I wish they would just say "sorry, bad call.

Scoop: OpenAI's senior leadership says they were unaware ex-employees who didn't sign departure docs were threatened with losing their vested equity. But their signatures on relevant documents (which Vox is now releasing) raise questions about whether they could have missed it.

7

9

182

I'm sure everyone wants to read about @databricks/@MosaicML inference stack over the holidays, so here ya go! Serving Mixtral from @MistralAI and MoE (in the works for some time): Collaborating w/@nvidia and building upon TRT-LLM for inference:

3

23

181

So I'm going to have to disagree here. As a musician, I don't find these AI generated songs to be very good. Music & lyrics are hard because you need so much context of being a human to get it all right. This AI generated stuff is super generic and could be useful as "filler".

Amazing text to music generations from @suno_ai_, could easily see these taking over leaderboards. Personal favorite: this song I fished out of their Discord a few months ago, "Return to Monkey", which has been stuck in my head since :D [00:57] I wanna return to monkey, I

64

9

180

It's official! Mosaic Doges are Bricksters! We're all very excited to be part of @databricks and to build the future of #GenAI for the enterprise. Thanks to @alighodsi and rest of Databricks for the belief in @MosaicML's vision. Let's go!!

📢 Today, we're thrilled to announce that @Databricks has completed its acquisition of MosaicML. Our teams share a common goal to make #GenerativeAI accessible for all. We're excited to change the world together! Read the press release and stay tuned for more updates:

8

11

175

This is a good thread on where we are as an industry with LLMs. I can tell you from experience that enterprises absolutely want high levels of customization. Databricks + MosaicML enables this path from raw data -> filtering/ETL -> building models -> serving models.

The ChatGPT hype cycle:
- Stage 1: "GPT-X is out-of-the-box magic!"
- Stage 2: "We need to use our data" (where we are now)
- Stage 3: "We need to develop our data"
From 2 -> 3, enterprises will realize not enough to just dump in a data lake. Use case-specific dev is key!👇🧵

3

28

167

Boom! Another month, another drop! We love seeing what the community did with MPT-7B, and now we went bigger. Go play on @huggingface. Under the hood, this is running on @MosaicML's inference service. You'll notice it's pretty snappy! Also, this is also one.

Meet MPT-30B, the latest member of @MosaicML's family of open-source, commercially usable models. It's trained on 1T tokens with up to 8k context (even more w/ALiBi) on A100s and *H100s* with big improvements to Instruct and Chat. Take it for a spin on HF!

7

33

161

I support open source for AI! We need to be sure to enable many researchers to solve the big problems in the field.

1/ We’ve submitted a letter to President Biden regarding the AI Executive Order and its potential for restricting open source AI. We believe strongly that open source is the only way to keep software safe and free from monopoly. Please help amplify.

9

21

162

We aren’t just about LLMs. The world is multi modal! Congrats to the @MosaicML / @databricks team on getting this on arXiv and establishing a new standard for use. So much exciting work coming out!

3

28

159

10 months ago I tweeted that we were getting a new project off the ground…today I’m proud to announce with @hanlintang @jefrankle and @mcarbin that MosaicML comes out of stealth! We are focused on the compute efficiency of neural network training using algorithmic methods.👇

Hello World! Today we come out of stealth to make ML training more efficient with a mosaic of methods that modify training to improve speed, reduce cost, and boost quality. Read our founders' blog by @NaveenGRao @hanlintang @mcarbin @jefrankle (1/4)

25

19

156

Really excited to be supporting @Replit and @amasad in their efforts to build a special purpose LLM for their needs! Check out how they used @MosaicML and @databricks to accomplish this:

1

23

149

@mustafasuleymn No, we’re not. This doom and gloom prediction has been made for years, but it misses a fundamental understanding of humans. Humans will never exist in a “post work” regime…we need purpose and will endure pain to get it.

18

5

144

This is kind of a problem. It reminds me of how Microsoft in the 90s used to track how much money their customers made as a figure of merit. That's really the only way to understand how durable a revenue stream is.

You give me $50 billion to buy Nvidia chips and i’ll give you $3 billion (in revenue, which i’m still posting a loss on)

11

8

140

Excited to be driving in 24h of Le Mans in June with @COOLRacing! We'll be donning an all @databricks livery. I think it'll really pop on TV :) 🏎️🏎️🛞

Le Mans Livery Alert 🗣 COOL Racing's #47 LMP2 has been given a makeover for the 24 Hours of Le Mans on 15-16 June 🇫🇷 We are delighted to run under Databricks colors in La Sarthe this year. #COOLRacing #CLXMotorsport #LeMans24

11

10

132

This message from @ID_AA_Carmack hit home. I felt similarly at Intel. More resources were never enough. Is this the only possible state for large corporate orgs to exist? Is there a better way to build? I've read a number of books on this and still no answer.

13

5

126

Software will NOT eat the world when it comes to AI. The fundamental balance of compute and software is different with AI. To understand the differences and their implications we must dig into the profound statement that @pmarca made in 2011.

7

14

129

The single most important thing that @sama did was craft a narrative that appeals to people who wanted to "go for it" in AI. It's part academic curiosity, part anarchist, part desire to do good in the world. Not sure that'll be sustained in MSFT.

13

6

127