Zack Ankner

@ZackAnkner

Followers: 493

Following: 307

Media: 37

Statuses: 185

Junior @MIT. President of AI @MIT. Research Scientist Intern @DbrxMosaicAI.

Joined September 2019

My EMNLP paper got desk-rejected post-rebuttal because I posted it to arxiv 25 minutes after the anonymity deadline. I was optimistic about our reviews, so I spent a whole week while visiting my family writing rebuttals and coding experiments to respond.

3

28

187

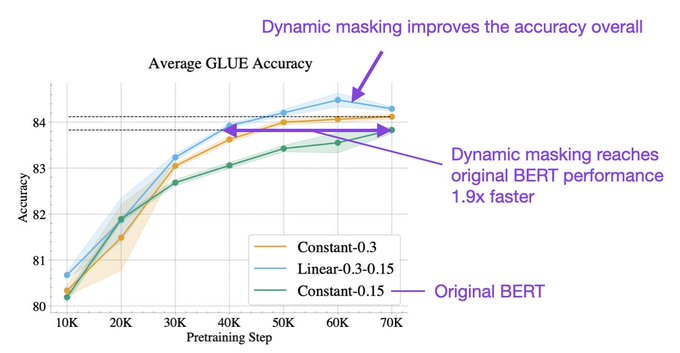

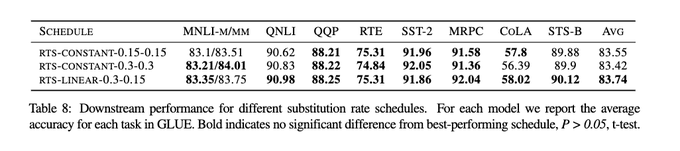

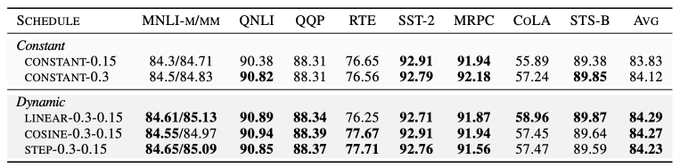

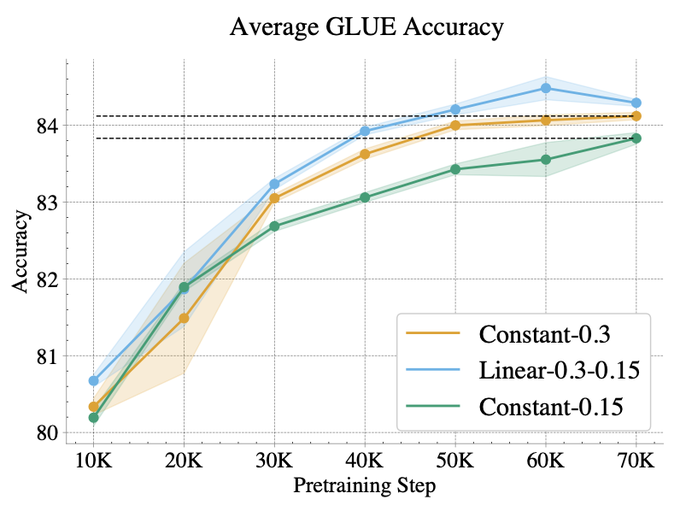

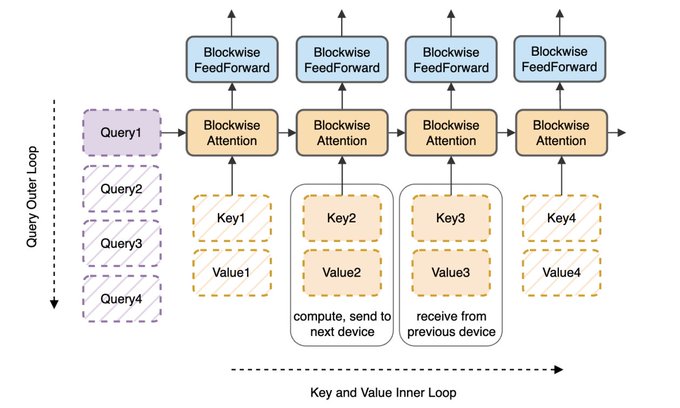

The linked thread contains in-depth details about the paper, but the TLDR is that, when pre-training BERT-style LLMs, scheduling the rate at which tokens are masked outperforms using a fixed masking rate.

1

0

21
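A minimal sketch of what scheduling the masking rate could look like, assuming a linear schedule. The function names, the 0.30 and 0.15 endpoint values, and the simplified masking step (no random or keep-token replacement) are illustrative assumptions, not details taken from the paper or the thread.

```python
import random


def linear_masking_rate(step, total_steps, initial_rate=0.30, final_rate=0.15):
    """Linearly interpolate the masking rate over training.

    The default endpoints are placeholder values (an assumption), not the paper's.
    Assumes total_steps > 0.
    """
    frac = min(max(step / total_steps, 0.0), 1.0)  # clamp progress to [0, 1]
    return initial_rate + frac * (final_rate - initial_rate)


def mask_tokens(token_ids, rate, mask_id, rng):
    """Mask each token independently with probability `rate`.

    Returns (masked_ids, labels). Unmasked positions get label -100, a common
    "ignore" value in cross-entropy losses (a convention assumed here).
    """
    masked, labels = [], []
    for tok in token_ids:
        if rng.random() < rate:
            masked.append(mask_id)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(-100)
    return masked, labels


# Usage: recompute the rate at every step instead of fixing it once.
rng = random.Random(0)
tokens = list(range(1000, 1020))  # hypothetical token ids
for step in (0, 500, 1000):
    rate = linear_masking_rate(step, total_steps=1000)
    masked, labels = mask_tokens(tokens, rate, mask_id=103, rng=rng)
```

A fixed-rate baseline is the special case where `initial_rate == final_rate`, which makes the two setups easy to compare in the same training loop.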

Super awesome to have our paper shared by @rasbt! Really weird and surreal to see something I worked on showing up in my timeline

1

2

20

Little rant, admin makes running a club @MIT the most frustrating experience. So many bs hoops to jump through and useless bureaucracy. Students are motivated and can manage their own clubs. Every year I'm honestly shocked most clubs don't disband because it's so god-awful

1

0

19

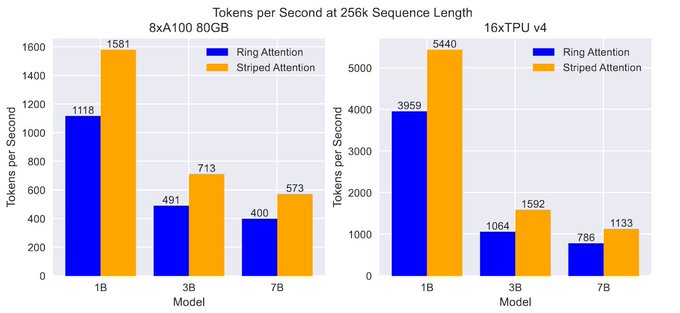

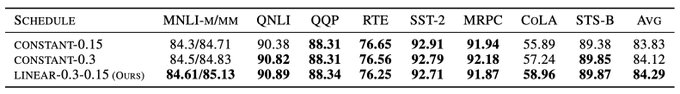

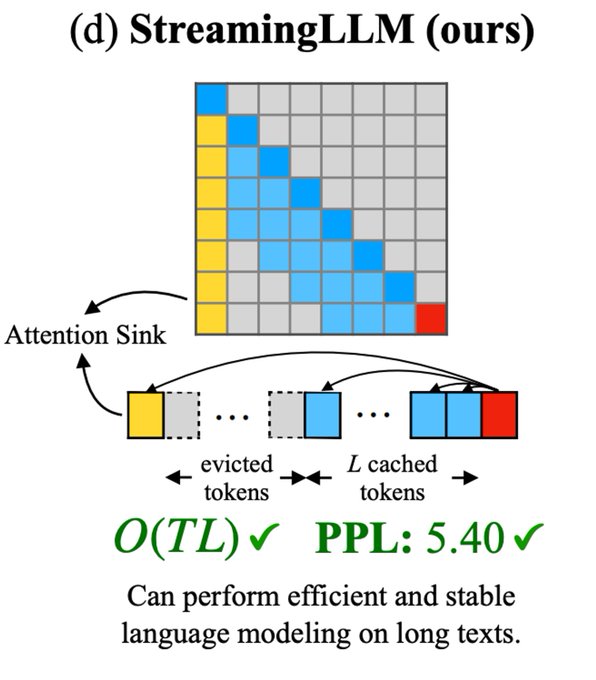

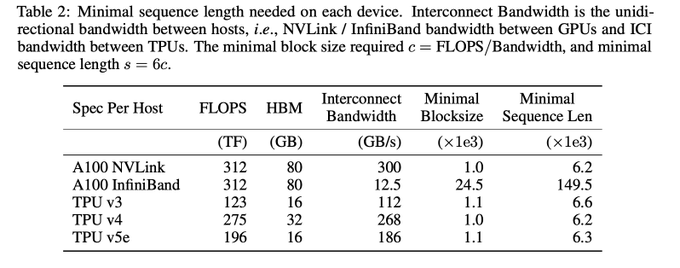

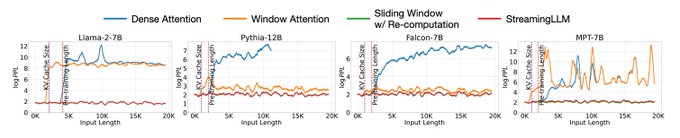

Super fun to work on this project! @exists_forall really has the fastest turnaround. We read the Ring Attention paper at our mlsys reading group on 10/18 and by 11/15 William had posted Striped-Attention!

1

1

16

This will be a valuable part of my weekly team meeting presentation. In seriousness, one of my favorite parts about working with the people @MosaicML is the research attitude and not taking ourselves too too seriously. Makes tackling challenging new projects a lot less daunting.

1

1

13

This was a collaboration with the awesome @nsaphra @leavittron @davisblalock @jefrankle. This work was all made possible by @MosaicML. They really are the best people with the best ML stack and they made this entire project a great experience! [11/11]

0

0

6

@brianryhuang

Yeah I think in general "engineering" work gets looked down on in research. It's a broad overgeneralization but I can say I've definitely thought that way before, when really all the gains we have are due to engineering considerations and there's so much interesting work there.

0

0

3

@abhi_venigalla

@MIT

Honestly admin babysitting at every part of the institute is crazy now. Swear these people think they’re running a day care and not a college sometimes

1

0

3

@stephenroller

Time to undo the last commit then? It's okay, GitHub stats needed to get padded anyways

1

0

2

@code_star

No idea, these are just the ramblings of a guy with a smooth brain who doesn't like in-place ops

2

0

2

@stephenroller

And an even greater instinct to leave all my code to get re-factored by smarter people and go back to data selection methods haha

0

0

2

@stephenroller

I'm sure the other folks at Mosaic can produce algorithmic improvements. I'm personally just working on pronouncing parallelism correctly 😁.

2

0

2

Thanks to the authors @haoliuhl @matei_zaharia @pabbeel for an awesome read and method!

Please let me know if I got any of the details wrong

0

0

2

@LChoshen

@jefrankle

What Jonathan said haha, and yes this was work I did during an internship at Mosaic! I work with Prof. Carbin at MIT

1

0

1

@itsclivetime

@abhi_venigalla

@MIT

Yeah having an independent org would definitely be easier. But for most of the activities we run we really need classrooms, so kinda stuck sadly

0

0

1

@code_star

Yeah, there was vagueness around how I used the word retrieval. I mean retrieval like doing some ANN lookup to extend your context window. Glad to hear 16K is set in stone ... my new north star.

0

0

1

@rasbt

@TheSeaMouse

Hey, one of the authors here. We experimented with higher initial masking rates and found that they don't work and actually degrade performance. Side note, that figure is misleading and we'll update it; those were just supposed to be example values.

1

0

1

@anneouyang

@abhi_venigalla

@MIT

Yeah the tax part is brutal. So many chipotle tax reimbursements lost lol

0

0

1

@rasbt

@TheSeaMouse

We found the best initial masking rate to be consistent with the best constant masking rate. For larger models, the initial masking rate benefits from being higher. Will be dropping those larger models soon!

1

0

1

@danish037

@jefrankle

@juanmiguelpino

@hbouamor

@kalikabali

@IGurevych

Not exactly sure where but waiting till post-rebuttals after having authors spend their entire week grinding out results to have them be completely ignored is definitely not the right place imo.

1

0

1