Meet MPT-30B, the latest member of @MosaicML's family of open-source, commercially usable models. It's trained on 1T tokens with up to 8k context (even more w/ALiBi) on A100s and *H100s*, with big improvements to Instruct and Chat. Take it for a spin on HF!
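The "even more w/ALiBi" works because ALiBi (Attention with Linear Biases) uses no learned position embeddings. Instead it adds a fixed penalty to each attention score that grows linearly with the distance between query and key, so the same formula can be evaluated at sequence lengths longer than the 8k seen in training. The sketch below follows the slope schedule from the original ALiBi formulation (m_k = 2^(-8k/n) for n heads). It is an illustration only, assuming a power-of-two head count; MosaicML's own implementation may differ in details.

```python
import math

def alibi_slopes(num_heads: int) -> list[float]:
    """Per-head slopes m_k = 2^(-8k/n) for k = 1..n (power-of-two head counts only)."""
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only handles power-of-two head counts")
    return [2.0 ** (-8.0 * k / num_heads) for k in range(1, num_heads + 1)]

def alibi_bias(seq_len: int, slope: float) -> list[list[float]]:
    """Causal bias for one head: bias[i][j] = -slope * (i - j) for keys j <= i.

    Future keys (j > i) get -inf so they vanish after the softmax. Nothing here
    depends on a maximum length, so seq_len can exceed the training context.
    """
    return [
        [-slope * (i - j) if j <= i else -math.inf for j in range(seq_len)]
        for i in range(seq_len)
    ]
```

Each head's bias matrix is added to its query-key scores before the softmax; heads with small slopes penalize distance gently and can attend far back, while heads with large slopes focus on nearby tokens.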

MPT-30B is a bigger sibling of MPT-7B, which we released a few weeks ago. The model arch is the same, the data mix is similar, and the context grew to 8k. We massively upgraded the Instruct and Chat variants over MPT-7B. See the full details in our blog!

It's a huge improvement over MPT-7B in every way, and it's a peer of LLaMA-30B, Falcon-40B, and GPT-3 according to our new evaluation framework. We collected (sub)tasks from popular eval benchmarks into categories like "world knowledge," "reading comprehension," and "programming."
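Grouping subtasks into categories means a per-category score has to be rolled up from many subtask scores. The thread does not say how that roll-up is done, so the sketch below simply assumes an unweighted mean within each category; the function name and the subtask-to-category mapping are hypothetical, and real frameworks may weight by subtask size or normalize against a random-guess baseline instead.

```python
from collections import defaultdict
from statistics import mean

def category_scores(subtask_scores: dict[str, float],
                    subtask_category: dict[str, str]) -> dict[str, float]:
    """Roll subtask scores up into categories (assumed: unweighted mean per category).

    subtask_scores:   subtask name -> score (e.g. accuracy in [0, 1])
    subtask_category: subtask name -> category such as "world knowledge"
    """
    grouped: dict[str, list[float]] = defaultdict(list)
    for task, score in subtask_scores.items():
        grouped[subtask_category[task]].append(score)
    return {cat: mean(scores) for cat, scores in grouped.items()}
```

An unweighted mean keeps one large benchmark from drowning out small ones within a category, which is one reason to score by category rather than by pooling every example.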

It's optimized for incredibly fast inference. It fits on one A100, meaning you don't have to do any crazy gymnastics to take advantage of it. If you want to use it on the MosaicML inference service, it's 4x cheaper than comparable OpenAI models.
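A back-of-the-envelope check on "fits on one A100": weight memory is parameter count times bytes per parameter. The sketch takes "30B" at face value (an approximation) and counts weights only; the KV cache and activations come on top and grow with context length. 16-bit weights come to about 60 GB, under an 80 GB A100. The 8-bit row is an assumption about quantized serving, not something the thread describes.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

NOMINAL_PARAMS = 30e9  # "30B" taken at face value (approximation)

# bf16/fp16 store 2 bytes per parameter; int8 (assumed quantization) stores 1.
for label, nbytes in [("bf16", 2), ("int8", 1)]:
    print(f"{label}: {weight_memory_gb(NOMINAL_PARAMS, nbytes):.0f} GB")
# bf16: 60 GB
# int8: 30 GB
```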

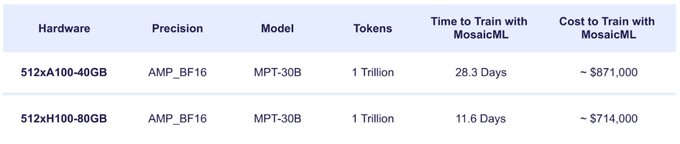

How much did it cost to train? At list price on @MosaicML, it was between $714k and $871k depending on your GPU choice. It's also incredibly cheap to fine-tune, at between $714 and $871 per 1B tokens.
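The fine-tuning figure is the pretraining cost scaled linearly: 1T tokens is 1,000 × 1B tokens, so $714k to $871k for pretraining works out to $714 to $871 per 1B tokens. The sketch below reproduces that arithmetic, assuming cost scales linearly with tokens processed and that a fine-tuning token costs about the same as a pretraining token. The helper names are hypothetical.

```python
TRAIN_TOKENS = 1e12                  # 1T pretraining tokens, per the thread
TRAIN_COST_USD = (714_000, 871_000)  # list-price range, per the thread

def cost_per_billion_tokens(total_cost: float, total_tokens: float) -> float:
    """Price per 1B tokens implied by a total cost (assumes linear scaling)."""
    return total_cost / (total_tokens / 1e9)

def fine_tune_cost(tokens: float, rate_per_billion: float) -> float:
    """Cost to process `tokens` at a fixed price per 1B tokens."""
    return tokens / 1e9 * rate_per_billion

rates = [cost_per_billion_tokens(c, TRAIN_TOKENS) for c in TRAIN_COST_USD]
print(rates)  # [714.0, 871.0]
```

For example, a hypothetical 5B-token fine-tune at the low end of the range, `fine_tune_cost(5e9, rates[0])`, comes to $3,570.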

MPT-30B-Instruct and MPT-30B-Chat include v2 of our instruction-following and chat datasets, themselves big upgrades over the versions used for MPT-7B. (We're releasing MPT-7B-Instruct/Chat-v2 soon as well!) Check out the Chat model - served using MosaicML inference - here!

And of course, the base model is available for you to build on as you like, on your own or on the MosaicML Platform.

As always, the most exciting model is the one *you* will build on *your* data using the MPT-30B architecture and base model. Sign up to get started on the MosaicML platform here!

@MosaicML

Awesome work, MosaicML!

Do you by chance have the training chronicles log? It would be interesting to the community to see what difficulties were encountered (spikes/divergences/etc) and how they were overcome.

Thanks a lot!

@MosaicML

The evaluation framework is quite intriguing. It appears that this is one of the unresolved matters lacking any consensus thus far.

@MosaicML

Hello! I am interested in your project! 💥I would like to collaborate. Please send me DM!📩☑️

@MosaicML

Thanks for sharing!

The evaluation framework is very interesting - this seems to be one of the open issues that doesn’t have any consensus yet?

@MosaicML

Cool but...

Hallucinates like mad 💀 How hard would it be to replace the post-training with something better than the same old trash RLHF?