Manjari Narayan

@NeuroStats

Followers: 5K

Following: 21K

Media: 285

Statuses: 10K

Quantitative Epistemologist. AI Epistemics in Bio. Feedback welcome at https://t.co/9xLU5az0f7 @StanfordMed | PhD@RiceU_ECE | BS@ECEILLINOIS

Pasteur's Quadrant

Joined November 2014

Going forward, I'm going to be active elsewhere. Links to other places @

RT @seanluomdphd: Empirically false. The largest study on natural history of BPD is called CLPS which shows that *most* (>50%) improve over…

pubmed.ncbi.nlm.nih.gov

The Collaborative Longitudinal Personality Disorders Study (CLPS; Gunderson et al., 2000) was developed to fill gaps in our understanding of the nature, course, and impact of personality disorders...

Wow.

Practically useful & biologically aligned benchmarks such as this one from @pkoo562 lab consistently show that all the overhyped annotation-agnostic DNA language models are actually terrible for transcriptional regulatory DNA in humans (mammals). 1/

This is a high bar of helpful criticism to offer someone on social media outside of work 💚

@joel_bkr Sorry for the img, I cannot post long tweets, but here's the text so you can copy-paste it:

There are lots of other problems with this paper too. As usual, the top violated assumption in any statistical analysis is correlated, non-i.i.d. observations. Scientists who have thought a lot about construct validity in software productivity take issue with what was measured.

METR’s analysis of this experiment is wildly misleading. The results indicate that people who have ~never used AI tools before are less productive while learning to use the tools, and say ~nothing about experienced AI tool users. Let's take a look at why.

I thought we were past this kind of stuff but that would require engineers who apply algorithm X to science to learn about science-ing. I remember a bunch of work like this 10+ years ago from biomedical engineers in schools of medicine. We could afford to have less of this flavor.

This is junk science that could be extremely harmful to vulnerable populations if ever deployed. AI facial analysis cannot infer latent psychological, personality, or behavioral features, and technology that pretends to have these capabilities is grossly unethical.

RT @bayesianboy: PTSD is a cognitive disorder, not a disorder of facial morphology or expression. Facial morphology and expression will nev…

Having actually worked with default mode networks based on imaging and the quality of inferences drawn about them from such data, I suspect attribution of their functionality isn't what people think it is in mainstream discourse. It is definitely a real phenomenon we…

Really interesting new @gwern essay: LLM Daydreaming - Proposal of how default mode networks for LLMs are an example of missing capabilities for search and novelty. Btw, I know it's a bit cringe to delight in, but if you had told 19 year old me that a Gwern essay would open

This paper is a huge public service. As someone who once spent many years working on novel tests for distributional equivalence, it has been painful to read the OOD detection literature.

Excited about our new ICML paper, "Out-of-Distribution Detection Methods Answer the Wrong Questions". There's a prevalence of methods, but they aren't actually targeted at identifying points drawn from different distributions! 1/7

Not very surprising. I often get deep research quality outputs without turning on deep research.

icymi, openai also open sourced how their deep research prompt rewriter works + the full prompts today. you can now build your own deep research agent - with full o3/o4-mini deep research quality - in 20LOC. if you're a fan of Claude Research they ALSO dropped how to add

RT @anshulkundaje: The benchmarking is also thorough using difficult and strong benchmarks introduced by several papers. Also they do exten…

RT @_yji_: Pfizer (the authors here) is the end user, they shouldn't be the ones having to call out papers from @NatureBiotech . What is th…

I recently read Michael Polanyi — the idea that personal tacit knowledge is where the new stuff really comes from is something I can't stop seeing everywhere now. > Paraphrasing something @MelMitchell1 pointed out to me, if you define jobs in terms of tasks maybe you're…

I find the story of AI and radiology fascinating. Of course, Hinton's prediction was wrong* and tech advances don't automatically and straightforwardly cause job replacement — that's not the interesting part. Radiology has embraced AI enthusiastically, and the labor force is

RT @NeelNanda5: An unexpected consequence of mass llm use: fake citations of me show up in Google scholar that seem hallucinated from a pro…

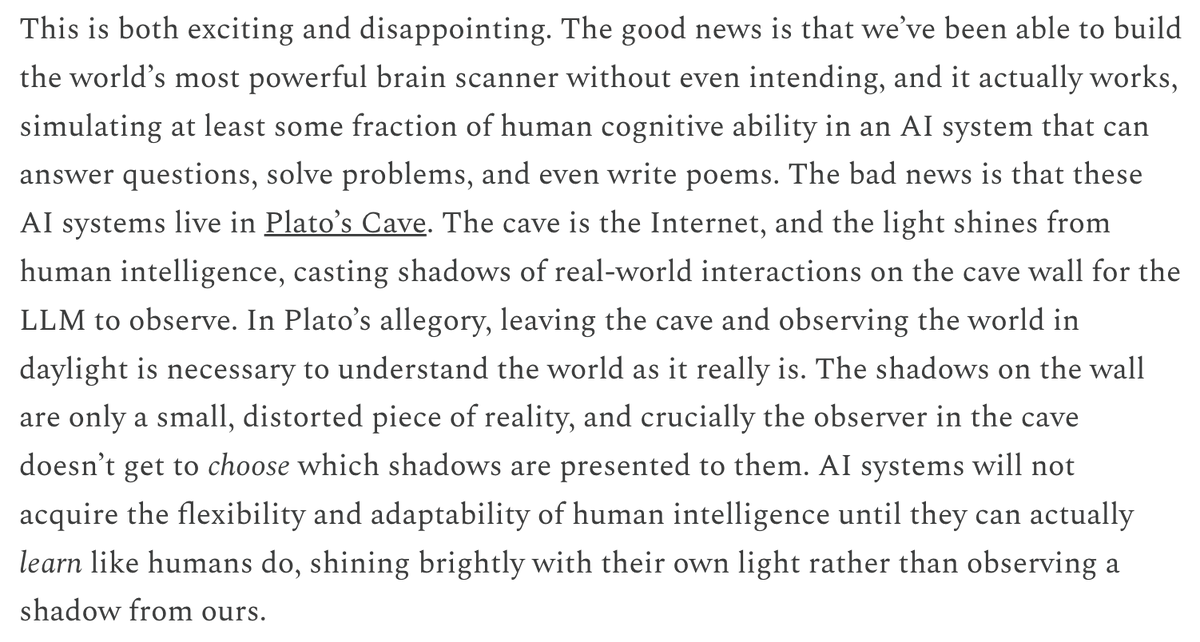

Reminds me of how @yudapearl has often used Plato's cave metaphor to explain the lack of causal information in observational data. But this is an even more apt metaphor since LLMs indirectly learn causal information by learning from human experiences in the real world.

I'm surprised this post didn't get more attention. Most papers arguing we are far from AGI just show LLMs failing on tasks too big for their context window. Sergey identifies something more fundamental: while LLMs learned to mimic intelligence from internet data, they never had

Entirely well deserved. @awaisaftab, I've been reading your work for 6+ years. You've been creating a bridge between decades of writing in philosophy of psychiatry and drawing out its implications in clinical practice. There isn't much principled and scientific testing of…

The latest issue of Lancet Psychiatry contains a profile of me, in which they describe me as “one of the discipline’s foremost public intellectuals”!!!

"Are you willing to just set a timer for just _ minutes to think about the thing or work on the thing" helps too, where _ = 1 to 10 minutes.

Amazing how big the quality of life improvements are downstream of “let me take this off future me’s plate.” It's not just shifting work up in time — it’s saving you all the mental friction b/w now & when you do it. Total psychic cost is the integral of cognitive load over time.

RT @imvaddi: @andrewgwils I was reading "Breaking Through" by Katalin Karikó, and the book ends with this note relevant in this context--"I…
