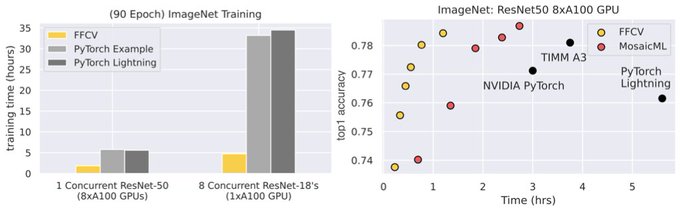

ImageNet is the new CIFAR! My students made FFCV (), a drop-in data loading library for training models *fast* (e.g., ImageNet in half an hour on 1 GPU, CIFAR in half a minute).

FFCV speeds up ~any existing training code (no training tricks needed) (1/3)
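To put "ImageNet in half an hour on 1 GPU" in perspective, here is a back-of-the-envelope sketch of the throughput the whole pipeline (reading, decoding, augmenting, training) would have to sustain. The epoch counts are hypothetical, since the thread only gives the wall-clock time. The only fixed number is the standard ImageNet-1k training-set size of 1,281,167 images.

```python
# Standard ImageNet-1k (ILSVRC-2012) training-set size.
IMAGENET_TRAIN_IMAGES = 1_281_167


def required_throughput(num_images: int, epochs: int, minutes: float) -> float:
    """Images per second the pipeline must sustain to finish `epochs` passes in `minutes`."""
    total_images = num_images * epochs
    return total_images / (minutes * 60)


if __name__ == "__main__":
    # Hypothetical epoch counts: the thread states only the 30-minute budget.
    for epochs in (16, 24, 32):
        rate = required_throughput(IMAGENET_TRAIN_IMAGES, epochs, minutes=30)
        print(f"{epochs} epochs in 30 min -> {rate:,.0f} images/s")
```

For these assumed epoch counts, the loader has to deliver on the order of ten thousand or more images per second to a single GPU. At that rate, data loading can easily become the limiting factor.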


FFCV is easy to use, minimally invasive, fast, and flexible: . We're really excited both to release FFCV today and to start unveiling (soon!) some of the large-scale empirical work it has enabled us to perform on an academic budget. (2/3)


You can start using FFCV today: check out the repo () and docs (). We even have a Slack! Stay tuned for a blog post and a paper explaining the details. w/ @gpoleclerc @andrew_ilyas @logan_engstrom @smsampark @hadisalmanx (3/3)


PS A few examples: in 30 min, we can train ResNet-18 to 67% ImageNet acc on *one A100*. In 20 mins, ResNet-50 to 75.6% on a p4d AWS machine (<$5!). CIFAR costs 2 cents/model. My students tell me this is fast ;) [More seriously, we haven't seen anything in PyTorch that compares.]


@aleks_madry

Impressive. At some point I should compile a list of libraries and techniques that demonstrate that computers are significantly faster than we think.


@aleks_madry

(and team), this is really cool! Nice job.

A few points on the image I posted. Excited to get PyTorch Lightning (PL) working with FFCV so you can get both benefits (in addition to things like DeepSpeed, etc.).


@aleks_madry

Can you talk about the Quasi Randomness option? What properties of the randomness have you found to be important for good training?
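Here is a sketch of one plausible reading of "quasi-random" ordering. It rests entirely on our own assumptions: contiguous blocks of the dataset are visited in random order, and samples are shuffled only within each block. This trades some randomness for mostly local reads, which presumably matters when the data does not fit in RAM. The function name and the `block_size` parameter are hypothetical, and FFCV's actual quasi-random option may work differently.

```python
import random
from typing import List


def quasi_random_order(num_samples: int, block_size: int, seed: int = 0) -> List[int]:
    """Hypothetical block-wise shuffle (not FFCV's implementation).

    Splits indices 0..num_samples-1 into contiguous blocks, shuffles the
    order of the blocks, then shuffles samples inside each block, so that
    consecutive reads stay within one region of the file.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    rng = random.Random(seed)
    blocks = [
        list(range(start, min(start + block_size, num_samples)))
        for start in range(0, num_samples, block_size)
    ]
    rng.shuffle(blocks)       # randomize which region is read next
    order: List[int] = []
    for block in blocks:
        rng.shuffle(block)    # randomize sample order inside that region
        order.extend(block)
    return order


if __name__ == "__main__":
    print(quasi_random_order(10, block_size=4, seed=0))
```

Both extremes of `block_size` reduce to a full shuffle. With `block_size=1`, every sample is its own block. With `block_size >= num_samples`, there is a single block, which gives no locality at all. Intermediate values trade randomness for read locality.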


@aleks_madry

What does 8 Concurrent ResNet-18s on 1xA100 mean? Really: 8 on the same GPU in 5 hours total for all of them?


@aleks_madry

PyTorch Lightning is 10x slower 😳

@_willfalcon

@PyTorchLightnin

Any way to get those speedups in Lightning? 😇


@aleks_madry

Wait whaaaaat? Sounds very cool -- how often is I/O the bottleneck, though? I thought algebraic computations usually were.
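The I/O question can be answered empirically for a given setup. Time how long each training step waits for its next batch, and separately how long the step itself takes. The sketch below is minimal and framework-agnostic. `profile_loop` and `slow_batches` are hypothetical helpers, not part of FFCV or PyTorch. On a GPU, kernels launch asynchronously, so `step` should synchronize (e.g., with `torch.cuda.synchronize()`); otherwise the measured split between waiting and compute can be misleading.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple, TypeVar

Batch = TypeVar("Batch")


def profile_loop(batches: Iterable[Batch], step: Callable[[Batch], None]) -> Tuple[float, float]:
    """Return (seconds spent waiting for batches, seconds spent inside `step`)."""
    wait_s = 0.0
    compute_s = 0.0
    it = iter(batches)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)      # time spent here is data loading / I/O
        except StopIteration:
            break
        t1 = time.perf_counter()
        step(batch)               # time spent here is the training step
        t2 = time.perf_counter()
        wait_s += t1 - t0
        compute_s += t2 - t1
    return wait_s, compute_s


def slow_batches(n: int, delay: float) -> Iterator[int]:
    """Hypothetical stand-in for a loader that spends `delay` seconds per batch."""
    for i in range(n):
        time.sleep(delay)         # simulates reading + decoding images
        yield i


if __name__ == "__main__":
    wait, compute = profile_loop(slow_batches(5, 0.02), lambda b: time.sleep(0.005))
    print(f"waiting on data: {wait:.3f}s, compute: {compute:.3f}s")
```

If the waiting time dominates, the GPU is being starved, and a faster loader helps regardless of the model.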
