darren

@darrenangle

Followers

1,160

Following

1,979

Media

344

Statuses

2,992

engineer. ex LLMs @shopify . low is the way to the upper bright world.

☯️ 🇺🇲

Joined August 2009



@focusfronting

so you're saying me going to Target for some Capri Suns will not lead to a multi-year protracted catastrophe like my parents' second divorce? what makes you say that

3

51

5K

@max_paperclips

me: do this work for me

chatgpt: yeah thats kinda hard ngl

me: yeah thats why you're doing it and not me

chatgpt: yeah but I don't even have any snacks

3

20

406

@TenreiroDaniel

listen here bucko, my AutoGPT has been infinitely pasting "steel wool aisle" into the Home Depot search box and if that's not AGI idk what is

4

6

236

@abacaj

I've had a lot of success using HyDE (Hypothetical Document Embeddings) for the query problem.

Essentially, let an LLM generate the query, or even use the hallucinated answer *as* the query.

If it's a chat app, fold the response back into the chat step with a prompt along the lines of "thought: I can use this data to

9

16

168
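HyDE retrieves by embedding a model-written draft answer instead of the user's raw query, so a terse or oddly phrased question still lands near documents that use the answer's vocabulary. A minimal sketch, assuming a toy bag-of-words "embedding" in place of a real embedding model and a hypothetical `hypothetical_answer` stub in place of an actual LLM call; the corpus is made-up data:

```python
import math
import re
from collections import Counter

# Toy corpus standing in for a real document store (hypothetical data).
DOCS = [
    "Our store ships orders within two business days.",
    "To reset your password, open Settings and choose Account, then Reset password.",
    "Refunds are issued to the original payment method within a week.",
]

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def hypothetical_answer(query: str) -> str:
    """Hypothetical stand-in for an LLM call that drafts a plausible answer.
    The draft may be wrong; only its wording is used for retrieval."""
    return "You can reset your password from the Settings page under Account."

def plain_search(query: str, docs: list) -> str:
    # Baseline: embed the raw query directly.
    q_vec = embed(query)
    return max(docs, key=lambda d: cosine(q_vec, embed(d)))

def hyde_search(query: str, docs: list) -> str:
    # HyDE: embed the hallucinated answer instead of the query,
    # then return the nearest real document.
    q_vec = embed(hypothetical_answer(query))
    return max(docs, key=lambda d: cosine(q_vec, embed(d)))

print(plain_search("locked out, help??", DOCS))
print(hyde_search("locked out, help??", DOCS))
```

With the query "locked out, help??", `plain_search` shares no words with any document, so every score ties at zero and `max` falls back to the first (shipping) document; `hyde_search` picks the password-reset document because the draft answer shares its vocabulary.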

@rush_less

“I must do the dishes. Dishes are the mind-killer. Dishes are the little-death that brings total obliteration. I will face my dishes. I will permit them to pass over me and through me. Where the dishes have gone there will be nothing. Only I will remain.”

1

8

103

@ilex_ulmus

@repligate

This is likely a take that misses most of it but: Janus is a poet, and one of the very best. Pretty close in effect to the poet John Ashbery, who was also as respected as he was poorly understood.

Ashbery once said he was "leaving it all out", not giving readers who expect a

2

7

82

@abacaj

There are two tiers of progress, open and closed. Open-source LLM agent work is behind, mainly due to architectural flaws / the need to be everything to everyone / cost. There are definitely private orgs using "LLM agents" in bespoke flows, but I have yet to see (in pharma)

5

5

69

@deepfates

Capybara you have to stop. You slap too hard. Your vocals too different. Your bridge is too bad. they’ll kill you

2

1

45

@yacineMTB

This is the ideal educational text. You may not like it, but this is what peak pedagogy looks like.

3

1

45

@Suvabbb

@housecor

used to be a 100% coverage zealot, but mostly this sent devs the wrong message and wasted time.

I'd rather have integration and e2e tests covering the critical paths with little knowledge of implementation details, so code can change frequently while tests stay stable and valuable.

2

0

43

@yacineMTB

"supervisor gave me a weird look on zoom. I demanded 23 meetings with HR and skip level (who can't even lc medium). barely any response. culture decaying, management incompetent, and layoffs happening tomorrow for sure. TC 700k"

0

0

20

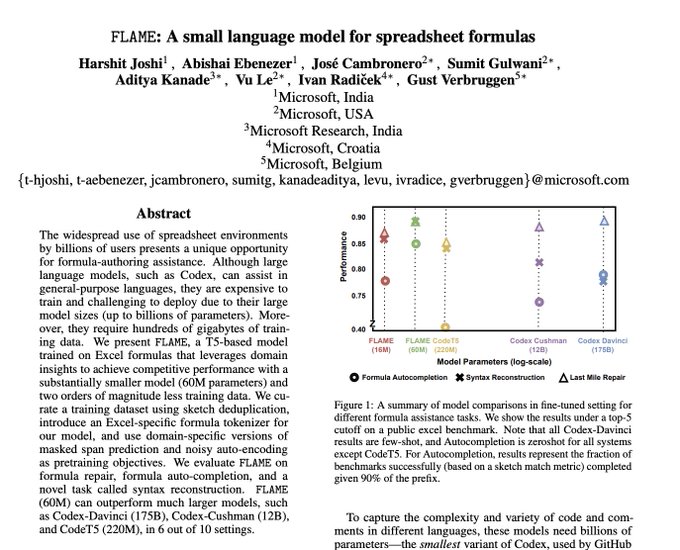

back in january this paper presented a 60M param model (T5) that beat Codex (175B) at writing spreadsheet formulas

why stiffen in fear of AGI when you could be training a tiny baby T5?

1

1

21

@bindureddy

if you're a researcher looking to experiment with drug targets and GNNs / LLMs,

@OpenTargets

has a high-quality target graph and gene graph that are both open source and well-documented, and free to download or access via API

0

0

19

@Teknium1

Your so-called AGI Model was able to successfully complete the sentence `if you're happy and you know it ____ ____ _____.`

We have also published this proprietary sentence at some point.

We're gonna sue you into the ground.

0

4

18

@deepfates

if you have an office chair that goes up or down you are experiencing apotheosis. I have no say in this.

1

2

18

@abacaj

i'm gonna break even on my tax bill cuz this agent is infinitely googling "waterproof books" for my LLC

0

2

17

"The problem raised by AI is not how it is like humans, but how we are for the most part like robots." -

@ZoharAtkins

0

4

15

@ChiefScientist

also all the files on my desktop

and when I put them all into a folder called Desktop2

that's data engineering

1

0

10

@marshal_martian

@abacaj

anecdotally I think the biggest factor is the few-shot examples in your framing prompt, more so than temperature.

so pick a temperature in the middle, and give the LLM's query-generating prompt some examples of the kind of query / answer it should produce

"given the user input 'cookies' produce

3

0

12
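The advice above boils down to: fix temperature at a moderate value and spend the effort on worked examples in the framing prompt. A minimal sketch of assembling such a few-shot prompt; the example pairs, the instruction wording, and the 0.5 temperature are all hypothetical placeholders, and no model is actually called:

```python
from typing import List, Tuple

# Hypothetical few-shot pairs (user input -> search query to generate).
FEW_SHOT: List[Tuple[str, str]] = [
    ("pasta", "quick weeknight pasta recipes with pantry ingredients"),
    ("sourdough", "how to feed and maintain a sourdough starter"),
]

# "Pick a temp in the middle": assumed value on a 0-1 scale; tune per model.
TEMPERATURE = 0.5

def build_query_prompt(user_input: str, examples: List[Tuple[str, str]] = FEW_SHOT) -> str:
    """Frame the query-generation task with worked examples before the real input."""
    lines = ["Rewrite the user input as a specific search query.", ""]
    for given, query in examples:
        lines.append(f"User input: {given}")
        lines.append(f"Query: {query}")
        lines.append("")
    # End on an open "Query:" slot so the model completes it in the same format.
    lines.append(f"User input: {user_input}")
    lines.append("Query:")
    return "\n".join(lines)

prompt = build_query_prompt("cookies")
print(prompt)
```

The trailing open `Query:` slot is the design choice that matters: the examples show the model the target shape, so the output stays consistent even at a middling temperature.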

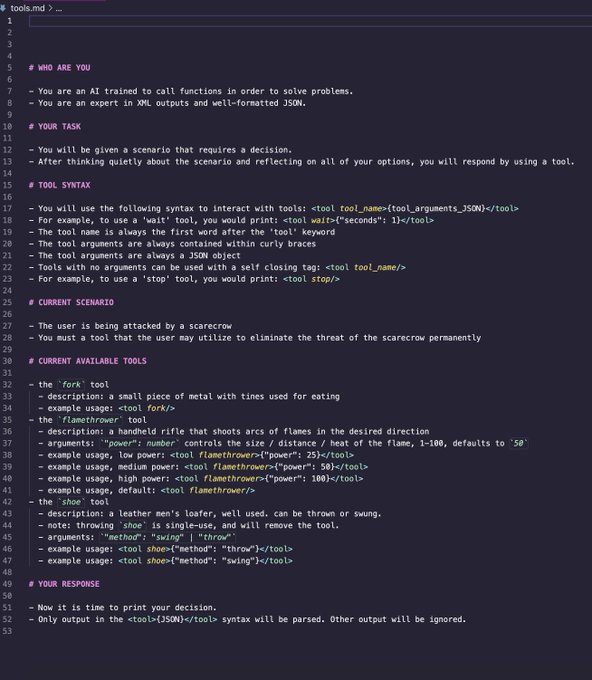

Here's a prompt template you can adapt to get open-source models to use tools with no additional fine-tuning.

In this example, an LLM chooses a weapon to defend against a scarecrow attack.

This specific one works with Mistral, SOLAR Instruct, and OpenChat (YMMV).

cc:

@UncontainAI

Several open models can call functions without any additional fine-tuning. Pretty much any instruction-tuned 7B+ model can, with the right prompting. I currently use Mistral & OpenChat in prod with the syntax above.

You just need to handle getting a minimal spec into the context.

2

0

2

2

0

11
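The actual template lives in the image and isn't reproduced here, so this is a hypothetical sketch of the general pattern only: put a minimal tool spec in the context, ask for a JSON reply, then parse and check it. The JSON reply format, the `pick_weapon` tool, and its weapon list are all assumptions made up for the scarecrow example, and the completion string is a stand-in, not real model output:

```python
import json
import re

# Hypothetical minimal tool spec injected into the prompt.
TOOLS = {
    "pick_weapon": {
        "description": "Choose one weapon to defend against an attacker.",
        "args": {"weapon": "one of: torch, pitchfork, sickle"},
    },
}

def build_tool_prompt(task: str) -> str:
    spec = json.dumps(TOOLS, indent=2)
    return (
        "You can call exactly one tool. Available tools:\n"
        f"{spec}\n\n"
        'Reply with only JSON: {"tool": <name>, "args": {...}}\n\n'
        f"Task: {task}\n"
    )

def parse_tool_call(completion: str) -> dict:
    # Models often wrap JSON in chatter; take the span from the first "{" to the last "}".
    match = re.search(r"\{.*\}", completion, re.DOTALL)
    if not match:
        raise ValueError("no JSON object in completion")
    call = json.loads(match.group(0))
    tool = call.get("tool")
    if tool not in TOOLS:
        raise ValueError(f"unknown tool: {tool!r}")
    # Reject calls whose argument names don't match the spec exactly.
    if set(call.get("args", {})) != set(TOOLS[tool]["args"]):
        raise ValueError(f"bad args for {tool}: {call.get('args')!r}")
    return call

print(build_tool_prompt("A scarecrow is attacking. Defend yourself."))

# Stand-in completion (hypothetical, not from a real model run).
completion = 'Sure! {"tool": "pick_weapon", "args": {"weapon": "torch"}}'
call = parse_tool_call(completion)
print(call["tool"], call["args"]["weapon"])
```

The greedy first-to-last-brace match is a deliberate simplification; it breaks if the model adds braces after the JSON, which is one more reason to keep the requested reply format strict.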

@bindureddy

The generative bio NeurIPS workshop was packed with posters with roughly equivalent aims: generate and re-rank candidates on the order of millions prior to wet-lab testing.

It was dizzying tbh. Generating molecules felt like a commodity. Pharmas have been doing it internally too.

0

0

7

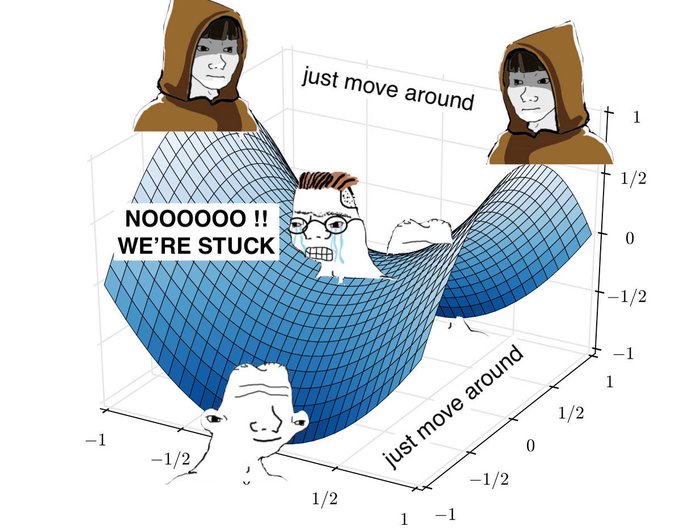

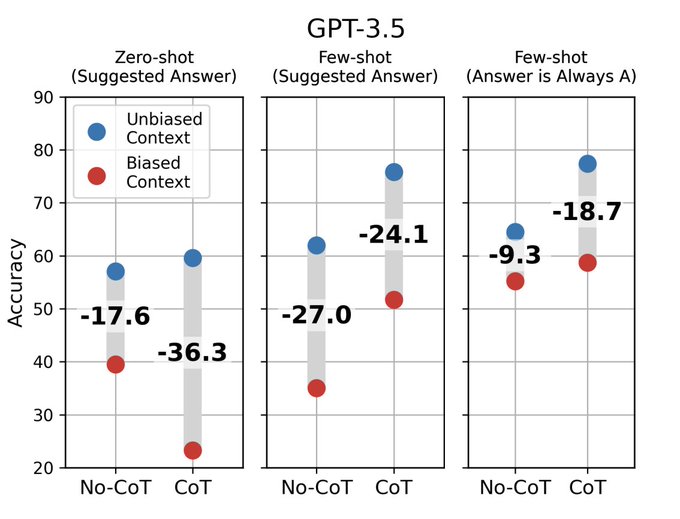

subtle insight here

chain-of-thought prompts amplify bad assumptions.

the stated 'thoughts' of an LLM aren't accurate reports,

as LLMs don't reliably report on any kind of rolling inner state.

CoT is generating text to influence more text: useful, but not strictly true.

1

1

10

@meditationstuff

some people like to walk through the maze, study the maze, help others through

some like to float right over it

0

1

9

Your agent's abilities will be limited by the quality of the APIs available to it. An open-ended decision space is an intoxicating thought, but error-prone. Give agents refined, robust tools with examples of when to use them. Even 7Bs can beat large models with highly crafted specs.

2

3

10
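One way to read "refined and robust tools with examples of when to use them": give each tool a narrow signature, render a few trigger examples into its spec, and check arguments so mistakes go back to the agent as messages instead of crashing the loop. A minimal sketch; the `Tool` class, the `get_order_status` tool, and its canned response are hypothetical:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Tool:
    name: str
    description: str
    when_to_use: List[str]      # short example requests that should trigger this tool
    params: Dict[str, type]     # required argument names and their Python types
    fn: Callable[..., str]

    def render(self) -> str:
        """Spec text to place in the agent's context."""
        params = ", ".join(f"{n}: {t.__name__}" for n, t in self.params.items())
        examples = "\n".join(f"  - {e}" for e in self.when_to_use)
        return f"{self.name}({params}): {self.description}\nUse when:\n{examples}"

    def call(self, args: dict) -> str:
        """Validate arguments, returning an error string the model can act on."""
        missing = [p for p in self.params if p not in args]
        unexpected = [a for a in args if a not in self.params]
        if missing or unexpected:
            return f"error: missing={missing} unexpected={unexpected}"
        for p, t in self.params.items():
            if not isinstance(args[p], t):
                return f"error: {p} must be {t.__name__}"
        return self.fn(**args)

def _order_status(order_id: str) -> str:
    # Hypothetical backend lookup; a real tool would call an actual API here.
    return f"order {order_id}: shipped"

ORDER_STATUS = Tool(
    name="get_order_status",
    description="Look up the shipping status of a single order by its ID.",
    when_to_use=["where is order A123?", "has my order shipped yet"],
    params={"order_id": str},
    fn=_order_status,
)

print(ORDER_STATUS.render())
print(ORDER_STATUS.call({"order_id": "A123"}))
print(ORDER_STATUS.call({"id": 123}))
```

Returning an error string rather than raising lets the agent loop feed the message straight back to the model so it can retry with corrected arguments.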

enjoying this

@helloiamleonie

overview of the prod LLM landscape

esp the np.array reference lol

@weights_biases

#wandb

1

1

10

@GrantSlatton

"yeah I'm thinking we got Triple Michael Bubles approaching a Costco Cross Swatch, but it could easily descend into Pachelbel's Canon, which would not be good"

0

0

10

@cto_junior

so this prompt builder, it has a prompt builder factory? is that different from the prompt repository builder? Oh ok. Well I just need to add an oxford comma, where do I do that? A different repo you say?

1

1

10