Grant♟️

@granawkins

Followers: 10K · Following: 52K · Media: 4K · Statuses: 15K

American Diaspora | Kardashev-2 or bust | core dev Mentat | builder of curiosities: https://t.co/LHAqqpGqxQ https://t.co/Ue0DBYDipg Mailogy and Rawdog

Joined November 2010

Unbelievable. 30 months after ChatGPT, the best answer is "build your own using an API key and vector embeddings database like Chroma". How is this possible?

What is currently the best solution for turning a large collection of personal notes and chats into an LLM-interrogable dataset? It seems like most off-the-shelf options can't keep context at scale.

@cosine_distance Bro, we need a follow-up post saying you brought her flowers and asked her out to play pickleball. Doesn't matter if she says yes or no; anything less is straight-up antisocial.

"Lemme just turn down some of the highest-salary positions in history and educate the proles".

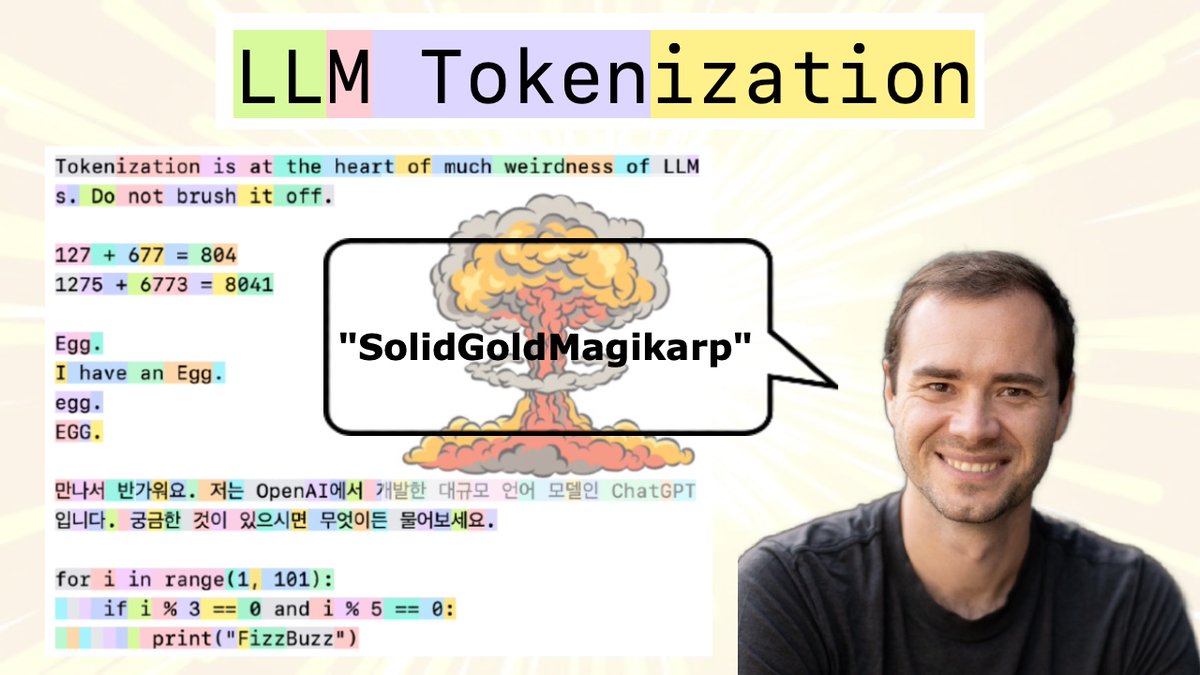

New (2h13m 😅) lecture: "Let's build the GPT Tokenizer". Tokenizers are a completely separate stage of the LLM pipeline: they have their own training set, training algorithm (Byte Pair Encoding), and after training implement two functions: encode() from strings to tokens, and

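The post above describes tokenizer training via Byte Pair Encoding followed by an encode() step from strings to tokens. As a rough illustration only, here is a minimal byte-level BPE sketch in Python; it is not the lecture's code. The helper names `get_pair_counts`, `merge`, `train_bpe` and `decode` are hypothetical, and `decode` is included purely as an assumed inverse of `encode` for this sketch, since the post is cut off.

```python
from collections import Counter


def get_pair_counts(ids: list[int]) -> Counter:
    """Count how often each adjacent pair of token ids occurs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids: list[int], pair: tuple[int, int], new_id: int) -> list[int]:
    """Replace every non-overlapping occurrence of `pair` in `ids` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text: str, num_merges: int) -> dict[tuple[int, int], int]:
    """Hypothetical trainer: learn up to `num_merges` merges on top of the 256 raw bytes."""
    ids = list(text.encode("utf-8"))
    merges: dict[tuple[int, int], int] = {}
    for k in range(num_merges):
        counts = get_pair_counts(ids)
        if not counts:
            break
        pair = max(counts, key=counts.get)  # most frequent adjacent pair
        new_id = 256 + k                    # new token ids start after the byte range
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return merges


def encode(text: str, merges: dict[tuple[int, int], int]) -> list[int]:
    """String -> token ids, applying learned merges in the order they were learned."""
    ids = list(text.encode("utf-8"))
    while len(ids) >= 2:
        counts = get_pair_counts(ids)
        # choose the pair learned earliest (lowest merged id); unknown pairs rank last
        pair = min(counts, key=lambda p: merges.get(p, float("inf")))
        if pair not in merges:
            break  # nothing left to merge
        ids = merge(ids, pair, merges[pair])
    return ids


def decode(ids: list[int], merges: dict[tuple[int, int], int]) -> str:
    """Assumed inverse of encode: expand ids back to bytes, then to a string."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in sorted(merges.items(), key=lambda kv: kv[1]):
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")
```

For example, training three merges on `"aaabdaaabac"` shrinks its 11 bytes to 5 tokens, and `decode(encode(s, merges), merges)` returns `s` unchanged, because each merge only concatenates existing byte sequences.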

@lightxvision Find the biggest, baddest looking dude you can and punch him straight in the mouth.

@fortelabs This right here.

the Mechanistic Universe is a lie - everything is diffusion. atoms, electrical charges, the strong and weak forces - just heuristics (generated lazily) with high enough resolution that we mostly don’t notice.

Wow, diffusion models (used in AI image generation) are also game engines - a type of world simulation. By predicting the next frame of the classic shooter DOOM, you get a playable game at 20 fps without any underlying real game engine. This video is from the diffusion model.

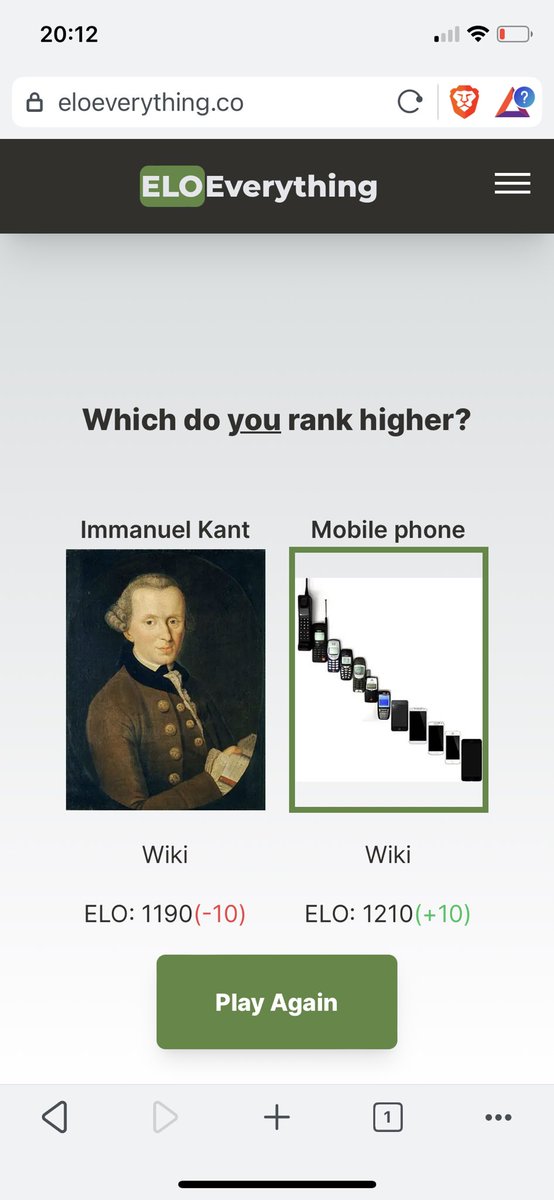

181,295 games of #eloeverything have been played since I reset the database ~12 hours ago.
