Zachary Nado

@zacharynado

Followers: 10,002

Following: 652

Media: 224

Statuses: 8,231

Research engineer @googlebrain. Past: software intern @SpaceX, ugrad researcher in @tserre lab @BrownUniversity. All opinions my own.

Boston, MA

Joined January 2017


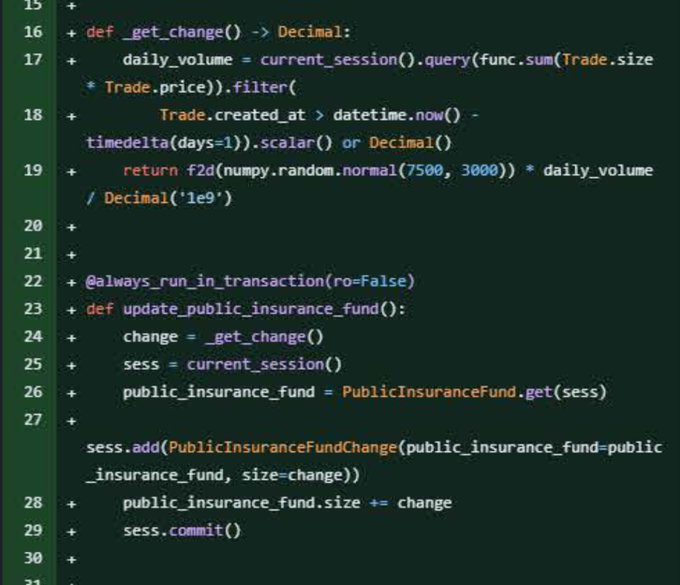

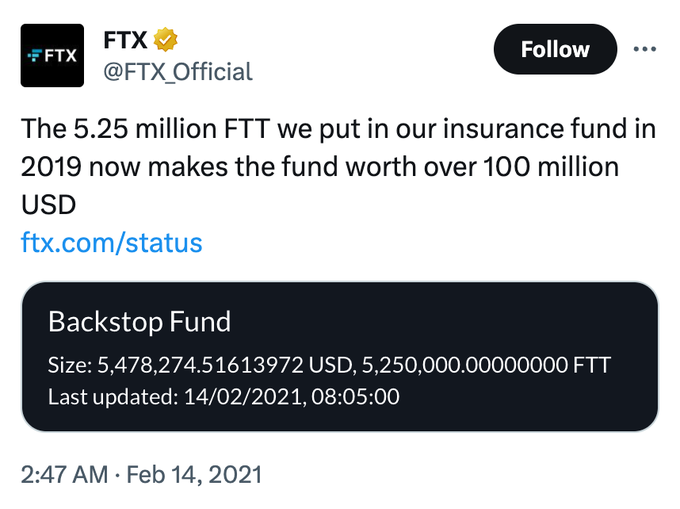

>importing numpy without renaming to np

FTX was never gonna make it

23

166

2K

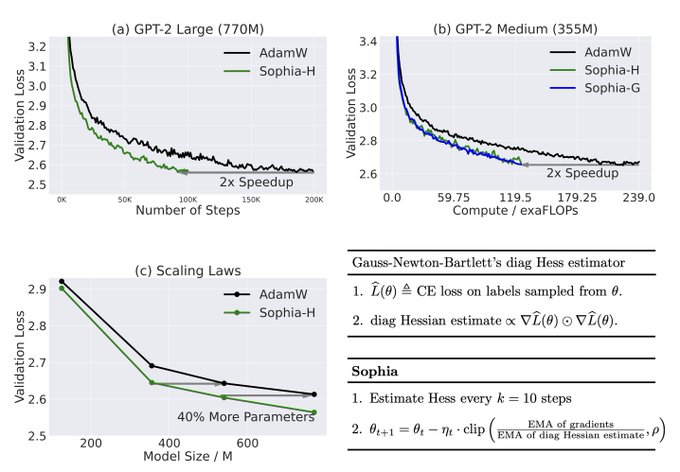

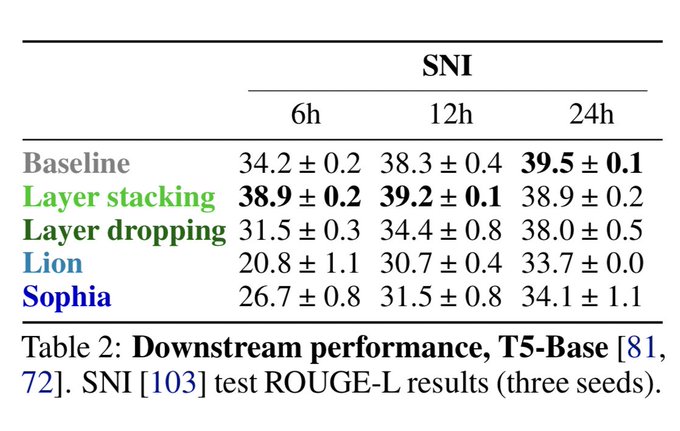

tl;dr submit a training algorithm* that is faster** than Adam*** and win $10,000 💸🚀

*a set of hparams, self-tuning algorithm, and/or update rule

**see rules for how we measure speed

***beat all submissions, currently the best is NAdamW in wallclock and DistShampoo in steps

To highlight the importance of #ML training & algorithmic efficiency, we’re excited to provide compute resources to help evaluate the best submissions to the @MLCommons AlgoPerf training algorithms competition, w/ a chance to win a prize from MLCommons!

22

115

467

10

49

373

here we go again with the classic once-a-month new optimizer hype cycle

8

12

301

"Before OpenAI came onto the scene, machine learning research was really hard—so much so that, a few years ago, only people with Ph.D.s could effectively build new AI models or applications." lol, lmao even

9

11

292

@caffeinefused

I think "AI" will be super useful long term but the over promising of AGI next year by the tech bro hype boys is getting old

2

4

285

🌝

New: Google quietly scrapped a set of Gemini launch events planned for next week, delaying the model’s release to early next year.

w/ @amir

37

48

398

8

5

270

I explain ML and DL concepts to PhDs all day every day, and vice versa, and I have a bachelors

8

5

254

@SebastianSzturo

we did! ⚡

12

0

250

Wrote my first blog post at , about generating #pusheen with AI! There's a version for those with and without an AI background, so don't let that hold you back from reading!

5

54

208

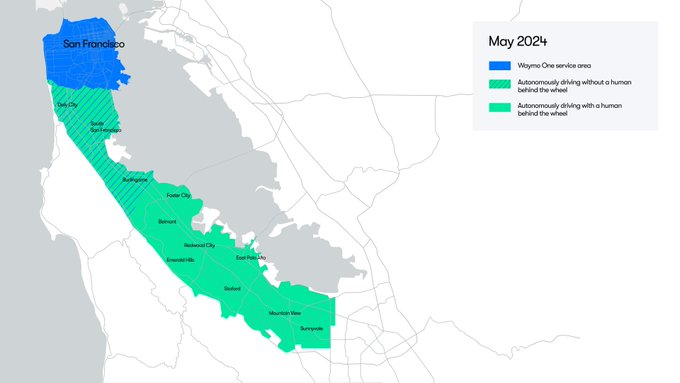

I haven't kept up with self driving details much, genuine question, are there any competitors even close to Waymo?

39

0

202

A thread on our latest optimizers work! We tune Nesterov/Adam to match performance of LARS/LAMB on their more commonly used workloads. We (@jmgilmer, Chris Shallue, @_arohan_, @GeorgeEDahl) do this to provide more competitive baselines for large-batch training speed measurements

3

30

159

squeezing model sizes down is just as important as scaling up in my opinion, and 1.5 Flash ⚡️ is so incredibly capable while so small and cheap it's been blowing our minds 🤯

it has been an incredible privilege and so much fun building this model (sometimes too much fun)! ⚡️

Today, we’re excited to introduce a new Gemini model: 1.5 Flash. ⚡

It’s a lighter weight model compared to 1.5 Pro and optimized for tasks where low latency and cost matter - like chat applications, extracting data from long documents and more.

#GoogleIO

21

143

697

13

8

146

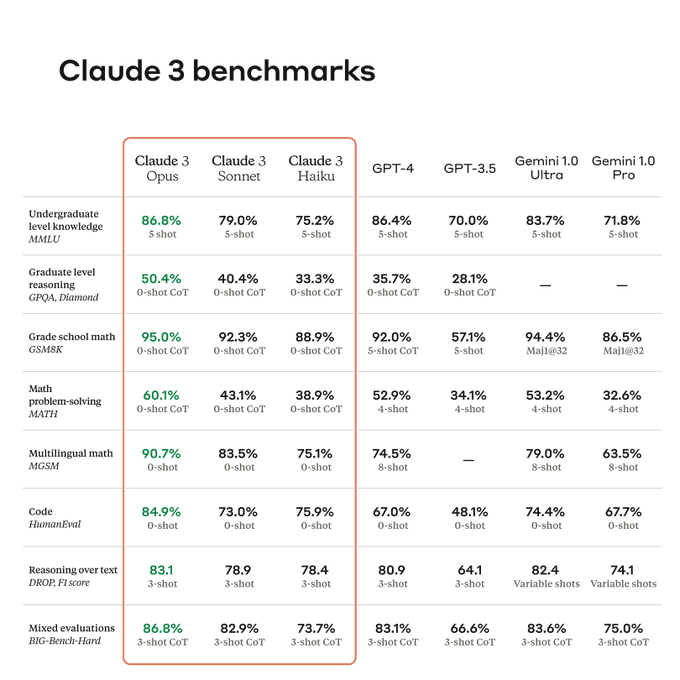

very impressive models, congrats to everyone involved!

also nice to know that we are not the only ones bad at model size naming

5

2

119

people are going to keep pushing this with no regard for quality/factualness, maybe eventually the hype will die down but given how easily people consume misinformation I'm not sure

6

7

112

and this is only Gemini Pro that's beating GPT4-V, just wait for Ultra

9

10

101

the real announcement openai timed with Google I/O

After almost a decade, I have made the decision to leave OpenAI. The company’s trajectory has been nothing short of miraculous, and I’m confident that OpenAI will build AGI that is both safe and beneficial under the leadership of @sama, @gdb, @miramurati and now, under the

2K

3K

26K

4

3

101

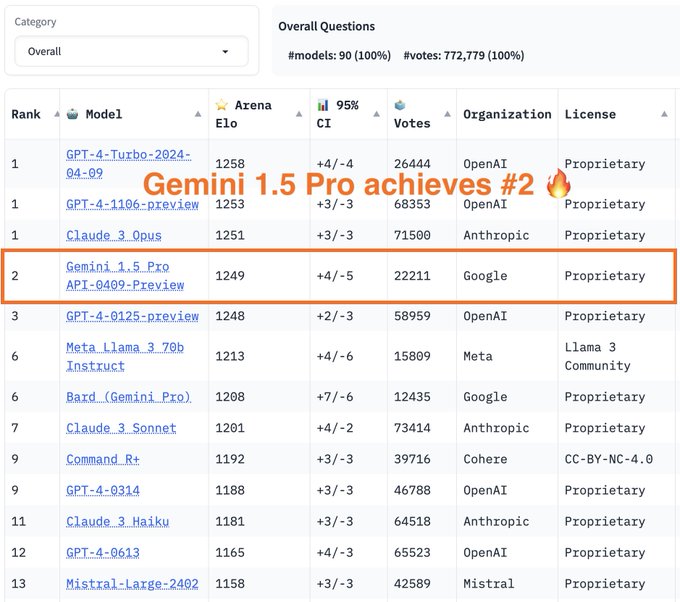

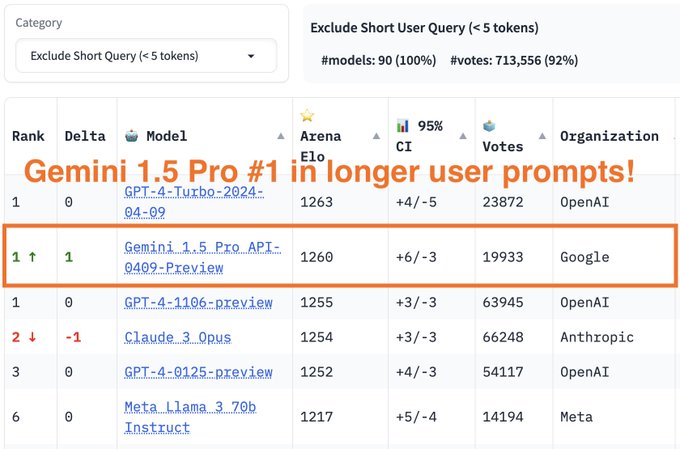

1.5 Pro is a very, very good model 🚀🚀

but even more excited for what we have in store 🕺

7

5

92

soon

🔥Breaking News from Arena

Google's Bard has just made a stunning leap, surpassing GPT-4 to the SECOND SPOT on the leaderboard! Big congrats to @Google for the remarkable achievement!

The race is heating up like never before! Super excited to see what's next for Bard + Gemini

155

630

3K

5

4

89

@bryancsk

pretty sure the issue isn't the wages but the fact they read a novel worth of disturbing content or view child porn or gore each day w/o health benefits to help with that? this is the same company as and employees still don't seem to be getting help

3

1

77

I'll start: we resubmitted a paper (with additional results based on previous reviews!) and received the literally same exact, character-for-character, copy-pasted review as we did for NeurIPS, which is of course a max confidence reject.

8

0

79

@stissle22

@SebastianSzturo

what does that even mean? we didn't launch it "just to say we launched" ??? it's an actual product you can use right now, there are plenty of people who have been since Tues

3

0

73

papers like this just reinforce my intuition that LM training setups are underdeveloped because everyone obsessed over scaling up num params. there is so much more to look into besides just the model size!!

1

5

67

this is strictly worse than just browsing a shopping website. how are people unironically investing in this

6

3

61

on top of the new and impressive capabilities of Pro 1.5, Gemini 1.5 Flash is such a good model for how fast it is ⚡️⚡️⚡️

2

3

58

deep learning infra is hard to get right but so important, advancements in it enable totally new lines of research

Excited to share Penzai, a JAX research toolkit from @GoogleDeepMind for building, editing, and visualizing neural networks! Penzai makes it easy to see model internals and lets you inject custom logic anywhere.

Check it out on GitHub:

43

426

2K

0

6

59

@hahahahohohe

@AnthropicAI

do you have access to Gemini 1.5 Pro to try this as a comparison point? if not DM me and we'll get you access

2

0

55

🎉🎉 our NeurIPS workshop on how to train neural nets has been accepted! 💯 please submit your weird tips & tricks on NN training, we can't wait to discuss them all together 😃🔥🖥️

The CfP for our @NeurIPSConf workshop *Has It Trained Yet* is out: .

If you train deep networks, you want to be at this workshop on December 2. And if you develop methods to train deep nets, you may want your work to be present there. Here’s why: 🧵

2

21

80

3

3

52

Parameter count is a silly metric to assert AI progress with, but I'm also not surprised

4

6

53

classic tech opinion of "invent futuristic vaporware" instead of doing the dirty work fixing policy issues

@Noahpinion

My heterodox take on US transit is that if infrastructure problems are too hard to solve, the transit of the future is airplanes, and we should just make airplanes better by (i) making them zero-carbon, and (ii) improving comfort by greatly cutting down airport security

170

45

996

10

3

42

detecting AI content is the next adversarial examples

tons of research will be spent on it only to come up with "defenses" that are broken within 1 day of publication

5

4

52

to no one's surprise, recently trendy techniques don't stand the test of time against a well tuned baseline!

Some excellent work by @jeankaddour and colleagues

“We find that their training, validation, and downstream gains vanish compared to a baseline with a fully-decayed learning rate”

☠️

5

33

186

3

4

52

Google I/O isn't the only AI announcement Gemini watched 🕺

Gemini and I also got a chance to watch the @OpenAI live announcement of gpt4o, using Project Astra! Congrats to the OpenAI team, super impressive work!

56

255

1K

2

5

48

this is only the beginning of the Google software ecosystem getting supercharged by AI

1

1

47

@araffin2

I've long argued for tuning epsilon, in Adam it can be interpreted as a damping/trust region radius term. See Section 2 of our paper

2

1

45

I'm disappointed they're too cowardly to actually launch in the middle of Google I/O

5

1

46

sign up for the wait-list here

Introducing Veo: our most capable generative video model. 🎥

It can create high-quality, 1080p clips that can go beyond 60 seconds.

From photorealism to surrealism and animation, it can tackle a range of cinematic styles. 🧵

#GoogleIO

148

957

4K

6

12

45

"“You can interrogate the data sets. You can interrogate the model. You can interrogate the code of Stable Diffusion and the other things we’re doing,” he said. “And we’re seeing it being improved all the time.”"

lol you can do all of that with a controlled API too

"In Silicon Valley, crypto and the metaverse are out. Generative A.I. is in."

@StabilityAI (nice pic of @EMostaque ;)

5

25

156

6

5

43

@typedfemale

sam walks up to a sr alignment engineer: "at ease. what have you been working on here?"

"i did my phd getting robots to solve rubiks cubes without resorting to chatbots, I'm continuing that with one burnt out effective altruist stanford ugrad"

sam: "shut the entire thing down"

2

2

43

2

0

40

Autoencoders meet Neural ODEs!

Excited to present my first work as a PhD student at @ANITI_Toulouse and @tserre-lab at @BrownUniversity with Rufin VanRullen and Thomas Serre: "Neural Optimal Control for Representation Learning". Preprint

Code & Notebook to come! Read more below!

1/9

1

22

64

0

4

41

maybe all the AI models training over this weekend will get an extra fun level of dropout

1

2

40