Yunzhu Li

@YunzhuLiYZ

Followers

4,747

Following

476

Media

54

Statuses

354

Assistant Professor of Computer Science @ UIUC @UofIllinois @IllinoisCS , Postdoc from @Stanford @StanfordSVL , PhD from @MIT_CSAIL . #Vision #Robotics #Learning

Champaign, IL

Joined January 2018

Don't wanna be here?

Send us removal request.

Explore trending content on Musk Viewer

UNCONDITIONAL LOVE BLANK

• 159619 Tweets

Spain

• 132470 Tweets

Eurocopa

• 123477 Tweets

#SixTONESANN

• 102138 Tweets

Drew

• 85702 Tweets

Lamine Yamal

• 76614 Tweets

Croatia

• 75375 Tweets

Suiza

• 57616 Tweets

Ronaldinho

• 56770 Tweets

#عيد_الاضحي

• 50519 Tweets

Morata

• 46329 Tweets

Carvajal

• 42752 Tweets

Pedri

• 42196 Tweets

Modric

• 40826 Tweets

Hungría

• 36874 Tweets

スペイン

• 30752 Tweets

صالح الاعمال

• 23619 Tweets

اسبانيا

• 22312 Tweets

大倉くん

• 19851 Tweets

Cucurella

• 19379 Tweets

Fabian Ruiz

• 18979 Tweets

Rodri

• 16477 Tweets

クロアチア

• 16217 Tweets

VIRAL STELL29

• 14285 Tweets

Eid Mubarak

• 12255 Tweets

Last Seen Profiles

Pinned Tweet

Career update: After a wonderful year at UIUC, our lab will be moving to the Computer Science Department at Columbia University this fall.

@ColumbiaCompSci

My time at UIUC has been incredible, thanks to the support from the entire department, especially Nancy. It was an honor

What do James Bartusek of UC Berkeley, Adam Block of MIT, John Hewitt of Stanford, Aleksander Holynski of Google DeepMind & Berkeley AI Research, Yunzhu Li of UIUC, and Silvia Sellan of U Toronto have in common? They are all joining

@ColumbiaCompSci

- meet the Super Six!

7

9

177

31

11

398

I'll join

@UofIllinois

as an Assistant Professor in

@uofigrainger

and

@IllinoisCS

in Fall'23.

Before that, I'll join

@StanfordSVL

as a Postdoc working with

@jiajunwu_cs

and

@drfeifei

.

Sincere thanks to all who have helped me during the journey! Super excited about what's ahead!

40

12

394

🎉 Excited to share that we've won the Best Systems Paper Award at

#CoRL2023

for our work on RoboCook!

A huge shoutout to the incredible team:

@HaochenShi74

(lead),

@HarryXu12

, Samuel Clarke, and

@jiajunwu_cs

.

Great to catch up with so many familiar faces at

#CoRL2023

today! We have three Orals this year, and two are award finalists!

Nov 7, 8:30-9:30 am (Oral), 2:45-3:30 pm (Poster)

RoboCook ()

- Finalist for Best Systems Paper Award

- Led by

@HaochenShi74

1

4

52

15

8

214

I had the pleasure of visiting

@CMU_Robotics

over the past two days to give a VASC seminar talk and a guest lecture. Thanks

@GuanyaShi

for the amazing host! 🙌

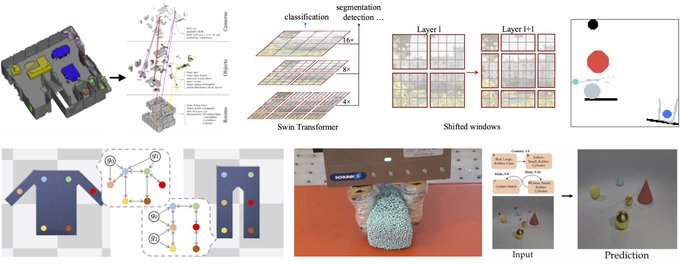

The seminar talk was about our recent work on "Foundation Models for Robotic Manipulation": 🤖

3

30

183

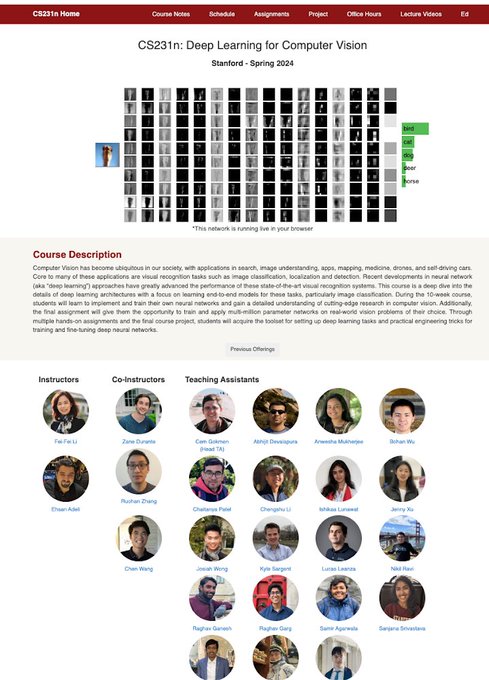

Absolutely thrilled to co-instruct CS231n with

@drfeifei

&

@RuohanGao1

this quarter!

@karpathy

&

@jcjohnss

introduced me to

#DeepLearning

for

#ComputerVision

through this very course 7 yrs ago.

Excited to return and inspire a new wave of students to dive into computer vision! 🚀

4

1

168

Excited to share our work on "Causal Discovery in Physical Systems from Videos" from my internship at

@NVIDIAAI

Paper

Website

Thanks to my amazing collaborators!

@animesh_garg

,

@AnimaAnandkumar

, Dieter Fox, Antonio Torralba

1/7

2

41

154

Introducing RoboEXP, a robotic system that explores! 🤖

When a robot enters a kitchen, it must find all the ingredients before preparing the food for you.

The exploration should not be random, and we use **foundation models** to tell the robot “what” and “how” to explore!

2

18

113

Introducing RoboCook, our new particle-based world modeling framework for dumpling making, a highly complicated long-horizon manipulation task using 15 tools.

Check out

@HaochenShi74

's detailed thread. Here, I discuss our exciting journey to date. (1/7)

1

12

81

Our new work studies a core question in robot manipulation: which **scene representation** to use? 🤖

Introducing D^3Fields: a 3D, dynamic, and semantic representation powered by foundation models. It supports a vast range of real-world manipulation tasks in a ZERO-SHOT manner!

0

13

78

Thank you

@_akhaliq

, for sharing our work recently presented at

#NeurIPS2023

!

Visit our project page for more details, demos, and to try it out on Google Colab:

Watch the full video of our robot manipulating letters to form the word "NeurIPS"! 🤖

1

5

55

In the following work led by Danny

@DannyDriess

, we explore the use of NeRF to learn compositional scene representations for model-based planning with a combination of

(1) implicit object encoders,

(2) graph-structured neural dynamics models,

(3) a latent-space RRT planner. (1/4)

Excited to share our preprint "Learning Multi-Object Dynamics with Compositional Neural Radiance Fields"

Paper:

Videos:

Amazing collaboration between

@DannyDriess

,

@YunzhuLiYZ

,

@huang_zhiao

, Russ Tedrake, Marc Toussaint. (1/7)

3

21

91

1

8

53

Great to catch up with so many familiar faces at

#CoRL2023

today! We have three Orals this year, and two are award finalists!

Nov 7, 8:30-9:30 am (Oral), 2:45-3:30 pm (Poster)

RoboCook ()

- Finalist for Best Systems Paper Award

- Led by

@HaochenShi74

1

4

52

Excited to share our new project, led by amazing

@wenlong_huang

, exploring large language and vision models (

#LLMs

& VLMs) for zero-shot

#Robotics

manipulation!

What's particularly interesting to me is demonstrated ability to **specify the goal** for embodied agents. 🧵👇(1/4)

1

9

43

Welcoming Kaifeng to the team! 🎉 Huge opportunities lie at the intersection of computer vision and robotics. 🚀 Excited for upcoming collaborations!!

Finally the moment. I will be joining the University of Illinois

@IllinoisCS

as a PhD student this fall! I will be working with Prof. Yunzhu Li

@YunzhuLiYZ

on exciting topics across computer vision, machine learning and robotics.

15

2

132

1

1

39

Robots writing “Hello World” in Chinese using granular pieces? 🤖👋

Accepted at

#RSS2023

, we present a dynamic-resolution model learning framework for object pile manipulation.

Adding to

@YXWangBot

's thread, I discuss the comparison with humans. (1/3)

1

3

38

Attending

#RSS2022

in NYC!

Check out our work

- RoboCraft on June 28 ()

- NeRF-RL at L-DOD workshop on June 27 ()

I'm also co-organizing the implicit representation workshop on July 1 (). Come and join us!

0

2

36

Fun fact: this project is inspired by the following Tom & Jerry video.

Key takeaways:

- We identify "what" objects require exploration.

- We understand "how" to interact with these objects.

- We "memorize" the details of what we have seen and explored to support downstream

0

5

36

Check out a perspective I co-authored with

@LuoYiyue

for

@ScienceMagazine

on intelligent textiles.

Intelligent fabrics, which can sense and communicate information scalably and unobtrusively, can fundamentally change how people interact with the world.

0

3

35

Check out our recent work on learning unsupervised keypoints for model-based reinforcement learning! Here is a nice summary of the highlights from

@peteflorence

Can robots model the world with keypoints, and learn how to see, predict, and control them into the future?

"Keypoints into the Future: Self-Supervised Correspondence in Model-Based Reinforcement Learning"

@lucas_manuelli

,

@YunzhuLiYZ

, me,

@rtedrake

(1/n)

5

32

143

1

5

34

Welcome to the team!! Excited to have you join us!! 🎉

1

1

34

I would like to share our ICML 2020 paper on "Visual Grounding of Learned Physical Models".

w Toru Lin, Kexin Yi,

@recursus

,

@dyamins

,

@jiajunwu_cs

, Josh Tenenbaum, & Antonio Torralba

Project page:

Video:

1/8

1

5

33

Our scalable tactile glove introduced in a Nature 2019 paper is collected by the MIT Museum!!

Joint work with Subra (lead author), Petr, Jun-Yan

@junyanz89

, Antonio, and Wojciech.

Thanks to Yiyue

@LuoYiyue

for making a new one specifically for display!

0

1

27

This work is inspired by

@lucacarlone1

's amazing works on building 3D scene graphs and by

@_krishna_murthy

's fantastic ConceptGraph.

We add a critical new treatment: actions. The robot does not merely observe the environment but interacts with it to discover all hidden items.

1

4

27

Drake: Model-based design in the age of robotics and machine learning by Russ and

@ToyotaResearch

0

5

21

Join me at

#ICRA2023

as I present our latest work in learning structured dynamics models for deformable object manipulation, from manipulating dough and granular objects to crafting dumplings.

Don't miss it and the wealth of knowledge from other fantastic speakers!

#Robotics

#AI

Our 3rd workshop on representing and manipulating deformable objects will be happening @

#ICRA2023

next Monday (29 May). We hope to see you there.

0

4

10

0

2

20

Join us this morning at

#CVPR2023

as we present the ObjectFolder Benchmark!

Our work integrates multisensory object representations, incorporating vision, touch, and sound, benchmarked around tasks like recognition, reconstruction, and robotic manipulation.

Come chat with us!

To be presented at

#CVPR2023

on Thursday morning, “The ObjectFolder Benchmark: Multisensory Learning with Neural and Real Objects”

Project page:

Paper:

Demo:

Poster session: THU-AM-076

1

6

29

0

3

18

I will give a talk at the

#ICLR2021

simDL workshop tomorrow about our recent work on learning-based dynamics modeling for physical inference and control. Come and chat with us!

0

2

16

I would also like to refer you to a related work by

@sindy_loewe

,

@david_madras

, Rich Zemel, and

@wellingmax

, which leverages a shared dynamics model and learns to infer causal graphs from time-series data using Amortized Causal Discovery:

1

2

14

Our work shows NeRF learns scene representations better at capturing the 3D structure of the environment, which turn out to be surprisingly useful for RL in tasks that require 3D reasoning!

Kudos to the lead authors,

@DannyDriess

and

@IngmarSchubert

!

New preprint on Reinforcement Learning with Neural Radiance Fields

Paper:

Video:

Amazing collaboration between

@DannyDriess

,

@IngmarSchubert

,

@peteflorence

,

@YunzhuLiYZ

,

@Marc__Toussaint

(1/6)

3

16

57

0

0

14

My talk at

#ICLR2021

simDL workshop is now available on YouTube:

I discussed why we want to learn simulators from data and how different modeling choices affect (1) the generalization power and (2) their usage in physical inference and model-based control.

0

0

13

Specifically, we employ the technique called "Transporter" developed by

@tejasdkulkarni

et al. () as our perception module, which assigns keypoints over the foreground of the images and consistently tracks the objects over time across different frames. 4/7

1

2

13

Thanks for the highlight,

@_akhaliq

! We'll share further details on this project tomorrow.

0

0

13

Real-world data can vary widely across sources (e.g., Amazon warehouses, a fleet of self-driving cars, and photos taken by individual users).

Our new

#iclr2023

paper shows how decentralized self-supervised learning can be robust to such heterogeneity in decentralized datasets!

How can we do self-supervised learning on unlabeled data without sharing them? Super excited to share our work Dec-SSL

@ICLR

! We study decentralized self-supervised learning and try to understand its robustness and communication efficiency of it. Video:

1

4

11

0

0

13

The CVPR Tutorial on Graph-Structured Networks tomorrow will feature the line of works on representing learning with GNN. I will present our works on using GNN for physical inference and modeling-based control. Come and join us!

0

0

11

CNN featured our recent

#cvpr19

paper on cross-modal prediction between vision and touch. Joint work with

@junyanz89

, Russ Tedrake, and Antonio Torralba.

0

3

12

AI-generated 3D content holds immense potential to revolutionize a broad spectrum of applications. The automated creation of diverse 3D environments is crucial for training robots, serving as a key element in achieving widespread generalization. 🤖

Congratulations, Hao!! 🚀

📢Thrilled to announce sudoAI (

@sudoAI_

), founded by a group of leading AI talents and me!🚀

We are dedicated to revolutionizing digital & physical realms by crafting interactive AI-generated 3D environments!

Join our 3D Gen AI model waitlist today!

👉

14

102

427

0

0

12

Check out our

#CVPR2021

paper on building a tactile carpet for human pose estimation!

Imagine that in a workout, it can:

- recognize the activity

- count num of reps

- (potentially) calculate burned calories!

Poster @ Wed June 23, 10 PM – 12:30 AM EDT

1

0

11

Check out our Nature Electronics paper on Interaction Learning with Conformal Tactile Textiles! Joint work w/

@LuoYiyue

(lead author),

@pratyusha_PS

,

@showone20

,

@kui_wu

, Michael Foshey,

@lbc1245

, Tomas Palacios, Antonio Torralba, Wojciech Matusik

BREAKING: MIT "smart clothes" use special tactile fibers to sense a person’s movement & determine what pose they're in.

Potential applications:

🏀 coaching

♿ rehabilitation

👴🏽 elder care

Paper:

More: (v/

@NatureElectron

)

1

22

71

0

3

11

At

#CVPR2023

poster session this AM, we'll present our work on learning object-centric neural scattering functions for dynamic modeling of multi-object scenes, designed for robotic manipulation under extreme lighting.

Come chat with us and check

@stephentian_

's thread for more!

How can we enable robotic manipulation in multi-object scenes with potentially harsh lighting conditions?

At

#CVPR2023

, we’re presenting our recent work combining object-centric neural scattering functions and learned dynamics models to perform robotic control!

(1/6)

1

9

44

1

0

10

Thanks Jim! Like how humans sense the world, the foundation models for robots should be multimodal.

Check out the ObjectFolder Benchmark, our attempt towards a large-scale, real-world, multimodal object dataset, built for tasks like recognition, reconstruction, and manipulation.

0

1

10

There is a huge potential for the use of implicit representations in robotics. Are you interested in learning and advancing the forefront of this direction? Please consider participating and contributing to our

#RSS2022

workshop!

Are you interested in the role of implicit representations within robotics?

Then checkout our

#RSS2022

workshop on July 1st.

We also solicit 2-3 page extended abstracts as contributions! (1/4)

1

10

41

0

0

10

@cs231n

has always been the computer vision and deep learning course I recommend to anyone interested in this area. It introduced me to the field, and I was extremely fortunate to contribute back last year. I'm sure this year will be amazing as well!

0

0

10

New

#CoRL22

paper on long-horizon plasticine manipulation using tools like cutter, pusher, and roller.

We made both *temporal* and *spatial* abstractions for more effective planning of the skill sequence.

Kudos to all authors, especially Xingyu

@Xingyu2017

and Carl

@carl_qi98

!!

Object-centric representations and hierarchical reasoning are key to generalization. How can we manipulate deformables, where “objectness” changes over time? Our method finds a way and solves challenging real-world dough manipulation tasks!

#CoRL2022

2

25

116

0

0

9

The reconstructed low-level memory allows us to inspect what's inside the cabinets.

Check out our website for code and examples including drawers, doors, Matryoshka dolls, fabric, etc.

Kudos to

@jiang_hanxiao

for his fantastic job leading this project!!

1

1

8

Excited to co-organize the workshop on Multi-Agent Interaction and Relational Reasoning at ICCV21! We aim to enable interdisciplinary discussions from areas like multi-agent systems, visual relational reasoning, etc.

Please consider joining and sharing your work at the workshop!

Interested in Multi-Agent Interaction and Relational Reasoning research?

Submit to the ICCV workshop by July 19! (or Aug 30 without proceedings)

Organizers:

@JiachenLi8

,

@xinshuoweng

, Chiho Choi,

@YunzhuLiYZ

,

@ParthKothari17

,

@AlexAlahi

0

2

6

0

0

7

The guest lecture was for

@GuanyaShi

's course on Robot Learning: .

I summarized our work over the years on "Learning Structured World Models From and For Physical Interactions":

Amazing group of students and enjoyable questions!

0

0

7

Impressive policy rollout on various dexterous tasks, powered by scalable, in-the-wild hand capture! Incredible engineering & learning techniques put together! Congrats to

@chenwang_j

and

@HaochenShi74

.

(What's stopping the robot from continuing to pour water into the teapot?)

1

0

6

We have just released the code and the video for our

#NeurIPS2020

paper on "Causal Discovery in Physical Systems from Videos".

Project:

Code:

Video:

Try it out and let us know if you have any questions!

0

2

6

Today @

#RSS2022

,

@HaochenShi74

will present our work on learning particle dynamics for manipulating Play-Doh!

The model is learned directly from real data consisting of just **10 minutes** of random interactions. Coupled with MPC, we manipulate Play-Doh into letter-like shapes!

On Tuesday at

#RSS22

, I will present our paper RoboCraft! The presentation will be in Arledge Lerner Hall between 10:35-10:40am local time! Our poster will be at Arledge Lerner Hall between 4:30-6:00pm. Please come and checkout! (1/n)

2

4

31

0

0

6

Please also check out a nice work by

@recursus

on "Learning Physical Graph Representations from

Visual Scenes" that takes a step further by removing the supervision of scene structures: . 8/8

1

0

5

This is joint work with my amazing collaborators Shuang Li (

@ShuangL13799063

), Vincent Sitzmann (

@vincesitzmann

), Pulkit Agrawal (

@pulkitology

), Antonio Torralba. (2/7)

1

0

5

We then extend the model developed by

@thomaskipf

et al. () to discover the causal structure between the keypoints and identify both the discrete and continuous hidden confounding variables on the directed edges. 5/7

1

1

5

Thank you for the note

@alihkw_

!! We are both inspired by and love your awesome series of work on ConceptFusion and ConceptGraphs.

We could debate on which abstraction level to set the graph, but action-conditioned scene graphs might have to be **the** way to scale things up.

0

2

4

Interested in long-horizon deformable object manipulation? Check out our

#ICLR2022

paper on this problem by combining

(1) a differentiable physics simulator for short-term skill abstraction

(2) a planner to produce intermediate goals and assemble the skills for long-horizon tasks

Robotic manipulation of deformable objects like dough requires long-horizon reasoning over the use of different tools. Our method DiffSkill utilizes a differentiable simulator to learn and compose skills for these challenging tasks.

#ICLR2022

Website:

2

16

94

0

0

4

For more in-depth information, check out

@wenlong_huang

's thread and visit our project page! (4/4)

0

0

4

Here is

@animesh_garg

's excellent summary of our work on "Causal Discovery in Physical Systems from Videos"!

Learning Causal Graphs that capture Physical Systems has high potential yet challenging!

Check out End-to-End Causal Discovery from videos

Site:

Paper:

w\

@YunzhuLiYZ

@AnimaAnandkumar

, A.Torralba, D. Fox

2

32

139

0

0

4

Nov 7, 8:30-9:30 am (Oral), 2:45-3:30 pm (Poster)

Predicting Object Interactions with Behavior Primitives: An Application in Stowing Tasks

- Project page:

- Finalist for Best Paper/Best Student Paper Awards

- Led by

@HaonanChen_

1

0

4

@AvivTamar1

@AnimaAnandkumar

@animesh_garg

Thank you, Aviv! Your work's results look fantastic!

Combining DLPs and causal dynamics prediction will move the frontier forward in cases where the causal/relational mechanisms between components are not directly observable from still images. Super exciting future direction! 🙌

0

0

4

This project naturally extends our CoRL-21 Oral paper () by explicitly accounting for the compositionality/structure of the underlying system, which we show allows much better generalization outside the training distribution. (3/4)

1

1

3

@drfeifei

@Flatironbooks

@melindagates

Congratulations! I can't wait to get a copy and read this book!

0

0

3

We have two papers on learning and reasoning about dynamical systems accepted to

#ICLR2020

as spotlight presentations!

Come and join the live sessions on Wednesday (April 29th, 13:00-15:00 EDT, and 16:00-18:00 EDT)!

(1/3)

1

0

3

@anand_bhattad

@akanazawa

@sarameghanbeery

@unnatjain2010

@CVPR

@IllinoisCS

@berkeley_ai

@MIT

@TTIC_Connect

@CMU_Robotics

@MetaAI

Fantastic workshop!! 🎉

0

0

3

Check out this exciting work from

@NvidiaAI

that builds a vision-based teleoperation system for dexterous manipulation!

0

0

2

@somuSan_

This is mainly for PhD positions. The lab will have internship positions available when it is formally started. Please stay tuned!

1

0

2

More examples of our robot writing “Hello” in Japanese!

Kudos to

@YXWangBot

for showcasing the power of our particle-based graph dynamics model --- a single model, trained solely in simulation, accomplishes all demonstrated tasks (e.g., gather, redistribute, sort). (3/3)

0

0

2

Nov 8, 11:00-12:00 pm (Oral), 5:15-6:00 pm (Poster)

VoxPoser: Composable 3D Value Maps for Robotic Manipulation with Language Models

- Project page:

- Led by

@wenlong_huang

0

0

2

Please consider submitting a poster! We are bringing together simulation researchers that develop deformable-object simulators, with roboticists that leverage these simulators for real-world robotics applications.

0

0

2

Our recent work on Learning Particle Dynamics for Robot Manipulation () is featured by MIT News (). Also, check out our initial attempt on extending to partially observable scenarios ().

@jiajunwu_cs

,

@junyanz89

1

0

2

This thread shows the value of combining particle representation and GNNs for (1) dynamics modeling of diverse objects, and (2) application in long-horizon tasks requiring extensive tool use.

Kudos to all my collaborators, especially

@HaochenShi74

for his phenomenal work!! (7/7)

0

0

2