Jun-Yan Zhu

@junyanz89

Followers: 12K · Following: 1K · Media: 23 · Statuses: 352

Assistant Professor at Generative Intelligence Lab @CMU_Robotics @CarnegieMellon. Understanding and creating pixels.

Pittsburgh, PA

Joined April 2017

Check out our new unpaired learning method for instruction-following image editing models.

🚀 New preprint! We present NP-Edit, a framework for training an image editing diffusion model without paired supervision. We use differentiable feedback from Vision-Language Models (VLMs) combined with distribution-matching loss (DMD) to learn editing directly. webpage:

Leaving Meta and PyTorch

I'm stepping down from PyTorch and leaving Meta on November 17th. tl;dr: Didn't want to be doing PyTorch forever, seemed like the perfect time to transition right after I got back from a long leave and the project built itself around me. Eleven years

We present MotionStream — real-time, long-duration video generation that you can interactively control just by dragging your mouse. All videos here are raw, real-time screen captures without any post-processing. Model runs on a single H100 at 29 FPS and 0.4s latency.

@AvaLovelace0 @RuixuanLiu_ @RamananDeva @ChangliuL @junyanz89 If you miss the talk or want to dive deeper, please also check out our poster and our interview!
Poster Session Details:
- Location: Exhibit Hall I #306
- Time: Wed 22 Oct, 2:45 p.m. to 4:45 p.m. HST
Read the interview in ICCV Daily: https://t.co/K791u7scoo

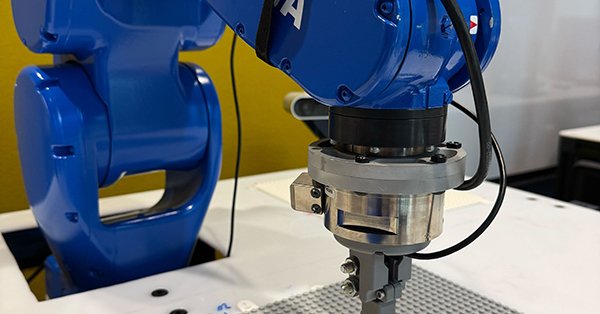

🏆 Excited to share that BrickGPT (https://t.co/yvi8cyrArX) received the ICCV Best Paper Award! Our first author, @AvaLovelace0, will present it from 1:30 to 1:45 p.m. today in Exhibit Hall III. Huge thanks to all the co-authors: @RuixuanLiu_ @RamananDeva @ChangliuL @junyanz89

I will be presenting this work in today's afternoon poster session!! #ICCV2025
📅 Oct 22 | 📍 Exhibit Hall 1 #157 | ⏲️ 2:45 pm to 4:45 pm HST
GitHub: https://t.co/yzEy0XyqxF
Please drop by the poster for any discussion 😃

github.com

SynCD: Generating Multi-Image Synthetic Data for Text-to-Image Customization (ICCV 2025) - nupurkmr9/syncd

Can we generate a training dataset of the same object in different contexts for customization? Check out our work SynCD, which uses Objaverse assets and shared attention in text-to-image models to do exactly that. https://t.co/vHa2RTLHoX w/ @xi_yin_ @junyanz89 @imisra_ @smnh_azadi

#ICCV2025 best paper award & best paper honorable mention! RI researchers collaborated with @CSDatCMU and @CMU_ECE to bring some incredible work to the conference this year👏🧠🔥 Check out the SCS news post on BrickGPT, which brought home best paper!

cs.cmu.edu

SCS researchers have developed a tool that uses text prompts to help people — and even robots — bring ideas to life with Lego bricks.

I'll be joining the faculty @JohnsHopkins late next year as a tenure-track assistant professor in @JHUCompSci. Looking for PhD students to join me in tackling fun problems in robot manipulation, learning from human data, understanding+predicting physical interactions, and beyond!

Really excited to be presenting this work as part of my talk at the Higen workshop (https://t.co/QZPZ1yqUgu) at 2 pm in Room 309 today, Oct 19 😄 Please check it out if interested! #ICCV2025

Few-step diffusion isn’t only an acceleration trick. The big deal is that it can generate samples during training, like a GAN generator—so you can apply any differentiable loss. We use this to train an image editor without paired data, with a VLM-based loss, without RL.

More of what my colleagues and I have been working on in AI for fusion is public now

We’re announcing a research collaboration with @CFS_energy, one of the world’s leading nuclear fusion companies. Together, we’re helping speed up the development of clean, safe, limitless fusion power with AI. ⚛️

Over the past year, my lab has been working on fleshing out theory/applications of the Platonic Representation Hypothesis. Today I want to share two new works on this topic: Eliciting higher alignment: https://t.co/KY4fjNeCBd Unpaired rep learning: https://t.co/vJTMoyJj5J 1/9

I’m hiring PhD students for 2026 @TTIC_Connect. More details here:

Launching our first real product! Along with agent-based image editing workflows, our method natively supports region editing. https://t.co/CNqWna0yRN

We added 🤗 demos for our group inference on FLUX.1 Schnell and FLUX.1 Kontext. Thanks @multimodalart for helping set this up so quickly! FLUX Schnell: https://t.co/Dgto1pR5ah FLUX Kontext: https://t.co/b89JFpJEBx GitHub:

github.com

Scalable group inference for generating high quality and diverse images with diffusion models. - GaParmar/group-inference

When exploring ideas with generative models, you want a range of possibilities. Instead, you often disappointingly get a gallery of near-duplicates. The culprit is standard I.I.D. sampling. We introduce a new inference method to generate high-quality and varied outputs. 1/n

🔥 Announcing the Creative Visual Content Generation, Editing & Understanding Workshop at #SIGGRAPH2025! For the first time in SIGGRAPH history, this pioneering workshop is officially part of the Technical Program—and we’re celebrating with an exceptional lineup! ✨ Join us as
