Jiajun Wu

@jiajunwu_cs

Followers: 11K

Following: 288

Media: 0

Statuses: 171

Assistant Professor at @Stanford CS

Stanford, CA

Joined March 2009

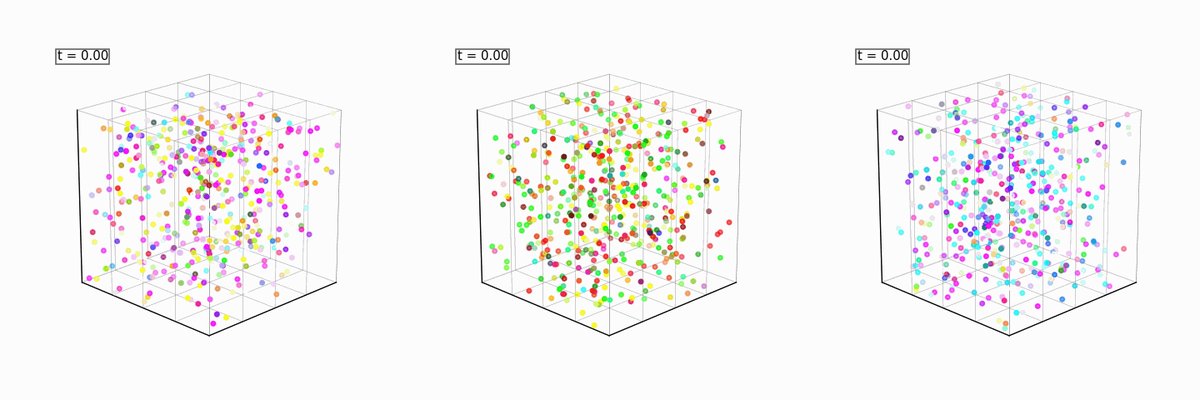

🌟Got multiple expert models and want them to steer your image/video generation? We’ve re-implemented the Product of Experts for Visual Generation paper on a toy example, and broken it down step by step in our new blog post! Includes: - GitHub repo: Annealed Importance

2

10

21

Can VLMs build Spatial Mental Models like humans? Reasoning from limited views? Reasoning from partial observations? Reasoning about unseen objects behind furniture / beyond current view? Check out MindCube! 🌐 https://t.co/ER5UX284Vo 📰 https://t.co/hGZerOb0TM

5

60

281

FlowMo, our paper on diffusion autoencoders for image tokenization, has been accepted to #ICCV2025! See you in Hawaii! 🏄‍♂️

Modern generative models of images and videos rely on tokenizers. Can we build a state-of-the-art discrete image tokenizer with a diffusion autoencoder? Yes! I’m excited to share FlowMo, with @kylehkhsu, @jcjohnss, @drfeifei, @jiajunwu_cs. A thread 🧵:

1

15

92

#ICCV2025 🤩3D world generation is cool, but it is cooler to play with the worlds using 3D actions 👆💨, and see what happens! — Introducing *WonderPlay*: Now you can create dynamic 3D scenes that respond to your 3D actions from a single image! Web: https://t.co/uFOzA8t0P8 🧵1/7

6

40

182

🤖 Household robots are becoming physically viable. But interacting with people in the home requires handling unseen, unconstrained, dynamic preferences, not just a complex physical domain. We introduce ROSETTA: a method to generate reward for such preferences cheaply. 🧵⬇️

4

33

134

(1/n) Time to unify your favorite visual generative models, VLMs, and simulators for controllable visual generation—Introducing a Product of Experts (PoE) framework for inference-time knowledge composition from heterogeneous models.

5

65

303

🚀 Excited to announce our CVPR 2025 Workshop: 3D Digital Twin: Progress, Challenges, and Future Directions 🗓 June 12, 2025 · 9:00 AM–5:00 PM 📢 Incredible lineup: @rapideRobot, Andrea Vedaldi @Oxford_VGG, @richardzhangsfu, @QianqianWang5, Dr. Xiaoshuai Zhang @Hillbot_AI,

2

22

57

Neuro-symbolic concepts (object, action, relation) represented by a hybrid of neural nets & symbolic programs. Composable, grounded, and typed, agents recombine them to solve tasks like robotic manipulation. J. Tenenbaum @maojiayuan @jiajunwu_cs @MIT

https://t.co/2r0cEvvqZx

1

9

59

💫 Animating 4D objects is complex: traditional methods rely on handcrafted, category-specific rigging representations. 💡 What if we could learn unified, category-agnostic, and scalable 4D motion representations — from raw, unlabeled data? 🚀 Introducing CANOR at #CVPR2025: a

2

22

96

How to scale visual affordance learning that is fine-grained, task-conditioned, works in-the-wild, in dynamic envs? Introducing Unsupervised Affordance Distillation (UAD): distills affordances from off-the-shelf foundation models, *all without manual labels*. Very excited this

8

109

438

We'll be presenting Deep Schema Grounding at @iclr_conf 🇸🇬 on Thursday (session 1 #98). Come chat about abstract visual concepts, structured decomposition, & what makes a maze a maze! & test your models on our challenging Visual Abstractions Benchmark:

What makes a maze look like a maze? Humans can reason about infinitely many instantiations of mazes—made of candy canes, sticks, icing, yarn, etc. But VLMs often struggle to make sense of such visual abstractions. We improve VLMs' ability to interpret these abstract concepts.

1

3

39

Stanford AI Lab (SAIL) is excited to announce new SAIL Postdoctoral Fellowships! We are looking for outstanding candidates excited to advance the frontiers of AI with our professors and vibrant community. Applications received by the end of April 30 will receive full

6

83

208

🔥Spatial intelligence requires world generation, and now we have the first comprehensive evaluation benchmark📏 for it! Introducing WorldScore: Unifying evaluation for 3D, 4D, and video models on world generation! 🧵1/7 Web: https://t.co/WnKPf8uarw arxiv: https://t.co/EPLM1xTLwP

6

91

244

New paper on self-supervised optical flow and occlusion estimation from video foundation models. @sstj389 @jiajunwu_cs @SeKim1112 @Rahul_Venkatesh

https://t.co/5j4zjLIxNZ

3

18

111

Introducing T* and LV-Haystack -- targeting needle-in-the-haystack for long videos! 🤗 LV-Haystack annotated 400+ hours of videos and 15,000+ samples. 🧩 Lightweight plugin for any proprietary and open-source VLMs: T* boosting LLaVA-OV-72B [56→62%] and GPT-4o [50→53%] within

4

19

89

🤩 FluidNexus has been selected as a CVPR'25 *Oral* paper 🎺! See you in Nashville!

🔥Want to capture 3D dancing fluids♨️🌫️🌪️💦? No specialized equipment, just one video! Introducing FluidNexus: Now you only need one camera to reconstruct 3D fluid dynamics and predict future evolution! 🧵1/4 Web: https://t.co/DsxWBo8pgX Arxiv: https://t.co/U1O8qpXycH

0

4

45

Modern generative models of images and videos rely on tokenizers. Can we build a state-of-the-art discrete image tokenizer with a diffusion autoencoder? Yes! I’m excited to share FlowMo, with @kylehkhsu, @jcjohnss, @drfeifei, @jiajunwu_cs. A thread 🧵:

13

140

599

Spatial reasoning is a major challenge for today's foundation models, even in simple tasks like arranging objects in 3D space. #CVPR2025 Introducing LayoutVLM, a differentiable optimization framework that uses a VLM to spatially reason about diverse scene layouts from unlabeled

4

60

240

#3DV2025 is happening in 10 days in Singapore, but we can't wait to give some spoilers for the award!! 8 papers were selected as award candidates, congrats 🥳. The final awards will be announced during the main conference. https://t.co/e8e546AH7B

2

6

47