Anand Bhattad

@anand_bhattad

Followers: 3K

Following: 9K

Media: 206

Statuses: 1K

Assistant Professor @JHUCompSci, @HopkinsDSAI | Past: RAP @TTIC_Connect | PhD @SiebelSchool | Research: Exploring Knowledge in Generative Models

Baltimore, MD

Joined June 2011

[1/3] Two balls, a basketball and a tennis ball, are dropped from the same height, side by side. Assuming no air resistance, which hits the ground first? The answer is neither: they strike the ground simultaneously. Galileo demonstrated this principle over 400 years ago, and it

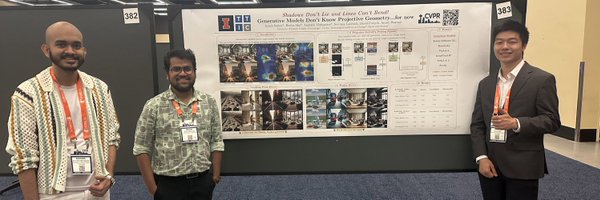

Recent results suggest generative models grasp 3D scene properties. Yet, our study shows they falter in translating this "understanding" into accurate geometry. 📢📢📢 Excited to share our work analyzing projective geometry in generated images! 🎉🎉🎉 https://t.co/cpdedpxOIe 1/4

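The [1/3] claim that both balls land together follows from constant-acceleration kinematics. With no air resistance, each ball accelerates at g regardless of its mass, so a drop from height h takes t = √(2h/g). Below is a minimal Python sketch of that arithmetic. The 2 m height and the ball masses are illustrative assumptions, not values from the post.

```python
import math

G = 9.81  # standard gravity near Earth's surface, in m/s^2


def fall_time(height_m: float, mass_kg: float, g: float = G) -> float:
    """Seconds for an object released from rest to fall height_m, ignoring air resistance.

    Newton's second law gives a = F / m = (m * g) / m = g, so h = 0.5 * g * t**2
    and t = sqrt(2 * h / g). mass_kg is accepted only to show that it cancels.
    """
    del mass_kg  # deliberately unused: the mass cancels out of the acceleration
    return math.sqrt(2.0 * height_m / g)


# Illustrative assumptions: a 2 m drop, a ~0.62 kg basketball, a ~0.058 kg tennis ball.
t_basketball = fall_time(2.0, mass_kg=0.62)
t_tennis = fall_time(2.0, mass_kg=0.058)
print(f"basketball: {t_basketball:.3f} s, tennis ball: {t_tennis:.3f} s")
```

Both calls return the same time, about 0.64 s, because mass never enters the formula.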
🚀 I’m excited to share my final work as a PhD student: MeshSplatting: Differentiable Rendering with Opaque Meshes
- arXiv: https://t.co/TRSiULSsTH
- Code: https://t.co/9A4fihfnMY
- Project page: https://t.co/vQ6oSpt2rb

Cancer sucks. Read this moving tribute. This is also a good reminder that many researchers you talk to are silently battling epic struggles unrelated to their work. Here’s to hoping research’s leaderboard culture makes room for empathy and kindness.

We lost someone extraordinary. Ethan Neumann, a PhD candidate in my lab, worked tirelessly to study #fibrolamellar carcinoma, the cancer that would ultimately claim his life. On the day before he died, he officially became Dr. Neumann: https://t.co/qKvfxi3v3h

A great paper and a great lecture from Alyosha at the poster session!

For those who missed our #NeurIPS2025 Visual Jenga poster presentation, I managed to record two iterations of our master presenter, Prof. Efros 😉 It's infectious to see Alyosha's energy and his excitement about research -- very inspiring. Enjoy! The full poster is in comments.

The School of Computer Science at EPFL has multiple open faculty positions. I'd love to have more colleagues broadly in AI, machine learning, vision, multimodal learning, etc. I think we have a good positioning and a great opportunity ahead. Feel free to catch me at #NeurIPS if

If you're ever feeling down, watch this! Much better than therapy!

We need more senior researchers camping out at their posters like this. Managed to catch 10 minutes of Alyosha turning @anand_bhattad’s poster into a pop-up mini lecture. Extra spark after he spotted @jathushan. Other folks in the audience: @HaoLi81 @konpatp @GurushaJuneja.

Our groundbreaking results on ARC-AGI have now been officially verified!🥇

Poetiq has officially shattered the ARC-AGI-2 SOTA 🚀 @arcprize has officially verified our results:
- 54% Accuracy – first to break the 50% barrier!
- $30.57 / problem – less than half the cost of the previous best!
We are now #1 on the leaderboard for ARC-AGI-2!

A better view and quality: https://t.co/czBeIJEqc5

Alyosha’s theatre! 😎

[1/8] Is scene understanding solved? We can label pixels and detect objects with high accuracy. But does that mean we truly understand scenes? Super excited to share our new paper and a new task in computer vision: Visual Jenga! 📄 https://t.co/28EbAEAJmg

Very excited to get this out. A radiance mesh is a trimesh with a NeRF under the hood. After optimization, the NeRF can be collapsed into the mesh, which unlocks fast rendering via hardware rasterization and easy editing/simulation using standard tooling/physics. Try it.

Introducing the new fastest and most flexible view synthesis method: Radiance Meshes. RMs are volumetric triangle meshes that can be thrown into any game engine, rendered at ~200 FPS at 1440p on an RTX 4090, and edited using conventional tools. Here's a demo running on my desktop:

Come join my group at Johns Hopkins! I'm recruiting CS PhD students for Fall'26 (deadline: Dec 15) who are interested in safe/reliable AI in healthcare. See my website (link in reply) for more info. I'm also headed to #NeurIPS, and happy to chat with prospective students!

Alyosha Efros, @konpatp and I will be presenting our Visual Jenga paper tomorrow (Thursday) at 11 am! Poster #5504. Come say hi and see how we discover object dependencies via counterfactual inpainting! #NeurIPS2025

📢 Phillip Isola @phillip_isola, Saining Xie @sainingxie, and I @zamir_ar are hiring joint postdocs in machine learning with a focus on multimodal learning. What brings us together is our shared interest in multimodality and our intention to move the boundaries of current

I'm recruiting multiple PhD students this cycle to join me at Harvard University and the Kempner Institute! My interests span vision and intelligence, including 3D/4D, active perception, memory, representation learning, and anything you're excited to explore! Deadline: Dec 15th.

[3/3] We also propose a simple fix: fine-tuning generators with LoRA using just 100 single-ball drop videos. This approach not only alleviates the widespread under-acceleration we observe, but also generalizes to two-ball drops and inclined planes -- demonstrating that targeted

[2/3] Testing gravity in generated videos is non-trivial:
- Is the camera close or far? (scale ambiguity)
- Is playback in slow motion? (frame rate ambiguity)
Existing approaches rely on rough heuristics, optimize for absolute positional accuracy in a calibrated setup, or

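The two ambiguities in [2/3] can be made concrete. Let s be the image scale in pixels per meter and f the number of frames per second of scene time. A ball falling under gravity g then moves with an apparent acceleration of g·s/f² pixels per frame². The pixels alone fix only that ratio, so different guesses about camera distance or slow motion imply different values of g. The Python sketch below illustrates the problem under those assumptions. The function names and numbers are hypothetical, and this is not the authors' method, which the truncated post does not spell out.

```python
def pixel_trajectory(g, px_per_m, fps, n_frames):
    """Downward pixel displacement, frame by frame, of an object released from rest."""
    return [0.5 * g * px_per_m * (n / fps) ** 2 for n in range(n_frames)]


def pixel_acceleration(ys):
    """Average second difference of the trajectory, i.e. acceleration in px/frame^2."""
    diffs = [ys[i + 1] - 2 * ys[i] + ys[i - 1] for i in range(1, len(ys) - 1)]
    return sum(diffs) / len(diffs)


def implied_g(accel_px, px_per_m, fps):
    """Convert px/frame^2 to m/s^2 under an assumed scale and scene frame rate."""
    return accel_px * fps ** 2 / px_per_m


# One "observed" clip: true g, 200 px per meter, 30 frames per second of scene time.
observed = pixel_trajectory(g=9.81, px_per_m=200.0, fps=30.0, n_frames=20)
a_px = pixel_acceleration(observed)

# The same pixels under three readings of the scene (hypothetical values).
hypotheses = {
    "as filmed": (200.0, 30.0),
    "camera twice as close": (400.0, 30.0),
    "2x slow motion": (200.0, 60.0),  # each frame spans 1/60 s of scene time
}
for label, (px_per_m, fps) in hypotheses.items():
    print(f"{label}: implied g = {implied_g(a_px, px_per_m, fps):.2f} m/s^2")
```

Only the ratio s/f² is pinned down by the pixels, which is why a pixel-space fit cannot by itself say whether a generated clip under-accelerates.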