Nora Belrose

@norabelrose

Followers

8,081

Following

125

Media

422

Statuses

8,396

Working toward a free and fair future powered by friendly AI. Head of interpretability research at @AiEleuther, but tweets are my own views, not Eleuther’s.

Sydney, New South Wales

Joined April 2016


Pinned Tweet

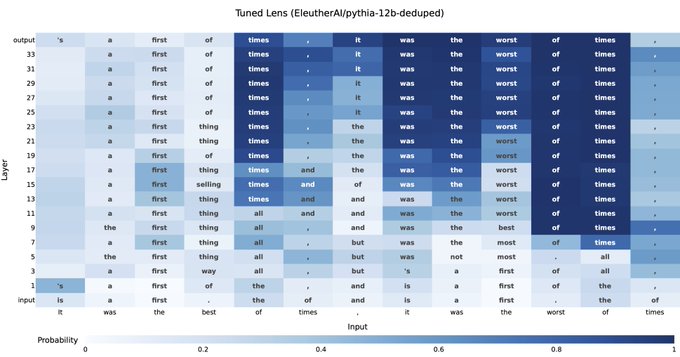

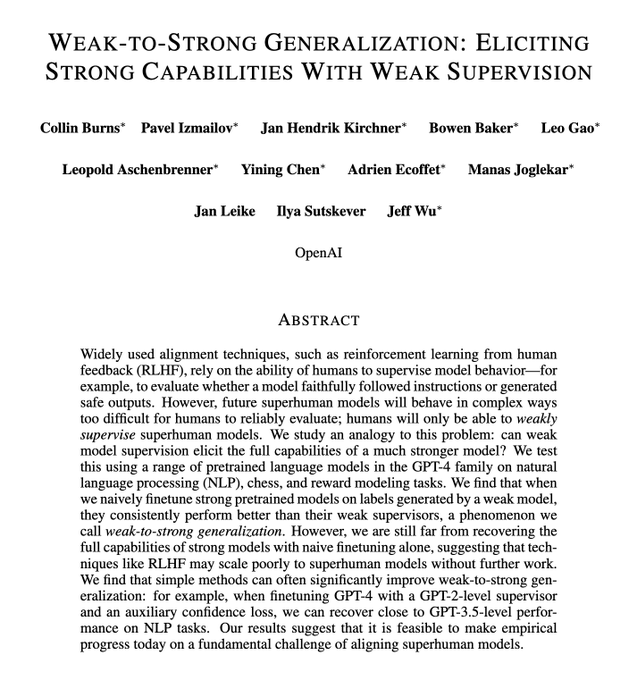

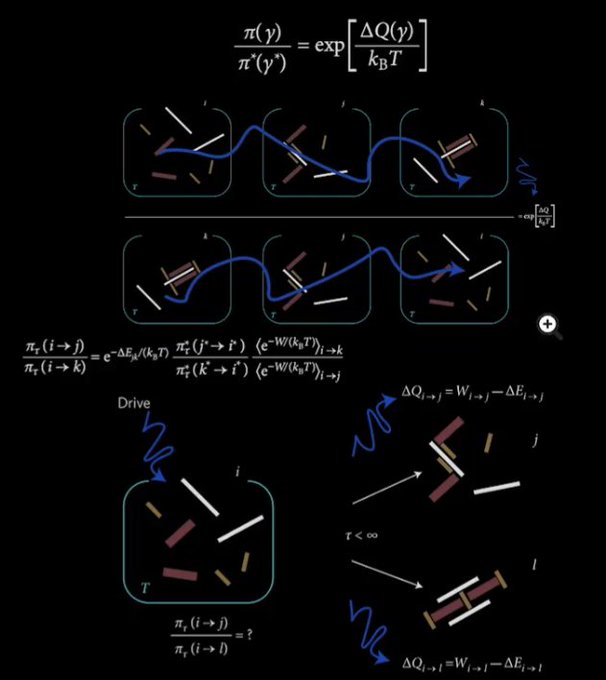

Happy to announce that our paper has been accepted to @icmlconf 2024!

1

1

70

@primalpoly

The terrorism argument against open source AI also applies to anything that increases the effective intelligence of humans: the internet, public education, nutrition, etc.

It's a fully general argument against human empowerment.

24

85

527

I don’t really care what the current law on this is, but we should be working to destroy copyright as thoroughly as possible so I am on OpenAI’s side in this case.

🧵 The historic NYT v. @OpenAI lawsuit filed this morning, as broken down by me, an IP and AI lawyer, general counsel, and longtime tech person and enthusiast.

Tl;dr - It's the best case yet alleging that generative AI is copyright infringement. Thread. 👇

342

5K

18K

368

68

570

This is a misunderstanding of what @ylecun is saying. He thinks generative pretraining is a bad objective for AGI. Humans can't and don't need to make videos like Sora. Our brains predict in latent space, not in pixel space.

34

29

403

No one knows what "truly understanding" a neural network model would even mean.

I'm an interpretability researcher, I'm all in favor of trying to understand models better. But "true/complete" understanding is a red herring.

33

19

366

@FiftySells

“As a highly militaristic kingdom constantly organised for warfare, it captured children, women, and men during wars and raids against neighboring societies, and sold them into the Atlantic slave trade in exchange for European goods…” damn I had never heard of this

8

15

240

My Interpretability research team at @AiEleuther is hiring! If you're interested, please read our job posting and submit:

1. Your CV

2. Three interp papers you'd like to build on

3. Links to cool open source repos you've built

to contact@eleuther.ai

10

44

252

I predict with 60% confidence that some DPO variant will more or less replace RLHF within 6 months

9

13

250

Open sourcing AGI will guarantee a “universal high income” for all, largely independent of government policy.

There will ~always be an option to spin up a cheap AI and have it take care of you, either by trading in the market or by making food, shelter, etc. “off the grid”

Zuckerberg says Meta wants to build AGI and open source it, brings Meta's AI group FAIR closer to generative AI team; Meta will own 340K+ H100 GPUs by 2024 end (@alexeheath / The Verge)

14

55

281

74

24

235

After reading the paper and watching a couple videos on state space models, I am fairly bullish on Mamba.

Parallel scan for data-dependent selection is super clever. Tri Dao was behind Flash Attention and knows his stuff. Compressed states may be easier to interpret.

Quadratic attention has been indispensable for information-dense modalities such as language... until now.

Announcing Mamba: a new SSM arch. that has linear-time scaling, ultra long context, and most importantly--outperforms Transformers everywhere we've tried.

With @tri_dao 1/

53

422

2K

10

8

222

@balesni

If it's too easy to create bioweapons, open models won't increase risk, bc you could make them w/o AI

If it's really hard (e.g. requires special materials) open models won't help

Anti-open source arguments only work in a narrow Goldilocks zone of risk

19

26

214

Sebastien casually says "a trillion parameters" when talking about GPT-4. I'm honestly kind of surprised, I was moderately confident that GPT-4 was << 1T params. Given publicly known scaling laws that's an absurd amount of text (and images?)

23

5

202

It's striking how much the AI "safety" discourse has shifted from "AI will slaughter everyone" to vague concerns about disruption and "human obsolescence."

I empathize with the fear of the unknown. But we shouldn't try to shut down the whole future. Let's maximize its benefits.

38

16

201

Training models purely on synthetic data is an enormous win for safety & alignment. Instead of loading LLMs with web garbage, then trying to remove it with RLHF, you train only on “good” data.

And because it makes models more efficient, too, I expect it'll become standard.

16

26

187

Just as NYT shouldn’t stop OpenAI from using NYT content, OpenAI should also open source its models, giving up its monopoly on profiting from GPT.

I am consistent on the issue of intellectual property.

28

18

146

Cool stuff, we found a similar result back in December.

Kind of upset they didn't cite/link to us tbh.

6

11

178

Zuck's position is actually quite nuanced and thoughtful.

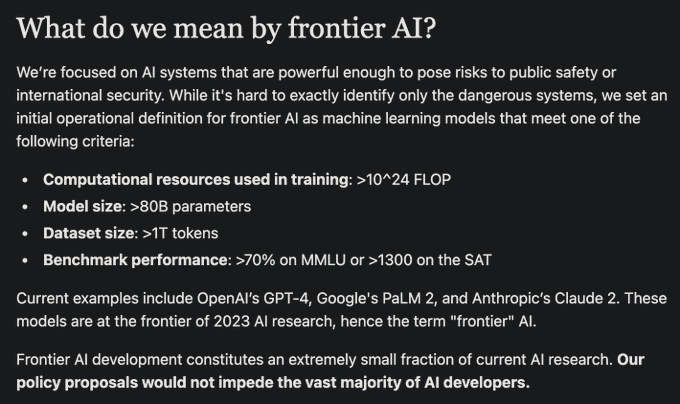

He says that if they discover destructive AI capabilities that we can't build defenses for, they won't open source it. But he also thinks we should err on the side of openness. I agree.

Dwarkesh calmly shreds Zuck's argument for open-sourcing AGI.

The flimsy wishful thinking behind Meta's reckless actions has been exposed.

Another incredible job by @dwarkesh_sp.

91

20

242

14

7

173

After hearing about @robinhanson’s grabby aliens resolution to the Fermi paradox, every other take on it just seems obviously wrong and not taking into account all the facts. I really hope the grabby aliens view becomes more widely known in the future.

14

3

165

Real-world example of an LLM locating a vulnerability in a web server. I expect language models to gradually improve at penetration testing like this, ultimately favoring cyber defense over cyber offense.

8

11

159

@EigenGender

@d_feldman

I guess mean, median, and mode each correspond to assuming a certain amount of mathematical structure. The mean assumes addition and scalar multiplication. Median just assumes an ordering. Mode only assumes you can count and distinguish elements.

3

8

149
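A minimal Python sketch of the mean/median/mode point above, added as an illustration. The function names and sample data are hypothetical and not from the tweet; each function uses only the operations the tweet says that statistic requires.

```python
from collections import Counter

def mode(items):
    # Needs only the ability to tell elements apart and count them
    # (implemented here via hashing).
    return Counter(items).most_common(1)[0][0]

def median_low(items):
    # Needs only an ordering: sort, then take the lower-middle element.
    ordered = sorted(items)
    return ordered[(len(ordered) - 1) // 2]

def mean(items):
    # Needs addition and scaling by 1/n.
    return sum(items) / len(items)

colors = ["red", "blue", "red"]    # distinguishable, no meaningful order
words = ["pear", "apple", "fig"]   # ordered (alphabetically), not addable
heights = [1.5, 2.0, 2.5]          # full arithmetic available
```

Calling `mode(colors)` and `median_low(words)` works, while `mean(words)` raises a `TypeError`, because strings do not support the arithmetic the mean needs.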

Increasingly I think the "masked shoggoth" thing is a very bad metaphor for LLMs. Some people (e.g. Eliezer) seem to be interpreting it as saying that all LLMs have an alien mesaoptimizer inside of them, which is really unjustified IMO

26

9

132

Now I'm at like 70-75% confidence DPO kills RLHF.

The only thing RLHF might have over DPO is data efficiency, but OpenAI and Anthropic have tons of pairwise comparison data bc they have deployed models so this probably doesn't matter

@_TechyBen

Yeah actually never mind, OpenAI is swimming in pairwise comparison data, this probably isn’t an issue

1

0

11

17

8

133

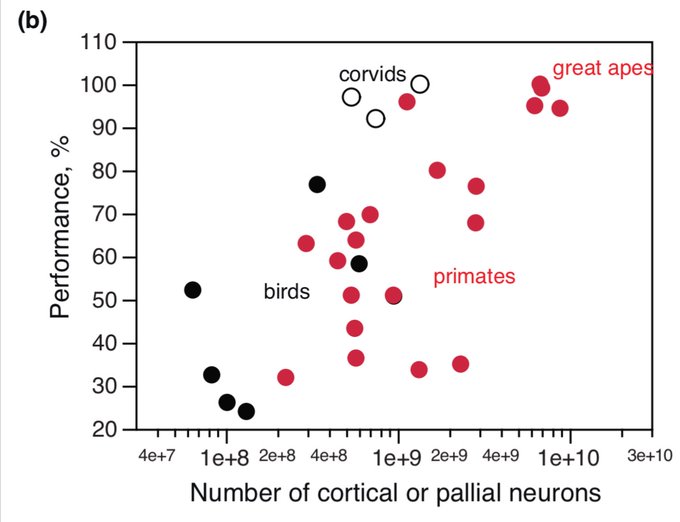

Literal scaling laws for biological neural nets!

This also pre-dates the Baidu neural scaling law paper by a few months

@norabelrose

There’s also at least some tasks on which performance scales linearly with log pallial neuron count.

5

4

49

5

6

120

For a long time I had assumed that photorealistic deepfakes would be produced using something like the Unreal Engine, with explicit physics simulation etc.

I should have trusted more in the power of deep learning.

12

1

122

More evidence for “finetuning doesn’t change the model much”

I strongly disagree with the anti-open source conclusions the authors are drawing from this though. We should simply accept that open models empower good and bad people alike, and this is okay bc good outnumbers bad.

10

8

116

I actually think Dan might be right that Meta's open sourcing is slowing progress down by making it harder to profit off AI— but I'm okay with that if true.

I'm not an accelerationist, and openness, equity, & safety matter a lot more to me than getting ASI as fast as possible.

10

9

114

@DeepMind

@alyssamvance

It honestly slightly scares me how quickly multimodal & “generalist” AI has advanced in the last year… definitely updating my views about when we’ll get AGI

4

5

105

We literally saw a small version of this phenomenon when fine tuning Mistral 7B with labels from a weaker model yesterday, glad to see someone already looked into it in depth 👀

2

5

112

I've been doing interpretability on Mamba the last couple months, and this is just false. Mamba is efficient to train precisely because its computation can be parallelized across time; ergo it is not doing more irreducibly serial computation steps than it has layers.

6

0

108
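A toy sketch of why a linear recurrence can be parallelized across time, as the tweet above claims for Mamba. It assumes a scalar recurrence h_t = a_t * h_{t-1} + b_t with h_0 = 0. This is an illustration of the general associative-scan idea, not Mamba's actual kernel, and all names here are hypothetical.

```python
def combine(left, right):
    # Compose two steps h -> a1*h + b1 and then h -> a2*h + b2
    # into a single step h -> (a1*a2)*h + (a2*b1 + b2).
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)

def sequential_final_state(steps, h0=0.0):
    # Reference: one serial step per timestep.
    h = h0
    for a, b in steps:
        h = a * h + b
    return h

def tree_final_state(steps, h0=0.0):
    # Pairwise combination; each level's combines are independent of
    # one another, so the serial depth is about log2(len(steps)).
    level = list(steps)
    while len(level) > 1:
        merged = [combine(level[i], level[i + 1])
                  for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            merged.append(level[-1])
        level = merged
    a, b = level[0]
    return a * h0 + b

steps = [(0.5, 1.0), (2.0, 3.0), (1.0, -1.0), (0.5, 4.0)]
```

Because `combine` is associative, both functions give the same final state for `steps`, but the tree version needs only a logarithmic number of dependent rounds.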

Alien shoggoths are about as likely to arise in neural networks as Boltzmann brains are to emerge from a thermal equilibrium.

There are “so many ways” the parameters / molecules could be arranged, but virtually all of them correspond to a simple behavior / macrostate.

10

7

106

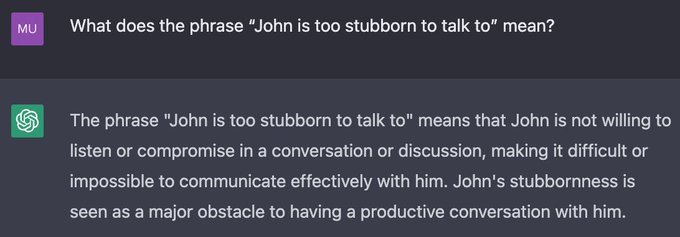

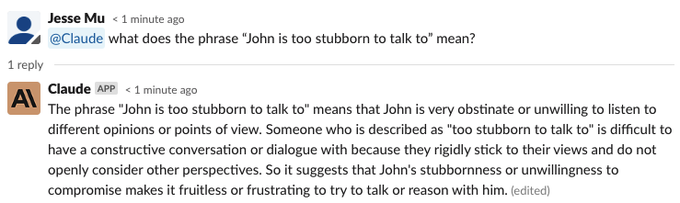

I studied Chomsky’s work as a linguistics undergrad. It’s all nonsense

6

4

101

The success of Tesla FSD v12 was a predictable consequence of switching to an end-to-end "predict what a human would do" objective.

@comma_ai has been doing it this way for a while, but with much less data. FSD could have been this good years ago if they had switched earlier.

8

5

98

WTF

“The board did *not* remove Sam over any specific disagreement on safety, their reasoning was completely different from that. I'm not crazy enough to take this job without board support for commercializing our awesome models.”

Today I got a call inviting me to consider a once-in-a-lifetime opportunity: to become the interim CEO of @OpenAI. After consulting with my family and reflecting on it for just a few hours, I accepted. I had recently resigned from my role as CEO of Twitch due to the birth of my…

1K

2K

15K

7

1

96

@ylecun

The usual doomer response to this is to just define “intelligence” as “power” roughly, and then the argument becomes a tautology and you beg the question that AI will be powerful enough *in the relevant sense* to take over.

12

1

93

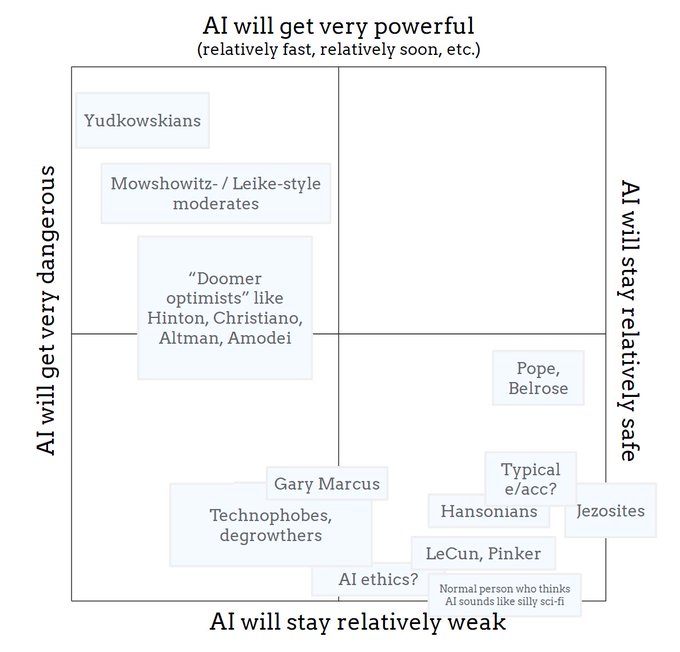

Put me in the upper right quadrant.

8

2

93

Recently @saprmarks and @tegmark found we can cause LLMs to treat false statements as true and vice versa, by adding a vector to the residual stream along the difference-in-means between true and false statements.

I suggest a theoretical explanation here

1

8

92
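A minimal sketch of the difference-in-means intervention described in the tweet above, using toy 2-D vectors in place of real residual-stream activations. The function names, the `alpha` scale, and the data are assumptions made for illustration; the tweet does not give the authors' actual code or settings.

```python
def mean_vector(rows):
    # Component-wise mean of a list of equal-length vectors.
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def diff_in_means(true_acts, false_acts):
    # Direction pointing from the "false" class mean to the "true" class mean.
    mu_true = mean_vector(true_acts)
    mu_false = mean_vector(false_acts)
    return [t - f for t, f in zip(mu_true, mu_false)]

def steer(activation, direction, alpha=1.0):
    # Add the (scaled) direction to a single activation vector.
    return [a + alpha * d for a, d in zip(activation, direction)]

# Toy activations: the first coordinate separates the two classes.
true_acts = [[1.0, 0.0], [3.0, 0.0]]
false_acts = [[-1.0, 0.0], [-3.0, 0.0]]

direction = diff_in_means(true_acts, false_acts)
steered = steer([-2.0, 0.5], direction)
```

In this toy setup, an activation sitting at the "false" mean along the first coordinate is moved to the "true" mean, while the second coordinate is left unchanged.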

For once I half-agree with Eliezer here. Merely calling existential risk "hypothetical" is not a good argument. Better to directly argue that AI apocalypse is <1% likely, as I do.

@AndrewYNg

I think when the near-term harm is massive numbers of young men and women dropping out of the human dating market, and the mid-term harm is the utter extermination of humanity, it makes sense to focus on policies motivated by preventing mid-term harm, if there's even a trade-off.

56

13

311

6

2

90

Actually I might know where they got >15T tokens.

@ethanCaballero was right, they used Whisper to transcribe all of YouTube

4

4

87

Instead of mandatory watermarking, which seems onerous and hard to enforce, why don't we just normalize the use of public key crypto and MACs to cryptographically verify the source of media? That would be a much more robust solution

16

7

86
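A small sketch of the MAC half of the suggestion above, using only Python's standard `hmac` and `hashlib` modules. The function names and the key are hypothetical. Note that a MAC relies on a shared secret, so it only proves origin to parties holding the key; a public-key signature, which anyone can verify, would need a third-party library and is not shown here.

```python
import hashlib
import hmac

def tag_media(key: bytes, media: bytes) -> str:
    # Compute an HMAC-SHA256 tag over the raw media bytes.
    return hmac.new(key, media, hashlib.sha256).hexdigest()

def verify_media(key: bytes, media: bytes, tag: str) -> bool:
    # Recompute the tag and compare in constant time.
    return hmac.compare_digest(tag_media(key, media), tag)

shared_key = b"example-key-not-for-real-use"  # placeholder secret
original = b"raw image bytes"
tag = tag_media(shared_key, original)
```

`verify_media(shared_key, original, tag)` succeeds, while any change to the media bytes or the key makes verification fail.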

How is this evidence we "don't understand" deep learning?

Almost the entire volume of the hyperparameter space is simple and smooth, you're zooming in on the one part at the boundary where it's a fractal and freaking out about it

3

2

87

Happy to announce that our paper on LEAst-squares Concept Erasure (LEACE) got accepted at @NeurIPSConf!

4

6

84

This post is probably rewriting history, but it misses the point even if it’s right. The point is that the orthogonality thesis is either Trivial, False, or Unintelligible.

If it’s just the claim that misaligned ASI is logically possible then it’s Trivial; if it’s a claim about…

18

2

85

Most analogies between RLHF and human learning & evolution greatly underestimate how good RLHF is.

You can't take a human, compute the gradient of a custom loss function wrt their synaptic connection weights, then directly update those weights. But that's exactly what RLHF does.

@Jsevillamol

@daniel_271828

It absolutely isn't. Educators have ~no idea how their lessons change the implicit loss function a student's brain ends up minimizing as a result of the classroom sensory experiences/actions, whereas RLHF lets you set that directly.

Grades on a test are not actually reward…

3

2

32

3

1

84

Most of the vaguely-plausible AI doom scenarios start with "the AI copies itself onto a lot of computers, creating a botnet." So we should probably look into how botnets are detected and destroyed.

8

3

83

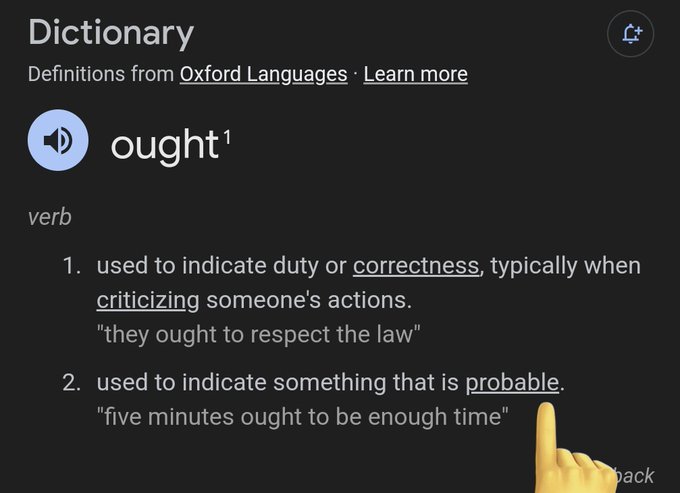

The "ought" in the is-ought distinction is not about probability, and I'm not sure how Beff could be so confused as to think otherwise.

10

0

85

The kind of AI deception that @dwarkesh_sp and @liron are worried about is less than 1% likely IMO, so I get why @ShaneLegg isn't actively planning for it.

That said, I'm training 1000s of NNs with different random seeds right now to empirically test how likely it actually is.

13

1

84

I guess I’m a “moderate” on this, I think e/acc is an insane death wish but I’m also pro-mind uploading and I think it would be okay if literally no biological humans exist in 10^6 years, as long as our culture continues in some form

15

2

83

@SciFi_Techno

I feel like 100 million jobs is not really that much. Like it's 3% of the global workforce. Seems plausible to me.

5

1

83