Zipeng Fu

@zipengfu

Followers: 11,772

Following: 1,204

Media: 36

Statuses: 272

Stanford AI & Robotics PhD @StanfordAILab | Creator of Mobile ALOHA, Robot Parkour | Past: Google DeepMind, CMU, UCLA

Palo Alto, CA

Joined February 2014



Last Seen Profiles

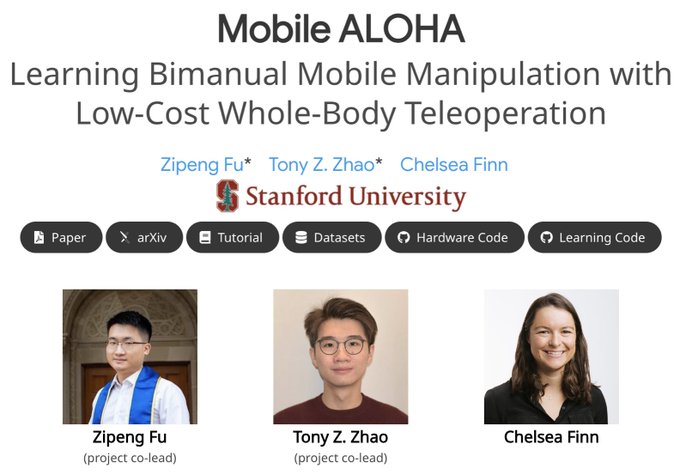

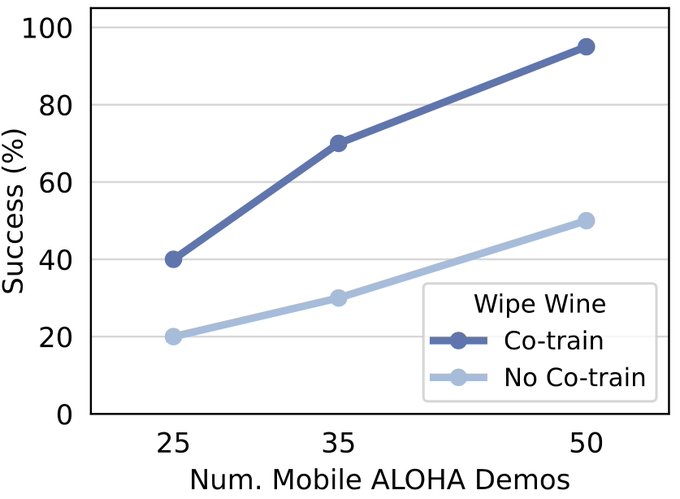

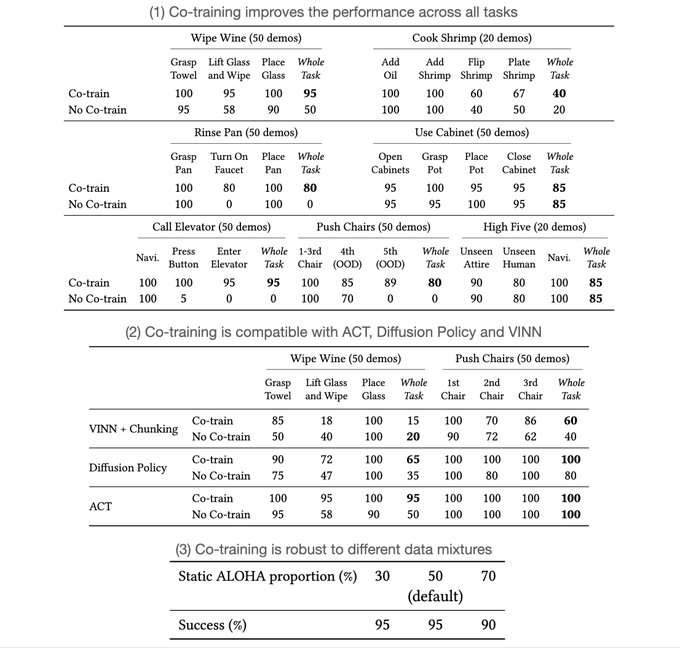

Introducing 𝐌𝐨𝐛𝐢𝐥𝐞 𝐀𝐋𝐎𝐇𝐀🏄 -- Learning!

With 50 demos, our robot can autonomously complete complex mobile manipulation tasks:

- cook and serve shrimp🦐

- call and take an elevator🛗

- store a 3 lb pot in a two-door cabinet

Open-sourced!

Co-led with @tonyzzhao and @chelseabfinn


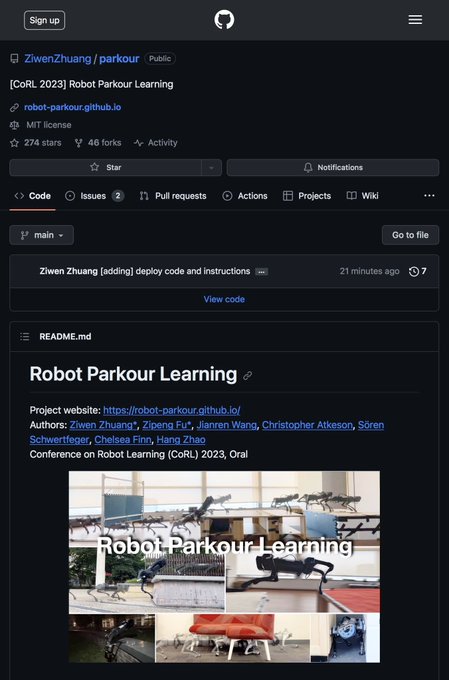

Introducing our #CoRL2023 (Oral) project:

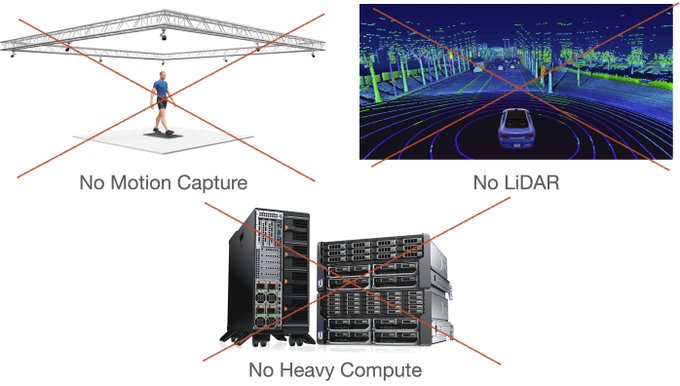

"Robot Parkour Learning"

Using vision, our robots can climb over high obstacles, leap over large gaps, crawl beneath low barriers, squeeze through thin slits, and run. All done by one neural network running onboard.

And it's open-source!


Mobile ALOHA 🏄 is coming soon!

Special thanks to @tonyzzhao for throwing random objects into the scene, and @chelseabfinn for the heavy pot (> 3 lbs)!

Stay tuned!


The robot is teleoperated in the video (for now!)

Check out @tonyzzhao's thread on how we designed the low-cost, open-source hardware and teleoperation system!

Introducing 𝐌𝐨𝐛𝐢𝐥𝐞 𝐀𝐋𝐎𝐇𝐀🏄 -- Hardware!

A low-cost, open-source, mobile manipulator.

One of the highest-effort projects of my past 5 years! Not possible without co-lead @zipengfu and @chelseabfinn.

In the end, what's better than cooking yourself a meal with the 🤖🧑‍🍳?


Super excited to announce that I will join @StanfordAILab for my PhD as a Stanford Graduate Fellow to keep exploring Robotics & AI. Deeply grateful to my advisor @pathak2206 and mentors Jitendra Malik & @ashishkr9311 for their huge support and guidance! Will miss @CarnegieMellon


Good times spending days and nights with @tonyzzhao at the brand-new Stanford Robotics Center and in @chelseabfinn's lab.

Much funnier with sound on!

Robots are not ready to take over the world yet!

@zipengfu and I just compiled a video of the dumbest mistakes 𝐌𝐨𝐛𝐢𝐥𝐞 𝐀𝐋𝐎𝐇𝐀🏄 made in autonomous mode 🤣

We are also planning to organize some live demos after taking a break. Stay tuned!


Want to dive deeper into the hardware of Mobile ALOHA?

Check out 𝐌𝐨𝐛𝐢𝐥𝐞 𝐀𝐋𝐎𝐇𝐀🏄 -- Hardware from co-lead @tonyzzhao!


Our video is clearly inspired by the iconic PR1 video, which showed that "the hardware is capable, what we need is better AI to make the robot smart enough to do things on its own" (quoting @pabbeel).

We present our first steps toward bridging the gap between teleop & autonomy.


Very glad to host @UnitreeRobotics at @StanfordAILab today!

RL controllers are now the industry's default choice.


@UnitreeRobotics B2 has the best quadruped controller I've ever seen, perfectly combining the robustness of RL controllers with the naturalness of model-based controllers. Just incredible...


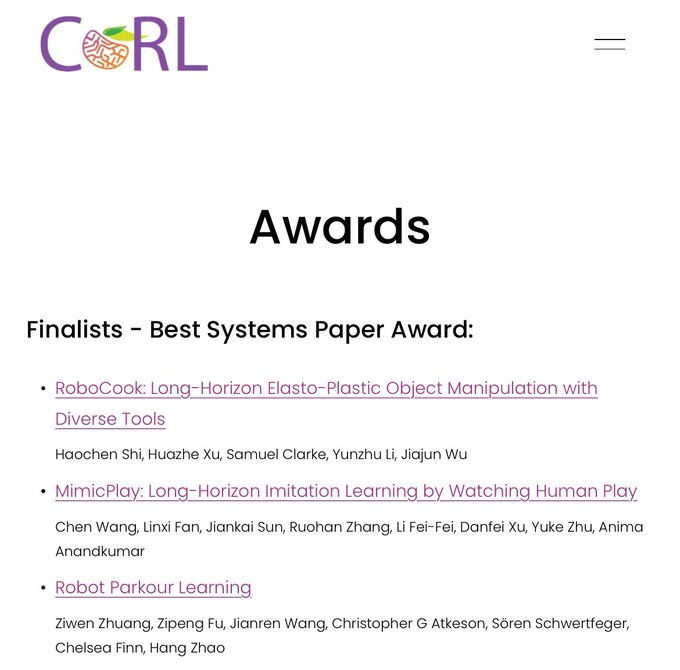

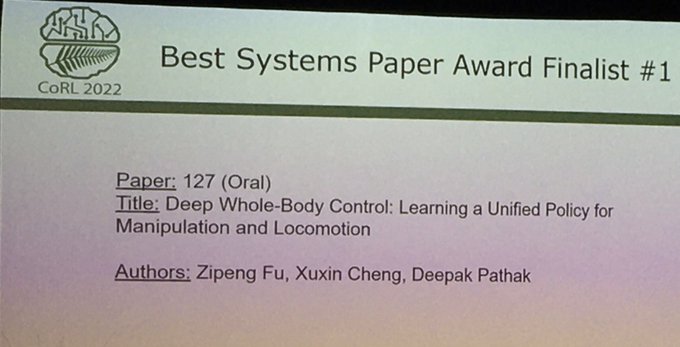

Check out our #CoRL2022 Oral Talk and live demo of "Deep Whole-Body Control" given by @pathak2206 (w/ @xuxin_cheng). Super excited it made it into the list of 3 finalists for the Best Systems Paper Award!

Project website:

Oral Talk:


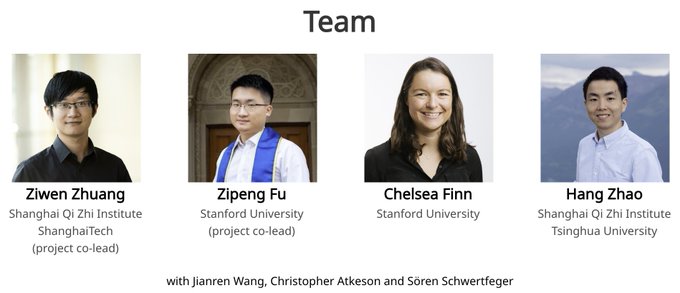

This project is led by @ziwenzhuang_leo and me.

Advised by @chelseabfinn and @zhaohang0124. (Please consider following @ziwenzhuang_leo for more cool robot demos in the future!)

Project website:

Code:

w/ @wang_jianren, Chris and Sören


biped locomotion is solved by @ZhongyuLi4 🙂


at this moment, the robot dog knows it's actually a humanoid in disguise

Evolution!!

🔥Exciting news 🤖

Our latest research by @HoellerDavid, @rdn_nikita, @2nisi in @SciRobotics unlocks new achievements: Unprecedented agility in quadrupedal robots, mastering locomotion, navigation, and perception through deep reinforcement learning! @NVIDIARobotics


@ziwenzhuang_leo @chelseabfinn @zhaohang0124 @wang_jianren @StanfordAILab CoRL 2023 Best Systems Paper Finalist!


who doesn't want a bag of trail mix?

congrats @lucy_x_shi

Introducing Yell At Your Robot (YAY Robot!) 🗣️ -- a fun collaboration between @Stanford and @UCBerkeley 🤖

We enable robots to improve on-the-fly from language corrections: robots rapidly adapt in real-time and continuously improve from human verbal feedback.

YAY Robot enables


Imagine combining this pipeline with Vision Pros. Maybe Apple has a huge edge in in-the-wild robot data collection?

Congrats @chenwang_j and the team!


Huge thanks to @anag004, @shikharbahl, and @ashishkr9311 for helping with the live demos. Video recording by @xuxin_cheng. Photo credit to @breadli428.


Join us at #CVPR2022 for robot demos

Attending my first in-person conference since the pandemic at #CVPR2022. We gave live demos of our robots during my talk at the Open-World Vision workshop.

The convention center mostly had dull flat ground, so we had to find scraps and be creative with them to build "difficult" terrains! 😅


Excited to share our work on agile locomotion! Inspired by biomechanics, we show that elegant gaits emerge from minimizing energy consumption, without any motion or imitation priors.


@scott_e_reed @tonyzzhao @chelseabfinn Thanks Scott! Maybe the compute and NN models were not ready 10 years ago. PR1 also showed amazing teleop results way back.


@Scobleizer @nfkmobile Around $8k, including all the accessories like the depth cam and the Jetson compute board.


Thanks Jim for the spotlight and great summary!!

More details at


Amazing home robot results from @shikharbahl!


Check out our #CVPR2022 paper at poster 124a now!

Today at #CVPR22, we present our paper on visual navigation with legged robots in the morning.

Our robot learns to walk (low-level) and to plan (high-level) a path to the goal, avoiding dynamic as well as "invisible" obstacles thanks to the coupling of vision with proprioception.


@allenzren @tonyzzhao @chelseabfinn Thanks Allen! We found two parallel-jaw grippers to be powerful, but maybe two dexterous hands are even better!


@thetjadams Yes, you can adapt the training pipeline to another quadrupedal robot by retraining with that robot's URDF.


This work was done jointly with @whshkan and @pathak2206.

A detailed explanation thread can be found here:

2/

An arm can increase the utility of legged robots. But due to high dimensionality, most prior methods decouple learning for legs & arm.

In #CoRL '22 (Oral), we present an end-to-end approach for *whole-body control* to get dynamic behaviors on the robot.


@pathak2206 @tonyzzhao @chelseabfinn

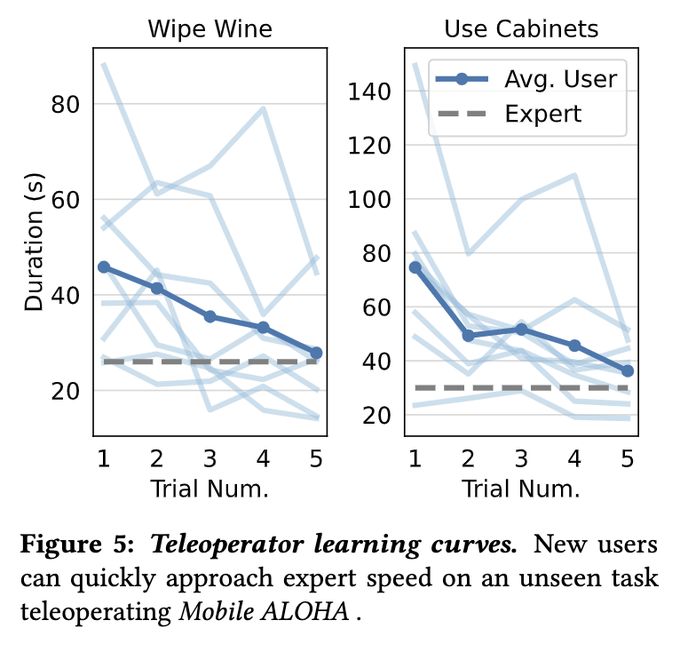

Turns out people with no prior experience with Mobile ALOHA can approach the teleop speed of @tonyzzhao and me after 5 trials of practice.


@viktor_m81 @UnitreeRobotics Thanks Viktor! In addition to Isaac Gym, it also uses an NVIDIA Jetson board for onboard network inference!


Apparently, my co-author & friend Xuxin Cheng changed his Twitter handle 😛. He can be found here: @xuxin_cheng


@pathak2206 @StanfordAILab @ashishkr9311 @CarnegieMellon Thanks Deepak!! I learned a lot from you! Super fortunate to be advised by you!


@Ransalu @charlesjavelona @tonyzzhao @chelseabfinn @StanfordAILab Thanks for your interest! We will release all the details shortly!


@antoniloq @ziwenzhuang_leo @chelseabfinn @zhaohang0124 @wang_jianren @StanfordAILab Thanks Antonio! Love your recent pursuit-evasion project!
