Zhongyu Li

@ZhongyuLi4

Followers: 1K · Following: 3K · Media: 18 · Statuses: 801

Assist. Prof@CUHK, PhD@UC Berkeley. Doing dynamic robotics + AI. Randomly post robot & cat things here.

Joined March 2021

Excited to share that I’ve recently joined the Chinese University of Hong Kong (CUHK) as an Assistant Professor in Mechanical and Automation Engineering! My research will continue to focus on embodied AI & humanoid robotics — legged locomotion, whole-body and dexterous

It turns out that VLAs learn to align human and robot behavior as we scale up pre-training with more robot data. In our new study at Physical Intelligence, we explored this "emergent" human-robot alignment and found that we could add human videos without any transfer learning!

Coming soon to mjlab and a long time in the making: RGB-D camera rendering! We can solve cube lifting with the YAM arm from 32×32 RGB frames in under 5 minutes of wall-clock time. Here's a clip showing emergent "search" behavior along with our upcoming viser visualization.

I've been working on deformable object manipulation since my PhD. It was a total nightmare years ago, and my PhD advisor told me not to work on it for my own good. Today, at ByteDance Seed, we are dropping GR-RL, a new VLA+RL system that manages long-horizon precise

mjlab now supports explicit actuators with custom torque computation in Python/PyTorch. This includes DC motor models with realistic torque-speed curves and learned actuator networks:

github.com/mujocolab/mjlab: Isaac Lab API, powered by MuJoCo-Warp, for RL and robotics research.
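To illustrate what a "realistic torque-speed curve" means, here is a minimal Python sketch of one common DC motor formulation, similar in spirit to the DC motor actuator in Isaac Lab: a PD controller computes a torque, which is then clipped to a speed-dependent band so that the available torque shrinks linearly as the joint spins faster in the direction of the torque. This is not mjlab's API; `DCMotorParams`, `dc_motor_torque`, and every parameter name are hypothetical, and the target joint velocity is assumed to be zero.

```python
from dataclasses import dataclass


@dataclass
class DCMotorParams:
    """Hypothetical parameters for a linear torque-speed DC motor model."""
    kp: float                 # proportional gain of the joint PD controller
    kd: float                 # derivative gain of the joint PD controller
    saturation_torque: float  # stall torque at zero speed (N·m)
    velocity_limit: float     # no-load speed where available torque drops to 0 (rad/s), must be > 0
    effort_limit: float       # continuous torque limit of the actuator (N·m)


def clamp(x: float, lo: float, hi: float) -> float:
    """Clip x into the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def dc_motor_torque(p: DCMotorParams, q_des: float, q: float, qd: float) -> float:
    """Return the torque a DC motor can actually deliver for a PD command.

    Assumption: the target joint velocity is zero, so the PD law is
    kp * (q_des - q) - kd * qd.
    """
    # Torque requested by the PD controller.
    tau = p.kp * (q_des - q) - p.kd * qd
    # Upper bound: falls linearly from the stall torque to 0 at +velocity_limit,
    # and never exceeds the continuous effort limit.
    tau_max = clamp(p.saturation_torque * (1.0 - qd / p.velocity_limit), 0.0, p.effort_limit)
    # Lower bound: the mirror image for torque applied in the negative direction.
    tau_min = clamp(p.saturation_torque * (-1.0 - qd / p.velocity_limit), -p.effort_limit, 0.0)
    return clamp(tau, tau_min, tau_max)
```

With `kp=100`, `kd=2`, a 50 N·m saturation torque, a 10 rad/s velocity limit, and a 30 N·m effort limit, a joint already moving toward its target at 8 rad/s gets roughly 10 N·m instead of the 84 N·m the PD law requests; above 10 rad/s the motor can no longer push in that direction at all.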

Pixels in, contacts out... Perception, interaction, autonomy: the next agenda for humanoids. We learn a multi-task humanoid world model from offline datasets and use MPC to plan contact-aware behaviors from ego-vision in the real world. Project and Code: https://t.co/4SRJ1qD196

MimicKit now has support for motion retargeting with GMR. We also released a bunch of parkour motions recorded from a professional athlete, used in ADD and PARC. Anyone brave enough to deploy a double kong on a G1? 😉

Ever wanted to simulate an entire house in MuJoCo or a very cluttered kitchen? Well now you can with the newly introduced sleeping islands: groups of stationary bodies that drop out of the physics pipeline until disturbed. Check out Yuval's amazing video and documentation 👇

MuJoCo now supports sleeping islands! https://t.co/LWqZ0dRGQn

https://t.co/EThtiDRm6C
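The sleeping-island idea can be illustrated with a toy Python sketch: bodies connected by contacts are grouped into islands with union-find, and an island counts as asleep once every body in it has stayed below a speed threshold for a number of consecutive steps; any motion resets that body's counter and wakes its island. This is an assumption-laden illustration, not MuJoCo's implementation; `build_islands`, `update_sleep`, the threshold, and the step count are all hypothetical, and contacts with the static world are assumed to be excluded so the floor does not merge every body into one island.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure used to merge bodies that touch."""

    def __init__(self, n: int):
        self.parent = list(range(n))

    def find(self, i: int) -> int:
        while self.parent[i] != i:
            self.parent[i] = self.parent[self.parent[i]]  # path halving
            i = self.parent[i]
        return i

    def union(self, a: int, b: int) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def build_islands(num_bodies: int, contacts: list[tuple[int, int]]) -> list[list[int]]:
    """Group bodies that touch, directly or transitively, into islands.

    Assumption: `contacts` lists only body-body pairs (no static world body).
    """
    uf = UnionFind(num_bodies)
    for a, b in contacts:
        uf.union(a, b)
    islands: dict[int, list[int]] = defaultdict(list)
    for body in range(num_bodies):
        islands[uf.find(body)].append(body)
    return list(islands.values())


def update_sleep(islands: list[list[int]], speeds: list[float], still_steps: list[int],
                 threshold: float = 1e-3, steps_to_sleep: int = 10) -> list[list[int]]:
    """Return the islands whose bodies have all been still long enough to sleep.

    `still_steps[body]` counts consecutive steps below `threshold` and is
    updated in place; a body that moves resets its counter, waking its island.
    """
    for body, speed in enumerate(speeds):
        still_steps[body] = still_steps[body] + 1 if speed < threshold else 0
    return [isl for isl in islands if all(still_steps[b] >= steps_to_sleep for b in isl)]
```

In a real engine the sleeping islands would then be skipped by the physics pipeline until a new contact or applied force touches one of their bodies; this sketch only tracks which islands qualify.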

Unlimited scenarios for dexterous manipulation, unlimited data 🤩

😮💨🤖💥 Tired of building dexterous tasks by hand, collecting data forever, and still fighting to build the simulation environment? Meet GenDexHand: a generative pipeline that creates dex-hand tasks, refines scenes, and learns to solve them automatically. No hand-crafted

Excited to share our new work on making VLAs omnimodal — condition on multiple different modalities (one at a time or all at once)! It allows us to train on more data than any single-modality model, and outperforms any such model: more modalities = more data = better models! 🚀

github.com/NHirose/OmniVLA: Official repository for OmniVLA training and inference code.

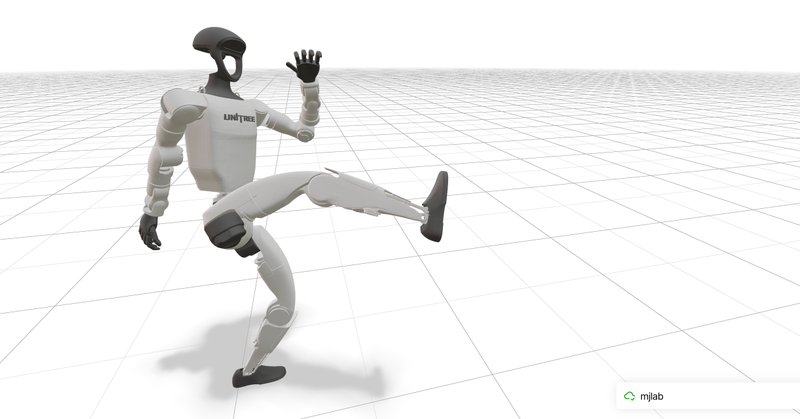

We open-sourced the full pipeline! Data conversion from MimicKit, training recipe, pretrained checkpoint, and deployment instructions. Train your own spin kick with mjlab: https://t.co/KvNQn0Edzr

github.com/mujocolab/g1_spinkick_example: Train a Unitree G1 humanoid to perform a double spin kick using mjlab.

Amazing results! Such motion tracking policies can be trivially trained using our open-source code: https://t.co/3Wp74hK3bC

github.com/HybridRobotics/whole_body_tracking

Unitree G1 Kungfu Kid V6.0: A year and a half as a trainee, and I'll keep working hard! Hope to earn more of your love 🥰

It was a joy bringing Jason’s signature spin-kick to life on the @UnitreeRobotics G1. We trained it in mjlab with the BeyondMimic recipe but had issues on hardware last night (the IMU gyro was saturating). One more sim-tuning pass and we nailed it today. With @qiayuanliao and

Implementing motion imitation methods involves lots of nuisances. Not many codebases get all the details right. So, we're excited to release MimicKit! https://t.co/7enUVUkc3h A framework with high quality implementations of our methods: DeepMimic, AMP, ASE, ADD, and more to come!

Training RL agents often requires tedious reward engineering. ADD can help! ADD uses a differential discriminator to automatically turn raw errors into effective training rewards for a wide variety of tasks! 🚀 Excited to share our latest work: Physics-Based Motion Imitation

Humanoid motion tracking performance is greatly determined by retargeting quality! Introducing 𝗢𝗺𝗻𝗶𝗥𝗲𝘁𝗮𝗿𝗴𝗲𝘁🎯, generating high-quality interaction-preserving data from human motions for learning complex humanoid skills with 𝗺𝗶𝗻𝗶𝗺𝗮𝗹 RL: - 5 rewards, - 4 DR