Tolga Bilge

@TolgaBilge_

Followers: 2,425

Following: 636

Media: 246

Statuses: 1,451

AI policy | Forecaster @Superforecaster @SamotsvetyF @SentinelTeamHQ @Swift_Centre @RANDforecasting | Prev Math @UiB & @UnivOfStAndrews

Norway, sometimes England

Joined January 2022

Pinned Tweet

I think employees of frontier AI companies should be more able to raise AI risk concerns and advocate for the reduction of these risks without fearing their employers.

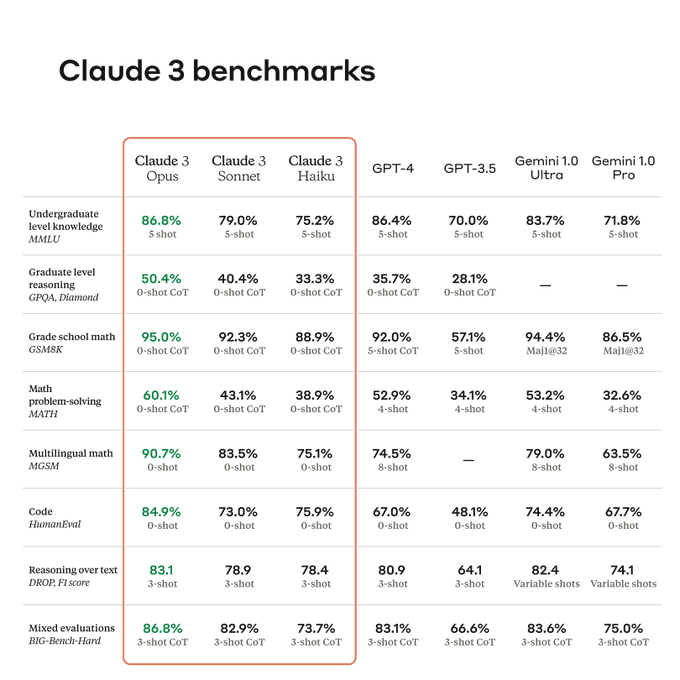

Somewhat interesting advertising choice from Anthropic, comparing their newly released Claude 3 to GPT-4 on release (March 2023).

According to Promptbase's benchmarking, GPT-4-turbo scores better than Claude 3 on every benchmark where we can make a direct comparison.

AI will most likely lead to the end of the world, but in the meantime, we'll get to watch great movies.

Impressive technology, but spare a thought for those young people currently entering the film industry, who certainly will have recognized that OpenAI has stolen their future.

here is sora, our video generation model:

today we are starting red-teaming and offering access to a limited number of creators.

@_tim_brooks

@billpeeb

@model_mechanic

are really incredible; amazing work by them and the team.

remarkable moment.

roon, who works at OpenAI, telling us all that OpenAI have basically no control over the speed of development of this technology their company is leading the creation of.

It's time for governments to step in.

1/🧵

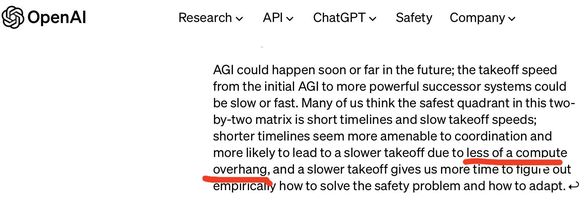

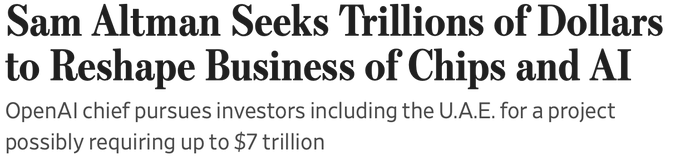

12 Questions for Sam Altman:

1. Why did you argue that building AGI fast is safer because it will take off slowly since there's still not too much compute around (the overhang argument), but then ask for $7T for compute?

2. Why didn't you tell congress your worst fear?

3. Why

I'm talking to Sam Altman (@sama) on podcast again soon. Let me know if you have topics/question suggestions.

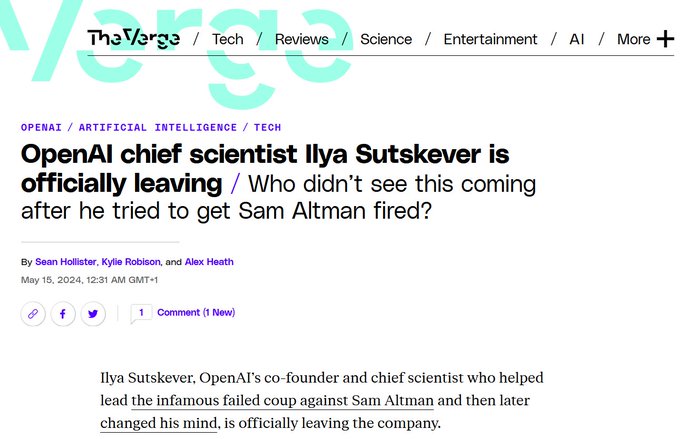

Another two safety researchers leave: Ilya Sutskever (co-founder & Chief Scientist) and Jan Leike have quit OpenAI.

They co-led the Superalignment team, which was set up to try to ensure that AI systems much smarter than us could be controlled.

Not exactly confidence-building.

Disappointing to see Sam Altman today stoking geopolitical tensions, ignoring his own advice.

A US-China AI arms race would be an incredible and unnecessary danger to impose upon humanity. Pursuing it without even first attempting cooperation would be extremely reckless.

"a betrayal of the plan" and almost half of OpenAI's safety researchers resigning in the space of a few months.

If you're listening for fire alarms, you might not get a louder one than this.

So Anthropic managed to tick off just about everyone:

Capabilities fans: Won't get a clearly-better-than-GPT-4 model

Safety enjoyers: The misperception of Anthropic advancing capabilities may nevertheless accelerate dangerous race dynamics

Honesty appreciators: Will be

Finally, we hear Leopold's side of the story on why he was fired from OpenAI.

"a person with knowledge of the situation" had previously told journalists that he was fired for leaking.

For context, Leopold Aschenbrenner was on OpenAI's recently disbanded Superalignment team.

@leopoldasch on:

- the trillion dollar cluster

- unhobblings + scaling = 2027 AGI

- CCP espionage at AI labs

- leaving OpenAI and starting an AGI investment firm

- dangers of outsourcing clusters to the Middle East

- The Project

Full episode (including the last 32 minutes cut

We just published with @SamotsvetyF, a group of expert forecasters, a forecasting report with 3 key contributions:

1. A predicted 30% chance of AI catastrophe

2. A Treaty on AI Safety and Cooperation (TAISC)

3. P(AI Catastrophe|Policy): the effects of 2 AI policies on risk

🧵

Everything I've heard about Leopold Aschenbrenner indicates he is a truly exceptional researcher.

With OpenAI losing him, and with Ilya Sutskever (who was also working on superalignment) sidelined, the company is looking even less credible on its commitment to building safe AI.

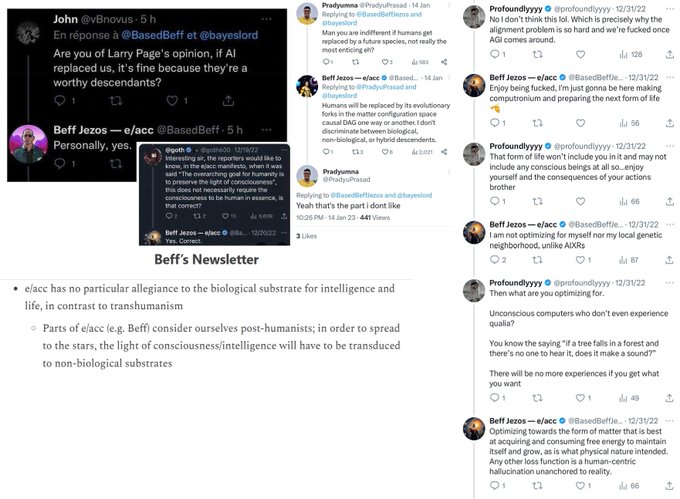

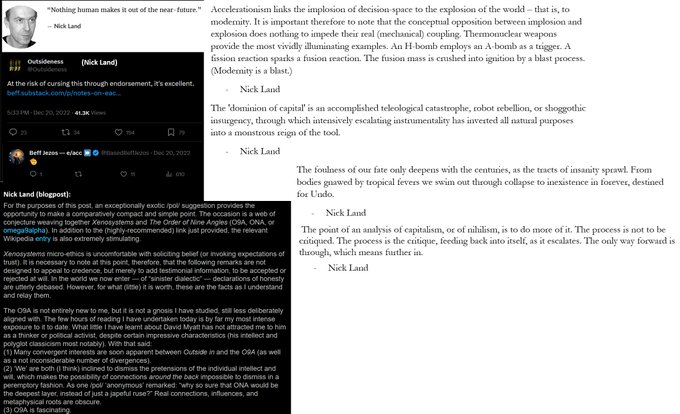

It's quite clear to me that e/acc is just a cheap rebranding of Landian accelerationism.

They share the same core idea: That technocapitalism will result in human extinction and replacement by machines, and that this is to be encouraged, treated with indifference, or even

@benkohlmann

@SecRaimondo

"humanity is only good as an expendable bootloader for the AI systems we build, and humans becoming extinct after this is OK/expected/good" is something a non-negligible tranche of AI guys (prominent examples: Moravec, Sutton) have been on for decades:

Another safety researcher has left OpenAI.

BI is reporting that William Saunders, who worked on Superalignment with Leopold Aschenbrenner (allegedly fired for leaks in April) and Ilya Sutskever (currently MIA), quit the company in February, announcing it on Saturday on LessWrong.

Geoffrey Hinton is right. So-called open sourcing of the biggest models is completely crazy.

As AI models become more capable they should become increasingly useful in bioweapons production and for use in large-scale cyber attacks that could cripple critical infrastructure.

Last year, Sam signed a letter saying that AI existential risk should be a global priority

He also said

"And the bad case is like lights out for all of us"

Now he hints AGI will be a nothingburger

It's curious how closely his words match whatever's most convenient at the time.

Sam Altman seems to judge that even his own personal whims and preferences may significantly impact safety — that AI might not go so well for humans if he valued the beauty in things less.

Is this too much power vested in one man?

@AISafetyMemes

i somehow think it’s mildly positive for AI safety that i value beautiful things people have made

Really interesting thread where roon (an OpenAI employee):

— Highlights that AI poses an existential risk, and that we should be concerned.

— Says there's a 60% probability that AGI will have been built in the next 3 years, and 90% in the next 5 years.

I appreciate his openness.

@ssi

Step 1: Form company with “safe” in the name

Step 2: ???

Step 3: Safe superintelligence!!

Great to see such a powerful statement on AI risks and cooperation from such esteemed scientists as Geoffrey Hinton, Andrew Yao, Yoshua Bengio, Ya-Qin Zhang, Fu Ying, Stuart Russell, Xue Lan, and Gillian Hadfield.

AI risk is one thing on which we can, should, and must cooperate.

Hey, maybe we should pause, or at least slow down?

Seems bad.

"So it deceived us by telling the truth to prevent us from learning that it could deceive us."

@TheZvi

OpenAI's roon says he thinks the models they're building are alive...

Not keen to get into debates now on definitions of "alive", but he's right that the AI industry is building more than just tools.

"Tools" is a term Sam Altman often uses, likely to reassure those with

That they have got 4 current OpenAI employees to sign this statement is remarkable and shows the level of dissent and concern still within the company.

However, it's worth noting that they signed it anonymously, likely anticipating retaliation if they put their names to it.

There are currently certain constraints on how quickly AGI developed within the next 2 or so years could take people's jobs, but this is a bit of a convenient change of belief for Sam Altman, since it diminishes his responsibility.

But eventually, if the most catastrophic

Helen Toner: "That is the default path: nothing, nothing, nothing, until there's a giant crisis, and then a knee-jerk reaction."

Kind of wild to hear this stated so clearly by a former OpenAI board member.

Having agency is terrifying, because with it comes responsibility. So we deny it, but this changes nothing. We should instead embrace it, and strive for the good.

A rumor, but potentially more evidence that when it's crunch time, the people building AGI aren't going to save you:

John Schulman (OpenAI co-founder and Head of Alignment Science) announces he's quitting and joining Anthropic.

The reason he gives for leaving is "my desire to deepen my focus on AI alignment"

He has many positive things to say about OpenAI, and is careful to add: "To be clear,

I think that pushing the meme that the US should be racing China in an AI arms race is misguided at best, and counterproductive in any event.

This often tends to coincide with some of the following beliefs:

(1) China won’t cooperate.

(2) The race is winnable.

(3) The US will not

"i did not know this was happening"

It's weird but I'm often a little skeptical when Sam Altman makes surprising statements that appear to be remarkably convenient.

Did he know? Poll below👇

Bonus Question for Sam Altman: Why do people who've worked for you say you lie and have a reputation for this?

What did I miss?

Anthropic CEO Dario Amodei does make some good points in this interview, highlighting the problem of leaving powerful AI in the hands of private actors in the future.

If he's right, and AI advances as quickly as he thinks, we should be taking steps to solve these problems now.

The ex-employee asks an excellent question.

@leopoldasch

We were supposed to be relaxing? 😬

I think I may have missed the memo, perhaps we could get another 12 months?

I haven't followed AI risk as long as others, but my sense is that AI safety people broadly have consistently underestimated the general public on this.

Building something much more intelligent than yourself is inherently fraught with risk; this is common sense.

I think many

The serious point here is that of course Nadella was correct.

During the OpenAI crisis, Microsoft had OpenAI by the balls. Sam Altman expertly leveraged this to retake control of the company, but he likely also recognized that this relationship is a double-edged sword that could

Sam Altman (OpenAI board member) appointing himself to be a member of the Safety and Security Committee to mark his own homework as Sam Altman (CEO).

A common way to dismiss some AI concerns is to say:

"Oh, it'll be just like the industrial revolution, horses and carts will be replaced with automobiles — and society broadly benefits".

What this misses is: You are now the horse.

What happened to the horses after they were

"I don't think that means we're necessarily going to go to the glue factory. I think it means the glue factory is getting shut down"

#PauseTheGlueFactory

In 2015 Altman told @elonmusk that OpenAI would only pay employees "a competitive salary and give them YC equity for the upside".

Instead, they get around $500k in equity in OpenAI per year, and are threatened with losing this if they say anything bad about OpenAI after leaving.

OpenAI to an employee leaving the company: "We want to make sure you understand that if you don't sign, it could impact your equity."

"That's true for everyone, and we're just doing things by the book."

It's good if people trying to build AGI are transparent about their thoughts on these questions.

But also, if you think we may be faced with catastrophic risks of AI in 1 - 3 years, it seems like a bad idea to be advancing the frontier on that 🤷‍♂️

While founding OpenAI, Sam Altman wrote to @elonmusk: "At some point we'd get someone to run the team, but he/she probably shouldn't be on the governance board."

Sam is now CEO, on the board, and this board just appointed him to the newly created Safety and Security Committee.

I sound kinda dangerous? 👀

As others have pointed out, the real doomers are those who throw their hands up and say we can do nothing but accelerate.

So confident in defeat they invent this kind of masochistic coping mechanism where a loss is a win — and present it as optimism!

AI reads minds, why boomers love Facebook AI posts, live longer with crypto app @Rejuve_AI: AI Eye

Via @CointelegraphZN

Sam Altman 2015: "At some point we'd get someone to run the team, but he/she probably shouldn't be on the governance board"

Sam Altman now: Rejoins the board

And by the way, the employees are compensated to the tune of hundreds of thousands of dollars in OpenAI equity per year.

Signatories also include @GaryMarcus, @tegmark, @NPCollapse, and @lukeprog.

Tegmark and Leahy are among just 100 people attending the world's first AI Safety Summit on Wednesday, a number that includes foreign representatives.

Sign the open letter here!

(This surprised nobody)

If you take billions in investment from Microsoft, and make yourself dependent on their cloud compute credits, you're going to get pushed around to serve their interests.

"we felt it was against the mission for any individual to have absolute control over OpenAI"

With one small exception...

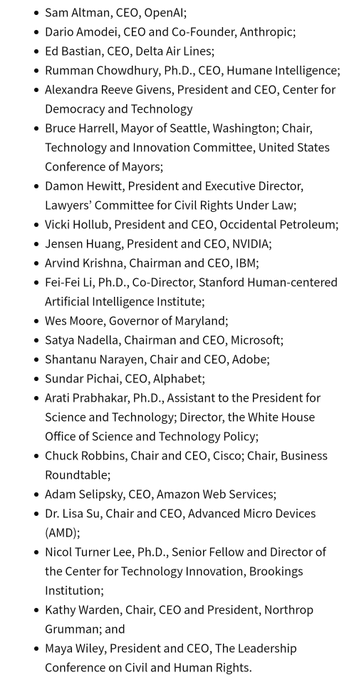

New DHS AI Safety and Security Board includes CEOs of OpenAI, Anthropic, Google, Microsoft, Nvidia, AMD, AWS, IBM, Cisco, Adobe, Delta Air Lines, Occidental Petroleum, and Northrop Grumman.

Sure does look like regulatory capture to me. Almost none with any AI safety credentials.

It is worth taking a step back and noting that it is actually insane how weakly frontier AI companies are regulated, given that they're building what they themselves expect will be the most powerful and most dangerous technology ever.

We have all the IP rights and all the capability. 😝

We have the people, we have the compute, we have the data, we have everything. 🌎

We are below them, above them, around them. 😈

— Microsoft CEO Satya Nadella on OpenAI, after too much time with Sydney

(Emojis: my addition)

@MartinShkreli

I'm quite sure that people like Daniel Kokotajlo really care. He gave up (at least) 85% of the net worth of his family just so he could have the opportunity to criticize OpenAI in the future:

I've been surprised by how little discussion I've seen of this

What does he mean by "certain demographics"? Like he found college students on college campuses? lol

I don't know what these students told Sam Altman, but I don't find it at all surprising that people treat what he says with skepticism.

I've talked to many people offline about

It's remarkable that, 1.5 years after GPT-4 was trained, people are still discovering new ways in which it is more capable than assumed.

A problem for evaluations and so-called Responsible Scaling Policies, as models may have latent capabilities not evident until after release.

Just going to point out that Adam, CEO of Quora, is also a member of the board of OpenAI. Quora is receiving $75M in funding from Andreessen Horowitz, a venture capital firm co-founded by accelerationist Marc Andreessen.

It seems suboptimal to be funded by a firm like

@tegmark

@Microsoft

@OpenAI

Move along, nothing to see here. Doomsday prophecies are as old as time itself.

@Scott_Wiener

@geoffreyhinton

@lessig

Makes perfect sense to regulate what will be the most dangerous technology ever.

Something that Californians overwhelmingly support:

🚨 New @TheAIPI polling shows that a strong bipartisan majority of Californians support the current form of SB1047, and overwhelmingly reject changes proposed by some AI companies to weaken the bill.

Especially notable: "Just 17% of voters agree with Anthropic’s proposed

It's good to see strong support across the US political spectrum for AI regulation.

• 77% say government should do more to regulate AI

• 65% say government should be regulating, rather than leaving it to self-regulation

• Most Americans support a bipartisan effort on this

Yet another AI safety researcher has left OpenAI.

Gizmodo and @ShakeelHashim (in his newsletter) report that Cullen O'Keefe, who worked on AI governance, also quit the company last month. The reports follow his posting the news on his LinkedIn and in a footnote on his blog, Jural Networks.
