Deb Raji

@rajiinio

Followers

29K

Following

15K

Media

183

Statuses

6K

AI accountability, audits & eval. Keen on participation & practical outcomes. CS PhDing @UCBerkeley. forever @AJLUnited, @hashtag_include ✝️

Joined April 2018

These are the four most popular misconceptions people have about race & gender bias in algorithms. I'm wary of wading into this conversation again, but it's important to acknowledge the research that refutes each point, despite it feeling counter-intuitive. Let me clarify. 👇🏾

FOUR things to know about race and gender bias in algorithms: 1. The bias starts in the data. 2. The algorithms don't create the bias but they do transmit it. 3. There are a huge number of other biases. Race and gender bias are just the most obvious. 4. It's fixable! 🧵👇

27

1K

3K

One day we are going to wake up, after years of the consistent and pernicious pollution of critical information ecosystems, and deeply regret this era of thoughtless LLM deployment. A devastating loss of accuracy, clarity and conciseness -- for what?

The internet has been progressively diluted with AI-generated slop. Are medical records headed for the same fate? 🧵 I just published a perspective in @NEJM with @AdamRodmanMD and Arjun Manrai on why rushing AI into medical documentation could be a mistake.

29

781

3K

ICYMI @jovialjoy + @AOC = 🔥🔥🔥 My fave exchange from House Oversight hearing on facial recognition: "We saw that these algorithms are effective to different degrees. Are they most effective on women?" "No." "Are they most effective on people of color?" "Absolutely not."

53

382

2K

She didn't resign, a meta-thread. #IStandWithTimnit.

I understand the concern over Timnit’s resignation from Google. She’s done a great deal to move the field forward with her research. I wanted to share the email I sent to Google Research and some thoughts on our research process.

12

396

1K

I'm starting a CS PhD @Berkeley_EECS this Fall, working with @beenwrekt & @red_abebe! Doing a PhD is a casual decision for some - that wasn't the case for me. I appreciate everyone that respected me enough to understand this & continued to work with me to figure things out. 💕

72

33

1K

This reveals so much about how little we meaningfully discuss data choices in computer science education. Data are at the locus of pretty much every tech policy issue - labor, bias, environmental, copyright, privacy, security, toxicity, safety, etc. It is literally politics!

I teach computer science and challenge him to find any politics in my class. When political opinions start meddling with scholarship, it ceases being science and becomes activism.

19

173

881

In a wild follow up to this, Westlaw tried to say the researchers that found 1 in 6 (!) false responses from their AI tools were "auditing the wrong product". So those researchers asked for access to the "right" product and found hallucinations in 1 in 3 responses 🤦🏾♀️

A similar situation happened recently - LexisNexis & Thomson Reuters (parent co of Westlaw) have both released AI products to be used by actual lawyers. Stanford researchers (incl. Dan Ho, @chrmanning ) found that the tech was disastrously inappropriate:

8

238

775

I'm one of @techreview's 35 Under 35 Innovators! I've known since early May, but it feels esp. meaningful to be seen & have this work featured in this moment. Thanks to @jovialjoy, @timnitGebru, @mmitchell_ai for guiding my growth from the beginning. 💞

65

104

724

I cannot count the number of times @timnitGebru has encouraged us, spoken out for us, defended us & stuck her neck out for us. She has made real sacrifices for the Black community. Now it's time to stand with her! Sign this letter to show your support.

12

148

630

People need to understand that what's happening to @timnitGebru & @mmitchell_ai at Google, can happen to any Black woman and their allies, anywhere. I'd especially appreciate if people chilled on framing this as a corporation specific thing - it's not. This happens everywhere.

5

132

600

Ok, I can *finally* announce this: Thrilled to be a full-time @mozilla fellow this year! 🥺 I'll be finally given the freedom to dedicate 100% of myself to a research agenda that has come to mean so much to me! It's kind of a scary time to be independent rn but boy am I ready.

42

16

600

Truly pisses me off how often ppl will try to erase @jovialjoy & @timnitGebru's role in waking up the entire facial recognition industry to their obvious bias problem. They will literally cite everything & everyone before citing these two and it makes zero sense. #CiteBlackWomen

5

175

560

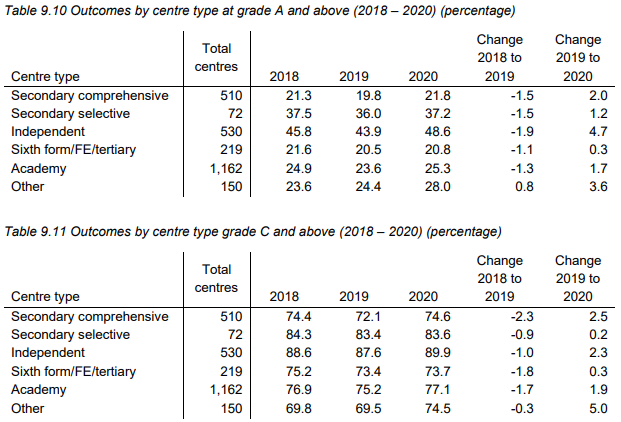

This is actually unbelievable. In the UK, students couldn't take A-level exams due to the pandemic, so scores were automatically determined by an algorithm. As a result, most of the As this year - way more than usual - were given to students at private/independent schools. 😩

Looks like sixth form and FE colleges have particularly lost out in the standardization process for this year's A Level results. Private schools reaping the benefits with a huge increase in grade A and above.

12

283

523

To all the people scammed by @sirajraval that found themselves out of $199 and still without any idea of what ML is, I suggest @fastdotai. It’s free, it’s a solid start and @jeremyphoward and @math_rachel are amongst the loveliest people I’ve ever met.

16

92

518

Serious question: Why is @nature publishing phrenology? I'm alarmed that someone wrote this but more alarmed that multiple reviewers somehow let this get accepted. The conclusions are not just offensive but highly questionable. So many biased and completely unfounded claims.

11

105

426

A similar situation happened recently - LexisNexis & Thomson Reuters (parent co of Westlaw) have both released AI products to be used by actual lawyers. Stanford researchers (incl. Dan Ho, @chrmanning ) found that the tech was disastrously inappropriate:

5

100

411

I said this because I believe it: “It was really easy for them to fire her because they didn’t value the work she was doing”. Unfortunately for Google, much of the research community disagrees. Proud to continue standing by @timnitGebru & @mmitchell_ai.

4

84

386

Lists like this can feel silly but I don't take for granted any opportunity to highlight the work I'm fortunate to do. Feeling especially grateful for my @mozilla teammates @Abebab, @brianavecchione, @ryanbsteed & @OjewaleV 🙏🏿 Excited for what's to come!

29

40

396

🗣️ Finally, it's out! It's here! In this paper, me, @morganklauss & @amironesei present work on a topic people remain hesitant to discuss - the **power dynamic between disciplines** & how that shapes poor AI ethics education and practice. Check it out:

11

92

364

3. Race & gender can be the *least* obvious biases to detect. As legally protected attributes, they're the least likely to be included in meta-data & we don't succeed at identifying accurate proxies. Here's a recent @FAccTConference paper about this:

1

31

348

My main takeaway from this workshop is how much the ML field is literally exporting its chaos into other scientific disciplines. Well-meaning researchers see ML as a way to make sense of their data, but then fall into one of the many trap doors to invalid or nonsensical models.

Dozens of scientific fields that have adopted machine learning face a reproducibility crisis. At a Princeton workshop today, ten experts discuss how to find and fix ML flaws. We had to cap Zoom signups at 1,600, but we’ve added a livestream. Starting NOW:

7

85

343

This is quite literally the thesis of our paper "Data and its (dis)contents: A survey of dataset development and use in machine learning research". I hope ML researchers will take a look!

In general, there is very little research done on best practices for data curation / cleaning / annotation, even though these steps have more impact on applications than incremental architecture improvements. Preparing the data is an exercise left to the reader.

7

73

349

Speaking out against censorship is now "inconsistent with the expectations of a Google manager". She did that because she cares more and will risk everything to protect those she has hired to work under her - a team that happens to be more diverse than any other at Google.

However, we believe the end of your employment should happen faster than your email reflects because certain aspects of the email you sent last night to non-management employees in the brain group reflect behavior that is inconsistent with the expectations of a Google manager.

4

36

315

This is such a fundamental category error? Go & poker are games with a fixed number of known states - computationally difficult but clearly feasible if you pattern match efficiently (which is what deep learning is good at). Writing a novel is a different type of task completely.

First they said AI can’t play Go, because it’s too complicated. Then they said AI can’t win at poker, because it’s a “people game”. Today, the skeptics say “AI won’t write novels”. Surely once that happens, though, the goalposts won’t move again?

19

54

328

“It’s quite obvious that we should stop training radiologists,” - Geoffrey Hinton, 2016. “radiologists should be worried about their jobs” - Andrew Ng, 2018. 2019: "Clinically Meaningful Failures in Machine Learning for Medical Imaging". lol.

Technologists proclaiming that AI will make various professions obsolete is like if the inventor of the typewriter had proclaimed that it will make writers and journalists obsolete, failing to recognize that professional expertise is more than the externally visible activity.

5

73

309

2. Much of the de-biasing work makes it clear that algorithmic design choices can lead to *more fair* outcomes (w/ a "fixed" dataset) so it shouldn't be surprising that algorithmic design can also lead to *less fair* outcomes. @sarahookr explains it here:

Yesterday, I ended up in a debate where the position was "algorithmic bias is a data problem". I thought this had already been well refuted within our research community but clearly not. So, to say it yet again -- it is not just the data. The model matters. 1/n

1

26

304

They paid them and asked for consent. This is larger & more ethically sourced than any other evaluation dataset for testing demographic bias so far. Functionality is just one of *many* issues, of course, but now there's literally no excuse for a tool not to work on minorities.

As part of our ongoing efforts to help surface fairness issues in #AI systems, we’ve open sourced a new data set of 45,186 videos to help evaluate fairness in #computervision and audio models across age, gender, apparent skin tone, & ambient lighting.

8

61

312

Not at all an exaggeration to say that I'm in research today (and will hopefully continue) because of the support of groups like @black_in_ai. For anyone volunteering for any affinity group, know that your work is important & making a huge difference. Representation matters!

11/n Congratulations to Deb Raji @rajiinio from the University of Toronto. Deb is joining @Berkeley_EECS @berkeley_ai as a PhD student working on evaluation, audits, & accountability. Deb shares "Thanks to BAI for all their hard work in supporting students in various ways.

16

29

313

I wrote "The Discomfort of Death Counts" for Cell's data science journal @Patterns_CP, for their series on COVID-19 & data. I thought I would be analyzing figures - but I didn’t. Instead, it's about seeing data as humans so we can properly mourn them. 💔

10

121

296

Still can't believe what happened. It's unreal. @mmitchell_ai fought for me. She would remind me to speak up & take credit for things I did, when others in a meeting would talk over or ignore me. @timnitGebru hypes me up every time. I wanted to quit, she's the reason I didn't.

As I process the abrupt firing of my manager @mmitchell_ai, in the wake of @timnitGebru’s firing, I keep coming back to the incredible feat they achieved in building such an incredibly diverse team, and one that truly thrived for a time, 1/

1

42

291

I catch glimpses of this perspective often - it genuinely surprises me. AI critics are not haters reluctant to give tech ppl their due credit. This in fact has nothing to do with tech ppl, is not at all personal. This is about those impacted & the harms they are now vulnerable to.

I think some are reticent to be impressed by AI progress partly because they associate that with views they don't like--e.g. that tech co's are great or tech ppl are brilliant. But these are not nec related. It'd be better if views on AI were less correlated with other stuff. 🧵

7

57

298

To put this in context: Facebook pioneered the use of deep learning for facial recognition with the DeepFace model in 2014, because they had more face data than anyone else at the time. For them to now (finally) reject this technology is a big deal.

My jaw dropped to the floor when I got this news. Facebook is shutting down the facial recognition system that it introduced more than 10 years ago and deleting the faceprints of 1 billion people:

8

93

297

Thrilled to see @jovialjoy in @voguemagazine! And challenging the biased image search results for "beautiful skin" 💞. We've gone mainstream, people!

5

44

283

Expressed intent to resign - provided date + immediate termination = legally fired in the state of California.

is an interesting read on whether someone who expresses an intent to resign but whose employer stops paying them before a provided date has resigned or been fired under California law.

3

16

272

The thing is - @AllysonEttinger wrote a whole paper years ago warning about this exact situation. In those experiments, BERT failed *every single negation test*! Did that deter deployment? Somehow, no. At some point, these institutions need to take basic responsibility. 😕

4

66

280

No, we shouldn't let people publish whatever they want. Ethical reflection is a basic part of the scientific process and part of what it means to do "good science". Pretty much every other applied research field has to think about this so what exactly makes ML exempt?

so, let people in academia publish on whatever they want. unless it is bad science, and then, sure, don't publish it. and put the emphasis where it matters: regulating and controlling the *actual deployment* of systems.

12

48

274

I feel like CS academics in particular are underestimating the amount of politicking & relentless advocacy it takes to get to something like this. Is this perfect? Of course not. Multiple different groups fought over every word! But is this a rare & monumental win? Absolutely.

Today, @POTUS and I announced an Executive Order to ensure our nation leads the world in artificial intelligence. This historic EO is the most significant action taken by any government in the world to ensure AI advances innovation and protects rights and safety.

8

38

274

Amazing news! 🎉🎉🎉 Rashida Richardson is one of my favorite scholars. Her work has been transformational in the AI accountability space - she's revolutionized how we talk about data & race! For those unfamiliar, here are some of her papers I learnt a lot from. (THREAD) ⬇️

I am pleased to welcome Rashida Richardson to the Science and Society team @WHOSTP. Her combination of legal, policy, and civil rights insight into emerging technologies and automated systems is incisive and unparalleled.

3

71

257

"Raji didn’t see people like herself onstage or in the crowded lobby. Then an Afroed figure waved from across the room. It was @timnitGebru. She invited Raji to the inaugural @black_in_ai workshop. " . Will never forget this! Timnit impacted so many. ❤️.

4

50

266

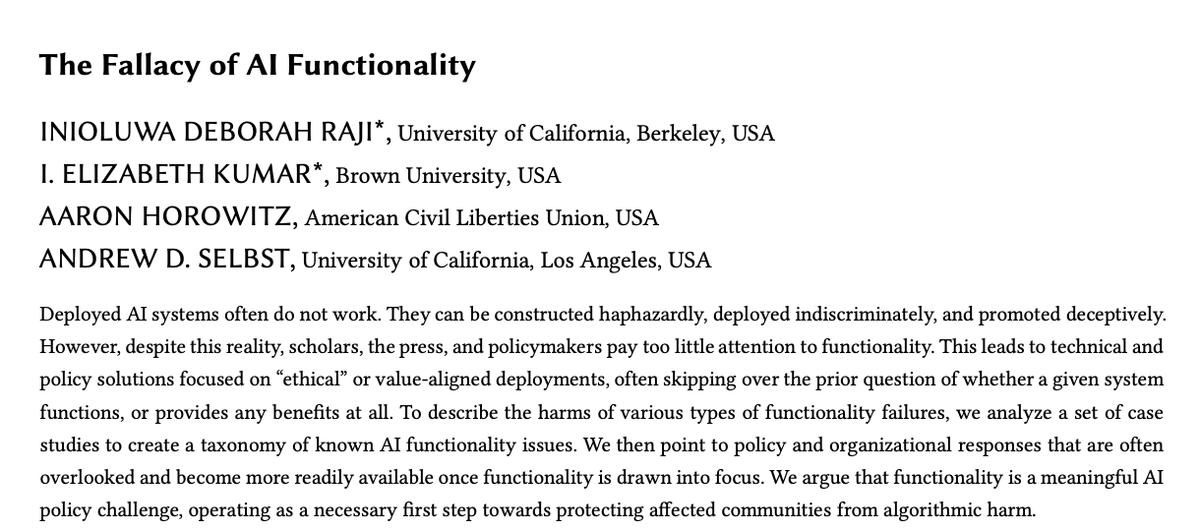

In all the excitement of #FAccT22, I forgot to actually announce what I'll be presenting! Fortunate to be involved with 3 papers, centered on my interest in algorithmic accountability. The first: We discuss how current AI policy ignores the fact that many AI systems don't work.

2

56

263

After Schumer’s forum, so much of the focus from media & government officials was anchored to the speculations of the wealthy men in the room. So I wrote in @TheAtlantic about another view -- the grounded complexity brought by unions & civil society:

8

86

252

The story of what happened to @timnitGebru goes far beyond Google. My brother David (who's only ever half-interested in anything I do) was so shook by the situation, he wrote a blog post about it! Some good stuff there - if so inclined, check it out!

2

58

248

I'm getting tired of this pattern. At this point, @jovialjoy has to spend almost as much time actively fighting erasure as she does just doing her work. It's a waste of everyone's energy & so frustrating to watch her, the spark of this conversation, being consistently overlooked!

1

64

236

If AI is the solution to healthcare why did California nurses go on strike against it in April? It's so wild to me how disconnected these two worlds can be.

Today Sam Altman and I published a piece in TIME sharing our vision for how AI-driven personalized behavior change can transform healthcare and announcing the launch of Thrive AI Health, a new company funded by the OpenAI Startup Fund and Thrive Global, which will be devoted to

7

69

259