(((ل()(ل() 'yoav))))👾

@yoavgo

Followers

65K

Following

72K

Media

3K

Statuses

137K

what there is no overwhelming agreement on is what will happen the day after. how do we build a livable future here, for both israelis and palestinians. israel leadership is not thinking of this now, future looks grim. THIS is where protest effort and outrage should be invested.

14

12

125

this "feature" in code editors where you type an opening character and it immediately inserts the closing one for you as well -- why?? at the very very best case, you will have to skip over this character with an arrow. so why?

8

0

19

can you explain "serverless backend" to me? it seems to me that their main/only purpose is to hide API keys that you don't want to expose to the client. am I missing something?

7

0

6

i created this thingy yesterday and now I cannot stop watching it. https://t.co/Dp0r3COZrR

11

4

104

arxiv took their time with this paper because apparently they moved it from AI to HCI. it indeed belongs in both, but I still believe it is much more about AI than it is about HCI. but now we have an opportunity to post it again, and it IS a really neat result, imo. so, a win.

When reading AI reasoning text (aka CoT), we form a narrative about the underlying computation process, which we take as a transparent explanation of model behavior. But what if our narrative is wrong? We measure that and find it usually is. Now on arXiv:

1

1

12

When reading AI reasoning text (aka CoT), we form a narrative about the underlying computation process, which we take as a transparent explanation of model behavior. But what if our narrative is wrong? We measure that and find it usually is. Now on arXiv:

arxiv.org

A new generation of AI models generates step-by-step reasoning text before producing an answer. This text appears to offer a human-readable window into their computation process, and is...

Producing reasoning texts boosts the capabilities of AI models, but do we humans correctly understand these texts? Our latest research suggests that we do not. This highlights a new angle on the "Are they transparent?" debate: they might be, but we misinterpret them. 🧵

2

3

16

agents hold a huge promise. building strong, good, interactive and multipurpose ones turns out to be very hard (happy to discuss the challenges). in this project, we attempt to do that, and push forward both useful functionality *and* the science of agent building.

Introducing Asta—our bold initiative to accelerate science with trustworthy, capable agents, benchmarks, & developer resources that bring clarity to the landscape of scientific AI + agents. 🧵

2

5

83

Everybody posting about AI in programming sounds like this to me. https://t.co/3J62fBK27g

59

116

2K

@connoraxiotes The debate about whether AI is actually conscious is, for now at least, a distraction. It will seem conscious and that illusion is what’ll matter in the near term

20

4

82

crazy that this is considered controversial / surprising in some circles

this mustafa suleyman blog is SO interesting — i'm not sure i've seen an AI leader write such strong opinions *against* model welfare, machine consciousness etc https://t.co/sQiXM3zwTI

9

1

63

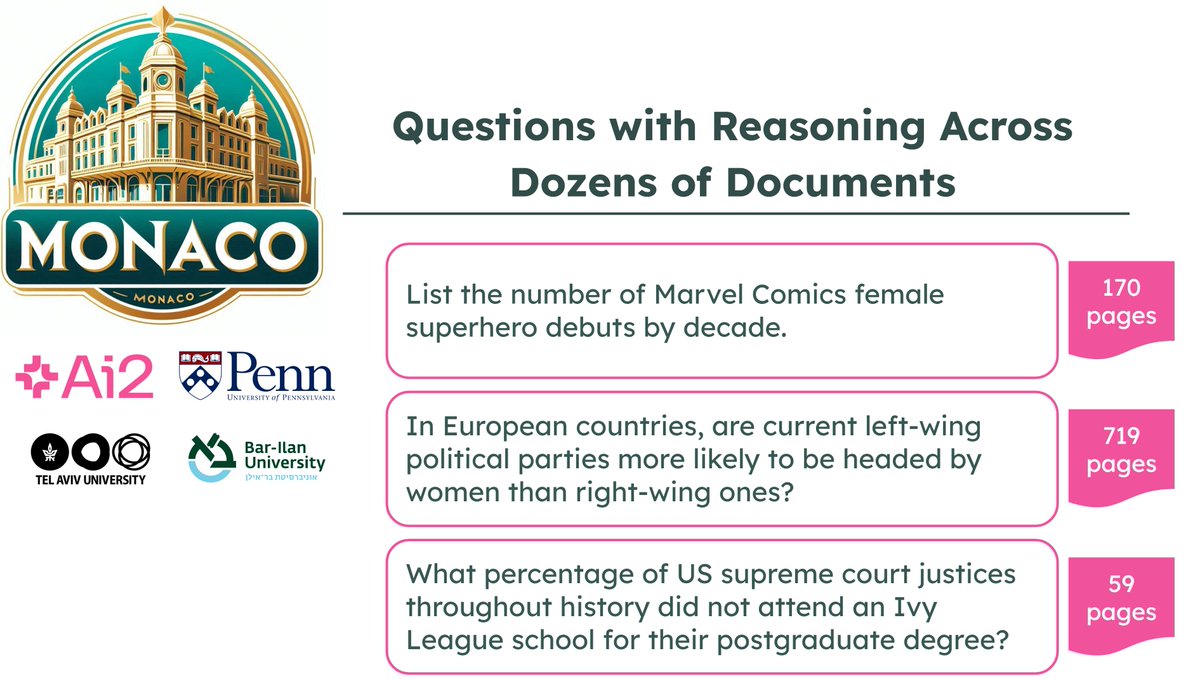

LLMs power research, decision‑making, and exploration—but most benchmarks don’t test how well they stitch together evidence across dozens (or hundreds) of sources. Meet MoNaCo, our new eval for question-answering cross‑source reasoning. 👇

10

40

223

Many factual QA benchmarks have become saturated, yet factuality still poses a very real issue! ✨We present MoNaCo, an Ai2 benchmark of human-written time-consuming questions that, on average, require 43.3 documents per question!✨ 📣Blogpost: https://t.co/GQD83gdHgg 🧵(1/5)

1

14

39

this is really cool to me, as Reasoning LLMs use language *effectively*. The language-use improves the computation's accuracy. But the work shows they use it in a very different way from us. Its a different way of using the same language!

Implication 2: Reasoning models use language effectively yet differently than humans. We believe that now we should look more into how humans interpret AI-produced language, not only how AIs parse ours.

1

0

28

The test is very simple: given a reasoning text with a highlighted target step t, identify the one out of four candidate steps that, if removed, will change the target step. We administered it to 80 participants online. You can test yourself here: https://t.co/le1mw2vd3W

1

3

10

in this work, we show that reasoning texts are really not transparent, in the sense that we (humans) are really bad in inferring how the steps (really) depend on each other for the model. yoo can test yourself here:

Producing reasoning texts boosts the capabilities of AI models, but do we humans correctly understand these texts? Our latest research suggests that we do not. This highlights a new angle on the "Are they transparent?" debate: they might be, but we misinterpret them. 🧵

2

14

98

Even when AI reasoning traces are “transparent”, the narrative they encode can differ from the one humans perceive. The transparency debate isn’t just about revealing the model’s steps, it’s about the mismatch between the model’s story and the one we construct from it.

Producing reasoning texts boosts the capabilities of AI models, but do we humans correctly understand these texts? Our latest research suggests that we do not. This highlights a new angle on the "Are they transparent?" debate: they might be, but we misinterpret them. 🧵

0

2

12

Producing reasoning texts boosts the capabilities of AI models, but do we humans correctly understand these texts? Our latest research suggests that we do not. This highlights a new angle on the "Are they transparent?" debate: they might be, but we misinterpret them. 🧵

8

27

137