Mufan (Bill) Li

@mufan_li

Followers: 855

Following: 496

Media: 78

Statuses: 383

Postdoc @Princeton ORFE | Prev: PhD @UofTStatSci @VectorInst

Toronto, Ontario

Joined March 2014


Pinned Tweet

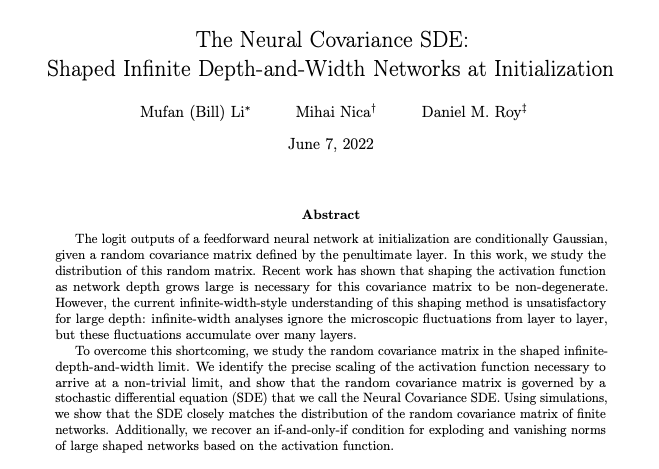

I’m excited to announce that in July 2025 I will be joining @UWaterloo as an Assistant Professor in the Department of Statistics and Actuarial Science! Until then, I will continue at Princeton as a DataX Postdoc Fellow, working with Boris Hanin.

I have many exciting projects


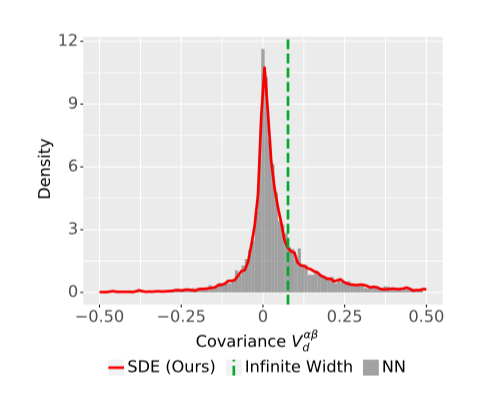

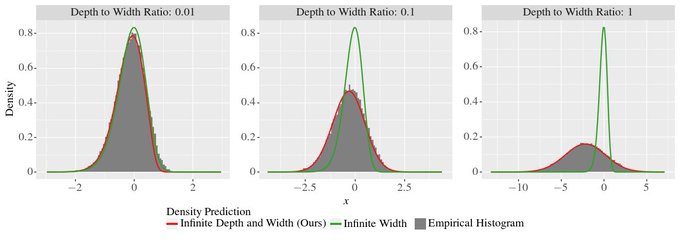

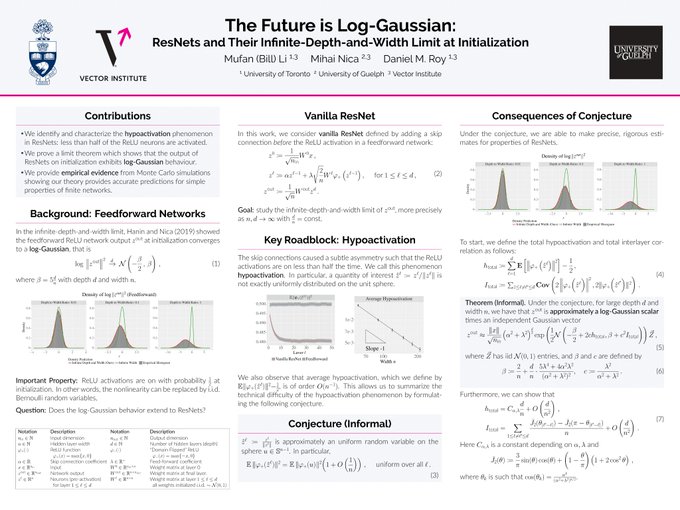

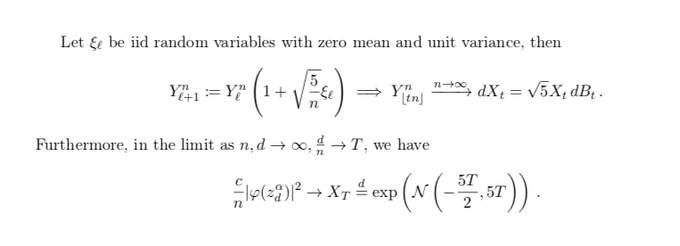

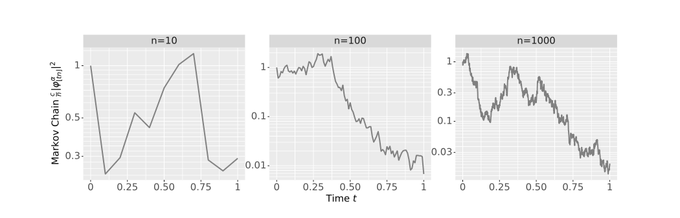

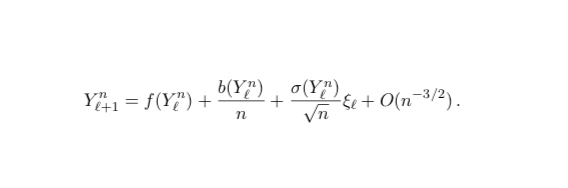

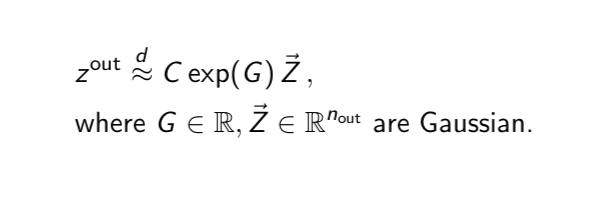

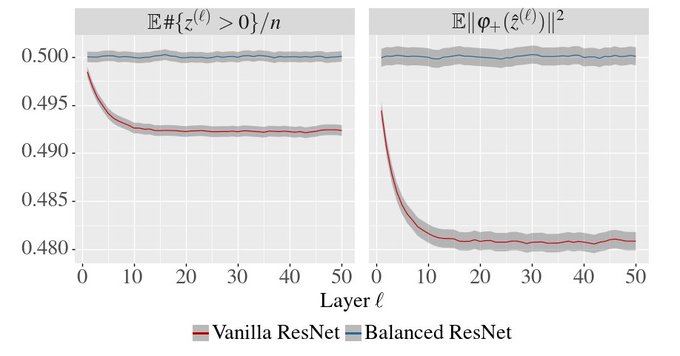

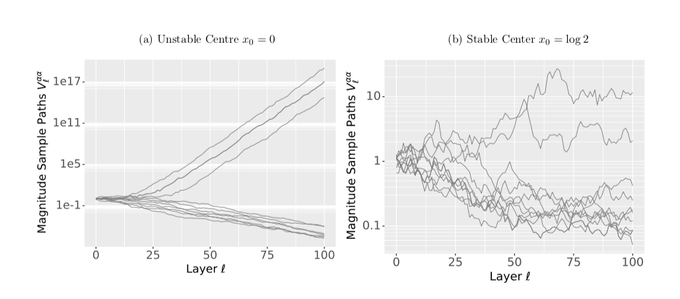

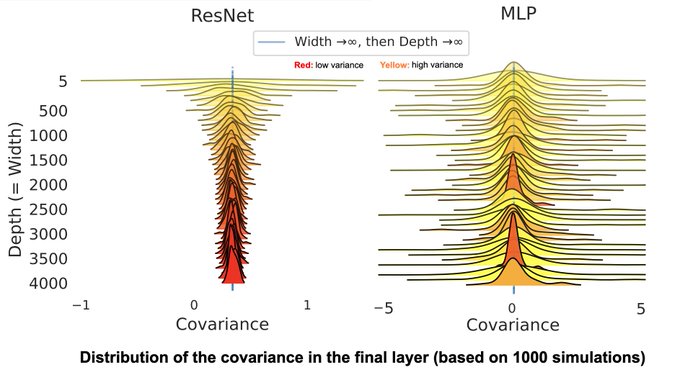

There has been a flurry of work beyond the infinite-width limit. We study the infinite DEPTH-AND-WIDTH limit of ReLU nets with residual connections and see remarkable (!) agreement with STANDARD finite networks. Joint work w/ @MihaiCNica @roydanroy


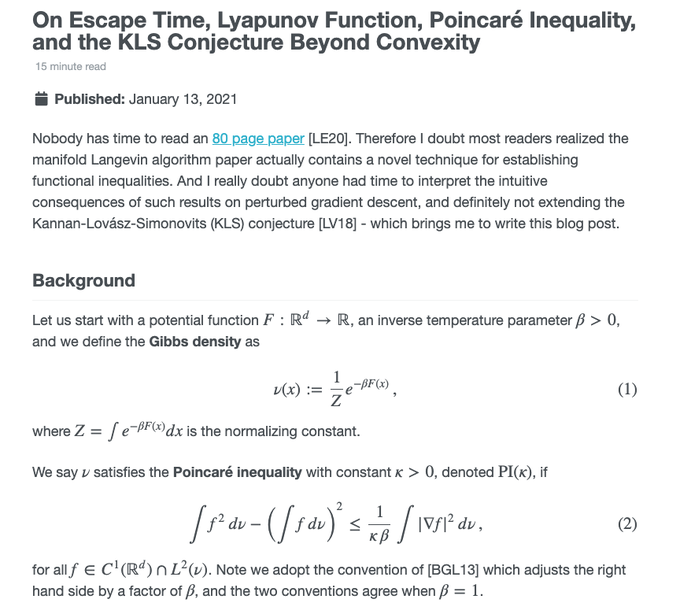

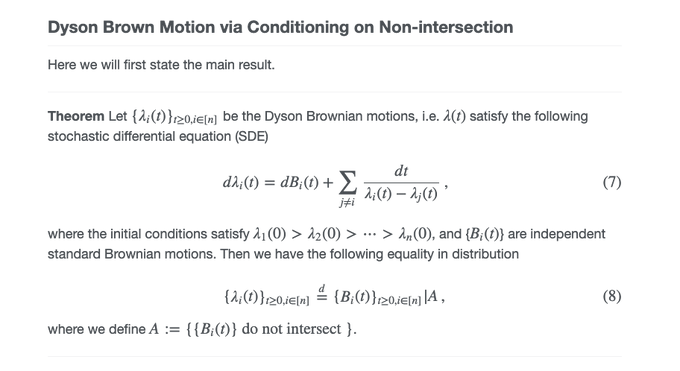

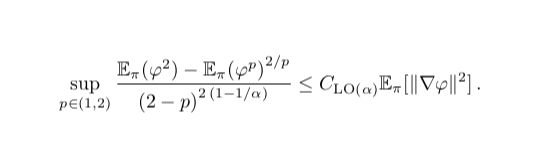

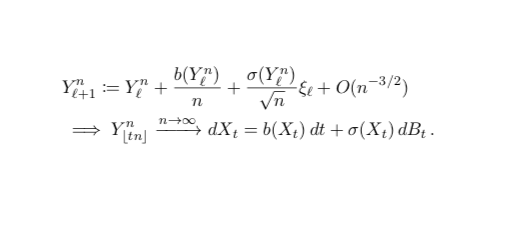

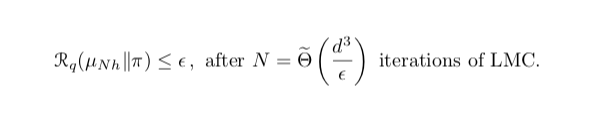

What is the complexity of sampling using Langevin Monte Carlo (LMC) under a Poincaré inequality? We provide the first answer to this open problem.

Joint work with Sinho Chewi, @MuratAErdogdu, Ruoqi Shen, and Matthew Zhang
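For readers new to LMC, here is a minimal sketch of the unadjusted Langevin algorithm. Its update is x_{k+1} = x_k - h ∇V(x_k) + sqrt(2h) ξ_k for a target proportional to exp(-V). The standard Gaussian target, the step size h = 0.1, and the number of chains are illustrative assumptions, not the setting of the paper. This target satisfies a Poincaré inequality, but it is far simpler than the cases the paper handles.

```python
import numpy as np

def langevin_monte_carlo(grad_V, x0, step, num_steps, rng):
    """Unadjusted Langevin algorithm, run on many independent chains at once.

    Update: x <- x - step * grad_V(x) + sqrt(2 * step) * N(0, I).
    """
    x = np.array(x0, dtype=float)
    for _ in range(num_steps):
        noise = rng.standard_normal(x.shape)
        x = x - step * grad_V(x) + np.sqrt(2.0 * step) * noise
    return x

# Illustrative target: standard Gaussian, V(x) = x^2 / 2, so grad_V(x) = x.
rng = np.random.default_rng(0)
samples = langevin_monte_carlo(lambda x: x, x0=np.full(20_000, 3.0),
                               step=0.1, num_steps=300, rng=rng)
print(samples.mean(), samples.var())
```

For this Gaussian target the chain's stationary variance is 1 / (1 - h/2) rather than 1. This is the discretization bias of the unadjusted scheme, and it shrinks as h goes to 0.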


@rasbt

@liranringel

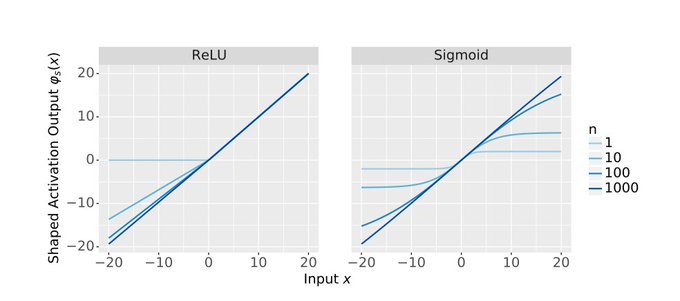

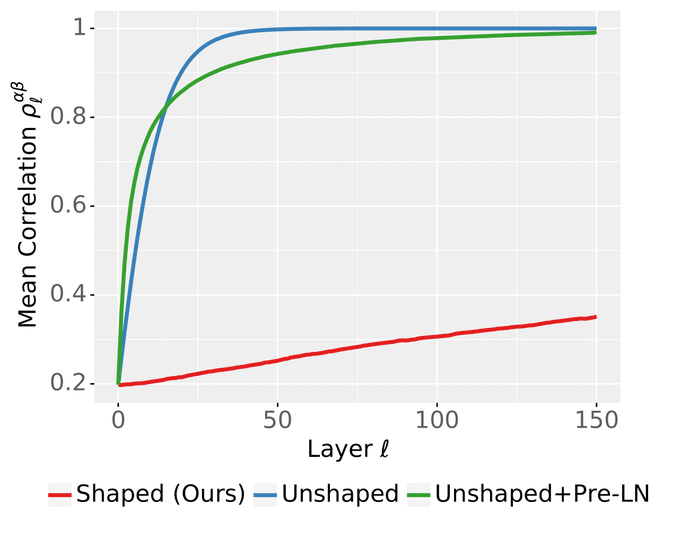

It turns out that naively stacking non-linearities in deep networks is the core cause of unstable gradients. Shaping them at a precise, size-dependent rate is the key to extending the network to arbitrary depth.

See e.g.
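One way to see the degeneracy is through the infinite-width correlation map of a leaky-ReLU-type activation with slope s_+ for x > 0 and slope s_- for x < 0. Write φ(x) = a x + b|x| with a = (s_+ + s_-)/2 and b = (s_+ - s_-)/2. For standard Gaussian inputs with correlation ρ, the output correlation is then f(ρ) = (a²ρ + b²(2/π)(sqrt(1 - ρ²) + ρ arcsin ρ)) / (a² + b²).

The sketch below iterates this map for plain ReLU and for a "shaped" variant whose slopes are 1 + c_±/sqrt(n). The constants c_+ = 0 and c_- = -1, width n = 64, and depth 64 are assumptions for illustration. The map also ignores the finite-width fluctuations that a depth-and-width analysis actually tracks.

```python
import math

def correlation_map(rho, s_plus, s_minus):
    """Infinite-width output correlation for phi(x) = s_plus*max(x, 0) + s_minus*min(x, 0).

    Writes phi(x) = a*x + b*|x| and uses, for standard Gaussians u, v with
    correlation rho: E[u v] = rho, E[u |v|] = 0, and
    E[|u| |v|] = (2/pi) * (sqrt(1 - rho^2) + rho * asin(rho)).
    """
    rho = max(-1.0, min(1.0, rho))  # guard against rounding just outside [-1, 1]
    a = 0.5 * (s_plus + s_minus)
    b = 0.5 * (s_plus - s_minus)
    abs_term = (2.0 / math.pi) * (math.sqrt(1.0 - rho * rho) + rho * math.asin(rho))
    return (a * a * rho + b * b * abs_term) / (a * a + b * b)

def iterate_map(rho0, depth, s_plus, s_minus):
    """Apply the correlation map `depth` times, one step per layer."""
    rho = rho0
    for _ in range(depth):
        rho = correlation_map(rho, s_plus, s_minus)
    return rho

width = depth = 64
relu_rho = iterate_map(0.0, depth, s_plus=1.0, s_minus=0.0)
# Hypothetical shaping: slopes 1 + c/sqrt(width) with c_plus = 0, c_minus = -1.
shaped_rho = iterate_map(0.0, depth, s_plus=1.0, s_minus=1.0 - 1.0 / math.sqrt(width))
print(f"ReLU: {relu_rho:.3f}   shaped: {shaped_rho:.3f}")
```

With these settings the plain ReLU correlation is driven close to 1, so distinct inputs become nearly indistinguishable. The shaped correlation moves only slightly. Since b² = c²/(4n), each shaped layer changes ρ by O(1/n), so depth comparable to width gives an O(1) rather than degenerate effect.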


Come visit our NeurIPS poster tonight at 7:30-9pm EST to learn about the infinite-DEPTH-AND-WIDTH limit of ResNets!


@karen_ullrich

@y0b1byte

@BahareFatemi

I think "systemic" is key here. From my experience talking to other grad students, essentially everyone admits they have the same problems, even the ones that are doing well in terms of publication. There's definitely something wrong with the environment.


@shortstein

@icmlconf

At the same time, I have also received reviews claiming my main theorems are wrong without further explanations 🤷♂️🤷♂️


@sam_power_825

From talking to a former researcher who worked on ADAM, I gathered that its continuous-time ODE system was difficult to work with compared to other, simpler algorithms. Even in the convex case, it was non-trivial to construct a Lyapunov function.


@sp_monte_carlo

Currently doing a reading group on this set of notes and just finished Hörmander’s. Super grateful that Sinho suggested we start from Eldredge first; things make a lot more sense here.


@thesasho

@radcummings

@markmbun

Every time I use Jensen, I check the direction of E(X^2) >= (EX)^2 and confirm that the difference is the variance.
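The sanity check above, E(X^2) >= (EX)^2 with the gap equal to Var(X), can be confirmed exactly on a small example. The data values are arbitrary. Exact fractions are used so that floating-point noise does not blur the equality.

```python
from fractions import Fraction

def moments(values):
    """Return (E[X^2], (E[X])^2) for X uniform on `values`, using exact fractions."""
    xs = [Fraction(v) for v in values]
    n = len(xs)
    mean = sum(xs) / n
    mean_sq = sum(x * x for x in xs) / n
    return mean_sq, mean * mean

def population_variance(values):
    """Var(X) = E[(X - EX)^2] for X uniform on `values`."""
    xs = [Fraction(v) for v in values]
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / len(xs)

data = [1, 2, 3, 4]
second_moment, squared_mean = moments(data)
print(second_moment, squared_mean)  # 15/2 25/4
```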


Many great mathematical advances are also just expert usage of integration by parts and Cauchy–Schwarz, tools that seem familiar and unimportant to most people.

Bakry, Gentil, and Ledoux certainly agree, judging by their book's dedication.


@mraginsky

@AlexGDimakis

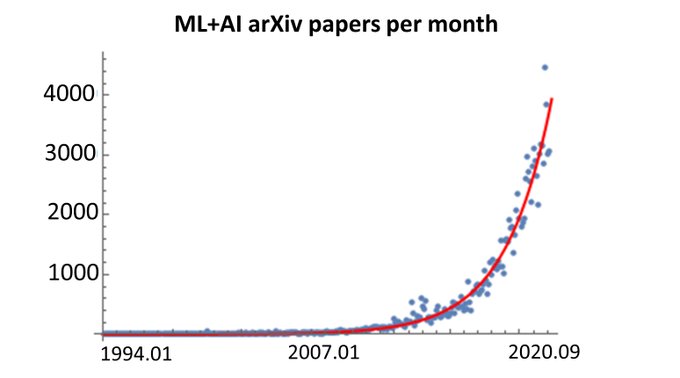

All these papers on scaling laws are hinting that large networks are probably converging to a limit. And if we learned anything from statistical physics, it’s that we should try to describe the limit instead of banging our heads against the wall with finite-size problems.


Congratulations Jeff! Very well deserved and I’m so happy for you!

I'm pleased to announce that I've accepted a faculty pos'n in the dept. of statistics and actuarial science at my alma mater, @WaterlooMath, and a faculty affiliate pos'n @VectorInst, starting summer 2023! Meanwhile, I will be a postdoc @DSI_UChicago, starting September!


@roydanroy

@thegautamkamath

I remember Kevin tried to sell nonstandard analysis to first year PhD students with “you get to use cool words like ‘ultrafilters’ and ‘hyperfinite’“ and yeah it didn’t work


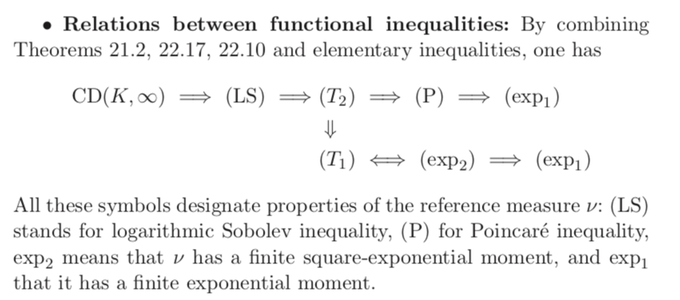

@ccanonne_

I will be forever amazed at this sequence of implications between inequalities, beautifully summarized by Villani in "Optimal Transport, Old and New".


This perfectly describes my experience in academia; I certainly wasn’t thinking of OpenAI when I was reading it.


@sp_monte_carlo

If you can write the two laws as time marginals of two diffusion processes with the same diffusion coefficient, then the KL divergence between them is upper bounded by the KL divergence between the path measures (by the data processing inequality), which admits a closed form via Girsanov's theorem. See e.g.
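A sketch of the bound, under stated assumptions. Both processes start from the same initial law and share a constant scalar diffusion coefficient σ. They are also assumed regular enough (e.g. under a Novikov-type condition) for Girsanov's theorem to apply. Take dX_t = b_1(X_t) dt + σ dB_t and dY_t = b_2(Y_t) dt + σ dB_t, with path laws P and Q on [0, T]:

```latex
\mathrm{KL}\big(\mathrm{Law}(X_T)\,\|\,\mathrm{Law}(Y_T)\big)
\;\le\; \mathrm{KL}\big(\mathbb{P}_{[0,T]}\,\|\,\mathbb{Q}_{[0,T]}\big)
\;=\; \frac{1}{2\sigma^2}\,\mathbb{E}_{\mathbb{P}}\!\int_0^T \big|b_1(X_t) - b_2(X_t)\big|^2 \,\mathrm{d}t .
```

The inequality is the data processing inequality applied to the map that sends a path to its value at time T. The equality takes the expectation under P of the Girsanov log-density, in which the stochastic-integral term has mean zero.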


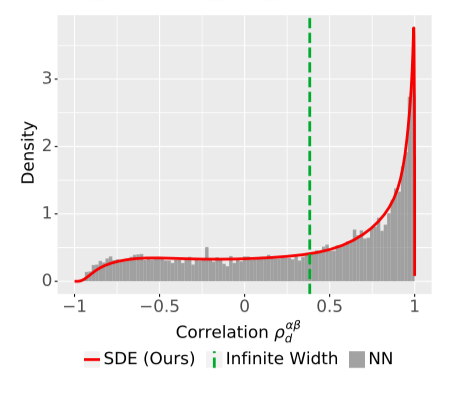

We believe this result can be extended to non-Gaussian weights. See an earlier universality result for MLPs by @BorisHanin and @MihaiCNica


@cjmaddison

@roydanroy

@thegautamkamath

This reminds me of Michel Talagrand writing a new book to make his old book "obsolete"


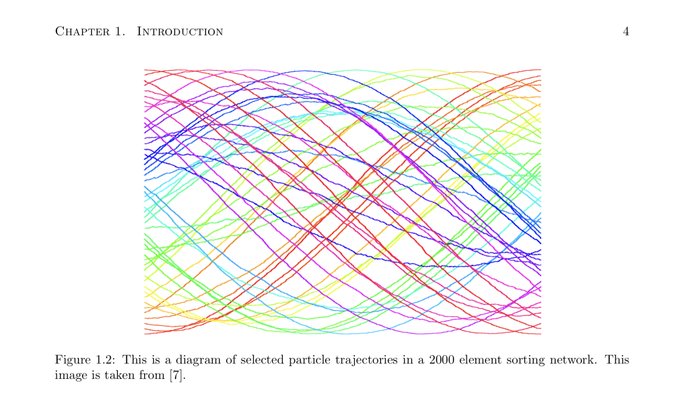

@sam_power_825

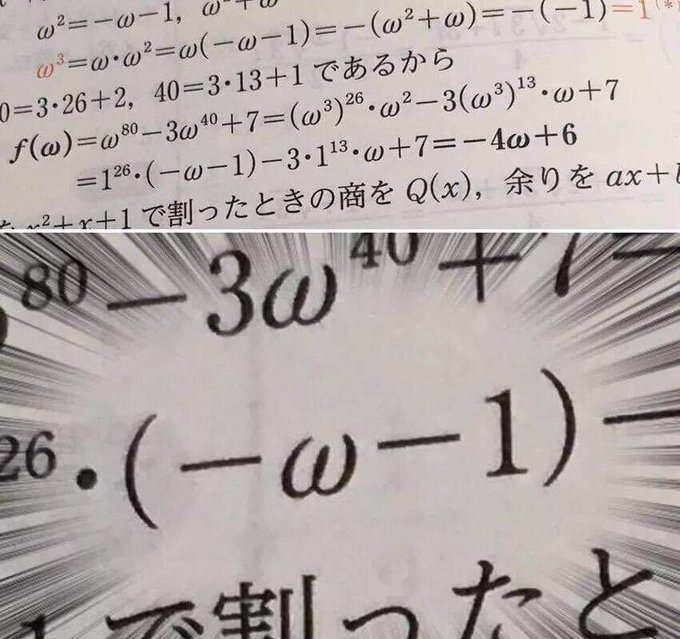

Random sorting networks also have a scaling limit in which each trajectory becomes sinusoidal.

I don’t think Duncan is on Twitter, but he does really good work in probability theory; this is from his thesis.


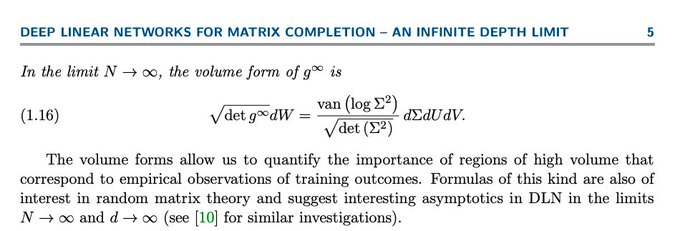

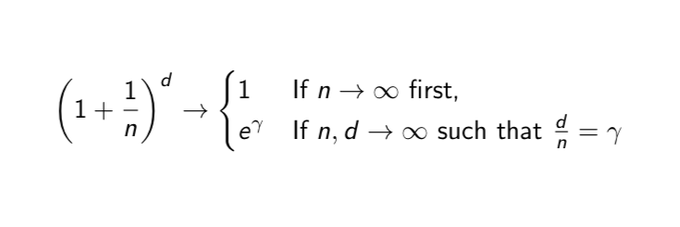

As a result, the infinite depth-and-width limit is not Gaussian. This work extends results for fully connected MLPs, where the analysis is much simpler. See @MihaiCNica’s YouTube video for an introduction.
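As a rough illustration of the simpler MLP case, the Monte Carlo sketch below measures log(|x_out|² / |x_in|²) for a ReLU MLP with He-initialized Gaussian weights (variance 2/n). When depth grows together with width, this quantity does not concentrate: its spread is set by the depth-to-width ratio. The width, depths, and trial counts are arbitrary illustrative choices, and this is not the ResNet setting of the paper.

```python
import numpy as np

def log_sq_norm_ratio(width, depth, rng):
    """log(|x_depth|^2 / |x_0|^2) for x_{l+1} = relu(W_l x_l), W_l entries iid N(0, 2/width)."""
    x = rng.standard_normal(width)
    x /= np.linalg.norm(x)          # start from a unit vector
    log_ratio = 0.0
    for _ in range(depth):
        W = rng.standard_normal((width, width)) * np.sqrt(2.0 / width)
        x = np.maximum(W @ x, 0.0)  # ReLU layer
        sq = float(x @ x)
        log_ratio += np.log(sq)     # x had unit norm before this layer
        x /= np.sqrt(sq)            # renormalize to avoid overflow/underflow
    return log_ratio

rng = np.random.default_rng(0)
width, trials = 64, 200
variances = {}
for depth in (4, 16, 64):
    samples = np.array([log_sq_norm_ratio(width, depth, rng) for _ in range(trials)])
    variances[depth] = samples.var()
    print(f"depth/width = {depth / width:.4g}: mean {samples.mean():+.2f}, variance {variances[depth]:.2f}")
```

In this setup each layer multiplies |x|² by an independent factor with mean 1 and variance 5/n. The variance of the log therefore grows roughly like 5 · depth/width, which is about 0.3, 1.25, and 5 for the three depths. The sample estimates should land near these values but will not match them exactly.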


@sp_monte_carlo

On a related note, I never understood the point of drawing diagrams for neural network architectures. The transformer diagram was particularly confusing for me.

Just write it out in matrix notation. It’s like two lines and much clearer.
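To illustrate the point, here is one common way to write a single-head transformer block in matrix form, with X the (tokens × d) matrix of embeddings:

- A = softmax(X W_Q (X W_K)^T / sqrt(d)) X W_V
- H = X + A
- output = H + ReLU(H W_1) W_2

This simplified version drops LayerNorm, multiple heads, and masking. The weight names and sizes are illustrative assumptions rather than any particular paper's notation.

```python
import numpy as np

def softmax(S, axis=-1):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    S = S - S.max(axis=axis, keepdims=True)
    E = np.exp(S)
    return E / E.sum(axis=axis, keepdims=True)

def transformer_block(X, Wq, Wk, Wv, W1, W2):
    """Simplified single-head block (no LayerNorm, no masking): two lines of matrix algebra."""
    d = X.shape[1]
    H = X + softmax((X @ Wq) @ (X @ Wk).T / np.sqrt(d)) @ (X @ Wv)  # attention + residual
    return H + np.maximum(H @ W1, 0.0) @ W2                          # MLP + residual

rng = np.random.default_rng(0)
n_tokens, d, d_ff = 5, 8, 32
X = rng.standard_normal((n_tokens, d))
Wq, Wk, Wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
W1 = rng.standard_normal((d, d_ff)) / np.sqrt(d)
W2 = rng.standard_normal((d_ff, d)) / np.sqrt(d_ff)
Y = transformer_block(X, Wq, Wk, Wv, W1, W2)
print(Y.shape)  # (5, 8)
```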


The first paper here is easily the most underrated paper of the year. It’s the first algorithm-independent sampling lower bound, and it gets an unexpected log log dependence on the condition number.


@deepcohen

There is also a strange cognitive dissonance where adding experiments to a theory paper somehow hurts the original paper. Surely some experiments are better than none, if no strong claims are being made with them?


Infinite depth transformers!

It's only @ChuningLi's first paper, and I wish my first paper was this good!

How do you scale Transformers to infinite depth while ensuring numerical stability? In fact, LayerNorm is not enough.

But *shaping* the attention mechanism works!

w/ @ChuningLi @mufan_li @bobby_he @THofmann2017 @cjmaddison @roydanroy


@sp_monte_carlo

It's uniform on a fractal set, but I'm not sure there is much you can say based on this alone.


@sam_power_825

@roydanroy

@junpenglao

@colindcarroll

Suppose your gradient is not Lipschitz, for example f(x) = x^4; then even gradient descent with a fixed step size can diverge to infinity.

Also, even with ergodicity, the stationary distribution may not be nice at all. Since the law of SGD after finitely many steps is a finite collection of point masses, the limit is likely a fractal-like set.
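Both points can be seen in toy examples. Everything below (the step sizes, the starting points, and the two-point dataset) is a hypothetical choice for illustration.

- For f(x) = x^4, the gradient step is x ← x - 4ηx³. Once |x| is large, the cubic term dominates and the iterates blow up.
- SGD on the two losses (x - a)²/2, with a ∈ {0, 1} and step size 2/3, reduces to x ← x/3 + 2a/3. Started at 0 with a sampled uniformly, the iterate after k steps is uniform over 2^k points whose base-3 digits are all 0 or 2. As k grows, this law converges to the uniform (Cantor) measure on the Cantor set.

```python
from fractions import Fraction

def gd_on_quartic(x0, step, max_steps=100, blowup=1e6):
    """Gradient descent on f(x) = x**4; returns the iterates, stopping early once |x| > blowup."""
    xs = [x0]
    x = x0
    for _ in range(max_steps):
        x = x - step * 4.0 * x * x * x   # gradient of x**4 is 4 x**3
        xs.append(x)
        if abs(x) > blowup:
            break
    return xs

def sgd_support(num_steps, step=Fraction(2, 3), data=(0, 1), x0=Fraction(0)):
    """All points SGD can reach after num_steps on the losses (x - a)^2 / 2, a in `data`."""
    points = {x0}
    for _ in range(num_steps):
        points = {x - step * (x - a) for x in points for a in data}
    return points

print(gd_on_quartic(1.0, step=0.1)[-1])   # slowly converges toward 0
print(gd_on_quartic(3.0, step=0.1))       # blows up within a few steps
print(len(sgd_support(5)))                # 32 distinct point masses
```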


@deepcohen

This is actually a positive sign when training dynamics are consistent. We find this phenomenon implies that hyperparameters transfer; see e.g. Figures 3e and 4 here


Technically the theory is not wrong: neural nets are terrible uniform learners. However, uniform learning is the wrong goalpost.

We do need a better theory, and perhaps fewer people driving "disbelief" prematurely.


@miniapeur

Partial differential equations. So much information and so many consequences are contained in a single line of math; it's really hard to appreciate with courses alone.


Never understood how anyone expects good work without being personally invested in the work, and as a result taking rejections personally.

Dear PhD students with a submission to @NeurIPSConf, soon ~20% of you will receive a desk reject. Here some suggestions to deal with it in a healthy way.

First, this is not personal: a paper written by you was rejected, not you. Keep your self-worth unlinked from your work. 1/5


@sp_monte_carlo

Gradient descent with epsilon noise can escape saddle points exponentially fast
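A toy illustration, under hypothetical choices: the saddle f(x, y) = x² - y², step size η = 0.1, and Gaussian noise of scale ε added at each step. Plain gradient descent started exactly at the saddle never moves. With noise, the unstable coordinate is multiplied by 1 + 2η each step, so it grows geometrically. The escape time therefore scales like log(1/ε).

```python
import numpy as np

def steps_to_escape(eps, step=0.1, radius=1.0, max_steps=10_000, seed=0):
    """Noisy GD on f(x, y) = x**2 - y**2, started at the saddle (0, 0).

    Returns the first step at which |(x, y)| > radius, or None if it never escapes.
    """
    rng = np.random.default_rng(seed)
    z = np.zeros(2)
    for k in range(1, max_steps + 1):
        grad = np.array([2.0 * z[0], -2.0 * z[1]])
        z = z - step * grad + eps * rng.standard_normal(2)
        if np.linalg.norm(z) > radius:
            return k
    return None

print(steps_to_escape(eps=0.0))   # None: exact GD stays at the saddle
for eps in (1e-3, 1e-6, 1e-9):
    print(eps, steps_to_escape(eps))
```

Since the unstable coordinate grows by a factor of 1.2 per step here, each thousand-fold decrease in ε should add roughly ln(1000)/ln(1.2) ≈ 38 steps. Exact counts depend on the noise draw.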


@OmarRivasplata

In Markov diffusions, it’s more natural to interpret Q as the target or base measure. If P_t is the law of the diffusion at time t and Q is the stationary measure, then KL(P_t|Q) converges to zero, exponentially fast, under a log-Sobolev inequality.
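A concrete check uses the Ornstein–Uhlenbeck process dX_t = -X_t dt + sqrt(2) dB_t. Its stationary law Q = N(0, 1) satisfies a log-Sobolev inequality with constant 1. Starting from P_0 = N(m, s²), the law stays Gaussian: P_t = N(m e^{-t}, s² e^{-2t} + 1 - e^{-2t}). KL(P_t|Q) therefore has a closed form, and we can check it against the LSI rate KL(P_t|Q) <= e^{-2t} KL(P_0|Q). The initial mean and variance below are arbitrary choices.

```python
import math

def kl_gaussian_to_standard(mean, var):
    """KL(N(mean, var) || N(0, 1)) in closed form."""
    return 0.5 * (var + mean * mean - 1.0 - math.log(var))

def ou_marginal(mean0, var0, t):
    """Mean and variance at time t of dX = -X dt + sqrt(2) dB with X_0 ~ N(mean0, var0)."""
    decay = math.exp(-t)
    return mean0 * decay, var0 * decay ** 2 + 1.0 - decay ** 2

mean0, var0 = 2.0, 0.25
kl0 = kl_gaussian_to_standard(mean0, var0)
times = (0.0, 0.5, 1.0, 2.0, 4.0)
for t in times:
    kl_t = kl_gaussian_to_standard(*ou_marginal(mean0, var0, t))
    print(f"t={t}: KL={kl_t:.5f}  LSI bound={math.exp(-2 * t) * kl0:.5f}")
```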


@NAChristakis

@sinanaral

@TechCrunch

> a glorified curve fitter: overfits data

> media: omg this AI is outsmarting humans


@roydanroy

Last time I was honest about an issue at work during an AMA with the CEO, I pissed off some senior management and got a talk from my boss. So I think the comments here are extremely biased. Anonymous poll?


@roydanroy

@togelius

Since the proof of the Poincaré conjecture doesn't fit in 6-8 pages, Perelman and later authors should have just written 100 papers instead. Duh.


So we titled the paper "the future is log-Gaussian:..." just for the memes


@roydanroy

@pfau

@gaurav_ven

@jamesgiammona

I'd say that in this case it is still strange, because before taking any limits, the neurons form a Gaussian process conditional on the previous layer. For this Gaussian structure to go away, we would need the kernel to be non-constant in the large sample limit.


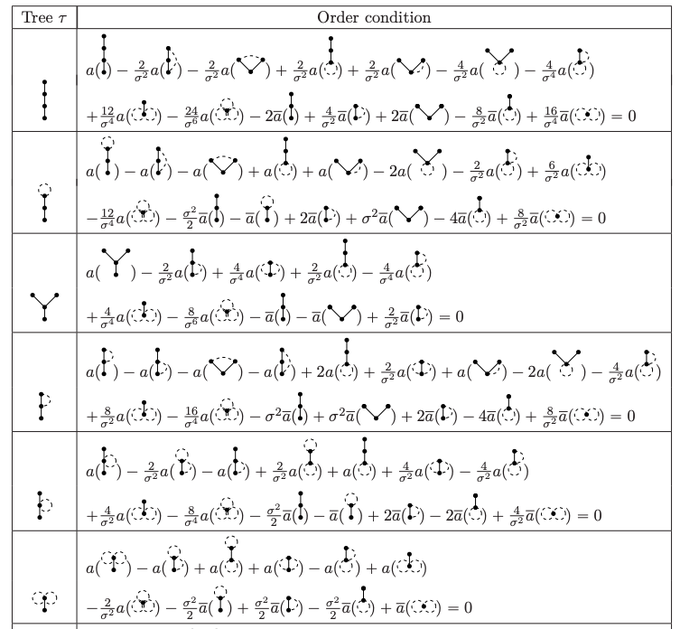

@PreetumNakkiran

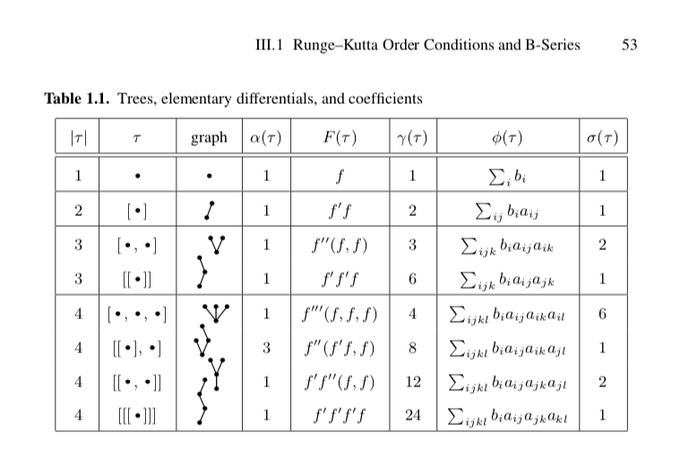

You can write all your series expansions in terms of trees so nobody can read them


@IsomorphicPhi

Brezis is really nice. Evans is more like a reference text, not a good place to learn from imo.

If you really want to get interested in PDEs though, an application is probably important. For me that was the beautiful connection to SDEs


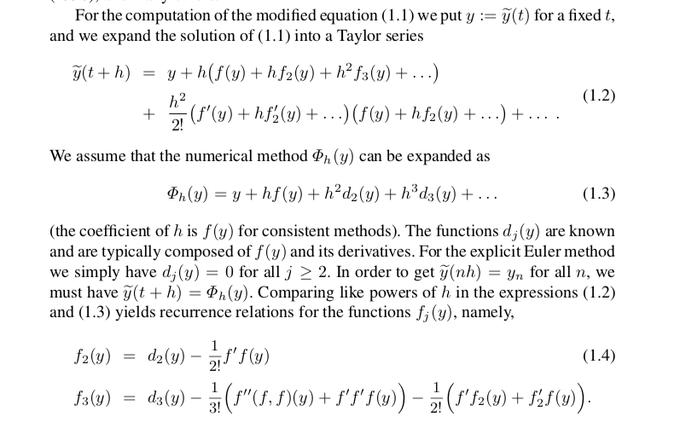

@sp_monte_carlo

I don't know why it's not more celebrated, but this simple construction of the modified equation (aka backward error analysis) in Hairer, Lubich, and Wanner always blew my mind.
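The standard first example is explicit Euler with step h on y' = f(y). Its first-order modified equation is ỹ' = f(ỹ) - (h/2) f'(ỹ) f(ỹ). For the linear test problem y' = -y, this gives ỹ' = -(1 + h/2) ỹ. The sketch below checks that the Euler iterates track the modified flow much more closely than the original one. The step size and time horizon are arbitrary choices.

```python
import math

def euler_linear(h, num_steps, y0=1.0):
    """Explicit Euler for y' = -y, i.e. y_{n+1} = (1 - h) y_n."""
    y = y0
    for _ in range(num_steps):
        y = y + h * (-y)
    return y

h, num_steps = 0.1, 10
t = h * num_steps
euler = euler_linear(h, num_steps)
exact_original = math.exp(-t)                  # solution of y' = -y
exact_modified = math.exp(-(1.0 + h / 2) * t)  # solution of the modified equation
err_original = abs(euler - exact_original)
err_modified = abs(euler - exact_modified)
print(err_original, err_modified)
```

The remaining gap is O(h²) per unit time, because the next correction term in the modified equation is of order h².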


@hardmaru

@NeurIPSConf

Wait... am I going to have to use space on the ethical/societal implications of my generalization bound?


@shortstein

They are related by the OU generator: the stationary Poisson equation is exactly the second-order PDE version of Stein’s equation, and the LSI also corresponds to the same generator.
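Here is the one-dimensional standard Gaussian case γ = N(0, 1) written out. The usual normalizations are assumed, and f = g' links Stein's equation to the Poisson equation for the OU generator:

```latex
\begin{aligned}
&\text{OU generator:} && \mathcal{L}g = g'' - x\,g', \qquad \int g\,\mathcal{L}h\,\mathrm{d}\gamma = -\int g'\,h'\,\mathrm{d}\gamma,\\
&\text{Stein's equation:} && f' - x f = h - \mathbb{E}_\gamma h \iff \mathcal{L}g = h - \mathbb{E}_\gamma h \ \text{ with } f = g',\\
&\text{LSI:} && \mathrm{Ent}_\gamma(u^2) \le 2\int |u'|^2\,\mathrm{d}\gamma = -2\int u\,\mathcal{L}u\,\mathrm{d}\gamma .
\end{aligned}
```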


@cjmaddison

@sam_power_825

Some of the craziest notation I have ever seen came out of an extension of Butcher series: these are Runge-Kutta order conditions for SDE weak error.


@sam_power_825

Why not both? Sometimes it’s nice to just write d\mu as shorthand, but for a kernel or a marginal of a joint, it’s clearer to write K(x, dy).


A really nice result!

Q: What happens to the neural covariance when both Width and Depth are taken to infinity?

A: it **depends** how you take that limit.

However, for ResNets, we show (joint work with @TheGregYang) that you always get the same covariance structure.

Link:


@ccanonne_

Yes, these are from an older version of Dmitry Panchenko's excellent lecture notes on probability theory. Here's the most updated version posted on his website, Strassen's Theorem is in Section 4.3


@roydanroy

For a lot of exercises like compound lifts, if you can’t breathe fully, it’s hard to get the full benefit of the exercise. Having to breathe through a mask between squat/deadlift sets also feels terrible :/

I’d rather not go if I have to wear a mask


@fentpot

@miniapeur

That’s the beauty of it. Even without an analytical solution, the PDE characterizes a ton of properties of the solution. Usefulness depends on whether a PDE naturally arises in your work.


@wgrathwohl

Imagine making a career out of making incremental changes to other people's methods, adding a ridiculous amount of trial and error, just to show it to a room full of other nerds like you after you beat some SOTA score with pure luck.

Yes I'm talking about speed running.


@Karthikvaz

@sp_monte_carlo

It’s helpful to think of the space of probability distributions as a manifold equipped with the Wasserstein metric, with KL divergence as the potential.

Then LSI is the PL inequality, which is equivalent to exponential decay of KL. Strong convexity is equivalent to exponential decay of W2.
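In formulas, take a target π ∝ e^{-V} on ℝ^d and the Langevin diffusion dX_t = -∇V(X_t) dt + sqrt(2) dB_t. Let μ_t be its law, and ν_t another solution started from ν_0. The Fokker–Planck equation is then the Wasserstein gradient flow of KL(·‖π). The forward implications read:

```latex
\begin{aligned}
&\frac{\mathrm{d}}{\mathrm{d}t}\,\mathrm{KL}(\mu_t\,\|\,\pi) = -\,I(\mu_t\,\|\,\pi),
\qquad I(\mu\,\|\,\pi) = \int \Big|\nabla \log \tfrac{\mathrm{d}\mu}{\mathrm{d}\pi}\Big|^2 \mathrm{d}\mu
\quad (\text{squared Wasserstein gradient norm of KL}),\\
&\text{LSI / PL:}\quad \mathrm{KL}(\mu\,\|\,\pi) \le \frac{1}{2\alpha}\, I(\mu\,\|\,\pi)
\;\Longrightarrow\; \mathrm{KL}(\mu_t\,\|\,\pi) \le e^{-2\alpha t}\, \mathrm{KL}(\mu_0\,\|\,\pi),\\
&\nabla^2 V \succeq \alpha\,\mathrm{Id} \;\Longrightarrow\; W_2(\mu_t, \nu_t) \le e^{-\alpha t}\, W_2(\mu_0, \nu_0).
\end{aligned}
```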


@moskitos_bite

I really enjoyed Villani’s Topics in Optimal Transportation as an intro. Many proofs have a short special case, which is sufficient for a first read.


@PreetumNakkiran

I actually think it’s because OT is the most natural language for studying probability and stochastic processes. The Wasserstein metric naturally induces a Riemannian manifold structure on the space of probability distributions, and this fact is incredibly underrated.


@shortstein

Isn't it just the total variation (of general signed measures) of the difference for two probability measures?
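For distributions on a finite set, the two common conventions can be compared directly. The total variation norm of the signed measure P - Q is Σ|p_i - q_i|, while sup_A |P(A) - Q(A)| is half of that. The distributions below are arbitrary. The brute-force supremum over all events is only feasible for tiny supports.

```python
from fractions import Fraction
from itertools import combinations

def tv_norm_of_difference(p, q):
    """Total variation norm |P - Q|(Omega) = sum_i |p_i - q_i| of the signed measure P - Q."""
    return sum(abs(a - b) for a, b in zip(p, q))

def sup_over_events(p, q):
    """max over all subsets A of |P(A) - Q(A)|, by brute force."""
    n = len(p)
    best = Fraction(0)
    for r in range(n + 1):
        for A in combinations(range(n), r):
            best = max(best, abs(sum(p[i] - q[i] for i in A)))
    return best

p = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
q = [Fraction(1, 5), Fraction(3, 10), Fraction(1, 2)]
print(tv_norm_of_difference(p, q), sup_over_events(p, q))  # 3/5 3/10
```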
