Brian Scanlan

@brian_scanlan

Followers

3K

Following

23K

Media

309

Statuses

5K

I work at Intercom.

Swords, Dublin, Ireland

Joined May 2009

Tl;dr on going faster at a startup:
- fast beats right
- teams decay to slowness, fight it
- things can go faster than you think
- you can’t be fast w bad judgement
- don’t add unnecessary artifacts
- less meetings
- more accountability

1

2

33

Startups: We've partnered with our friends at @stripe, @elevenlabsio, @attio, @linear, @meetgranola, @asana, @Lovable and 25 other great companies to build a monster bundle of every product a startup needs for FREE. → https://t.co/0OlGN2ggOG ←

15

24

197

I did this instead:

intercom.com

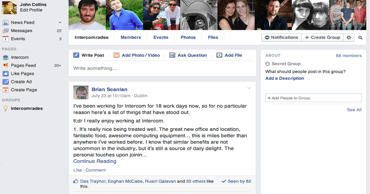

Engineer Brian Scanlan shares his experience of his first 18 days working at Intercom, makers of the platform that helps web businesses talk to customers.

My "favorite" one of these was when someone from Google joined GitHub and wrote a post a couple of weeks in about how GitHub used Google Docs wrong (we used GitHub for everything... obviously).

1

0

21

I've been experimenting with a way of editing @intercom Fin procedures in a code editor. You can use all your familiar tools, including AI! I want to put it in the hands of people who enjoy experimenting. If that’s you then please get in touch and tell me what you'd build.

0

1

9

Went to my first ever 500 level talk, about IAM automated reasoning. An excellent talk - the speaker reframed it as “how to read a paper” and talked through a paper on the topic and two related papers. Excellent way to present dense content, often intimidating to non-academics.

1

0

1

May as well start posting some Re:Invent content... The Wizard of Oz at the Sphere reminded me a lot of Muppetvision 3D at Disney. A good show, but only three times a day - I can see why they're losing a lot of money.

1

0

2

This week at @intercom we reached a pretty impressive AI milestone in team web, generating an entirely pixel perfect Figma design from a URL copied into a single prompt, using advancements within our design system, codebase, @cursor_ai rules, and @figma MCP - let's dive in 🧵

10

3

5

https://t.co/IqCmFqNClX It's an ok talk, just 20 minutes long.

0

0

5

I spoke at @incident_io's conference recently about "writing up your most embarrassing failures". Turns out it's on the Internet...

2

0

12

Cheeky Pints but mandatory minimum of 6 pints, no edits.

11

6

162

Happy to share that today I'm starting as senior principal at Intercom!

28

8

334

Great to see this thread started by @HarryStebbings with Intercom getting incredible feedback from the people that matter... our users!

1

1

3