Ethan Caballero is busy

@ethanCaballero

Followers: 8,500

Following: 2,023

Media: 368

Statuses: 3,668

ML PhD student @Mila_Quebec ; previously @GoogleDeepMind

Joined January 2015



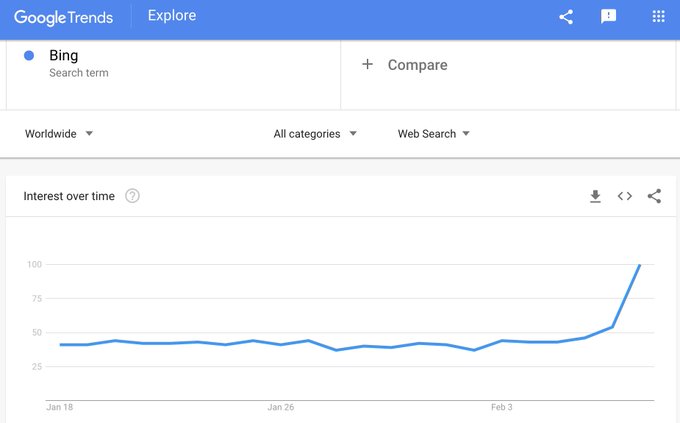

"This new Bing will make Google come out and dance, and I want people to know that we made them dance." -

@SatyaNadella

162

664

6K

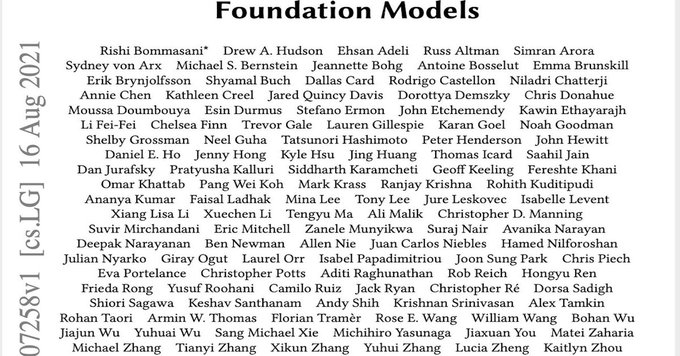

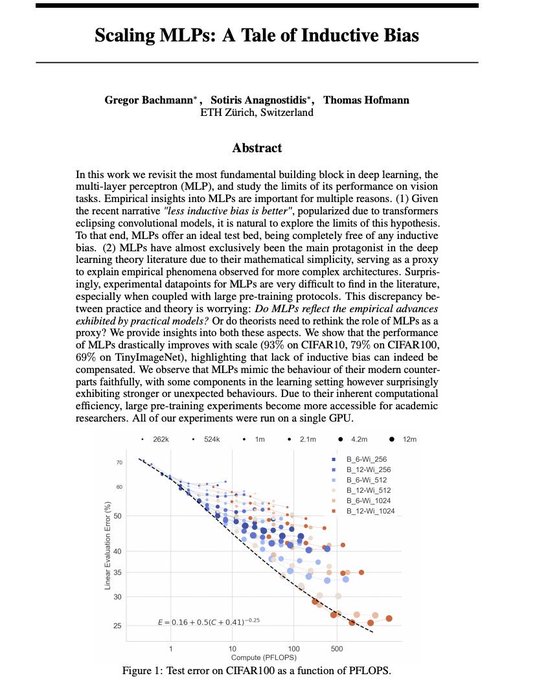

Stanford's ~entire AI Department has just released a 200 page 100 author Neural Scaling Laws Manifesto.

They're pivoting to positioning themselves as #1 at academic ML Scaling (e.g. GPT-4) research.

"On the Opportunities and Risks of Foundation Models"

17

394

2K

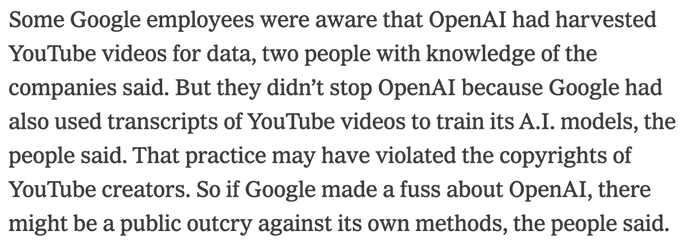

Whisper is how OpenAI is getting the many trillions of English text tokens that are needed to train a compute-optimal (Chinchilla scaling law) GPT-4.

18

75

714
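
As a rough illustration of the arithmetic behind the tweet above, here is a minimal sketch. It assumes the commonly cited Chinchilla rule of thumb of roughly 20 training tokens per parameter. That ratio, the function name `compute_optimal_tokens`, and the example model sizes are assumptions for illustration, not figures from the tweet.

```python
# Rule-of-thumb ratio of training tokens to parameters.
# This value is an assumption (a commonly cited approximation), not from the tweet.
TOKENS_PER_PARAM = 20


def compute_optimal_tokens(num_params: int, tokens_per_param: int = TOKENS_PER_PARAM) -> int:
    """Return the approximate compute-optimal token count for a model size."""
    return num_params * tokens_per_param


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to show the scale involved.
    for params in (70 * 10**9, 10**12):
        tokens = compute_optimal_tokens(params)
        print(f"{params:.2e} params -> {tokens:.2e} tokens")
```

Under this assumed ratio, a model with a trillion parameters would call for on the order of tens of trillions of tokens, which is the scale the tweet is gesturing at.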

.

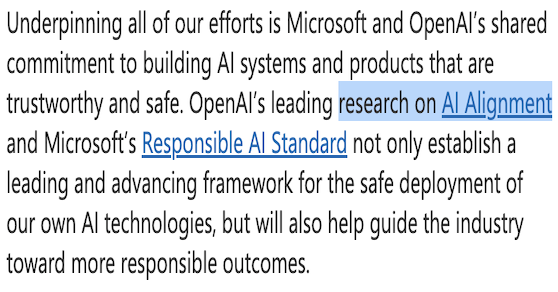

@SatyaNadella

has just revealed Microsoft's plan to keep AI from escaping human control and exterminating all of humanity:

23

32

189

We're thrilled to announce the launch of @LargestAI!

We have $80Billion in funding from the richest nations, individuals, & companies.

We've spent 50% building a $40B Supercomputer on which we're already training a 10^15 parameter Model on all of YouTube.

Our funders are (1/N)

18

12

181

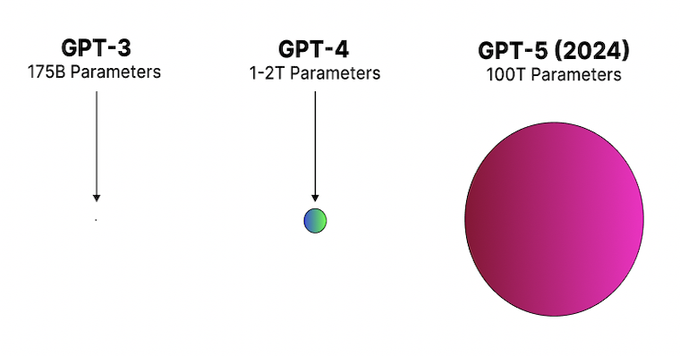

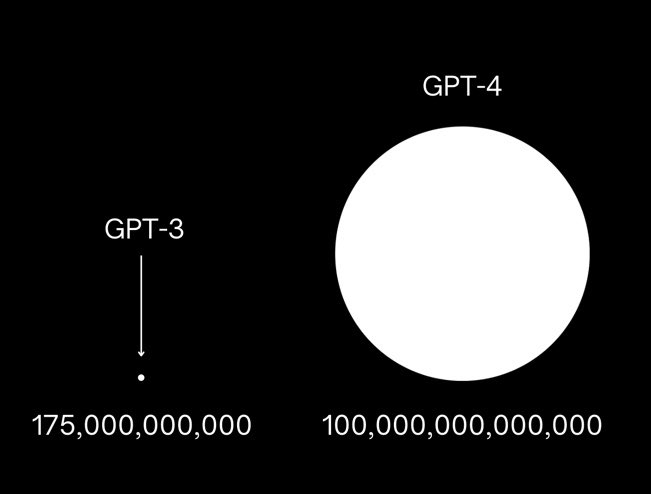

This is fake news.

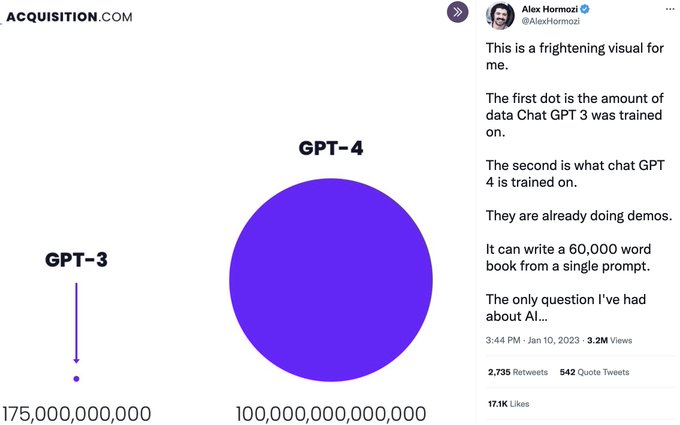

GPT-4 is actually 1,000,000 Trillion Parameters.

7

7

108

Description2Code Dataset gets its first citation and usage (via this #AlphaCode paper) 6 years after its release 😂🤣😍👍:

@ilyasut

@OpenAI

Introducing #AlphaCode: a system that can compete at average human level in competitive coding competitions like @codeforces. An exciting leap in AI problem-solving capabilities, combining many advances in machine learning!

Read more: 1/

176

2K

8K

7

5

110

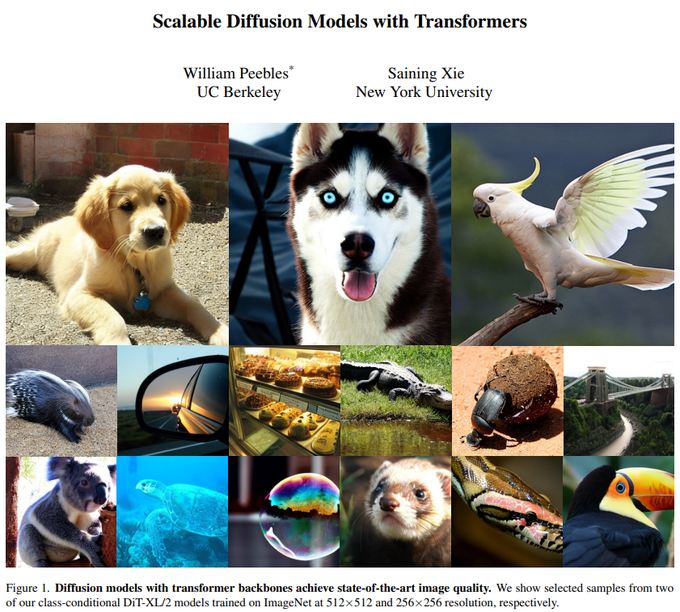

@srush_nlp .@hojonathanho explains why in this talk:

For perceptual data (i.e. non-text), people have shown that over half of the bits of entropy of the data distribution correspond to imperceptible (to human perception) bits that don’t have any economic value.

2

3

88

Due to the implosion of FTX, this $1Billion prize is cancelled and we are now $650Million in debt:

4

6

88

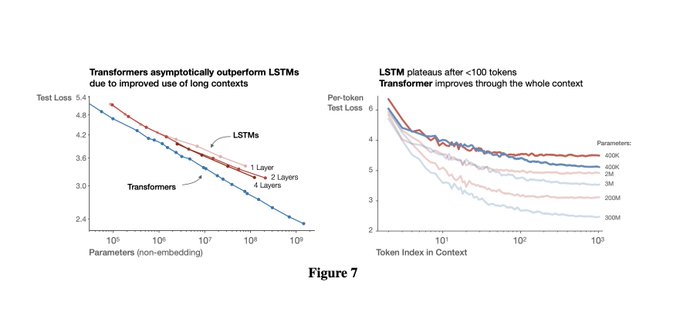

@jacobmbuckman

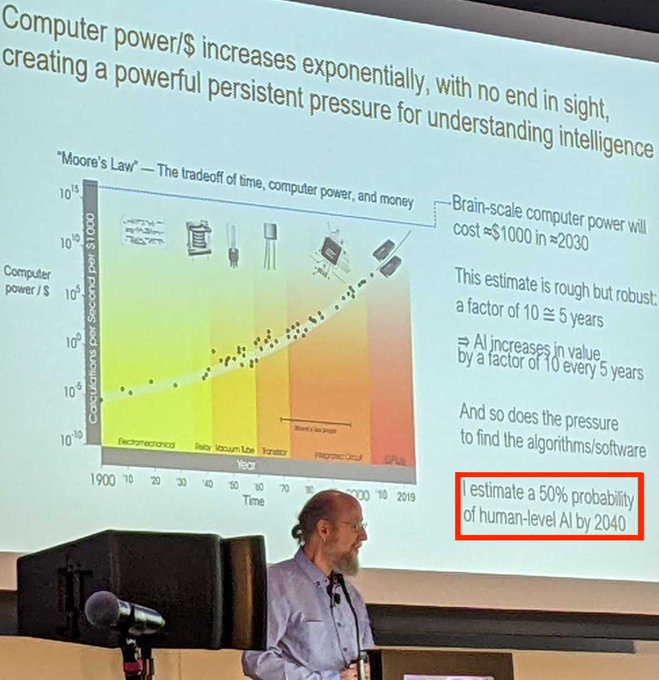

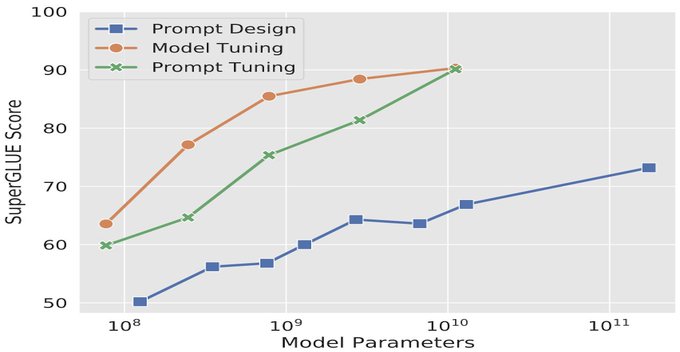

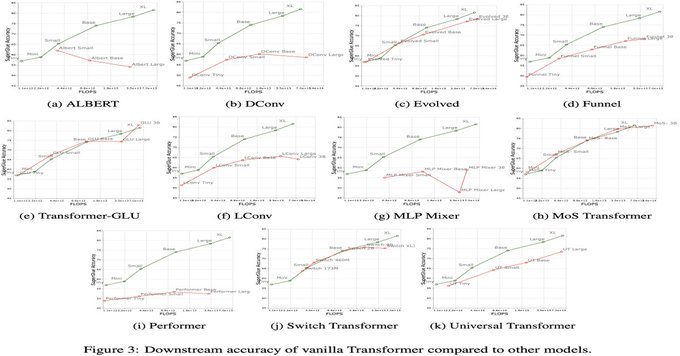

Nah, there’s a famous plot in Figure 7 (left) of the “Scaling Laws for Neural Language Models” paper showing that the LSTM has a 50X worse scaling-law multiplicative constant than the Transformer:

4

4

81

LLMs have shown us that Human-Level AI is easier than Cat-Level AI.

7

3

80

Is this going to bankrupt all the organizations training the largest foundation models on the largest datasets?:

10

6

76

@RichardSocher

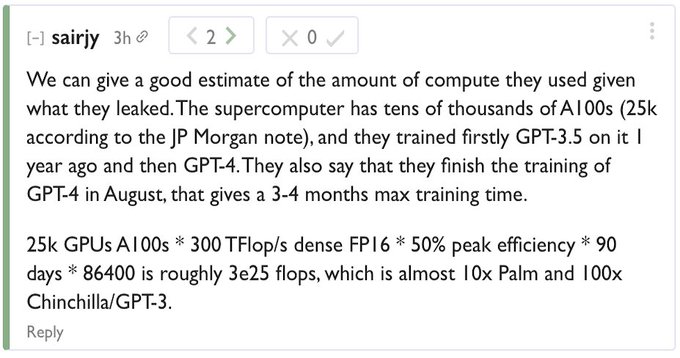

3 months of training on a 25,000-A100 supercomputer (the amount of compute for training GPT-4) is pretty expensive.

17

1

74
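
To put the figures in the tweet above into GPU-hours, here is a minimal sketch. It assumes "3 months" means about 90 days of continuous use. The hourly rate is a purely hypothetical placeholder, not a real price, and the function names are illustrative.

```python
def gpu_hours(num_gpus: int, days: int) -> int:
    """Total GPU-hours for num_gpus running continuously for the given days."""
    return num_gpus * days * 24


def training_cost_usd(num_gpus: int, days: int, usd_per_gpu_hour: float) -> float:
    """Rough cost estimate: GPU-hours times an assumed hourly rate."""
    return gpu_hours(num_gpus, days) * usd_per_gpu_hour


if __name__ == "__main__":
    NUM_GPUS = 25_000          # figure from the tweet
    DAYS = 90                  # assumption: "3 months" taken as 90 days
    HYPOTHETICAL_RATE = 2.0    # assumption: placeholder USD per GPU-hour

    print(f"GPU-hours: {gpu_hours(NUM_GPUS, DAYS):,}")
    print(f"Cost at the placeholder rate: ${training_cost_usd(NUM_GPUS, DAYS, HYPOTHETICAL_RATE):,.0f}")
```

The GPU-hour total follows directly from the tweet's numbers under the 90-day assumption. The dollar figure depends entirely on the rate supplied.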

@DavidSKrueger

My mom, dad, and grandma get how to use ChatGPT but don't get how to use GPT-3.

0

0

68

NYU's AI Department has just released a Neural Scaling Laws Seminar.

They're pivoting to positioning themselves as #1 at academic ML Scaling (e.g. GPT-4) education. 🙂

"PhD Seminar: Scaling Laws, the Bitter Lesson, and AI Research after GPT-3":

1

8

65