Neil Houlsby

@neilhoulsby

Followers: 4,229 · Following: 321 · Media: 42 · Statuses: 468

Professional AI researcher; amateur athlete. Senior Staff RS at Google DeepMind, Zürich. Attempts triathlons.

Zurich, Switzerland

Joined April 2012

Don't wanna be here?

Send us removal request.

Explore trending content on Musk Viewer

Diddy

• 537412 Tweets

الهلال

• 532436 Tweets

Alito

• 385282 Tweets

Flamengo

• 166722 Tweets

Peter

• 164474 Tweets

Corinthians

• 152047 Tweets

Cássio

• 112408 Tweets

Barron

• 91766 Tweets

رونالدو

• 59186 Tweets

デザフェス

• 51923 Tweets

#Kyrgyzstan

• 36751 Tweets

Gabi

• 34822 Tweets

#Smackdown

• 29867 Tweets

Bianca

• 22594 Tweets

كاس الملك

• 17707 Tweets

Cobasi

• 15556 Tweets

ムビナナ

• 14770 Tweets

the boy is mine

• 13618 Tweets

Dabney Coleman

• 13100 Tweets

Last Seen Profiles

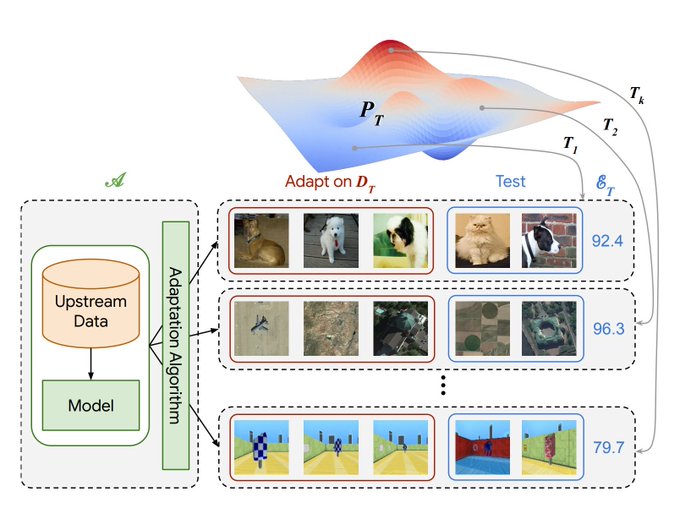

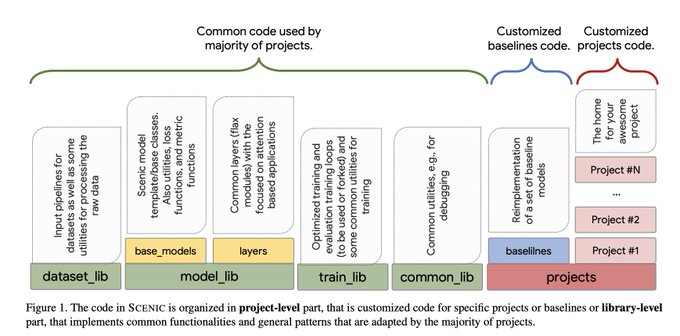

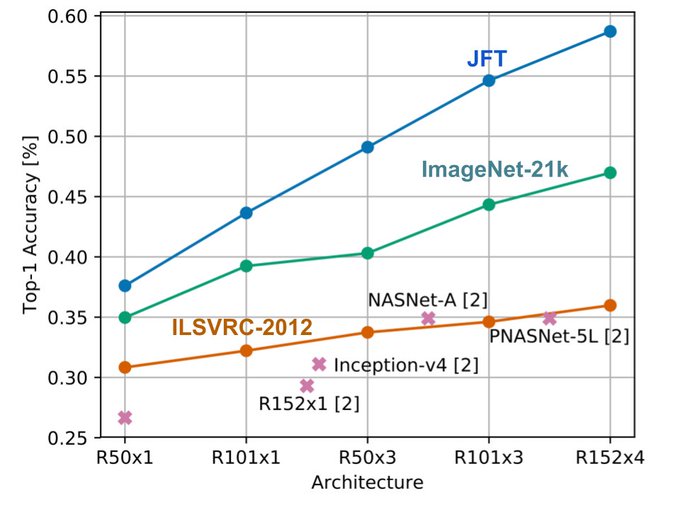

How effective is representation learning? To help answer this, we are pleased to release the Visual Task Adaptation Benchmark: our protocol for benchmarking any visual representations () + lots of findings in .

@GoogleAI


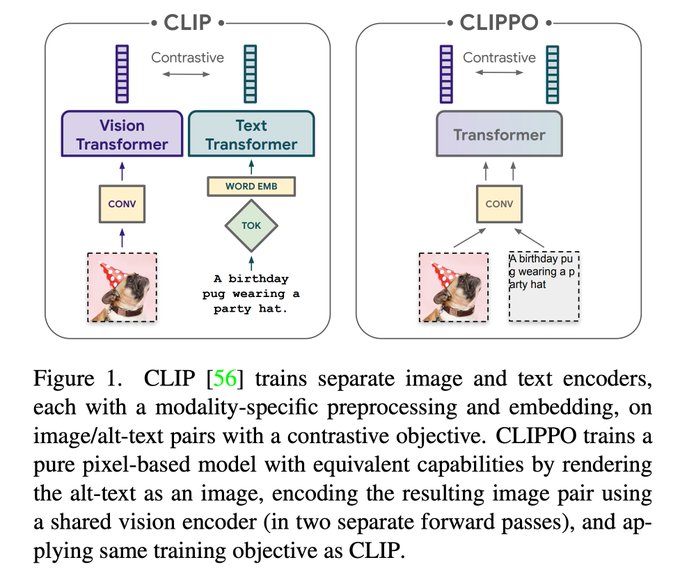

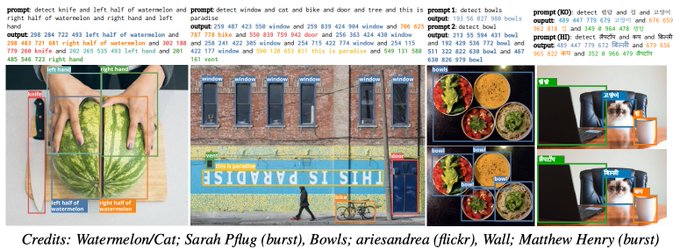

Detect objects in your still life, or anything else for that matter! OWL-ViT, our zero-shot detector, is now in 🤗.

OWL-ViT by @GoogleAI is now available in @huggingface Transformers. The model is a minimal extension of CLIP for zero-shot object detection given text queries. 🤯

🥳 It has impressive generalization capabilities and is a great first step for open-vocabulary object detection!

(1/2)


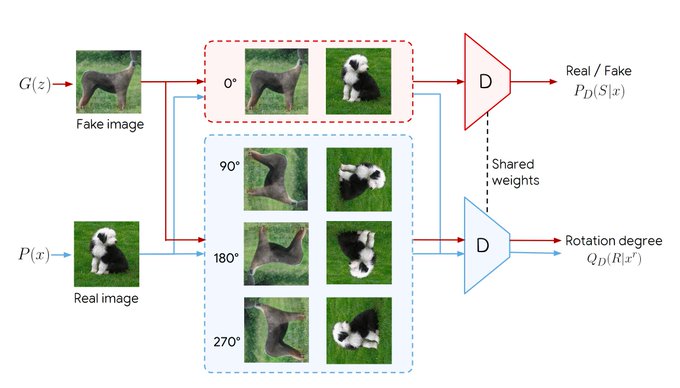

Combining two of the most popular unsupervised learning algorithms: Self-Supervision and GANs. What could go right? Stable generation of ImageNet *with no label information*. FID 23. Work by Brain Zürich to appear in CVPR.

@GoogleAI

#PoweredByCompareGAN


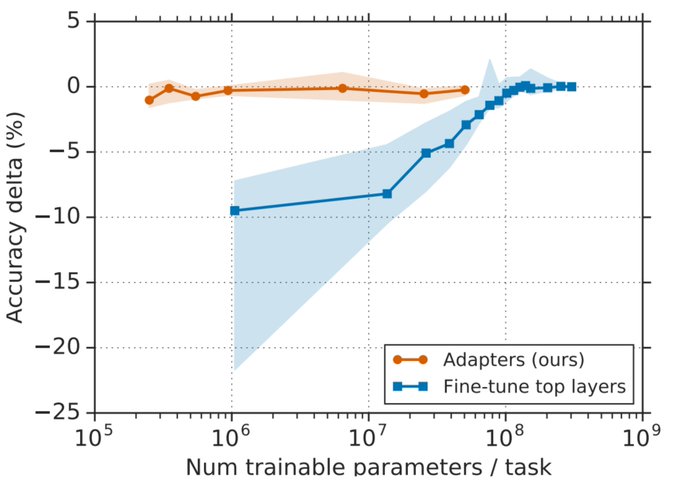

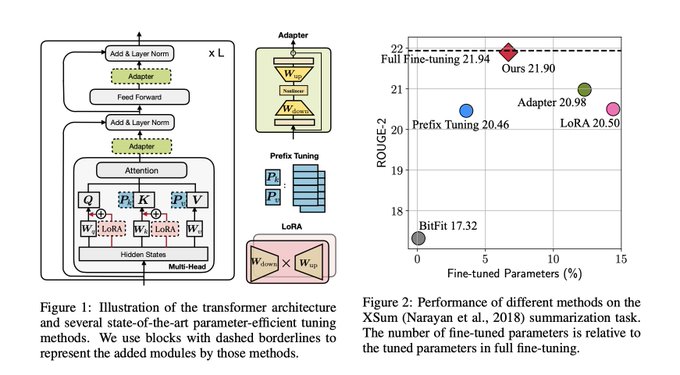

It turns out that only a few parameters need to be trained to fine-tune huge text Transformer models. Our latest paper is on arXiv; work from @GoogleAI Zürich and Kirkland.

#GoogleZurich

#GoogleKirkland
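To put "only a few parameters" in numbers, here is a back-of-the-envelope count. It assumes a bottleneck adapter (down-projection, nonlinearity, up-projection) inserted twice per Transformer layer, with BERT-base-like sizes (`d_model=768`, `d_ff=3072`) and a bottleneck width of 64. The sizes and helper names are illustrative assumptions, not figures taken from the paper.

```python
# Back-of-the-envelope count: trainable adapter parameters vs. one frozen layer.
# Assumed (illustrative) sizes: d_model=768, d_ff=3072, bottleneck width 64,
# two adapters per Transformer layer.


def dense_params(d_in: int, d_out: int) -> int:
    """Weight matrix plus bias of a fully connected layer."""
    return d_in * d_out + d_out


def adapter_params(d_model: int, bottleneck: int) -> int:
    """Bottleneck adapter: down-projection, parameter-free nonlinearity, up-projection."""
    return dense_params(d_model, bottleneck) + dense_params(bottleneck, d_model)


def transformer_layer_params(d_model: int, d_ff: int) -> int:
    """Q/K/V/output projections, a two-layer feed-forward block, two layer norms."""
    attention = 4 * dense_params(d_model, d_model)
    ffn = dense_params(d_model, d_ff) + dense_params(d_ff, d_model)
    layer_norms = 2 * (2 * d_model)  # a scale and a bias vector each
    return attention + ffn + layer_norms


def adapter_fraction(d_model: int = 768, d_ff: int = 3072,
                     bottleneck: int = 64, adapters_per_layer: int = 2) -> float:
    """Trainable adapter parameters as a fraction of one frozen layer's parameters."""
    trained = adapters_per_layer * adapter_params(d_model, bottleneck)
    return trained / transformer_layer_params(d_model, d_ff)


if __name__ == "__main__":
    print(adapter_params(768, 64))              # 99136
    print(transformer_layer_params(768, 3072))  # 7087872
    print(f"{adapter_fraction():.1%}")          # 2.8%
```

Under these assumptions the adapters add under 3% of a layer's parameters, and the bottleneck width is the knob that trades capacity for size.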


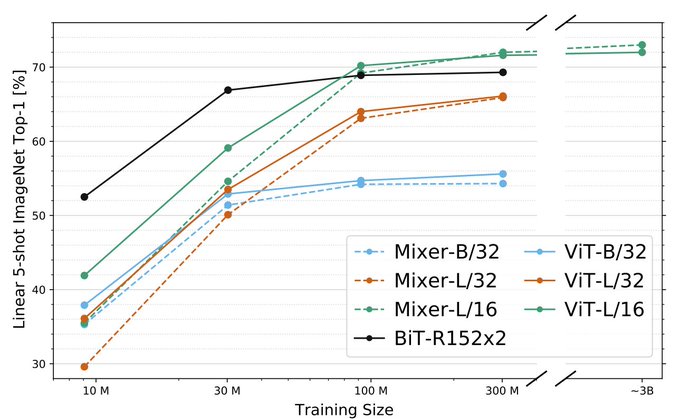

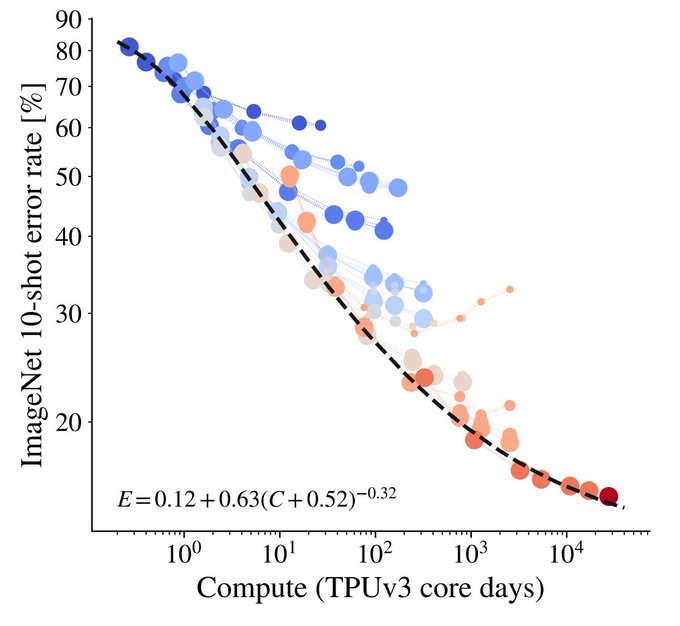

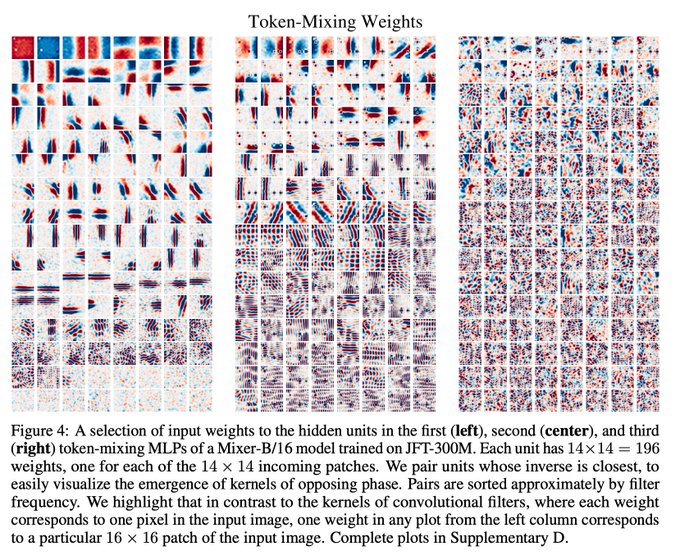

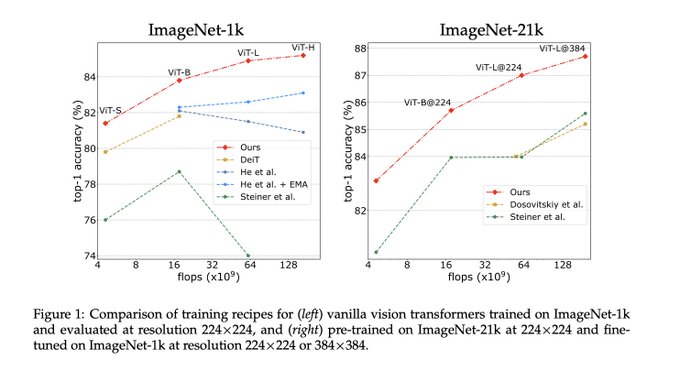

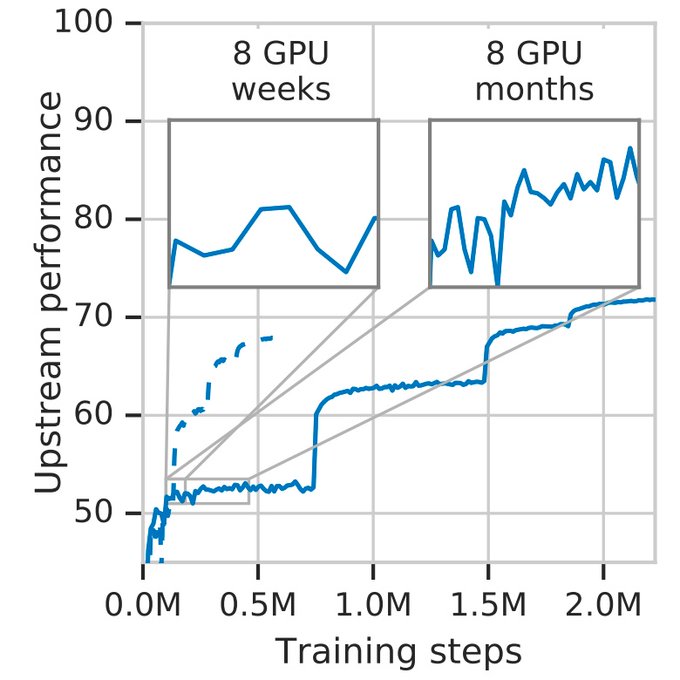

[2/3] Towards big vision.

How does MLP-Mixer fare with even more data? (Question raised in @ykilcher's video, and by others.)

We extended the "data scale" plot to the right, and with 3B images Mixer-L (green dashed line) seems to creep ahead of ViT-L (green solid line)!


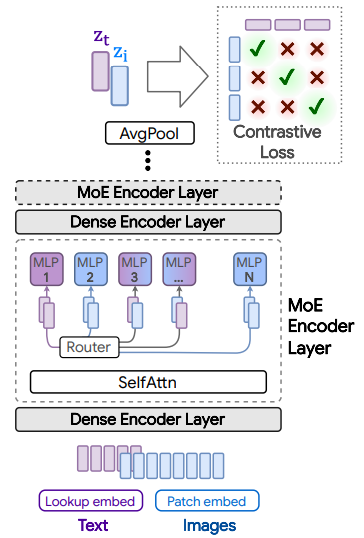

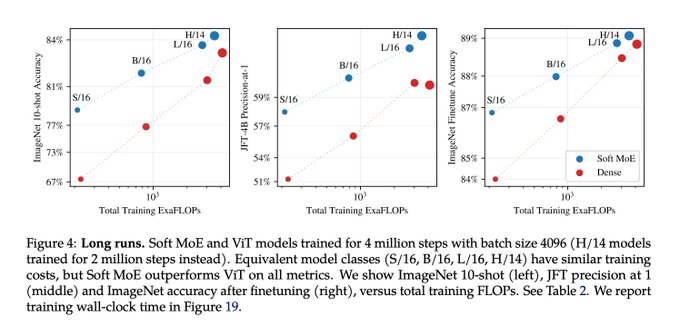

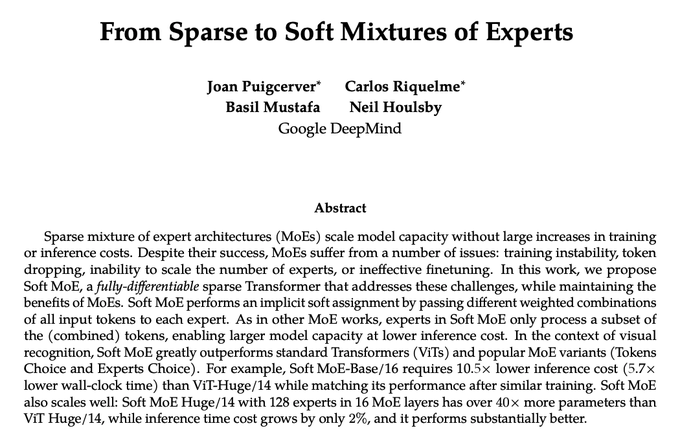

Soft MoE has produced some of the largest improvements we have obtained since ViT vs. CNN: ViT-S inference cost at ViT-H performance!

Great idea from the team: @joapuipe, @rikelhood and @_basilM. Soft MoEs have the advantages of sparse Transformers, without many of the


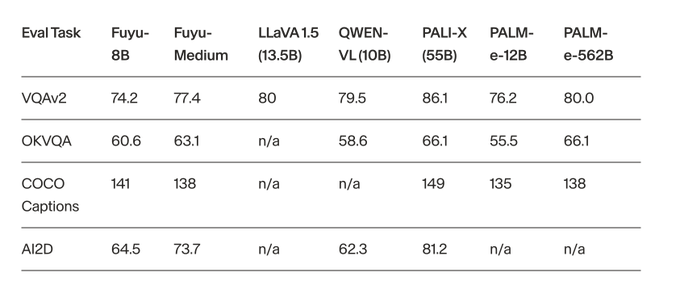

Nice stuff: With a simple design of just a single multimodal Transformer, and a relatively small model, Adept's Fuyu is approaching PaLI-X, which uses the very strong (and large) pre-trained ViT-22B image encoder.


Several requests for the slides/recording for our (@XiaohuaZhai, @__kolesnikov__, Alexey Dosovitskiy, myself) CVPR Tutorial "Beyond CNNs".

These are now linked from the tutorial website:


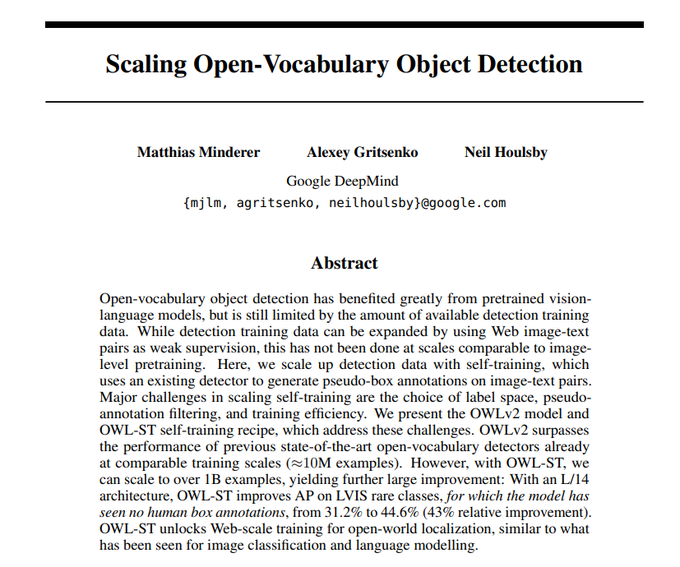

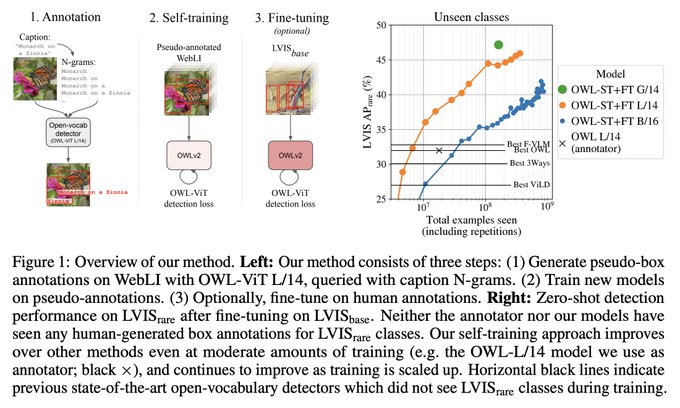

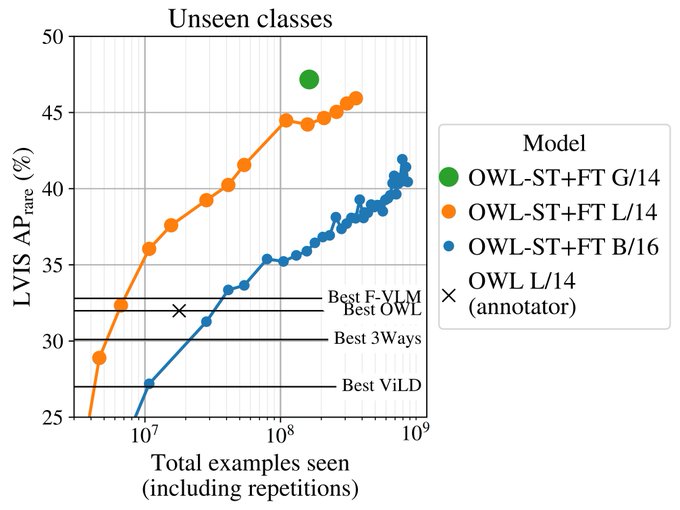

Check out OWLv2, which leverages large-scale pre-training (hugely potent for classification, captioning, & LMs) for object detection.

>40% (relative) performance improvement in the open-vocab setting.


Get the latest Flax Transformer. Set `normalize_qk=True`. Larger learning rates and bigger models possible!

See for details
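The idea behind `normalize_qk=True` can be sketched without Flax: normalize each query and key vector before the scaled dot product, so attention logits stay bounded even when raw activations grow large. This pure-Python sketch uses a parameter-free layer norm as an assumption; it is not Flax's implementation, which may carry learnable parameters.

```python
import math
from typing import List


def layer_norm(v: List[float], eps: float = 1e-6) -> List[float]:
    """Parameter-free layer norm: zero mean and unit variance across features."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]


def attention_logit(q: List[float], k: List[float], normalize_qk: bool = False) -> float:
    """Scaled dot-product logit q.k / sqrt(d), optionally normalizing q and k first.

    After normalization each vector has squared norm at most d, so by
    Cauchy-Schwarz the logit is bounded by sqrt(d) however large q and k were.
    """
    if normalize_qk:
        q, k = layer_norm(q), layer_norm(k)
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))


if __name__ == "__main__":
    d = 64
    q = [1000.0 * (i % 7 - 3) for i in range(d)]  # large-magnitude activations
    print(attention_logit(q, q))                     # 32625000.0
    print(attention_logit(q, q, normalize_qk=True))  # ~8.0, i.e. sqrt(d)
```

Bounded logits keep the attention softmax from saturating, which is one common intuition for why larger learning rates become usable; the tweet itself only states the outcome.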


I had a great time at EPFL's AMLD on Gen AI.

If anyone is curious what the "two advances" are (plus a number of other cool talks), I believe the recordings will be made available on EPFL's YT channel in a few weeks.

#Track1 Let us now welcome @neilhoulsby before the coffee break. He will be presenting the recent advances in visual pretraining for LLMs. #AMLDGenAI23


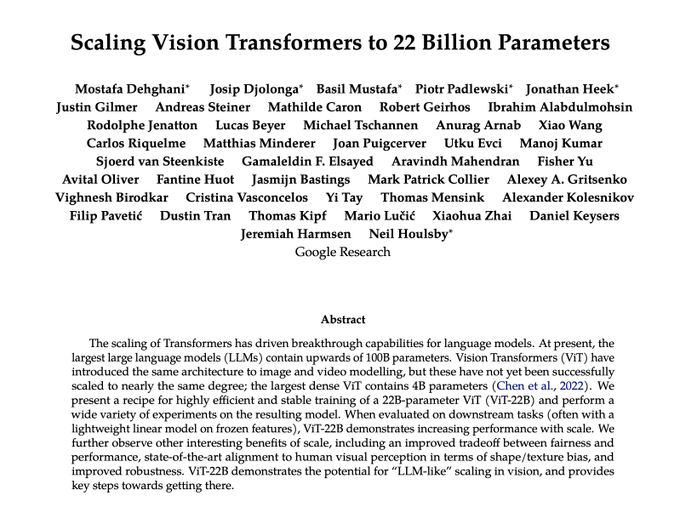

A large team effort from Brain EMEA and collaborators to train and evaluate a large Vision Transformer.

Many of us here believe that vision can be scaled successfully as LLMs have, and are making steps in that direction!


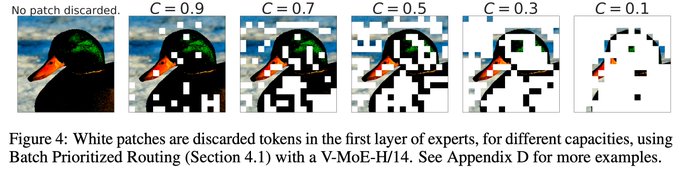

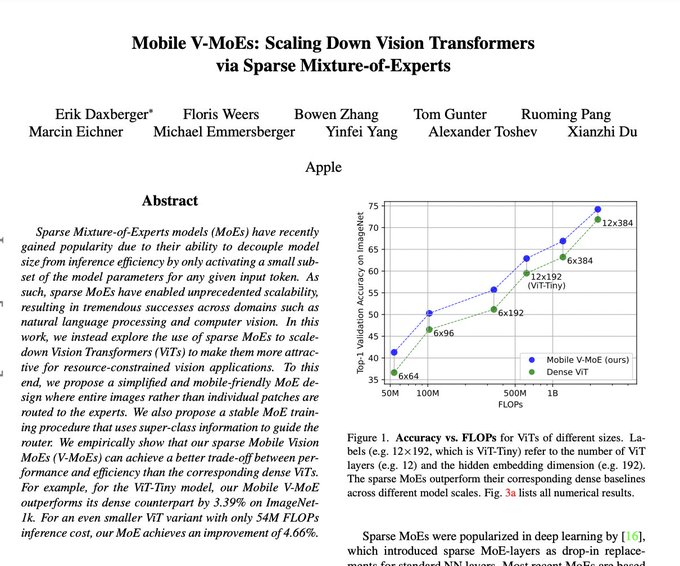

(V-)MoEs working well at the small end of the scale spectrum. Often we actually saw the largest gains with small models so it's a promising direction.

Also, the authors seem to have per-image routing working well, which is nice.


Pseudo-labelling+object detection+🤗

Excited to share that @Google's OWLv2 model is now available in 🤗 Transformers! This model is one of the strongest zero-shot object detection models out there, improving upon OWL-ViT v1, which was released last year 🔥

How? By self-training on web-scale data of over 1B examples⬇️


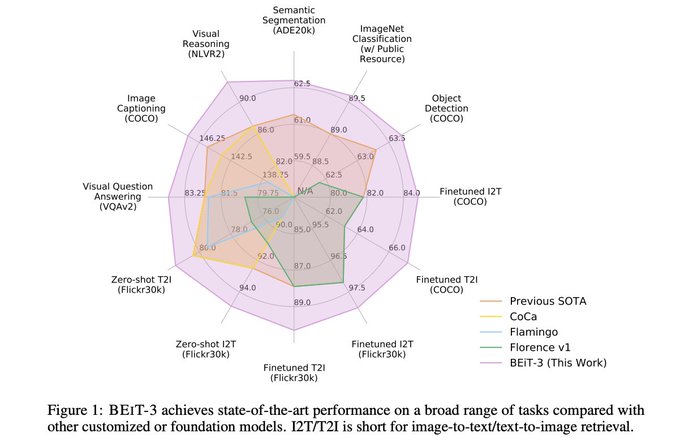

Continued improvements to BEiT. It's satisfyingly simple, and the results now look really impressive!

(Although I'm not sure I'm a massive fan of the radar plot: the areas may not entirely reflect the relative performance across many tasks.)


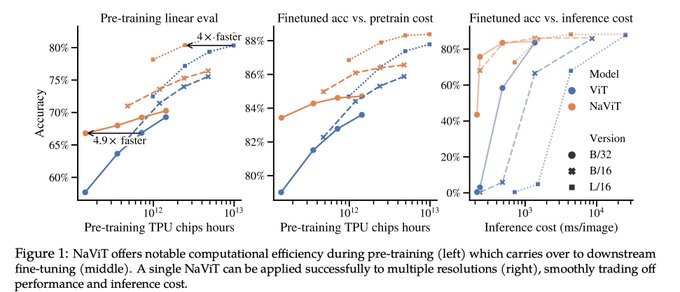

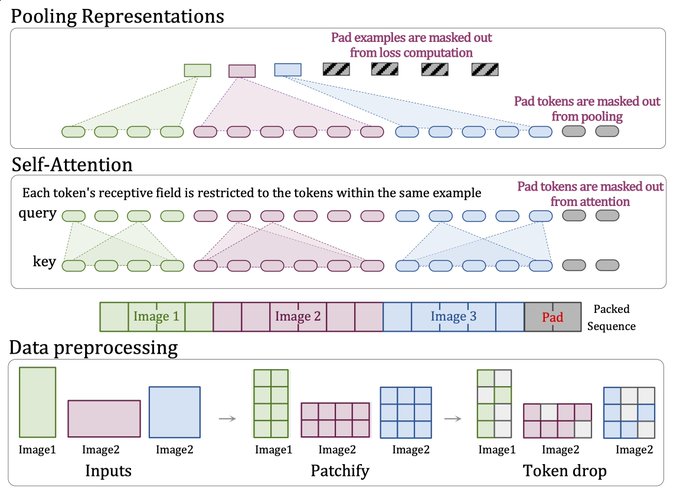

ViT is overdue a modern LM-style input pipeline to exploit Transformer's flexibility to combine inputs.

NaViT (Native ViT) trains on sequences of images of arbitrary resolutions and aspect ratios.

There is lots to unpack, see Mostafa's thread!

A feature that I particularly
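One way to picture an LM-style input pipeline for images: treat each image as a variable-length run of patches and pack several images into one fixed-length sequence, as LMs do with documents. A minimal sketch, assuming square patches, greedy first-fit packing, and a per-token image id that a model would use to mask attention between images. The sequence length, patch size, and helper names are hypothetical, and this is an illustration rather than NaViT's actual code.

```python
from typing import List, Tuple


def num_patches(height: int, width: int, patch: int = 16) -> int:
    """Patches in a height x width image (edges that do not fill a patch are dropped)."""
    return (height // patch) * (width // patch)


def pack_images(sizes: List[Tuple[int, int]], seq_len: int = 256,
                patch: int = 16) -> List[List[int]]:
    """Greedy first-fit packing of whole images into fixed-length token sequences.

    Returns one list per sequence holding, for every token, the index of the image
    it came from, or -1 for padding. A model would use these ids to stop attention
    from crossing image boundaries.
    """
    sequences: List[List[int]] = []
    for image_id, (h, w) in enumerate(sizes):
        n = num_patches(h, w, patch)
        if n > seq_len:
            raise ValueError(f"image {image_id} has {n} patches > seq_len {seq_len}")
        for seq in sequences:
            if len(seq) + n <= seq_len:  # first sequence with enough room
                seq.extend([image_id] * n)
                break
        else:  # no existing sequence had room: open a new one
            sequences.append([image_id] * n)
    return [seq + [-1] * (seq_len - len(seq)) for seq in sequences]


if __name__ == "__main__":
    # Mixed resolutions and aspect ratios: 196, 80, 60 and 4 patches.
    packed = pack_images([(224, 224), (64, 320), (160, 96), (32, 32)])
    for seq in packed:
        print(len(seq), sorted(set(seq)))  # 256 [0, 2], then 256 [-1, 1, 3]
```

First-fit is chosen only for simplicity; any packing strategy works as long as each token keeps track of which image it belongs to.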


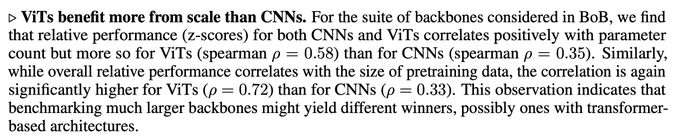

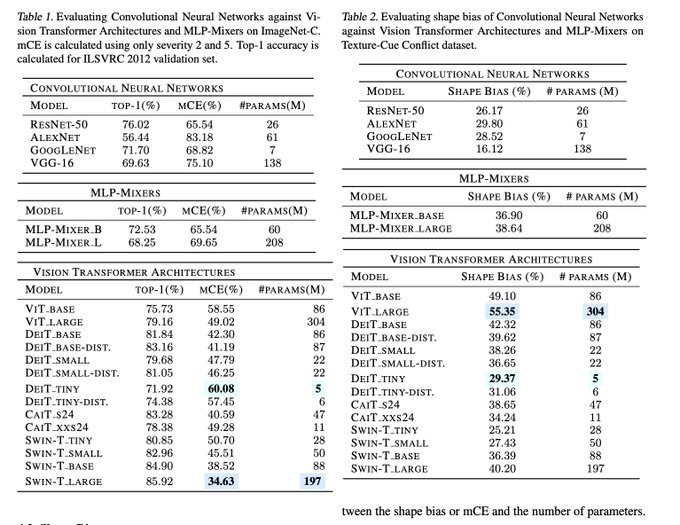

A very nice study, thanks for doing it!

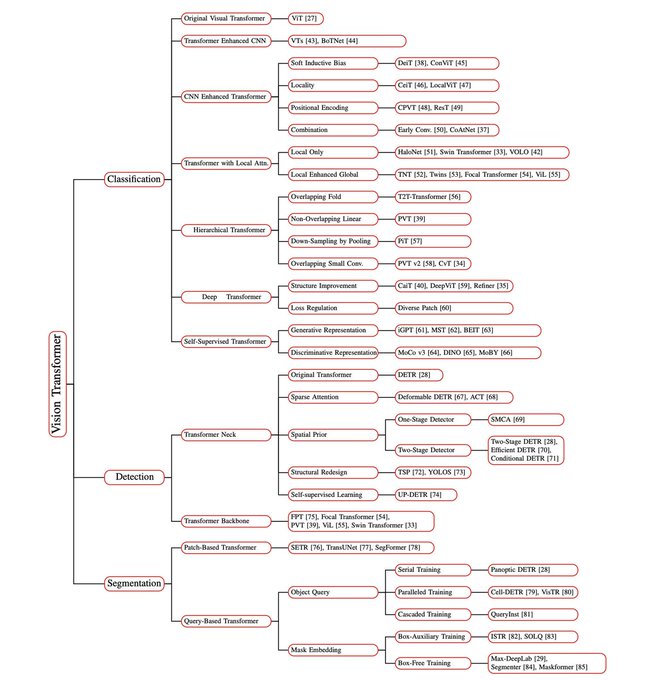

I feel that this paragraph "ViT's benefit more from scale" may give some clue as to why vanilla ViT endures, despite more complex architectures getting better results here.

For me, the key finding of ViT was the nice scaling trends, and


This continues our team's work in vision, transfer, scalability, and architectures. As always, a pleasure to work with a great team: @tolstikhini, @__kolesnikov__, @giffmana, @XiaohuaZhai, @TomUnterthiner, @JessicaYung17, @keysers, @kyosu, @MarioLucic_, Alexey Dosovitskiy


OWL-ViT v2, a state-of-the-art open-vocab object detector, is now open-sourced.

We just open-sourced OWL-ViT v2, our improved open-vocabulary object detector that uses self-training to reach >40% zero-shot LVIS APr. Check out the paper, code, and pretrained checkpoints: . With @agritsenko and @neilhoulsby.


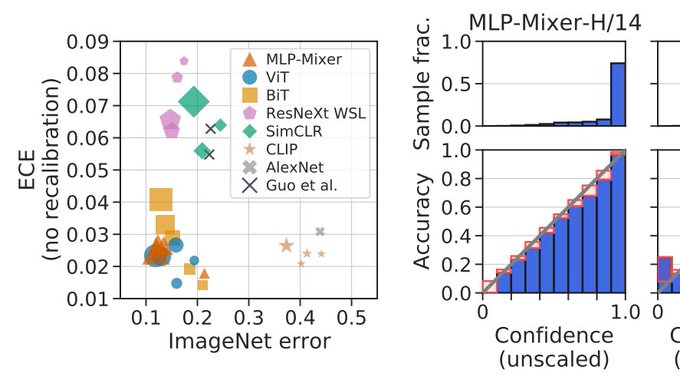

I feel there is some religion when it comes to the question of "do big nets give reasonable confidence estimates?"

Work from Matthias and colleagues provides practical answers, carefully quantifying the calibration, including on OOD data, of modern vision networks.


Very cool to see VLMs working in the real world.

Huge shout out to @m__dehghani, @_basilM, @JonathanHeek, @PiotrPadlewski, Josip Djolonga, and collaborators for providing the "eyes" of the robot (via ViT-22B / PaLI-X).


Interested in, or working on, architecture designs of the future? Conditional compute, routing, MoEs, early-exit, sparsification, etc. etc.

Consider checking out/submitting to our ICML 2022 Workshop on Dynamic Neural Networks (July 22nd).

Announcing the 1st Dynamic Neural Networks (DyNN) workshop, a hybrid event at @icmlconf 2022! 👇

We hope DyNN can promote discussion on innovative approaches for dynamic neural networks from all perspectives.

Want to learn more?


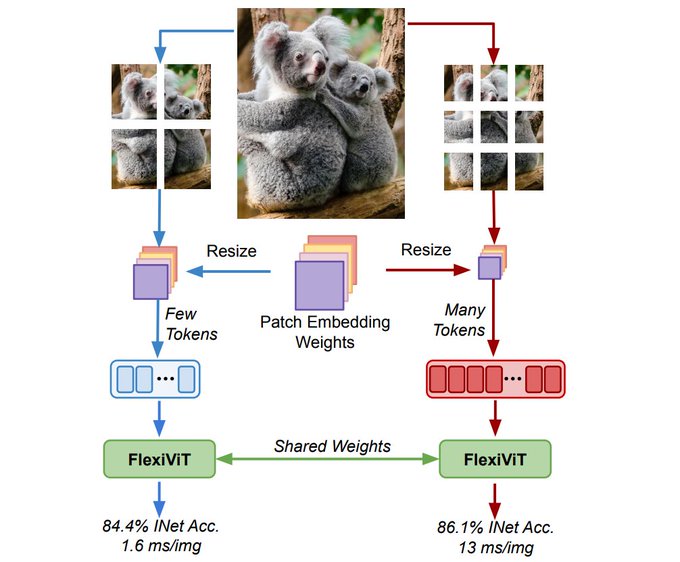

If you are interested in the important questions in vision, like "can my big SOTA model recognize a koala, flying upside-down, in the mountains?", and many others, you might be interested in our new paper:

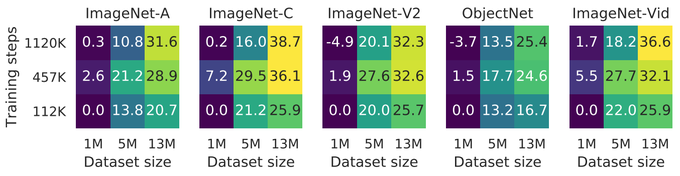

I’m excited to showcase our recent preprint on transferability and robustness of CNNs, where we investigate the impact of pre-training dataset size, model scale, and the preprocessing pipeline, on transfer and robustness performance.

@GoogleAI


Check out PaLM-E (Embodied), feat. ViT-22B, at ICML.

And a few works w/ some of my collaborators:

@DannyDriess will present PaLM-E, a language model that can understand images and control robots, Tue 2 pm poster #237:

Video here:


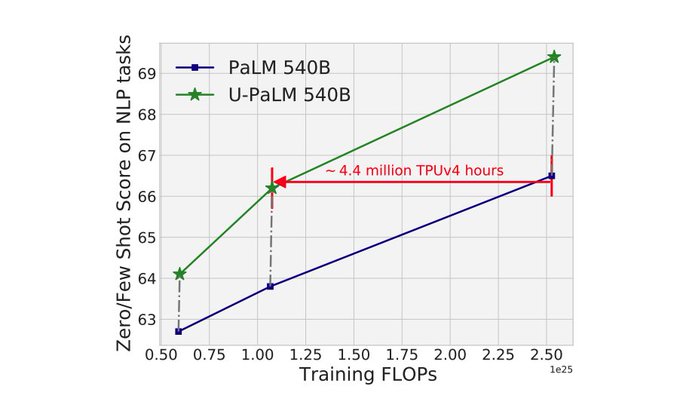

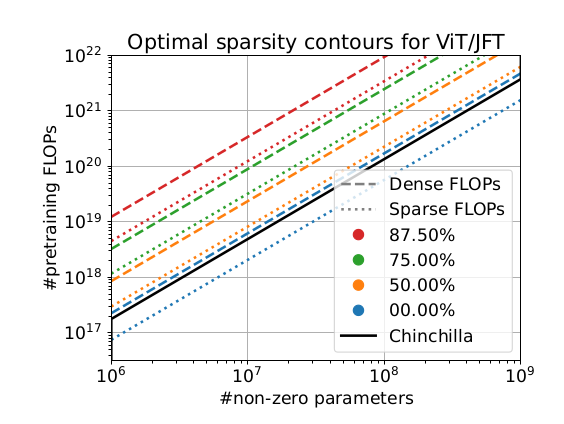

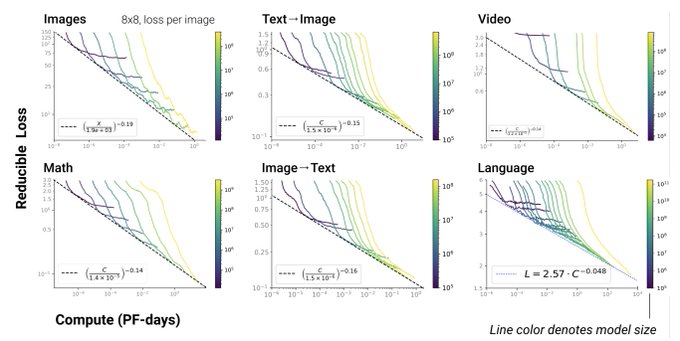

Great work from Elias figuring out optimal scaling for large sparse models.

I find it particularly intriguing that sparsity unlocks a (half-)plane of optimal models on the compute/size axes. While regular dense Scaling Laws define only a single line of optimal models (for a given


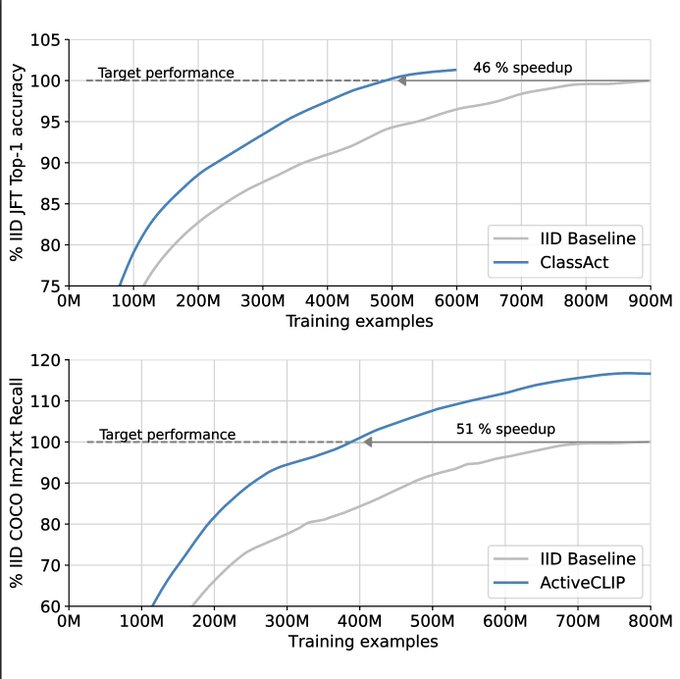

IMO, training data selection for DL is a rather challenging setting to get active learning working in practice (pool-based, little time for evaluation of acquisition fn).

So rather impressive that Talfan, Shreya, Olivier and others got it working so well.


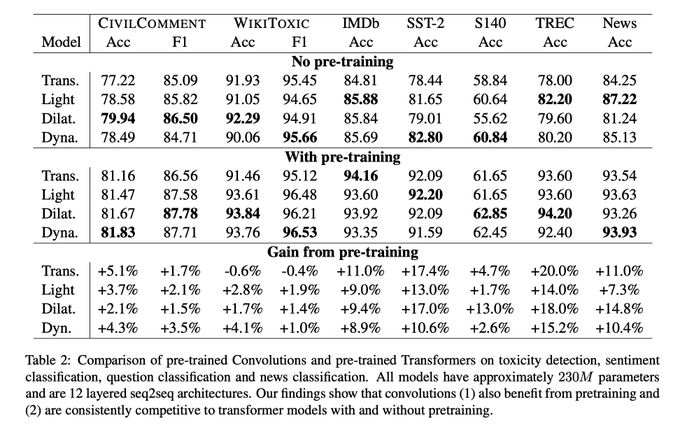

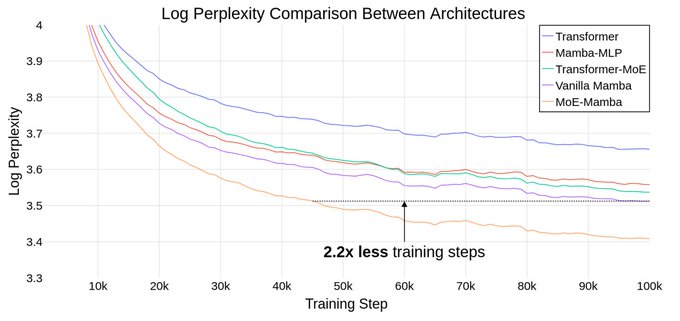

More back-and-forth between major architecture classes! Now in NLP. Will be interesting to see what crystallizes out in a few years' time.

Are pre-trained convolutions better than pre-trained Transformers?

Check out our recent paper at #ACL2021nlp #NLProc 😀

Joint work with @m__dehghani @_jai_gupta @dara_bahri @VAribandi @pierceqin @metzlerd at @GoogleAI


These titles are getting out of hand! (or perhaps they always were). Nice to see some (friendly) competition between architecture classes driving up performance of both.


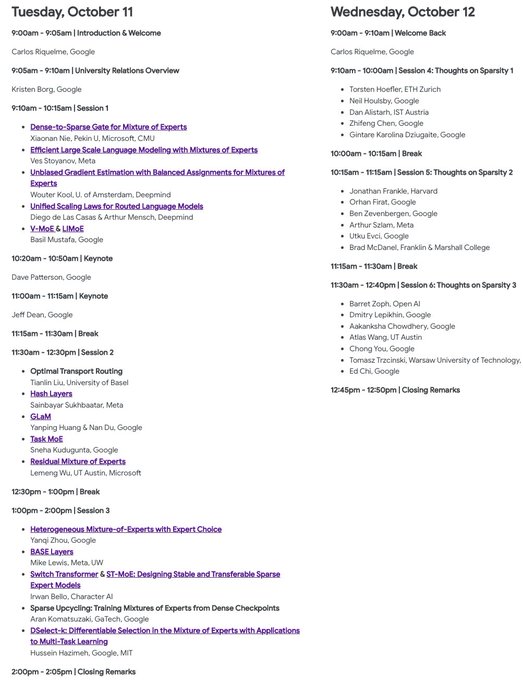

Looking forward to this workshop on MoEs, Sparsity, Adaptive Compute, and all the good stuff, later today and tomorrow. Feel free to stop by!

YouTube stream:

Agenda:

Next Tuesday & Wednesday we'll be hosting a workshop on Sparsity & Adaptive Computation! More than 30 speakers from Google, other industry labs & many universities will share their views on scaling language & vision models. Keynotes by @JeffDean & Dave Patterson! See agenda below


Multimodal from the ground up!


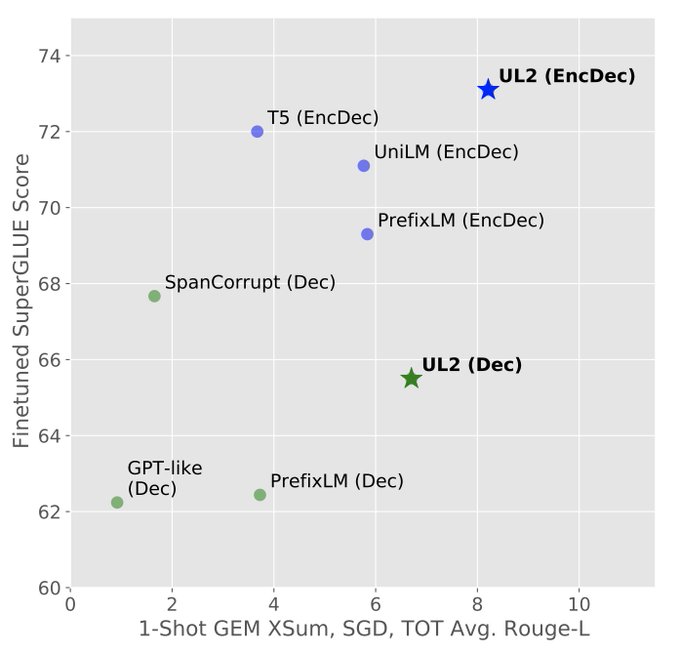

Objective often matters more than architecture. UL2 uses a mixture of denoisers objective, and allows one to switch between different downstream modes (finetuning, prompting) to get the best of both worlds.


Curious application of visual classification in which CNNs and ViT are compared. Although poorer in terms of max accuracy, ViT appears more robust. More interesting back and forth between the two architecture classes.

Convolutional Neural Network (CNN) vs Visual Transformer (ViT) for Digital Holography by Stéphane Cuenat et al. #DeepLearning #ConvolutionalNeuralNetwork


@ylecun

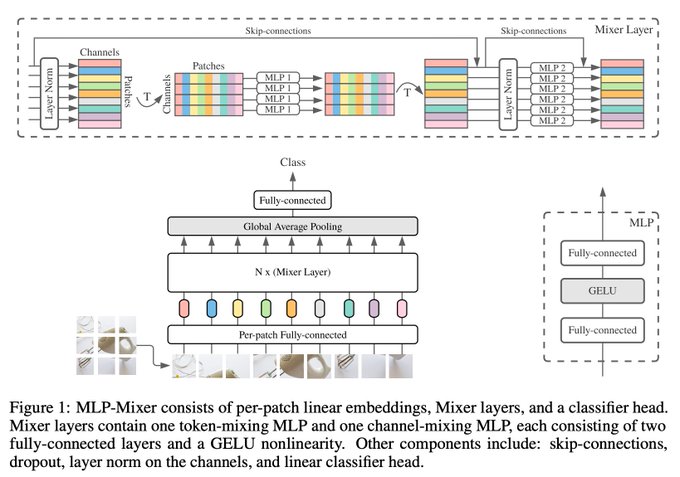

Thanks for the interest, Yann! Semantics aside, would love to know your thoughts on the paper. Surprised such a degenerate CNN (i.e. alternating pointwise MLPs) could learn really good features for classification? Expect it to work at all? Or need more data/compute/tricks?


Tired of short, understand-everything-after-a-5-minute-skim ML conference papers? See the recent work from @obousquet and colleagues for some serious bedtime reading.

@ykilcher video please.


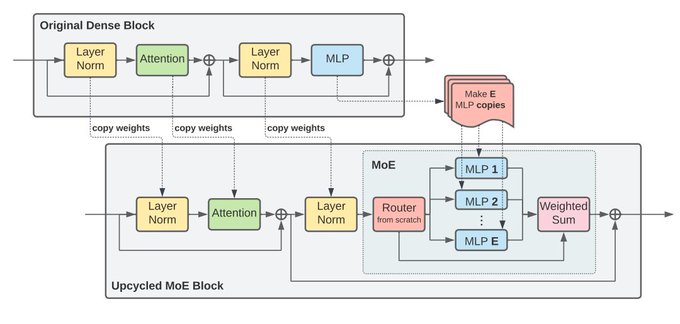

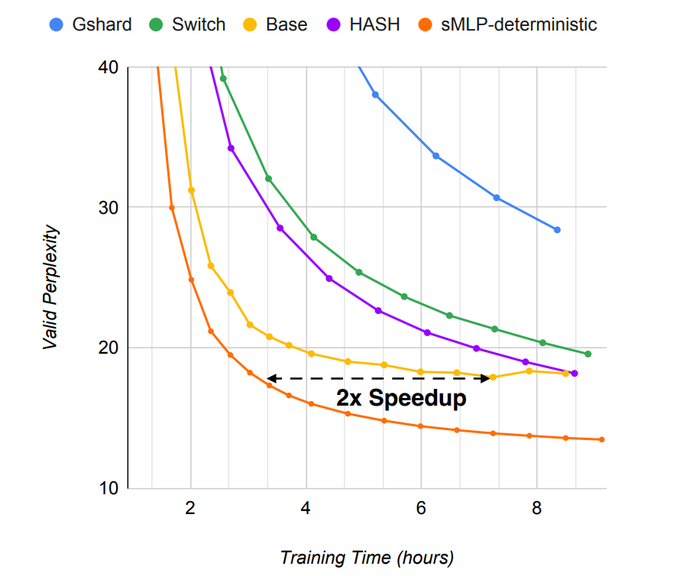

A study from Aran, @jamesleethorp and team, plus us here in Brain EMEA, on converting existing Transformer checkpoints (there are many around) into MoEs to save on training cost.
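A minimal sketch of the conversion idea, under stated assumptions: every expert starts as an independent copy of the dense MLP weights, and only the router is new. The weight layout (a dict of nested lists), the zero-initialized router, and the function name `upcycle_mlp` are hypothetical; the study's actual recipe may differ in its details.

```python
import copy
from typing import Dict, List

Weights = Dict[str, List[List[float]]]


def upcycle_mlp(dense_mlp: Weights, num_experts: int, d_model: int) -> Dict[str, object]:
    """Build MoE-layer parameters from a dense MLP checkpoint (hypothetical layout).

    Each expert is an independent deep copy of the dense MLP weights, so every
    expert initially computes the same function as the dense MLP. The router
    (d_model x num_experts) is new; zeros here is an illustrative assumption.
    """
    experts = [copy.deepcopy(dense_mlp) for _ in range(num_experts)]
    router = [[0.0] * num_experts for _ in range(d_model)]
    return {"experts": experts, "router": router}


if __name__ == "__main__":
    dense = {"wi": [[0.1, 0.2], [0.3, 0.4]], "wo": [[0.5, 0.6], [0.7, 0.8]]}
    moe = upcycle_mlp(dense, num_experts=4, d_model=2)
    print(len(moe["experts"]), moe["experts"][3] == dense)  # 4 True
```

Deep copies matter here: the experts must be free to diverge during continued training, so they cannot share the same underlying lists.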


@karpathy

One small, but curious, difference with depthwise conv is parameter tying across channels. Slightly surprising (to me at least) that one can get away with it, but a really useful memory saving due to the large (entire image) receptive field.
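To illustrate the parameter tying: a token-mixing layer applies one S x S matrix, spanning every token (the entire image), to each channel in turn, whereas an untied variant with its own weights per channel would store C such matrices. This is a simplification to a single linear layer (Mixer's token-mixing block is a two-layer MLP), and S = 196 (a 224 x 224 image in 16 x 16 patches) with C = 768 channels are illustrative assumptions.

```python
from typing import List

Matrix = List[List[float]]


def token_mix(x: Matrix, w: Matrix) -> Matrix:
    """Mix information across tokens with one S x S matrix shared by all channels.

    x has shape [S][C] (tokens x channels); output[i][c] = sum_j w[i][j] * x[j][c].
    The same w is reused for every channel c: this is the parameter tying.
    """
    num_tokens, num_channels = len(x), len(x[0])
    return [
        [sum(w[i][j] * x[j][c] for j in range(num_tokens)) for c in range(num_channels)]
        for i in range(num_tokens)
    ]


def token_mixing_params(num_tokens: int, num_channels: int, tied: bool = True) -> int:
    """Weights of a full-receptive-field token-mixing layer, tied or untied across channels."""
    per_channel = num_tokens * num_tokens
    return per_channel if tied else per_channel * num_channels


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]  # 2 tokens, 2 channels
    w = [[0.5, 0.5], [0.5, 0.5]]  # average over tokens
    print(token_mix(x, w))                            # [[2.0, 3.0], [2.0, 3.0]]
    print(token_mixing_params(196, 768))              # 38416
    print(token_mixing_params(196, 768, tied=False))  # 29503488
```

The 768x gap between the two counts is the memory saving that tying buys once the receptive field covers the whole image.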


@giffmana

On this topic, it's funny how some things are too well known to cite (they are just part of common language, and often lower-cased), but not others. Adam really hit a sweet spot, being nearly ubiquitous and cited in most usages. My guess is it's partially in the name.


@agihippo

What do you mean? "The laser-focused anticipatory specialty allows decoders to achieve superior language mastery." clearly explains everything.


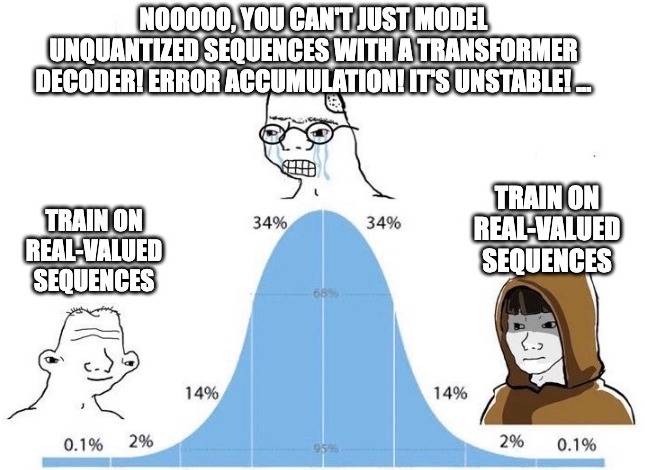

With all the excitement around Gemini, you could be forgiven for missing GiVT!

A really nice result from @mtschannen, @mentzer_f and @CianEastwood showing that you can model real-valued VAE representations to perform conditional image generation on par with or better than the


A nice summary from Carlos.


ImageNet accuracy is not the end of the story for image classifiers --- this library contains lots of robustness, stability, and uncertainty metrics all in one place! Examples provided in all your favourite languages, and TF.


Into model compression? Here's a challenge...

Today we're releasing all Switch Transformer models in T5X/JAX, including the 1.6T param Switch-C and the 395B param Switch-XXL models. Pleased to have these open-sourced!

All thanks to the efforts of James Lee-Thorp, @ada_rob, and @hwchung27


If you're in Amsterdam and interested in machine learning, check out the AI in the Loft event, hosted by the Brain team there. Weds 11th May.

Really excited that after almost two years, we are resuming the "AI in the Loft" events. For the next edition, Wednesday 11 May, we will have @bneyshabur as our speaker.

Please RSVP at


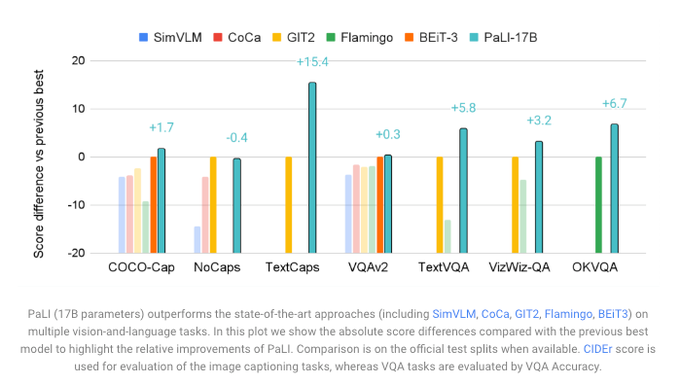

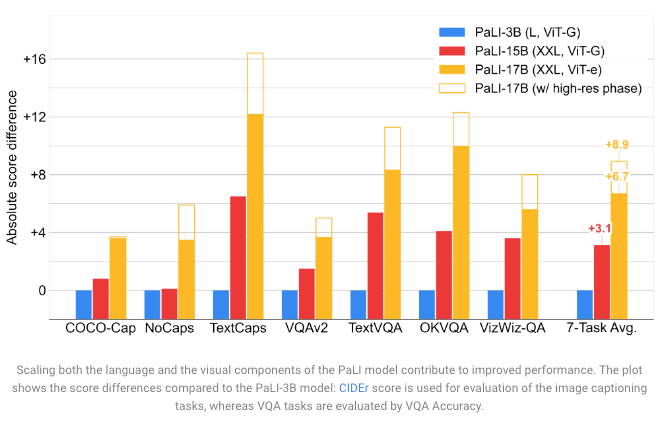

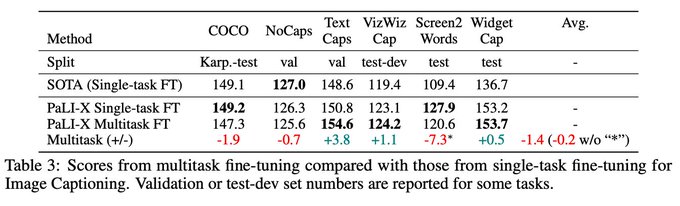

A "few" extra vision params go a long way.

@XiaohuaZhai, @giffmana and @__kolesnikov__ trained a larger ViT (ViT-e). Although it adds only +13% params (PaLI-15B -> PaLI-17B), the boost is enormous.

Lots of headroom for visual representation learning in these "open world" tasks.
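The "+13%" follows from the model names, taking 15B and 17B at face value (the names are rounded, so this is approximate):

$$\frac{17\,\text{B} - 15\,\text{B}}{15\,\text{B}} = \frac{2}{15} \approx 0.133 = 13.3\%$$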


All-MLP architecture and MoE layers, what is not to like!

Interesting (and nice) that a simple static (non-learned and input independent) routing works well for the token-mixing layers.


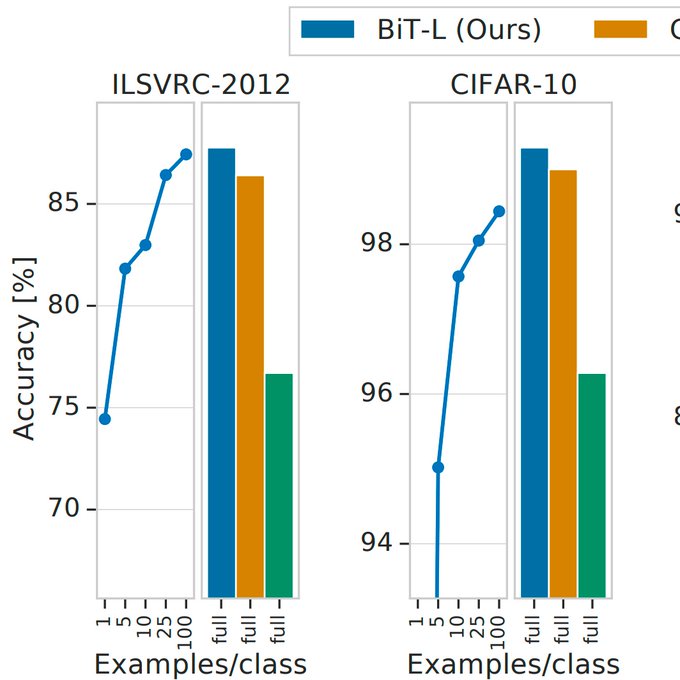

With judicious refinement of modern tricks, and scale, we can push SOTA (on VTAB, ImageNet, Cifar, etc.) using classic transfer learning, without excessive complexity. Works surprisingly well even with <=10 downstream examples per class.


Distil yourself a fine vintage of ResNet50 with the below recipe.


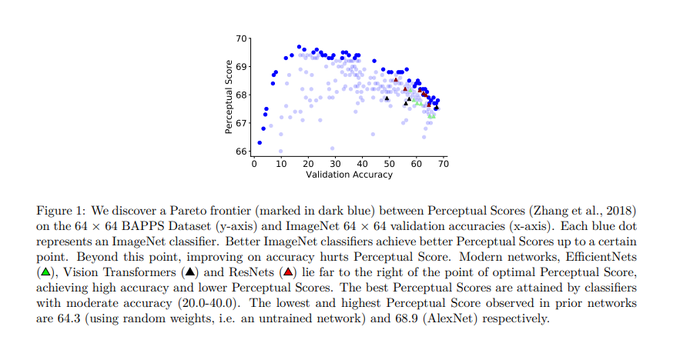

Emergent perceptual similarity of deep nets for vision behaves quite differently than most downstream tasks.

This study by @mechcoder in Brain Amsterdam digs into this effect. Still lots of questions remain though!


Fine-tuning, look out: adapters are on the rise.

AdapterHub: A Framework for Adapting Transformers (demo)

The framework underlying MAD-X that enables seamless downloading, sharing, and training adapters in @huggingface Transformers.

w/ @PfeiffJo @arueckle @clifapt @ashkamath20 et al.


"a 32x32 image is worth only about 2-3 words"

So a reasonable-sized 320x320 image is worth ~256=16x16 words (). Adds up.
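The arithmetic behind the joke, taking the quoted 2-3 words per 32x32 image at face value: a 320x320 image tiles into 100 blocks of 32x32, and the resulting word range contains 16x16 = 256 (exactly 2.56 words per block).

$$\left(\frac{320}{32}\right)^2 = 100, \qquad 100 \times [2,\,3] = [200,\,300] \ni 256 = 16 \times 16$$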


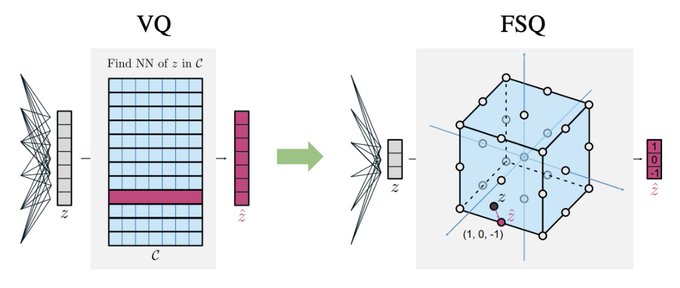

VQ benefits, without the hassle!


@__kolesnikov__

Maybe we did not make this clear enough in the papers! Transfer is cheap, cheaper than training even the most efficient architecture from scratch. And it works on datasets much smaller than ImageNet. And the weights are public.


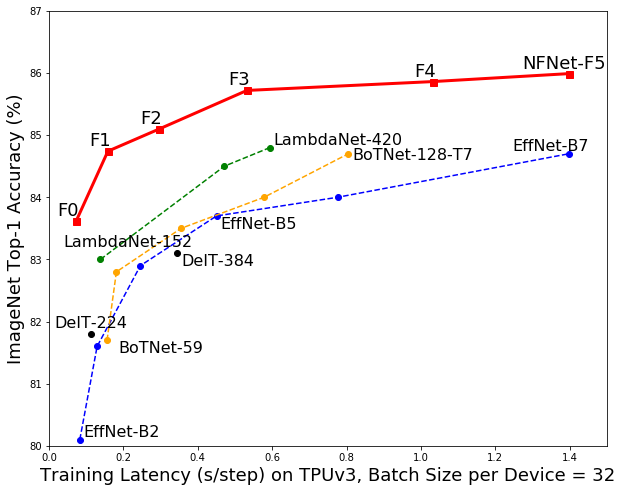

ResNets making a comeback on Vision Transformers!

While nice ImageNet numbers tend to correlate with other tasks, would be nice to see at least one other dataset to know how much of the gain is due to task-specific tricks/tuning.

(but overall the paper appears really thorough)


This work has nice "a good idea is to combine two previous good ideas" vibes.

Although somewhat preliminary, looks pretty promising. Various cool MoE advances coming from this lab 🔎


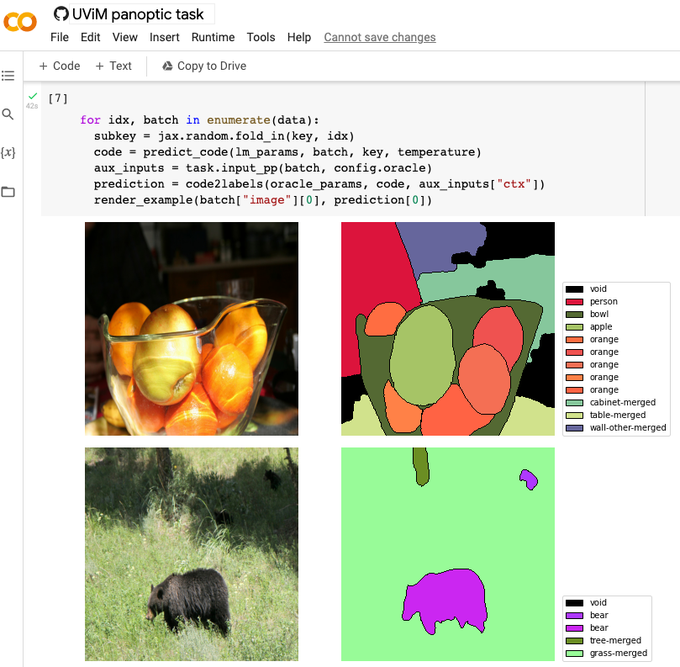

It was satisfyingly straightforward to add new tasks after the initial setup was built.

I think this is a really cool recipe that enables visual transfer to/between many different tasks.

Work with @__kolesnikov__, @ASusanoPinto, @giffmana, @XiaohuaZhai, @JeremiahHarmsen


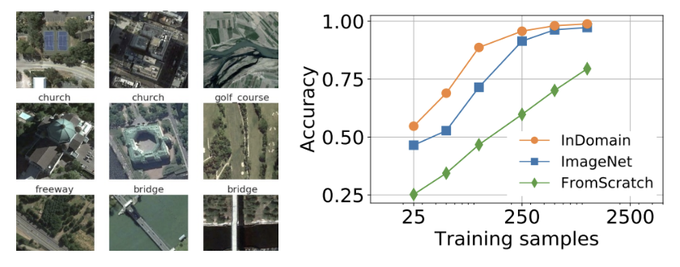

Interested in visual representation learning, but tired of ImageNet, Cifar, & VOC? Remote Sensing is a research area with many important applications. To dig deeper, check out our paper with @neu_maxim

We've looked into representation learning for #RemoteSensing with different datasets and fine-tuning using in-domain data. See paper with datasets and models included 🔋: with @ASusanoPinto, @XiaohuaZhai and @neilhoulsby.


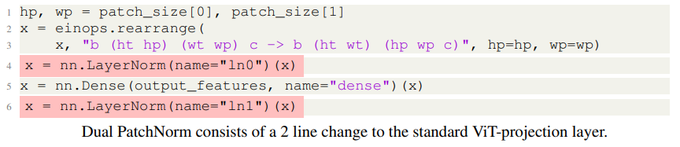

Simple tweak to ViT from @mechcoder. Worth a try.

While we await GPT-4 which is expected to have a trillion parameters, here are 3072 parameters, that can make your Vision Transformer better.

Paper:

Joint work w/ @neilhoulsby @m__dehghani


A nice approach to adaptive computation from @XueFz taking advantage of sequence length flexibility in Transformers.

0

2

11