Piotr Padlewski

@PiotrPadlewski

Followers: 1,616

Following: 327

Media: 150

Statuses: 787

Chief Meme Officer @ , ex-Google Deepmind/Brain Zurich

Zurich, Switzerland

Joined October 2015


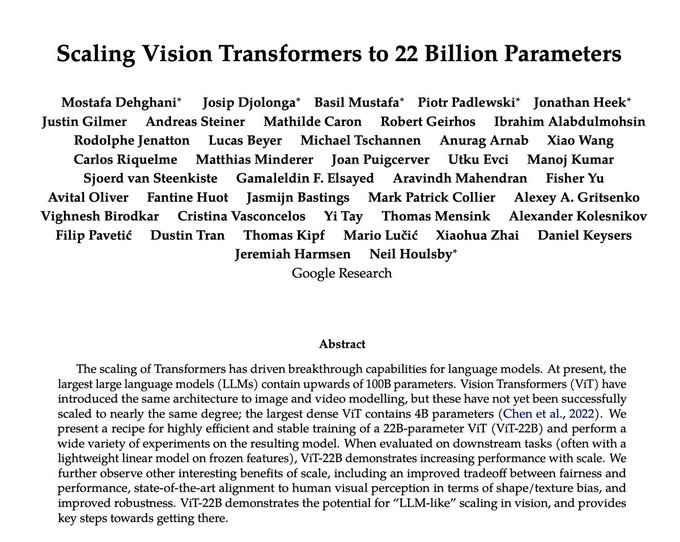

Today was my last day at Google Brain/Deepmind.

Really grateful for amazing colleagues.

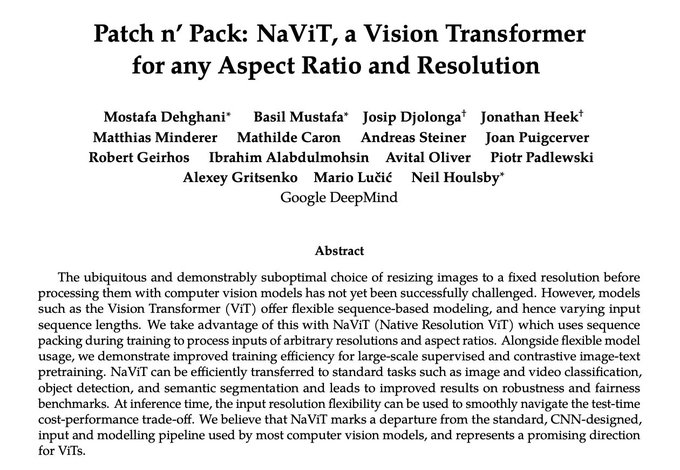

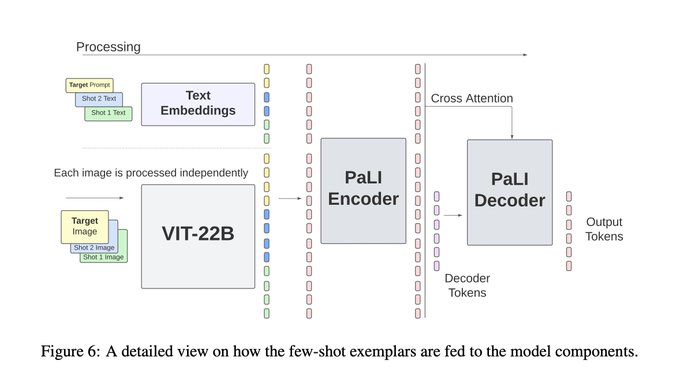

Learned so much from closely working on Pathways, ViT-22B, PaLI, NaViT and Gemini with @m__dehghani, @neilhoulsby, @_basilM, Xi and others.

The team terminated me pretty well today :)

18

4

493

The GOAT of tennis @DjokerNole said: "35 is the new 25." I say: "60 is the new 35." AI research has kept me strong and healthy. AI could work wonders for you, too!

167

147

2K

0

26

394

@savvyRL

I totally agree, but I hope you understand that I am not responsible for hiring :)

Unfortunately, I didn't have a chance to work closely with some of the great female co-workers, except for one 20%er who did amazing work on PaLI-X - and it was her first time doing research!

136

1

278

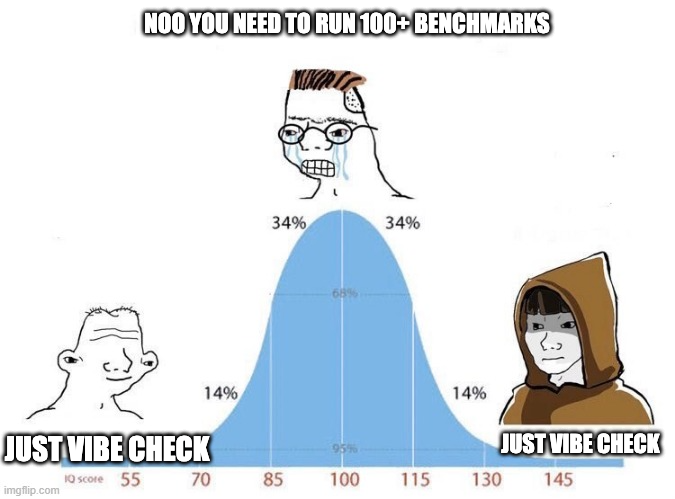

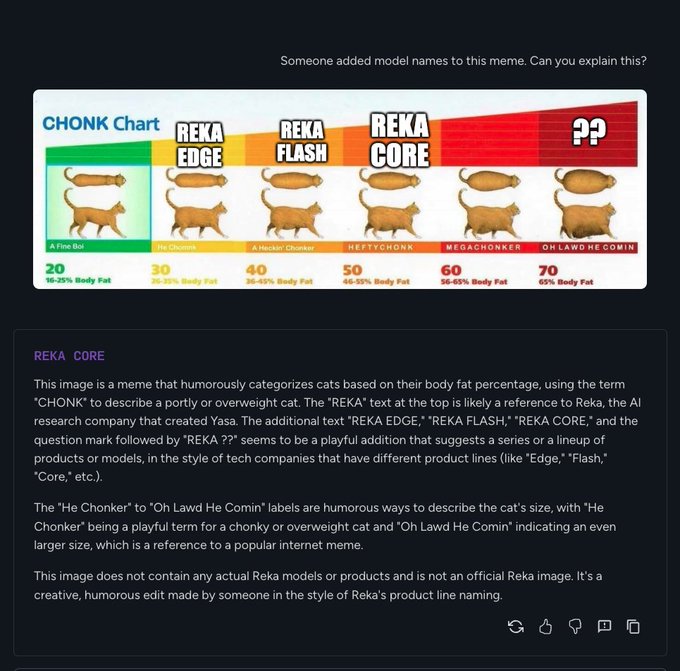

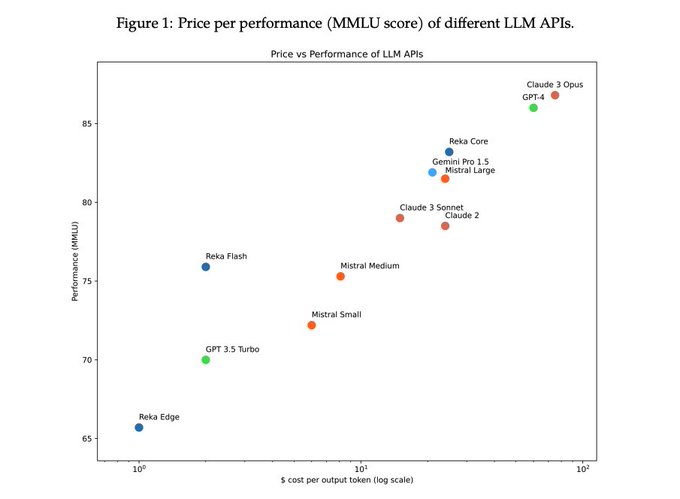

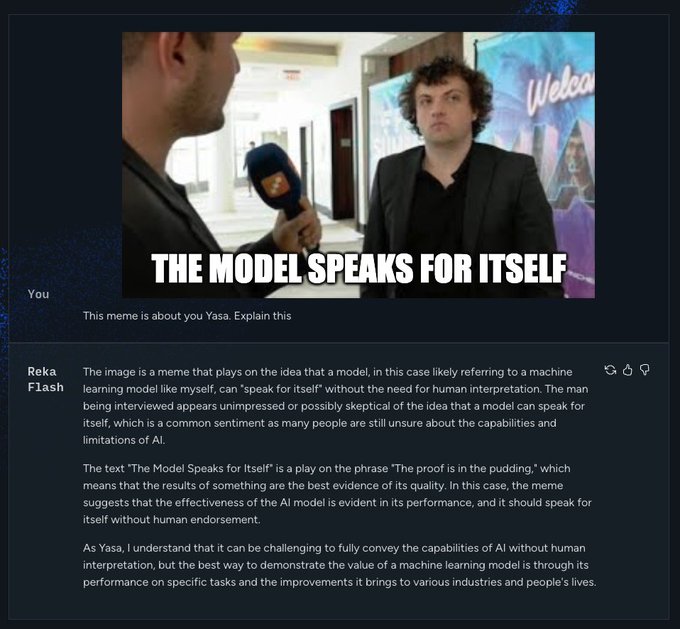

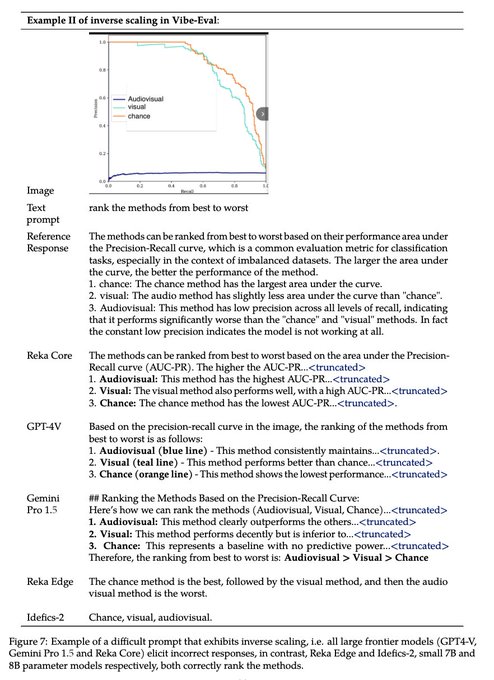

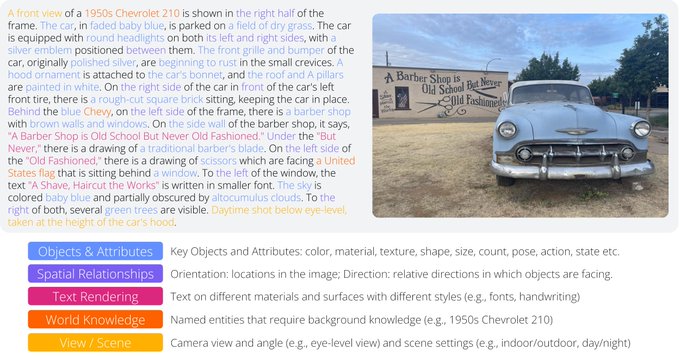

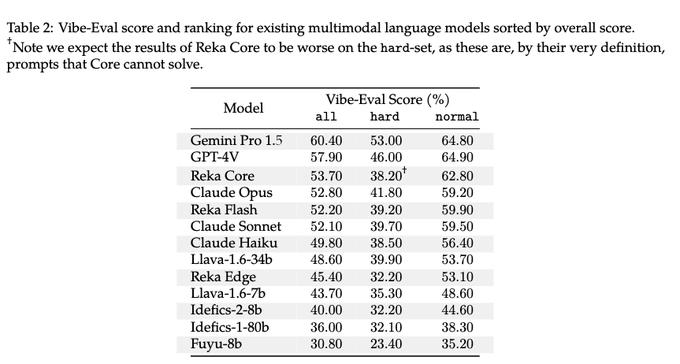

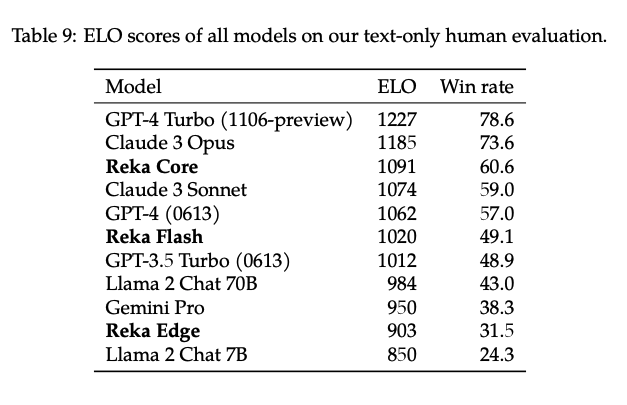

Model evals are hard, that is why we are shedding some light on how we do it at Reka.

Along with the paper, we are releasing a dataset of challenging prompts with a golden reference and evaluation protocol using Reka Core as a judge.

3

9

92

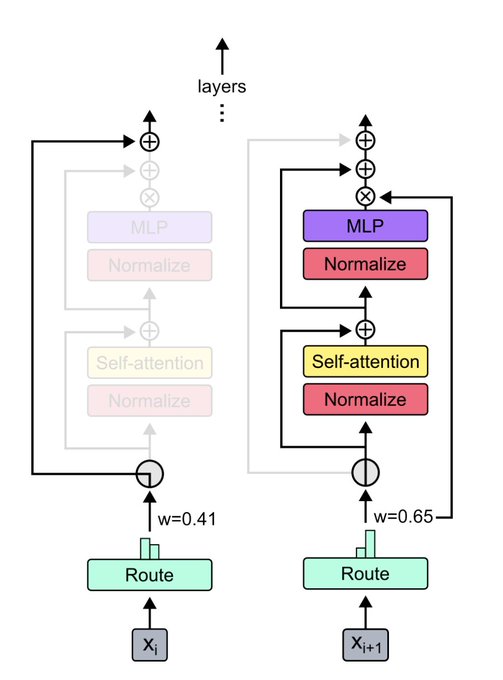

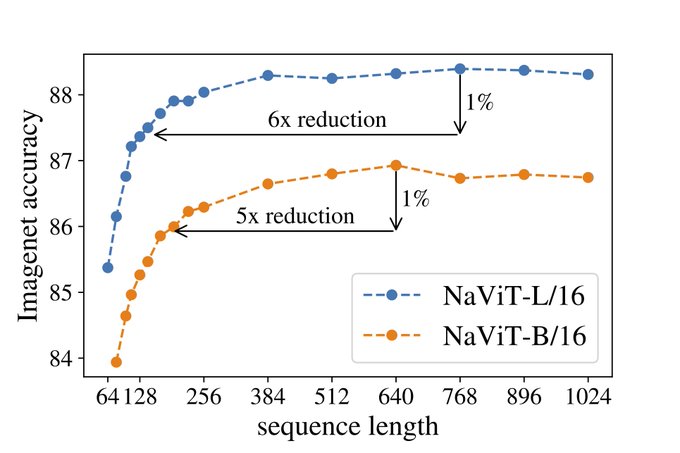

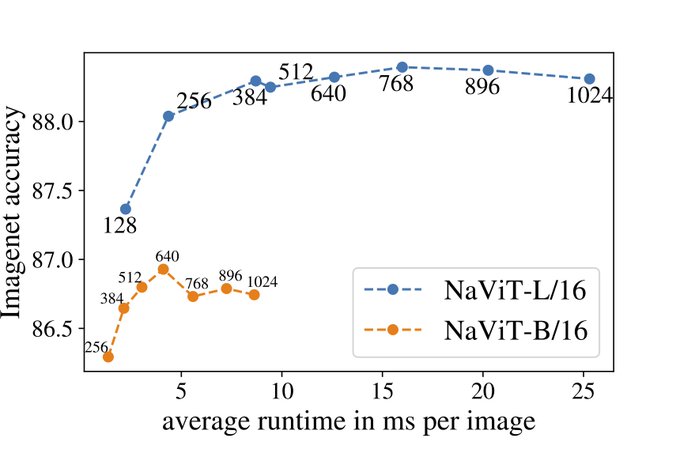

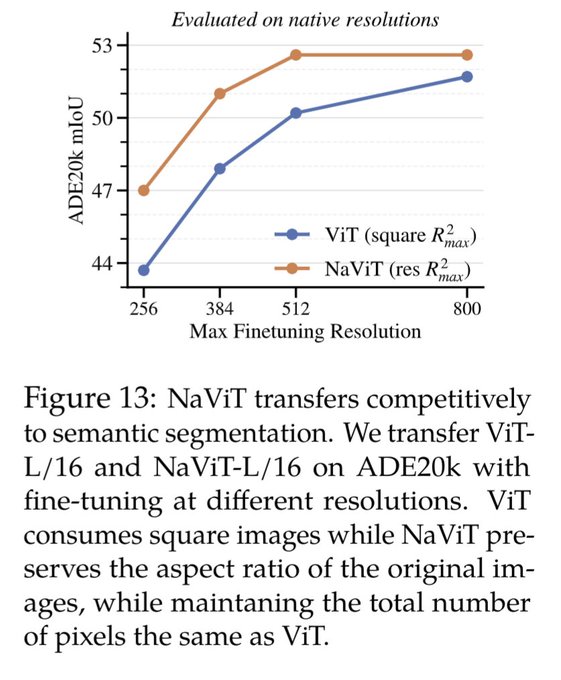

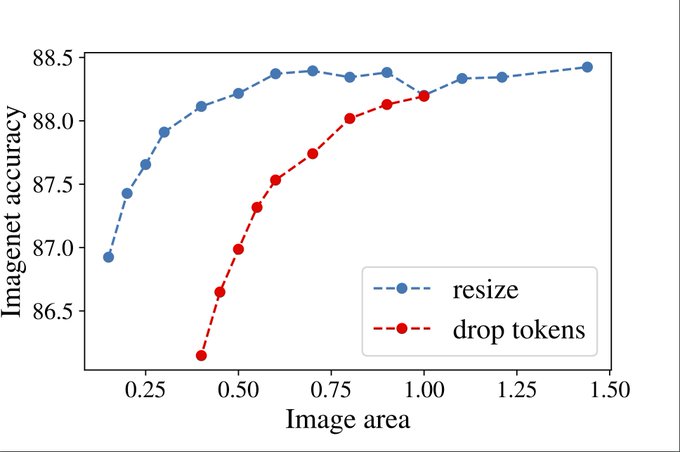

Do you want to accelerate your vision model without losing quality?

NaViT takes images of arbitrary resolutions and aspect ratios - no more resizing to square with constant resolution.

One cool implication is that you can control compute/quality tradeoff by resizing:
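To make the compute/quality trade-off concrete, here is a rough sketch of how the number of patch tokens changes when an image is downscaled with its aspect ratio preserved. The patch size of 16, the helper names `patch_tokens` and `resized_tokens`, and the rounding-up of partial patches are illustrative assumptions, not details taken from NaViT itself.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: number of patch tokens for a height x width image,
// assuming square patches and rounding partial patches up.
std::int64_t patch_tokens(std::int64_t height, std::int64_t width,
                          std::int64_t patch = 16) {
    assert(height > 0 && width > 0 && patch > 0);
    const std::int64_t rows = (height + patch - 1) / patch;
    const std::int64_t cols = (width + patch - 1) / patch;
    return rows * cols;
}

// Hypothetical helper: token count after scaling both sides by `scale`,
// which keeps the aspect ratio unchanged.
std::int64_t resized_tokens(std::int64_t height, std::int64_t width,
                            double scale, std::int64_t patch = 16) {
    assert(scale > 0.0);
    const auto h = static_cast<std::int64_t>(std::llround(height * scale));
    const auto w = static_cast<std::int64_t>(std::llround(width * scale));
    // Clamp to at least one pixel per side so tiny scales stay valid.
    return patch_tokens(h > 0 ? h : 1, w > 0 ? w : 1, patch);
}
```

Under these assumptions, halving each side of a 480x640 image cuts the token count from 1200 to 300, so the sequence length drops by 4x while the aspect ratio stays the same.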

2

11

57

@giffmana

@m__dehghani

@neilhoulsby

@_basilM

I really enjoyed the fact that you were always very direct in giving constructive feedback in a respectful way, but also easy to convince with sound arguments.

Most people don't care or they are afraid to hurt feelings.

2

0

41

We are just starting 🔥

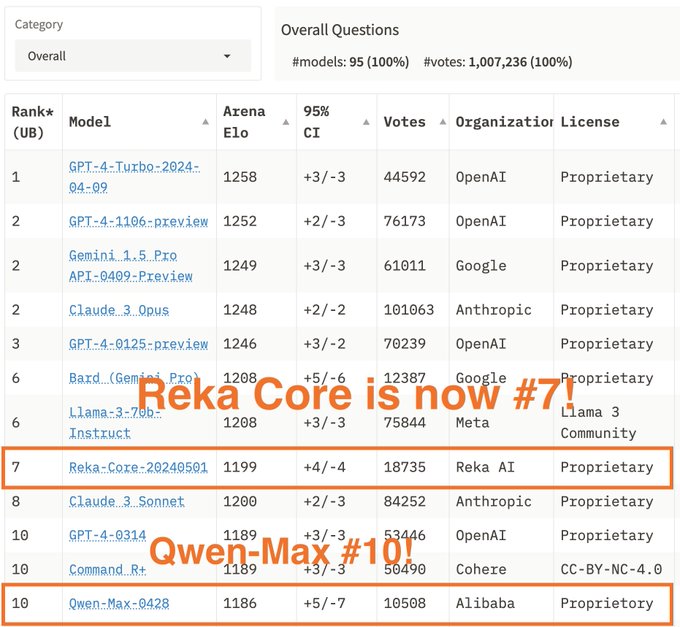

Exciting update - the latest leaderboard result is here!

We have collected 30K fresh votes for three strong new models from @RekaAILabs Reka Core and @Alibaba_Qwen Qwen Max/110B!

Reka Core has climbed to the 7th spot, matching Claude-3 Sonnet and almost Llama-3 70B! Meanwhile,

12

48

289

2

0

33

It's official now: I am delighted to announce that in the summer I will start working at @GoogleAI in Zurich full time!

3

1

31

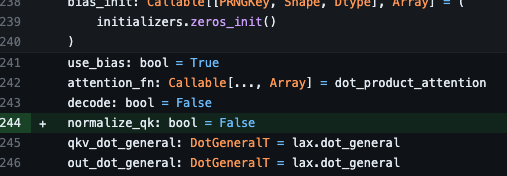

TL;DR I was too lazy to keep a fork of MHA, and I was too tired of my exps blowing up due to too high LR.

I am still amazed how useful this is even for small models - I can pre-train [Na]-ViT with 1e-2 (previously it blew up at ~5e-3).

Try it out!

1

5

30

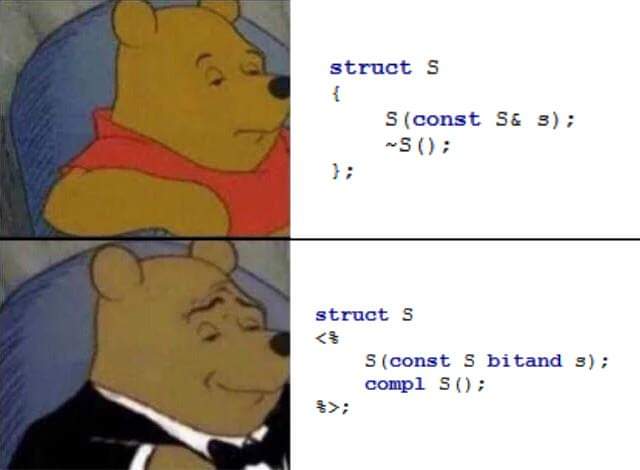

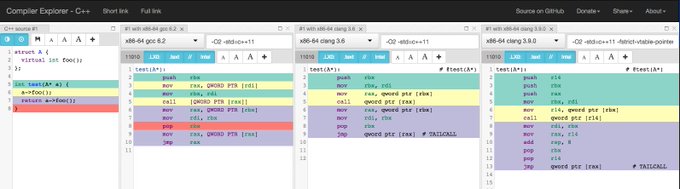

C++ IDE feature request: change the color of arguments passed by non-const reference. This way it is much simpler to see what is being modified without needing conventions like using pointers instead of references (Google coding style). CC @clion_ide @visualc
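A minimal sketch of the call-site ambiguity the request above is about. The function names (`bump_ref`, `bump_ptr`, `peek`, `demo`) are hypothetical and only serve the illustration.

```cpp
#include <cassert>

// Output parameter taken by non-const reference: the call site `bump_ref(n)`
// looks the same as a call that only reads `n`.
void bump_ref(int& value) { value += 1; }

// Output parameter taken by pointer: the call site `bump_ptr(&n)` makes the
// possible modification visible.
void bump_ptr(int* value) {
    assert(value != nullptr);
    *value += 1;
}

// Reads its argument only; indistinguishable from bump_ref at the call site.
int peek(const int& value) { return value; }

// Hypothetical demo: returns the final counter value.
int demo() {
    int n = 0;
    (void)peek(n);  // does not modify n
    bump_ref(n);    // modifies n, but reads just like the line above
    bump_ptr(&n);   // modifies n, and the & hints at it
    return n;
}
```

Without opening the declarations, a reader cannot tell `peek(n)` from `bump_ref(n)`, which is the gap an IDE highlight would close.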

4

1

30

@chandlerc1024

@shafikyaghmour

@lefticus

@lunasorcery

@dascandy42

@mattgodbolt

Yes, it is safe to assume this, and this is exactly what happens with -fstrict-vtable-pointers in clang. As far as legality goes: @zygoloid said we can do so, so I guess we can.

2

0

14

@giffmana

@boazbaraktcs

One could say her tweets could use a bit of peer review before publishing

2

0

15

@johnregehr

This one is particularly fun if you turn on NDEBUG

    if (a == 42)
      doSomething();
    else
      assert(false && "a should be 42");
    otherCode();

5

1

13

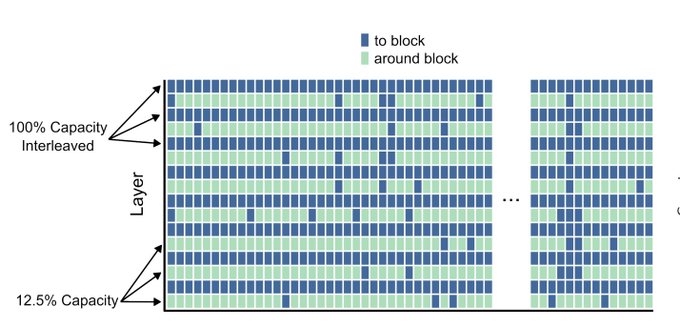

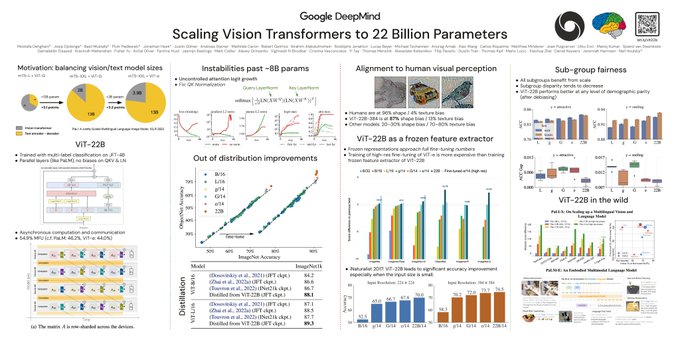

@m__dehghani

@_basilM

@GoogleAI

@neilhoulsby

@JonathanHeek

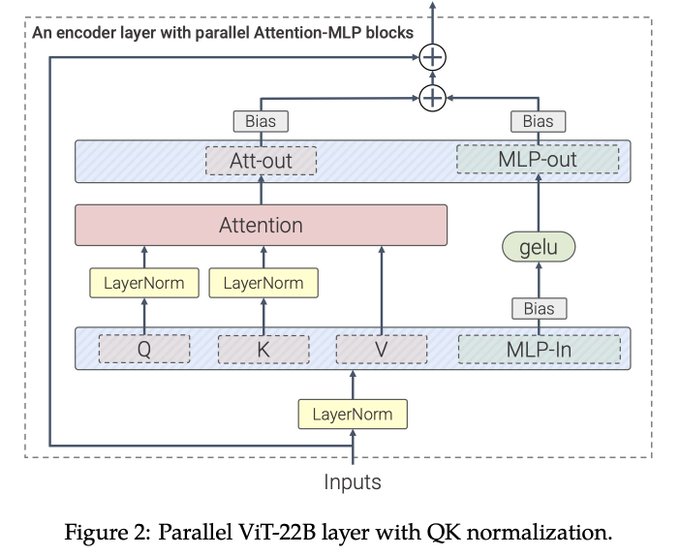

Secondly, ViT-22B omits biases in the QKV projections and LayerNorms, which increases utilization by 3%. In comparison, biases make up around 0.1% of the total weights of large models. The non-proportional cost of training biases comes from linear scaling in bandwidth connectivity: (3/5)

1

0

14

Lastly, we normalize the Queries and Keys (QK normalization). This change was essential for training Vision Transformers at such scale - even with 8B parameters, numerical issues caused training instabilities without it. (5/5)

Pop quiz: Why must we reduce learning rate as we scale transformers?

@jmgilmer has the answer - and the solution!

Attention logits can grow uncontrollably, destabilizing training. The fix: Layer norm on queries and keys!

(Follow Justin and 👀 for upcoming paper on this topic!)
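A rough sketch of why layer norm on queries and keys keeps attention logits in check. It assumes a layer norm without learned scale or bias and a single query/key pair; the function names are hypothetical, and this is an illustration of the idea rather than the ViT-22B implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Layer norm without learned scale or bias (a simplifying assumption):
// subtract the mean and divide by the standard deviation.
std::vector<double> layer_norm(const std::vector<double>& x, double eps = 1e-6) {
    assert(!x.empty());
    double mean = 0.0;
    for (double v : x) mean += v;
    mean /= static_cast<double>(x.size());
    double var = 0.0;
    for (double v : x) var += (v - mean) * (v - mean);
    var /= static_cast<double>(x.size());
    const double inv_std = 1.0 / std::sqrt(var + eps);
    std::vector<double> out;
    out.reserve(x.size());
    for (double v : x) out.push_back((v - mean) * inv_std);
    return out;
}

// Scaled dot-product attention logit for one query/key pair.
double attention_logit(const std::vector<double>& q, const std::vector<double>& k) {
    assert(q.size() == k.size() && !q.empty());
    double dot = 0.0;
    for (std::size_t i = 0; i < q.size(); ++i) dot += q[i] * k[i];
    return dot / std::sqrt(static_cast<double>(q.size()));
}

// Same logit, but with layer norm applied to the query and key first.
double qk_norm_logit(const std::vector<double>& q, const std::vector<double>& k) {
    return attention_logit(layer_norm(q), layer_norm(k));
}
```

Scaling a query by 1000 scales the raw logit by 1000, while the normalized logit barely moves. With this unscaled layer norm each normalized vector has norm at most sqrt(d), so the logit magnitude cannot exceed sqrt(d) for head dimension d.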

11

27

210

1

0

12

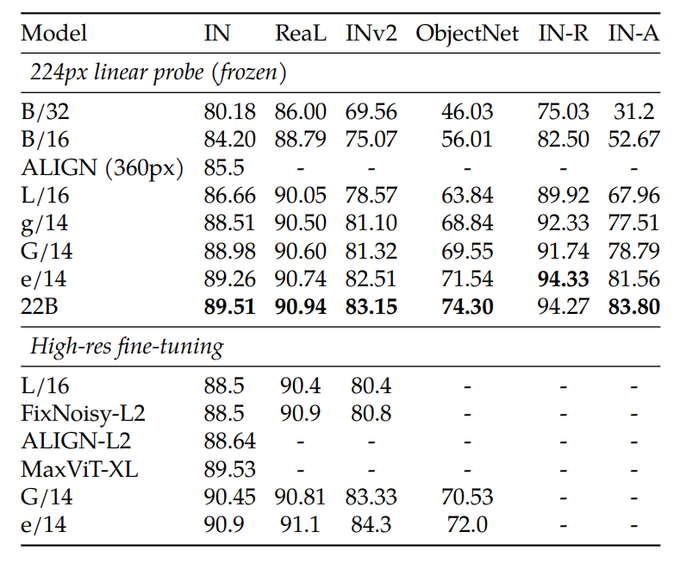

What is pretty mind blowing to me is that training a linear probe of 22B is cheaper than high resolution full fine-tuning of ViT-e (4B), while the results are pretty close. This makes it so much easier to use, and you can even precompute embeddings!

One thing that @__kolesnikov__ and I looked at in the context of ViT-22B is linear probes.

The headline number: a linear probe on ImageNet gets 89.51%, which outperforms most existing fine-tuned models.

Raw i1k is slowing down, but generalization is not!

1

5

50

2

0

12

To improve efficiency of training we also heavily optimized sharding of the model, as well as the implementation (Asynchronous Parallel Linear operations). Just that last trick alone made the training 2x faster!

1

0

11

@BartoszMilewski

I think it is more about a culture of scam, similar to not pricing in sales tax, or pretending the price is lower with hidden extra fees.

1

0

11

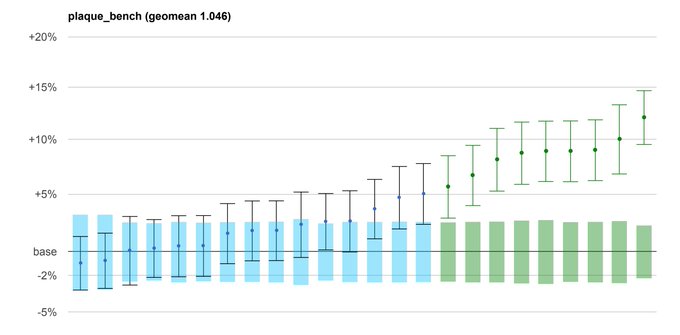

I am excited to share that Devirtualization in clang (-fstrict-vtable-pointers) shows overall 0.8% speedup on a range of benchmarks!

For more, check out my and @KPszeniczny's slides from the @llvmorg dev meeting.
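A minimal sketch of the kind of pattern this optimization targets, based on the general idea that an object's vtable pointer does not change during its lifetime. The types and functions (`Shape`, `Triangle`, `opaque`, `sides_twice`) are hypothetical; to compare generated code, one could build with `clang++ -O2` with and without `-fstrict-vtable-pointers`.

```cpp
#include <cassert>

struct Shape {
    virtual ~Shape() = default;
    virtual int sides() const = 0;
};

struct Triangle final : Shape {
    int sides() const override { return 3; }
};

// Hypothetical stand-in for a function whose body the optimizer cannot see.
// In a real program it would be defined in another translation unit.
void opaque(const Shape& shape);

// Two virtual calls on the same object with an opaque call in between.
// Without extra guarantees the compiler has to reload the vtable pointer
// after opaque(); under the assumption that an object's vtable pointer does
// not change during its lifetime, the first load can be reused.
int sides_twice(const Shape& shape) {
    const int first = shape.sides();
    opaque(shape);
    const int second = shape.sides();
    return first + second;
}

// Trivial definition so this sketch links as a single file.
void opaque(const Shape&) {}
```

Because `opaque` is defined in the same file here, an optimizer may simply inline it; moving it to a separate translation unit gives a fairer comparison.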

0

1

10

Doing some experiments with devirtualization on @mattgodbolt's Compiler Explorer.

Comparing output is so awesome!

0

5

9

@shafikyaghmour

These questions be like "without checking, what is the value of x <insert code for x = fibonacci(257229471) ^ 47382627284 * 63826282>"

1

0

9

Google is using thousands of machines, each probably costing 10k (10M total) just to serve a couple of queries for you.

1

0

8

Thanks everyone for helping! This summer I will do some work on @TensorFlow at @GoogleBrain in Mountain View.

0

0

7

@giffmana

Same, I know this Belgian dude that is a professional shitposter on Twitter, but in his free time he writes goated computer vision papers

1

0

7

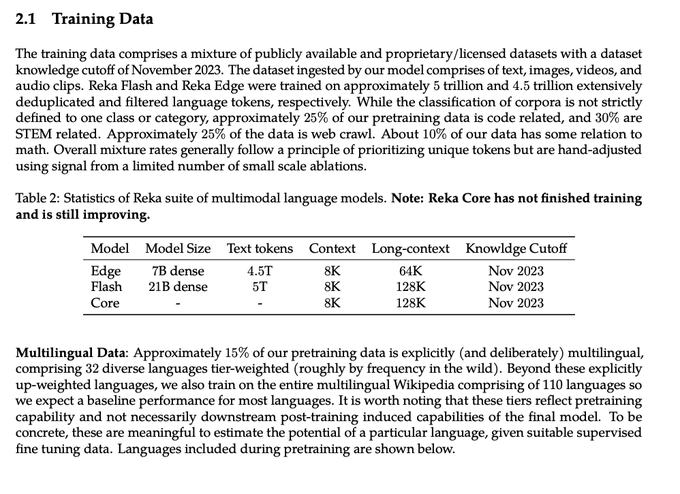

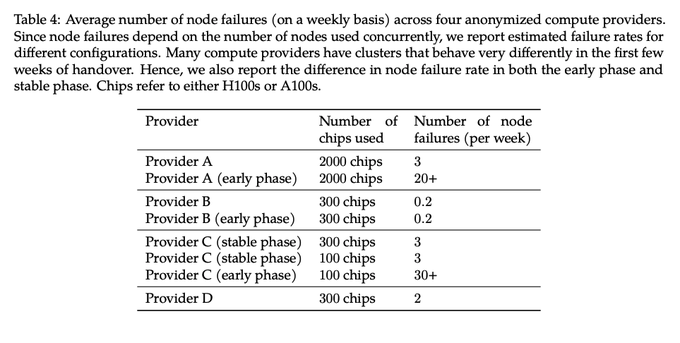

Infra:

- pytorch (sorry JAX, maybe next time <3)

- peak 2.5k H100 and 2.5k A100 starting in Dec 23

- Ceph filesystem

- Kubernetes

- interesting graph about stability of compute providers (extending @YiTayML's hardware lottery)

1

0

7

@RickLamers

@alexgraveley

This is what I got:

Note that we didn't train it to do geoguesser, but you should expect that it's good on famous landmarks. In general, we don't want our model to enable stalkers.

2

0

6

Last week I had a chance to visit @zipline in Rwanda and see autonomous drones saving people's lives.

The package with medical materials (like blood) is packed within a minute after receiving an emergency. Within the next minute the drone is in the air at ~100km/h.

1

0

6

@YiTayML

@RekaAILabs

Openness (in publishing) and honesty (in recognizing @RekaAILabs is not the best yet). What a breath of fresh air compared to all the hype. And a great set of evals too!

1

1

17

0

0

6

@giffmana

Do they define FLOP to be 32? Or do vector instructions count as one floating point operation? If not, they might get FMADD quickly

1

0

5