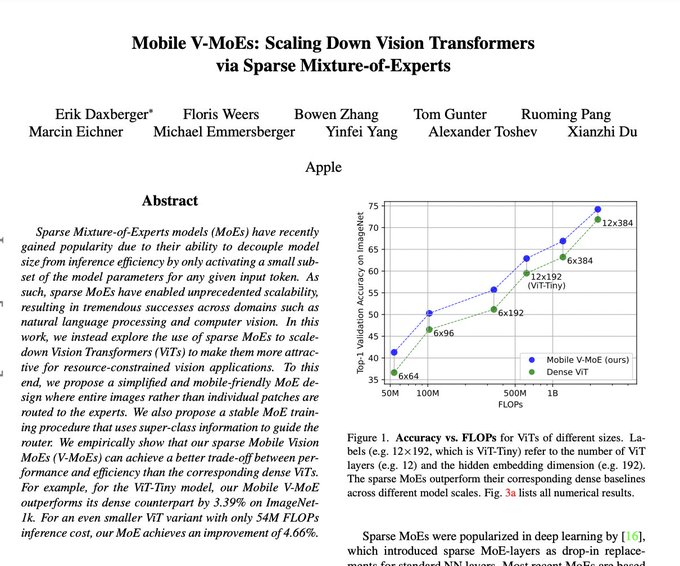

(V-)MoEs working well at the small end of the scale spectrum. Often we actually saw the largest gains with small models, so it's a promising direction.

Also, the authors seem to have per-image routing working well, which is nice.

Replies

@neilhoulsby

@_akhaliq

Does a 5% gain seem worth the incredible amount of additional complexity?
