Peter Chen

@peterxichen

Followers: 2,813

Following: 1,178

Media: 5

Statuses: 124

Covariant CEO and Co-Founder. Previously @OpenAI, @UCBerkeley PhD.

Joined December 2017

Excited to announce our Series B and join forces with @mavolpi @IndexVentures and @JordanJacobs10 @radicalvcfund to push the boundary of AI Robotics in the real world!!

We’ve raised $40m in Series B funding led by @IndexVentures w/ AI-focused @Radicalvcfund + existing investor @AmplifyPartners. Grateful for the support of our investors, customers + partners as we continue to bring AI Robotics to the real world!

5

28

251

6

6

82

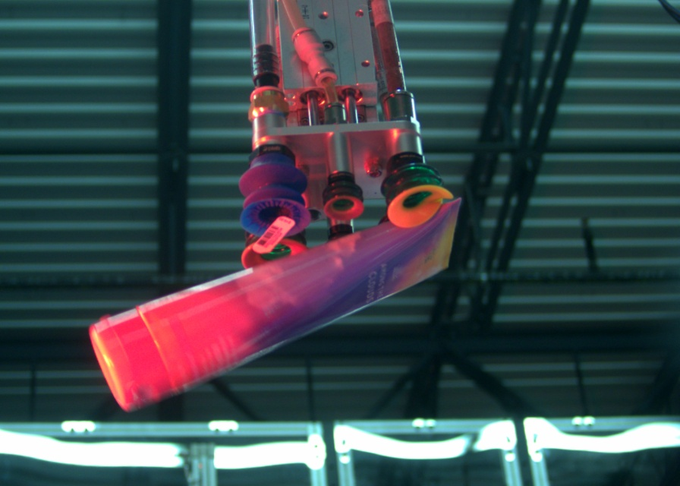

What separates lab robot demos from robots in production? Extremely high reliability. This requires our model to robustly handle many long-tail scenarios like the one in the attached picture, where one stray barcode label can tank the 99.95% sortation accuracy requirement. (1/n)

1

9

62

Text foundation models (LLMs) have an incredible ability to adapt to new problems through in-context learning. We show that it’s possible for robots to learn in context as well, in our latest scaling update of RFM-1, Covariant’s robotics foundation model. (1/n)

1

8

58

What makes the training data for RFM-1 unique? A few properties that distinguish it from typical lab data: 1. real-world complexity: picking from extremely cluttered scenes where item occlusion presents a challenge for reliability. 2. high-speed handling: the dynamics of

0

4

34

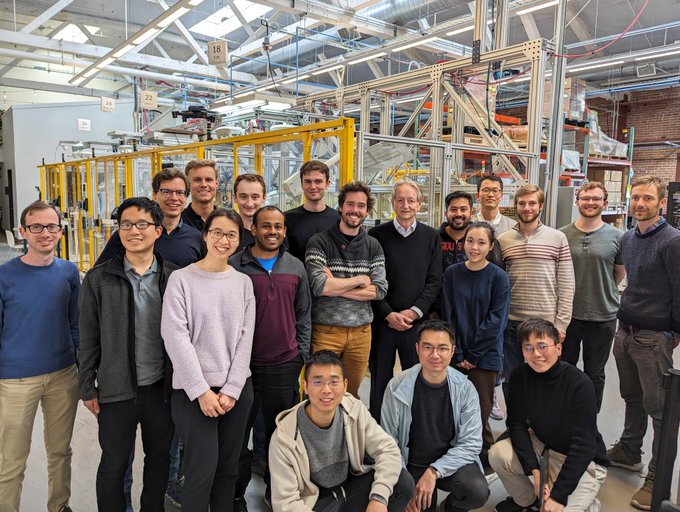

It was great to show @geoffreyhinton foundation models meeting robotics at Covariant HQ!

Many of the groundbreaking advances we've witnessed over the past decade, spanning computer vision, speech recognition, protein folding prediction, and beyond, hinge on the deep learning work conducted by @geoffreyhinton, who has fundamentally changed the focus and

0

6

41

1

3

33

Congrats @chelseabfinn @hausman_k @svlevine on starting a new company. We need more people to work on solving the physical world data challenge and bring foundation models to robotics!

0

0

25

Had a lot of fun diving deep into how AI Robots learn with @CadeMetz & @satariano

A robot in Germany shows that machines can learn to do the job of a human (*learn* being the key word): (with the great @satariano)

1

21

68

0

4

24

Generative models can drastically accelerate database systems! A new learning task was introduced: Range Density Estimation. For more details, see the thread 👇

Can self-supervised learning help computer systems?

Our #ICML2020 paper equips autoregressive models to optimize databases. We introduce a new task, range density: estimate the prob. of variables in ranges. A super simple trick gives 10-100x gains.

👇 1/

1

28

151

1

0

15

Thrilled to be working together!

Robotics has been a challenging field for years. AI has changed the game and the time is now. Today, we are announcing our investment in @CovariantAI. Excited for our journey with @pabbeel and Peter Chen:

3

25

210

0

0

14

Thanks @alexgkendall -- we also love the work that @wayve_ai is doing to bring foundation models to autonomous driving. It's going to be an exciting year for robotics!

Exciting result showing how robust, accessible and trustworthy robotics is becoming with AI foundation models. And I'm sure lots more to come.. congratulations @pabbeel @peterxichen 🎉

2

3

50

1

1

13

This is an amazing effort to collect more robotics data. I especially love that they have both structured data like multi-view stereo and more modern modalities like text annotations. The key gap in robotics is data and it’s great to see the progress. Congrats @SashaKhazatsky

0

0

8

Thanks @EdLudlow for having me - it's great to talk about how AI advances make bringing robots to the real world possible!

Giving Robots the Ability to Reason

Today we talked to @CovariantAI on @technology about their foundation model and why tackling the AI behind advanced robotics in isolation is a good strategy. Thanks for coming on the show @peterxichen

2

2

5

0

2

8

One more non-Covariant research mention: this type of ability to adapt a policy in context also has a parallel in humanoid locomotion. See the amazing work by @ir413 casting humanoid locomotion as a next token prediction problem (similar to RFM-1): We just

0

0

4

@Joe__Black__

We expect RFM-1 to power humanoid robots and different kinds of hands (like those with fingers) as well! We would need to collect more targeted data for those hardware form factors, which will become easier as they become more mature.

0

0

1

@goodfellow_ian

Good point! "We made sure that the sets of writers of the training set and test set were disjoint." So yeah, this makes the evaluation less interpretable

0

0

2

@goodfellow_ian

I think it's training on the test set, so it uses more data than other offline methods. But it's not cheating as long as it only takes one pass through the data and doesn't evaluate NLL on any image that it has already performed gradient descent on

2

0

2

@vmcheung

@josh_tobin_

Congrats @vmcheung @josh_tobin_ - look forward to seeing what you build together!

0

0

2