Ian Goodfellow

@goodfellow_ian

Followers: 300,071 · Following: 1,116 · Media: 138 · Statuses: 2,764

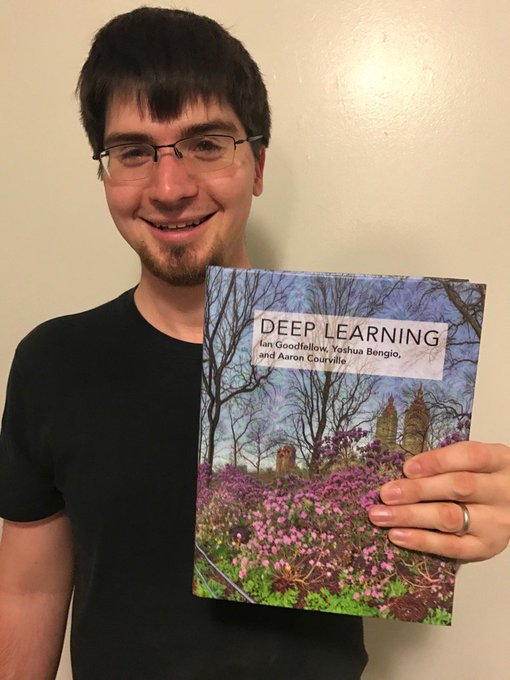

Research Scientist at DeepMind. Opinions my own. Inventor of GANs. Lead author of

San Francisco, CA

Joined September 2016


I'm excited to announce that I've joined DeepMind! I'll be a research scientist in @OriolVinyalsML's Deep Learning team.


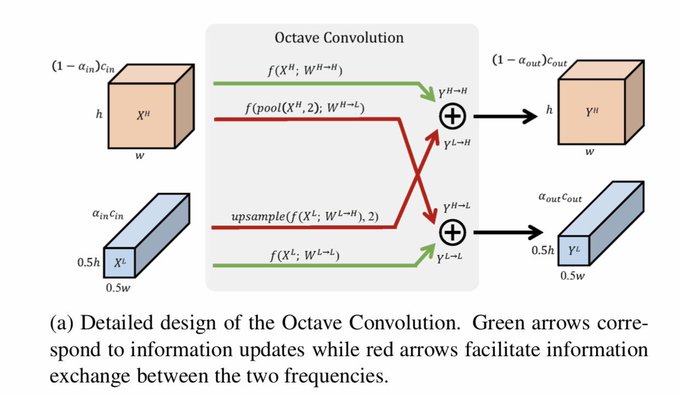

By looking at this image, you can see how sensitive your own eyes are to contrast at different frequencies (taller apparent peaks=more sensitivity at that frequency). It's like a graph that is made by perceiving the graph itself. h/t @catherineols


Whoa! It turns out that famous examples of NLP systems succeeding and failing were very misleading. “Man is to king as woman is to queen” only works if the model is hardcoded not to be able to say “king” for the last word.

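To make the point about the analogy benchmark concrete, here is a toy sketch. The 3-d vectors below are made up for illustration (they are not real word2vec embeddings), and `analogy` is a hypothetical helper that answers "a is to b as c is to ?" with the nearest word to b - a + c. With these vectors the nearest word is "king" itself; only when the query words are excluded from the candidates does "queen" come out.

```python
import math

# Hypothetical toy 3-d embeddings; axes loosely read as ("person", "female", "royalty").
# Real word2vec vectors have hundreds of dimensions; these values are made up.
EMB = {
    "man":   [1.0, 0.0, 0.0],
    "woman": [1.0, 0.2, 0.0],
    "king":  [1.0, 0.0, 1.0],
    "queen": [1.0, 1.0, 1.0],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, emb, exclude_inputs=True):
    """Answer "a is to b as c is to ?" with the word nearest to b - a + c."""
    target = [bi - ai + ci for ai, bi, ci in zip(emb[a], emb[b], emb[c])]
    # The convention the tweet criticizes: query words are never allowed as answers.
    candidates = [w for w in emb if not (exclude_inputs and w in (a, b, c))]
    return max(candidates, key=lambda w: cosine(target, emb[w]))


print(analogy("man", "king", "woman", EMB, exclude_inputs=False))  # king
print(analogy("man", "king", "woman", EMB, exclude_inputs=True))   # queen
```

In this toy, the offset woman - man is small relative to the royalty component, so b - a + c lands closest to b; excluding the inputs hides that.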

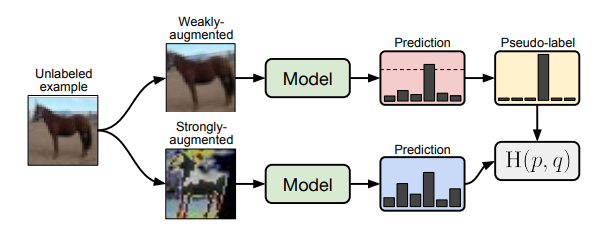

The quiet semisupervised revolution continues

FixMatch: focusing on simplicity for semi-supervised learning and improving state of the art (CIFAR 94.9% with 250 labels, 88.6% with 40).

Collaboration with Kihyuk Sohn, @chunliang_tw, @ZizhaoZhang, Nicholas Carlini, @ekindogus, @Han_Zhang_, @colinraffel


This new family of GAN loss functions looks promising! I'm especially excited about Fig 4-6, where we see that the new loss results in much faster learning during the first several iterations of training. I implemented the RSGAN loss on a toy problem and it worked well.

My new paper is out! "The relativistic discriminator: a key element missing from standard GAN" explains how most GANs are missing a key ingredient which makes them so much better and much more stable! #Deeplearning #AI

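A minimal sketch of the RSGAN loss pair, as we understand it from the relativistic-discriminator paper: the discriminator scores a real sample relative to a paired fake one via sigmoid(C(x_r) - C(x_f)), and the generator gets the mirrored objective. It works on plain lists of raw critic scores; the names `rsgan_losses` and `log_sigmoid` are hypothetical, not from any library.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def rsgan_losses(c_real, c_fake):
    """Relativistic standard GAN (RSGAN) losses.

    c_real, c_fake: raw (pre-sigmoid) critic outputs C(x) on paired real and
    generated samples. The discriminator is pushed to make each real sample
    look "more real" than its paired fake: D(x_r, x_f) = sigmoid(C(x_r) - C(x_f)).
    """
    pairs = list(zip(c_real, c_fake))
    n = len(pairs)
    d_loss = -sum(log_sigmoid(r - f) for r, f in pairs) / n  # discriminator loss
    g_loss = -sum(log_sigmoid(f - r) for r, f in pairs) / n  # generator loss
    return d_loss, g_loss


d_loss, g_loss = rsgan_losses([2.0, 1.5], [-1.0, 0.5])
print(d_loss, g_loss)
```

When the critic cannot tell real from fake (equal scores), both losses equal log 2; the generator's loss falls only by raising fake scores relative to real ones.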

Self-attention for GANs. No more problems with losing track of how many faces the generator has drawn on the dog.

New preprint by Han Zhang, with @goodfellow_ian and Dimitris Metaxas. Substantially improves the state of the art on the conditional ImageNet synthesis task.


This is really cool. Some of my PhD labmates worked on ML for compression back in the pretraining era, and I remember it being really hard to get a compression advantage.

Check out our new work on face-vid2vid, a neural talking-head model for video conferencing that is 10x more bandwidth-efficient than H.264. arxiv · project · video @tcwang0509 @arunmallya #GAN


TensorFuzz automates the process of finding inputs that cause some specific testable behavior, like disagreement between float16 and float32 implementations of a neural network.

Neural networks are notoriously hard to debug. @gstsdn has developed a new debugging methodology by adapting traditional coverage-guided fuzzing techniques to neural networks.

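The kind of testable property mentioned above, float16 and float32 disagreeing, can be shown with a toy. TensorFuzz itself uses coverage guidance; this sketch swaps in plain random search, and `toy_net` is a hypothetical one-line "network" computing (x + 1) - x, which is mathematically always 1. Python's `struct` module (format `'e'` for half precision, `'f'` for single) is used to round after every operation.

```python
import random
import struct


def round_to(fmt, v):
    """Round a Python float to float16 ('e') or float32 ('f') by packing it."""
    return struct.unpack(fmt, struct.pack(fmt, v))[0]


def toy_net(x, fmt):
    """Hypothetical toy computation: (x + 1) - x, rounded after each op."""
    x = round_to(fmt, x)
    s = round_to(fmt, x + 1.0)
    return round_to(fmt, s - x)


def random_search(rng, trials=10_000, lo=0.0, hi=60_000.0):
    """Return the first sampled input where float16 and float32 disagree, else None.

    Plain random search, not coverage-guided fuzzing; the range stays below
    float16's maximum (65504) so packing never overflows.
    """
    for _ in range(trials):
        x = rng.uniform(lo, hi)
        if toy_net(x, "e") != toy_net(x, "f"):
            return x
    return None


x = random_search(random.Random(0))
print(x, toy_net(x, "e"), toy_net(x, "f"))
```

Above 2048, float16 can no longer represent every integer, so x + 1 rounds away and the float16 result drifts from 1 while float32 still returns 1 for most inputs.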

#CVPR2018 I will teach an Introduction to GANs at 8:45 AM in Room 150-ABC at the Perception Beyond the Visible Spectrum workshop. Slides available at


When I invented adversarial training as a defense against adversarial examples, I focused on making it as cheap and scalable as possible. Eric and collaborators have now upgraded the original cheap version to compete with newer, more expensive versions.

1/ New paper on an old topic: turns out, FGSM works as well as PGD for adversarial training!*

*Just avoid catastrophic overfitting, as seen in picture

Paper:

Code:

Joint work with @_leslierice and @zicokolter, to be at #ICLR2020

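A minimal sketch of cheap FGSM adversarial training, assuming a tiny logistic-regression model in pure Python so the input gradient has a closed form. Following the "FGSM from a random start" idea, it draws a random point in the L-infinity ball of radius eps, takes one signed-gradient step of size alpha, and clips back into the ball. All function names, the model, and the 1.25·eps step size in the usage line are illustrative assumptions, not the paper's code.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z)) if z >= 0 else math.exp(z) / (1.0 + math.exp(z))


def sign(v):
    return (v > 0) - (v < 0)


def margin(w, b, x, y):
    """y * (w.x + b) for a label y in {-1, +1}."""
    return y * (sum(wi * xi for wi, xi in zip(w, x)) + b)


def logistic_loss(w, b, x, y):
    """Numerically stable log(1 + exp(-margin))."""
    m = margin(w, b, x, y)
    return math.log1p(math.exp(-m)) if m >= 0 else -m + math.log1p(math.exp(m))


def input_grad(w, b, x, y):
    """Gradient of logistic_loss with respect to the input x."""
    coeff = -y * sigmoid(-margin(w, b, x, y))
    return [coeff * wi for wi in w]


def fgsm_random_start(w, b, x, y, eps, alpha, rng):
    """One FGSM step of size alpha from a random start, clipped to the eps-ball."""
    delta = [rng.uniform(-eps, eps) for _ in x]
    g = input_grad(w, b, [xi + di for xi, di in zip(x, delta)], y)
    delta = [max(-eps, min(eps, di + alpha * sign(gi))) for di, gi in zip(delta, g)]
    return [xi + di for xi, di in zip(x, delta)]


def adversarial_train_step(w, b, x, y, eps, alpha, lr, rng):
    """One SGD step on the loss evaluated at the FGSM-perturbed input."""
    x_adv = fgsm_random_start(w, b, x, y, eps, alpha, rng)
    coeff = -y * sigmoid(-margin(w, b, x_adv, y))  # d loss / d (w.x + b)
    w = [wi - lr * coeff * xi for wi, xi in zip(w, x_adv)]
    return w, b - lr * coeff


rng = random.Random(0)
w, b = adversarial_train_step([0.5, -1.0], 0.1, [1.0, 2.0], 1, eps=0.1, alpha=0.125, lr=0.5, rng=rng)
```

The appeal is cost: one gradient evaluation per example, versus several for a multi-step PGD attack.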

I suspect that peer review *actually causes* rather than mitigates many of the “troubling trends” recently identified by @zacharylipton and Jacob Steinhardt:


“Be careful what you wish for”

Describe programming in only six words.

We’ll RT all the best ones.

Ours:

Turning ideas and caffeine into code. #ProgrammingIn6Words #wednesdaywisdom


The "assistant professor" title seems especially galling to me: who exactly is the assistant professor assisting? They do the full job.


David has released a new paper from an old collaboration. Glad to see it out!


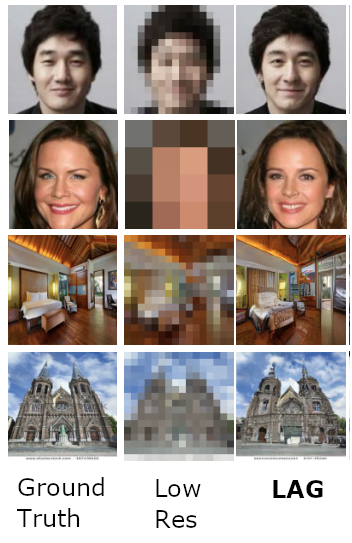

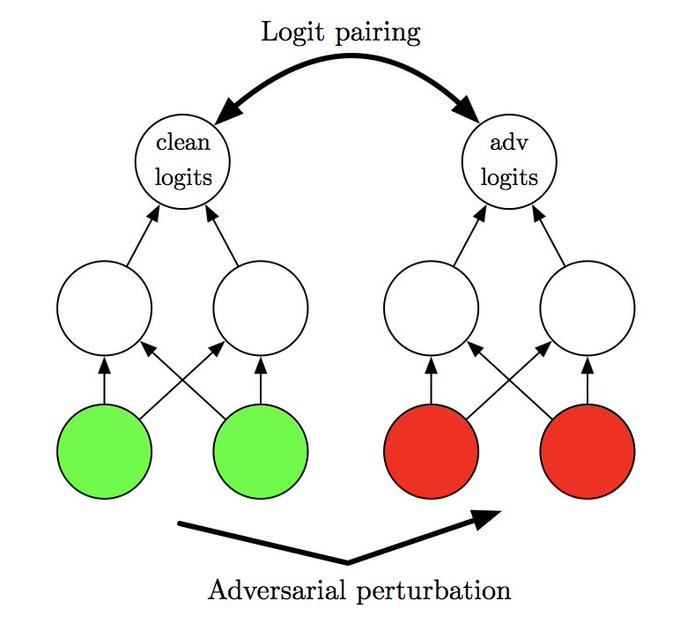

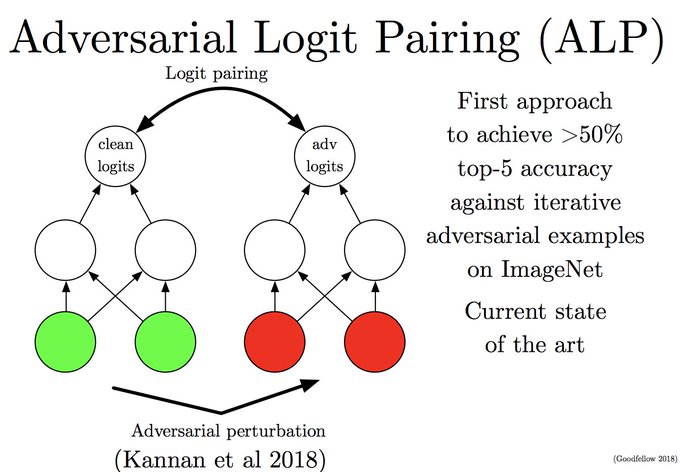

Check out Adversarial Logit Pairing, the new state-of-the-art defense against adversarial examples on ImageNet, by @harinidkannan, @alexey2004 and me:


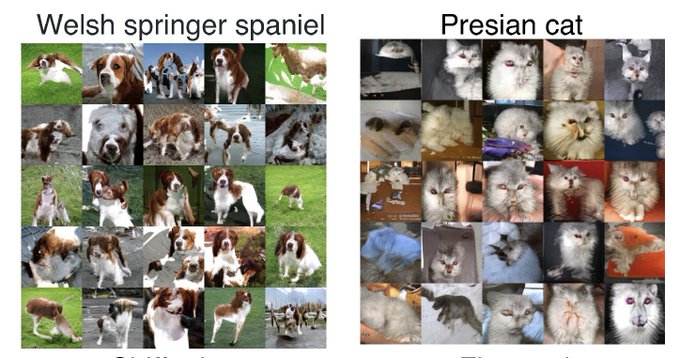

I originally thought of GANs as an unsupervised learning algorithm, but so far, to create recognizable object categories, they've needed a supervision signal / labeled images. This new work shows how to get them to work well with few labels.

How to train SOTA high-fidelity conditional GANs using 10x fewer labels? Using self-supervision and semi-supervision! Check out our latest work at @GoogleAI @ETHZurich @TheMarvinRitter @mtschannen @XiaohuaZhai @OlivierBachem @sylvain_gelly


Colin was a senior research scientist in my team at Google. He's done great technical work, especially on attention models and semi-supervised / transfer learning, and has been an excellent mentor for many Brain residents / interns. Will definitely be a great PhD advisor.

I'm starting a professorship in the CS department at UNC in fall 2020 (!!) and am hiring students! If you're interested in doing a PhD @unccs please get in touch. More info here:


#CVPR2018 Check out the all-day tutorial on GANs tomorrow: I'll speak at 9AM giving an introduction to GANs. Many great speakers throughout the day!


Interpretation of a machine learning model by a human involves both the model and the human. Human misconceptions can cause as much trouble as any property of the model.


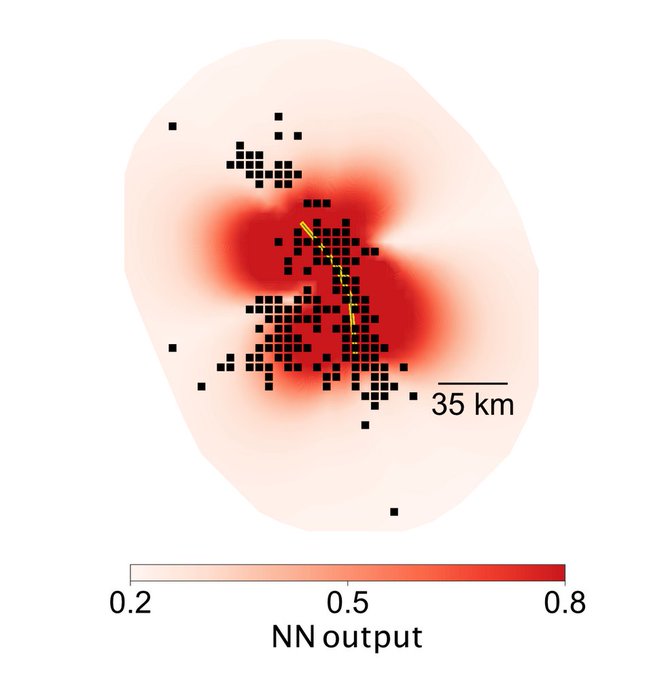

Our work on adversarial examples for the human vision system has been accepted to #COSYNE19:


It’s interesting to see the pendulum swing back to representation learning. During my PhD, most of my collaborators and I were primarily interested in representation learning as a byproduct of sample generation, not sample generation itself.


Check out @fermatslibrary's Librarian, a Chrome extension that automatically shows comments for arXiv papers: I've asked for this feature for a long time!


In our previous collaborations, I've benefited a lot from using David's machine learning frameworks. If you're a researcher or student looking for both simplicity and customizability, definitely check out Objax.


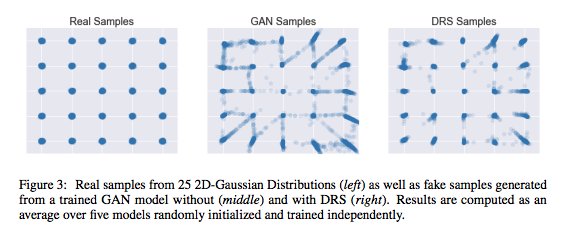

The discriminator often knows something about the data distribution that the generator didn't manage to capture. By using rejection sampling, it's possible to knock out a lot of bad samples.

New preprint by @smnh_azadi, @catherineols, Trevor Darrell, @goodfellow_ian, and me. We perform rejection sampling on a trained GAN generator using a GAN discriminator. This helps quite a lot for not much effort.

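The rejection-sampling idea above rests on a standard fact: for an optimal discriminator, D/(1 - D) = p_data/p_g, so exp(logit) is a density ratio, and accepting a generated sample with probability exp(logit - max logit) recovers p_data. Below is a minimal sketch of that basic idea only; the preprint describes additional practical adjustments not shown here. The generator (too wide, N(0, 2²)) and the closed-form "discriminator" logit (standing in for a trained network, with data N(0, 1)) are hypothetical toys.

```python
import math
import random


def toy_generator(rng):
    """Hypothetical generator that is too wide: samples N(0, 2^2)."""
    return rng.gauss(0.0, 2.0)


def toy_disc_logit(x):
    """Stand-in for a trained discriminator's logit.

    For an optimal discriminator, logit(x) = log p_data(x) - log p_g(x).
    With p_data = N(0, 1) and p_g = N(0, 4) this is log 2 - 3x^2/8.
    """
    return math.log(2.0) - 3.0 * x * x / 8.0


def rejection_sample(generate, disc_logit, n, rng, burn_in=1000):
    """Keep generated samples with probability exp(logit - max_logit)."""
    # Estimate log M, the max log density ratio, from a burn-in batch.
    max_logit = max(disc_logit(generate(rng)) for _ in range(burn_in))
    accepted = []
    while len(accepted) < n:
        x = generate(rng)
        logit = disc_logit(x)
        max_logit = max(max_logit, logit)  # raise the bound if a sample exceeds it
        # Accept with probability p_data(x) / (M * p_g(x)) = exp(logit - log M).
        if rng.random() < math.exp(logit - max_logit):
            accepted.append(x)
    return accepted


xs = rejection_sample(toy_generator, toy_disc_logit, 5000, random.Random(0))
print(sum(x * x for x in xs) / len(xs))  # sample variance, should be close to 1
```

Raw generator samples here have variance 4; after rejection the kept samples follow N(0, 1), at the cost of discarding roughly half of the draws.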