Danfei Xu

@danfei_xu

Followers

8K

Following

4K

Media

69

Statuses

865

Faculty at Georgia Tech @ICatGT, researcher at @NVIDIAAI | Ph.D. @StanfordAILab | Making robots smarter | all opinions are my own

Atlanta, GA

Joined August 2013

I gave an Early Career Keynote at CoRL 2024 on Robot Learning from Embodied Human Data. Recording: Slides: Extended summary thread 1/N.

2

26

142

RT @holynski_: Something fun we discovered: you can use #Genie3 to step into and explore your favorite paintings. Here's a short visit to…

0

1K

0

RT @GoogleColab: Big news for data science in higher ed! 🚀 Colab now offers 1-year Pro subscriptions free of charge for verified US students…

0

119

0

One more thing: this new system automates the process of creating LIBERO-style tasks and demos. So you can easily create your own benchmarks!

We systematically studied "what matters in constructing large-scale dataset for manipulation". Along the way, we (1) discovered some key principles in data collection and retrieval and (2) built a data synthesizer that can automatically generate large-scale demo dataset (with.

0

0

10

Large robot datasets are crucial for training 🤖 foundation models. Yet, we lack systematic understanding of what data matters. Introducing MimicLabs. ✅ System to generate large synthetic robot 🦾 datasets. ✅ Data-composition study 🗄️ on how to collect and use large datasets. 🧵1/

0

5

43

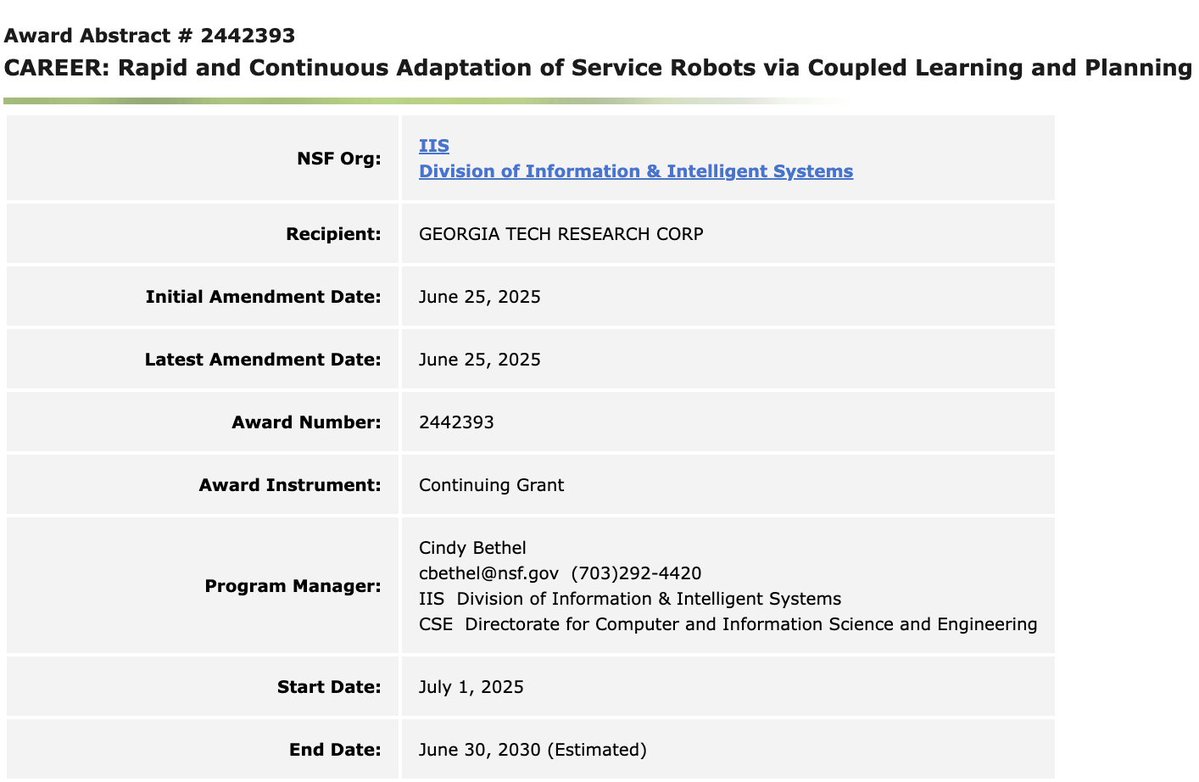

Honored to receive the NSF CAREER Award from the Foundational Research in Robotics (FRR) program! Deep gratitude to my @ICatGT @gtcomputing colleagues and the robotics community for their unwavering support. Grateful to @NSF for continuing to fund the future of robotics.

30

6

210

RealSense is spinning out of Intel.

Stereo depth sensing is set to revolutionize 3D perception. Can't wait to see the new innovations and applications that emerge! #3Dperception #computervision #robotics

0

1

10

RT @RussTedrake: TRI's latest Large Behavior Model (LBM) paper landed on arxiv last night! Check out our project website:

0

107

0

RT @kexinrong: 🎉 Super proud of my student Peng Li (co-advised with Chu Xu) for receiving the 2025 Jim Gray Doctoral Dissertation Award for…

0

5

0

Happening now!

Excited to announce EgoAct 🥽🤖: the 1st Workshop on Egocentric Perception & Action for Robot Learning @ #RSS2025 in LA! We’re bringing together researchers exploring how egocentric perception can drive next-gen robot learning! Full info: @RoboticsSciSys

0

1

8

Despite advances in end-to-end policies, robots powered by these systems operate far below industrial speeds. What will it take to get e2e policies running at speeds that would be productive in a factory? It turns out simply speeding up NN inference isn't enough. This requires.

Tired of slow-moving robots? Want to know how learning-driven robots can move closer to industrial speeds in the real world? Introducing SAIL - a system for speeding up the execution of imitation learning policies up to 3.2x on real robots. A short thread: 1/

0

3

14

RT @NadunRanawakaA: Tired of slow-moving robots? Want to know how learning-driven robots can move closer to industrial speeds in the real w…

0

7

0

Yayyy! Really really really excited to have Sidd join us at @ICatGT!

Thrilled to share that I'll be starting as an Assistant Professor at Georgia Tech (@ICatGT / @GTrobotics / @mlatgt) in Fall 2026. My lab will tackle problems in robot learning, multimodal ML, and interaction. I'm recruiting PhD students this next cycle – please apply/reach out!

1

0

12

Super cool demos. Congrats @andyzeng_ and @peteflorence! Robot learning is a full-stack domain. Even with e2e learning, you need capable robots & good controllers to move fast at high precision.

Today we're excited to share a glimpse of what we're building at Generalist. As a first step towards our mission of making general-purpose robots a reality, we're pushing the frontiers of what end-to-end AI models can achieve in the real world. Here's a preview of our early

1

0

13

Join us at the RSS 2025 EgoAct workshop June 21st morning session, where the @meta_aria team will demonstrate the Aria Gen2 device and talk about its awesome features for robotics and beyond!

Aria Gen 2 glasses mark a significant leap in wearable technology, offering enhanced features and capabilities that cater to a broader range of applications and researcher needs. We believe researchers from industry and academia can accelerate their work in machine perception,

3

11

44