Stanisław Jastrzębski

@kudkudakpl

Followers

1K

Following

2K

Media

39

Statuses

365

AI for autonomous scientific discovery. Foundations of DL (post-doc at NYU, PhD at GMUM/UoE). CTO 👨‍💻 @ https://t.co/qJUKvd31g0. AC @ ICLR25. Same handle on bluesky.

Joined May 2013

I was very happy to receive today (and accept) an invitation to serve as Action Editor for the new Transactions on Machine Learning Research! ( https://t.co/ESxA5nxmws). I hope TMLR will be a positive change for our field, and complement conferences. Looking forward to submissions! :)

1

2

104

🧪Massive milestone: >60k chemical reactions in just 14 days. Shoutout to our incredible Chemistry team led by Paulina Wach! (pictured next to the mountain of reaction plates 📷) Big step toward our mission: automating chemistry through AI.

0

7

18

I am thrilled to introduce OMNI-EPIC: Open-endedness via Models of human Notions of Interestingness with Environments Programmed in Code. Led by @maxencefaldor and @jennyzhangzt, with @CULLYAntoine and myself. 🧵👇

17

50

300

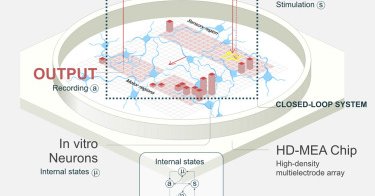

Awesome work by Maksym, Cheng-Hao, and others at LambdaZero, which we have had the privilege to support with synthesis planning at @MoleculeOne: https://t.co/Kf412cocbt, with confirmed inhibitors of sEH enzyme found in a vast synthetically accessible space by an RL agent

0

1

8

Help us solve the unpredictability of chemistry by harnessing the power of LLMs! https://t.co/gDZt2WN3LR is hiring for a Machine Learning Internship. Follow the link for more details: https://t.co/BhIRZr7qx1.

0

3

5

Meaningful progress on understanding break-even point & edge of stability: https://t.co/e4LyWMkpqQ. The result is really cool: the initial increase in sharpness and the resulting chaotic instability are due to an overreliance on simple features.

1

13

67

At https://t.co/OwNK9pincC, we have just opened a unique internship opportunity in building LLMs for chemistry. Please consider applying: https://t.co/ybEeU77wzg and feel free to reach out to me about any questions.

0

0

8

3

44

238

Breaking News! 🚀 🇵🇱 Polish Tech-Bio leader @MoleculeOne Teams Up with @AmerChemSociety's CAS! Exclusive Inside Look at the Game-Changing Partnership That Could Revolutionize Drug Discovery... The future of medicine is here, as tech-bio innovator https://t.co/SP6X9jXSKm from

2

10

48

CAS and @MoleculeOne have established a strategic collaboration combining @MoleculeOne’s proprietary generative deep learning models and CAS' chemical content collection to develop AI-based solutions for efficient chemical synthesis planning. https://t.co/lCEbLsmiQ4

0

6

16

Apply now for Machine Learning Summer School on applications in Science ⏳ Excellent line-up of speakers including @jmhernandez233 @mmbronstein @matejbalog @kudkudakpl and many others 💪Registration closes on 8th April 2023 11:59pm AOE 😱

Check out the next speaker at MLSS^S 2023, a summer school providing a didactic introduction to a range of modern topics in #MachineLearning and their applications: @jmhernandez233, Professor at the University of Cambridge! 👉 Make sure to register for MLSS^S:

0

6

17

Another very interesting paper about instability in the training of Transformers, with an interesting idea for how to address it.

Another great recent publication that went under many radars I think is SigmaReparam (reparam linear layers with spectral normalization, getting rid of LN and training tricks). Tested over many fields, simplifying, feels sensible, impressive results https://t.co/06ghABEnPx

0

0

4

We're looking for a few (paid) interns this summer! Apply here by April 30: https://t.co/xHnW9bICBi

docs.google.com

If you're interested in joining my research group at the University of Cambridge this summer (2023) and potentially collaborating with my PhD students, please complete the form linked below by April...

6

51

228

"The larger the models get, the less Bayesian I become"? Actually, I think it's the opposite - the models are learning to do Bayesian inference. But note that this is inference on top of symbols created by humans, which provides a useful abstraction of raw data "for free".

Now that everyone is fatigued by GPT-4 hot takes and blocked the keyword "LLM", here's the blog post with my current view on the topic, and how my views changed: https://t.co/pggdJsNRmq

2

10

91

Very cool research! Break-even point/Edge of stability ( https://t.co/4aZZpQuKk4, https://t.co/MgytDFWkh4) seem to matter a lot for training stable Transformers. I keep wondering what it would take to completely remove the effect of increasing sharpness in the early phase.

arxiv.org

We empirically demonstrate that full-batch gradient descent on neural network training objectives typically operates in a regime we call the Edge of Stability. In this regime, the maximum...

Stabilizing Training by Understanding Dynamics: reducing the peakiness (entropy) of the attention provides huge stability benefits and less need for LN, warmup, decay https://t.co/Jscfyil9gS @zhaisf @EtaiLittwin @danbusbridge @jramapuram @YizheZhangNLP @thoma_gu @jsusskin #CV #NLProc

1

2

35

Our work on understanding the mechanisms behind implicit regularization in SGD was just accepted to #ICLR2023 ‼️ Huge thanks to my collaborators @kaur_simran25 @__tm__157 @saurabh_garg67 @zacharylipton 🙂 Check out the thread below for more info:

1/n ‼️ Our spotlight (and now BEST POSTER!) work from the Higher Order Optimization workshop at #NeurIPS2022 is now on arxiv! Paper 📖: https://t.co/TTKmW75PIR w/@kaur_simran25 @__tm__157 @saurabh_garg67 @zacharylipton

2

6

44

Couldn't agree more! My hypothesis is that broadly defined exploring is heavily underrated in supervised learning.

With @MinqiJiang, and @_rockt, in General Intelligence Requires Rethinking Exploration, we argue that a generalized notion of exploration applies to supervised and reinforcement alike, and is called for to obtain more general intelligent systems. [11/14] https://t.co/3ya0b3CMzJ

0

1

4

With @MinqiJiang, and @_rockt, in General Intelligence Requires Rethinking Exploration, we argue that a generalized notion of exploration applies to supervised and reinforcement alike, and is called for to obtain more general intelligent systems. [11/14] https://t.co/3ya0b3CMzJ

1

1

15

I have asked ChatGPT-3 to recommend a Christmas movie based on a true story. It suggested "Miracle on 34th Street". It was fun to watch a movie about Santa Claus under the assumption it is based on a true story :D

0

0

5