Zachary Lipton

@zacharylipton

Followers: 62K · Following: 22K · Media: 741 · Statuses: 13K

Cofounder & CTO: @AbridgeHQ, Professor: CMU/@acmi_lab, Creator: @d2l_ai & https://t.co/QQt98VNLUp, Relapsing 🎷

Joined January 2009

Yesterday, I had the honor of speaking in the US Senate AI Insight Forum on privacy & liability, moderated by @SenSchumer @SenatorRounds @SenatorHeinrich & @SenToddYoung. Each panelist submitted a written statement for @SenSchumer's website. Here’s mine:

So much fucking respect to @ylecun for being the one big-tech blue chip name to step up & champion open research, open source & the startup community. Unflinchingly. For remembering what made this moment possible in the first place & putting his name on the line to protect it ❤️

Our ***free deep learning book*** now has a ***modern NLP chapter draft***, complete with the omnipresent BERT & friends. All drafted as interactive Jupyter notebooks.

We have reorganized Chapter: NLP pretraining and Chapter: NLP applications, and added sections on BERT (model, data, pretraining, fine-tuning, application) and natural language inference (data, model).

Tomorrow, we launch "The Art of the Paper", a course on the principles, mechanics, & culture of scientific writing. While core to our work, this material seldom gets a formal treatment. I'm excited, nervous, & grateful to @mldcmu for the creative freedom.

If you follow known fraudster & plagiarist @sirajraval, ***please take a moment to unfollow him***. Especially prominent researchers, journalists, and institutions. Don’t confer credibility on this scoundrel and thereby help to defraud his students.

***In the first thrilling installment, the Superheroes of Deep Learning find themselves up against an ordinary problem. Will their extraordinary abilities carry the day?*** New on Approximately Correct! @AndrewYNg @drfeifei

The double descent phenomenon has been described in ~1000 papers & talks over the past year. It's featured in at least 1 slide per talk @ last summer's Simons workshop on Foundations of DL. Why is this @OpenAI post getting so much attention, as if it's a new discovery? Am I missing something?

A surprising deep learning mystery: Contrary to conventional wisdom, performance of unregularized CNNs, ResNets, and transformers is non-monotonic: it improves, then gets worse, then improves again with increasing model size, data size, or training time.

When I grow up, my dream is to become the i-th (3 < i < N) author of a major @Google paper for an intern-level contribution, then set $1B on fire. Later, I plan to play Call of Duty in a villa bought w/ secondary sales while employees pay the cost as it all implodes.

Here’s a puzzling fact about the mainstream ML/AI community: a bunch of ostensibly creative dreamers were handed job security, massive amounts of cash, and unprecedented creative license—and the only thing most ppl can think to do with this freedom: train bigger networks. 🤣

How does a university-based researcher keep feeling relevant in this fast-changing and compute-driven field? Some good discussion here ➝

Overjoyed to announce that I have N papers accepted to the [name of international conference on X]! [no details about the work or coauthors follow] #conferencenameYYYY.

Awesome ML blog run by @lilianweng has fantastic exposition, clear illustrations and covers a wide spectrum of topics in classic and modern ML:

“If you haven't run a baseline of logistic regression, you are committing algorithmic malpractice.” –@scorbettdavies at @ucsantabarbara Responsible ML panel. YES.

If @overleaf ever truly dies, I will quit research, move to Greece, and spend the rest of my days baking under the sun, reading sci-fi novels, & drinking away the pain.

Dear world (CC @businessinsider, @Hamilbug): stop saying "an AI". AI's an aspirational term, not a thing you build. What Amazon actually built is a "machine learning system", or even more plainly a "predictive model". Using "an AI" grabs clicks but misleads.

Hey everyone. Stoked to report that we've blown away recent NLP benchmarks with a new sentence embedding: "Efficient Recurrent Neural Inverse Embeddings". Idea's simple: iteratively embed & invert sentences, then reap gains thru meta-learning magic! #NLP #deeplearning

Do pretraining’s big wins in NLP really involve “knowledge transfer”? Are upstream corpora even needed? *Not always!!* My students @kundan_official & @saurabh_garg67 show that self-pretraining (from scratch) often rivals “foundation model” performance.

Absolutely delighted to join the Operations Research group (joint w. MLD) at @teppercmu. With ML now applied in the wild, we must address both *predictions* & *decisions*. This nexus is where I see my research headed & I couldn't hope for stronger colleagues on either side.

Dear @overleaf, if you implement a feature that allows us to search for projects by collaborator names, my PhD student @dkaushik96 will tattoo "@overleaf" on his biceps and name his first child @overleaf.

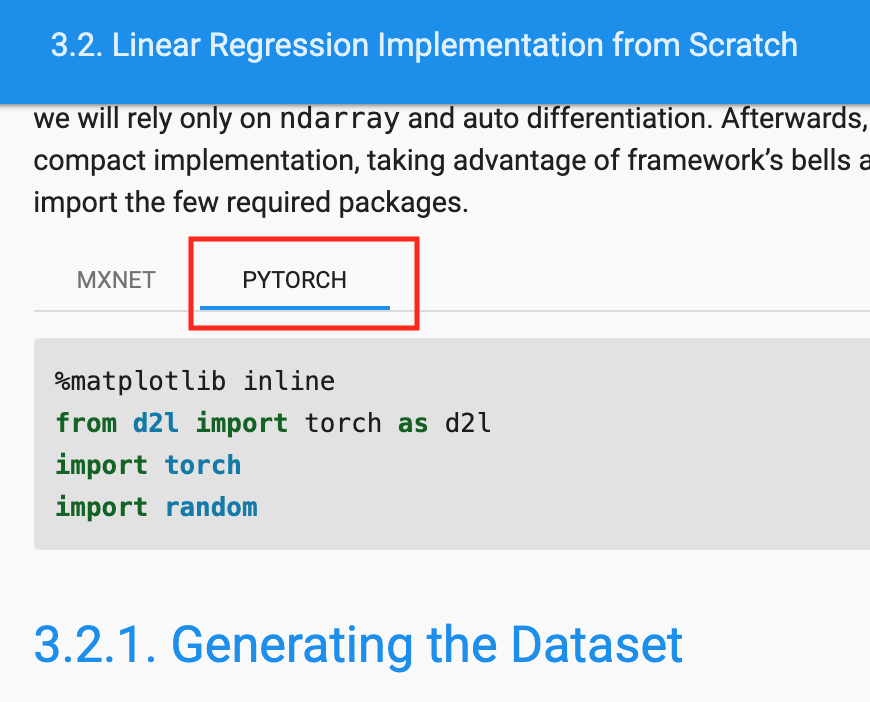

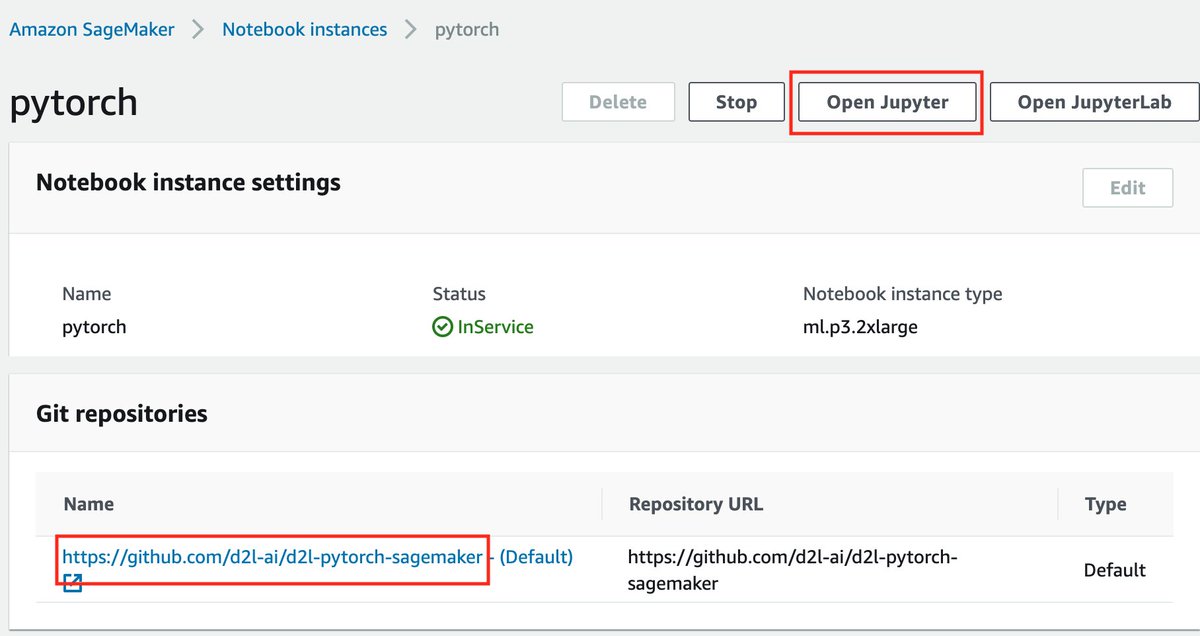

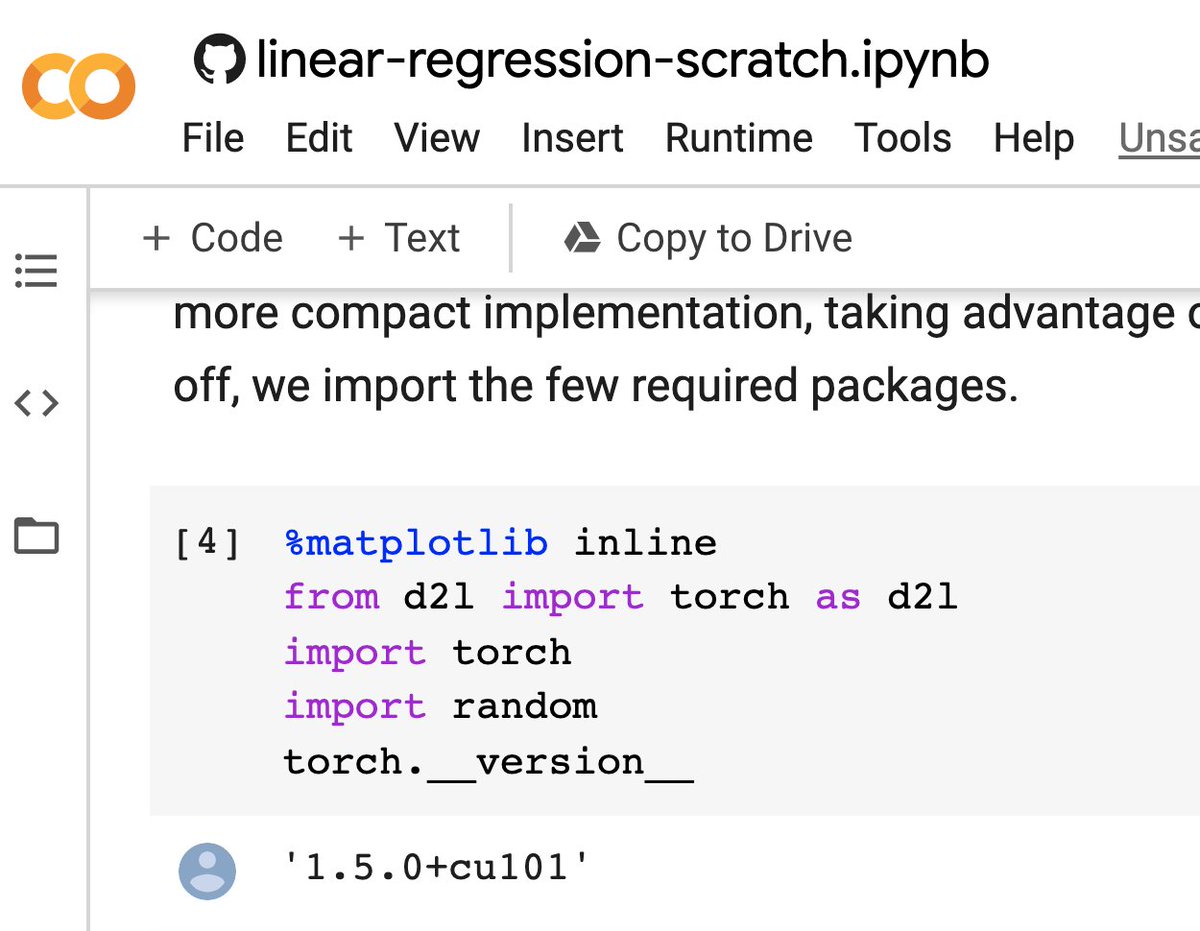

Super excited to announce that “Dive Into Deep Learning” now officially supports PyTorch!

Dive into Deep Learning now supports @PyTorch. The first 8 chapters are ready with more on their way. Thanks to DSG IIT Roorkee @dsg_iitr, particularly @gollum_here who adapted the code into PyTorch. More at @mli65 @smolix @zacharylipton @astonzhangAZ

Ali Rahimi delivered a rare critical talk at #nips2017, likening modern ML to alchemy. Examples: the brittleness of SGD to implementation changes & mystical claims like "batch norm works by reducing internal covariate shift". Full talk now on YouTube:

Me: [rambles while introducing an idea, apologizes]. @dkaushik96: "no, keep going. this is doing wonders for my impostor syndrome". 🤣🥳😅 — The Joys of PhD Advising, 2020.

After a decade of carefully maintained social media silence, our dear visionary @geoffreyhinton breaks his twitter silence… to gripe about retail banking customer service.

Does HSBC UK have any ML people? HSBC will not comply with my written instructions to transfer money within the UK. Fraud detection says it must be authorized by high value transfers. High value transfers say they cannot authorize it. 7 hours on the phone so far. Help!

eight yrs ago i was a jr phd student over the moon to be sharing beers with the inventor of the lstm (@HochreiterSepp) at neurips in montreal w my first deep learning collaborator @davekale. hope you get to share a human moment w someone you admire this week.

Importance weighting is widely used but ***may have no effect on deep nets*** modulo choices regarding early stopping and weight decay. This "science-y" paper with my student Jonathon Byrd identifying this phenomenon was just accepted at #ICML2019 (1/2).
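For context, importance weighting usually means reweighting each training example's loss by an estimated density ratio between the target and source distributions. A sketch of the standard importance-weighted empirical risk under covariate shift, in notation chosen here for illustration ($p_S$, $p_T$, $w_i$, $\ell$, and $f_\theta$ are not from the tweet):

$$
\hat{R}_w(\theta) = \frac{1}{n} \sum_{i=1}^{n} w_i \, \ell\big(f_\theta(x_i), y_i\big),
\qquad
w_i = \frac{p_T(x_i)}{p_S(x_i)}
$$

With all $w_i = 1$ this reduces to ordinary empirical risk minimization; the claim above is that, for deep nets trained long enough, the learned solution can end up largely insensitive to the $w_i$ unless choices like early stopping or weight decay intervene.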

Notable: while LLMs are the singular force shaping the discipline & dominating the discourse in NLP, I saw absolutely nobody from the major LLM shops (@OpenAI, @AnthropicAI, @MosaicML) make an appearance at #acl2023.

Is the best Variational Autoencoder (VAE) not actually a VAE at all?!? This paper is wild. Extremely simple idea: regularized deterministic AE + ex-post density estimation. Results somehow both unsurprising—intuitive!—& shocking—how did people not know this for years?!?

@skoularidou @_sam_sinha_ @zacharylipton but that uses adversarial learning in one variant (and MMD in another). Let me shamelessly plug RAEs. The catch is that ex-post density estimation on the latent space gets you better aggregate posterior estimation and hence sample quality.
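The "ex-post density estimation" step can be sketched as: after training a deterministic autoencoder, fit a density to the latent codes of the training set, then sample new codes from it and decode them. A minimal sketch, assuming a single diagonal Gaussian as the density for brevity (a Gaussian mixture is a common richer choice); the helper names and latent codes are hypothetical, and the encoder/decoder are out of scope.

```python
import random
from typing import List, Tuple

Vector = List[float]


def fit_diagonal_gaussian(codes: List[Vector]) -> Tuple[Vector, Vector]:
    """Ex-post density estimate: per-dimension mean and (population) variance."""
    n = len(codes)
    dim = len(codes[0])
    means = [sum(z[d] for z in codes) / n for d in range(dim)]
    variances = [sum((z[d] - means[d]) ** 2 for z in codes) / n for d in range(dim)]
    return means, variances


def sample_latents(means: Vector, variances: Vector, k: int, seed: int = 0) -> List[Vector]:
    """Draw k new latent codes from the fitted Gaussian (to be fed to a decoder)."""
    rng = random.Random(seed)
    return [
        [rng.gauss(mu, var ** 0.5) for mu, var in zip(means, variances)]
        for _ in range(k)
    ]


# Hypothetical latent codes, standing in for encoder outputs on training data.
codes = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
means, variances = fit_diagonal_gaussian(codes)
new_codes = sample_latents(means, variances, k=5)
# A trained decoder (not shown) would map new_codes to generated samples.
```

The density is fit after training rather than imposed as a prior during training, which is the design difference from a VAE that the tweet highlights.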

In its latest ad campaign, @IBM says the solution to homelessness is [wait for it…] AI. The virtuosos have hit a new peak for shameless cynical bullshit.

Biden is very likely going to win, but going forward, can we stop acting like @NateSilver538 knows more than any other semi-smart blogger with lumpy prose and an undergraduate's knowledge of statistics?.

Excited to announce some personal news: After 3 yrs as science advisor to @AbridgeHQ, I’m jumping in as Chief Scientific Officer. Our team of NLP/ML researchers, designers, & engineers are tackling some of the biggest pain points facing doctors & patients. More to come…

Before the media blitz & retweet party get out of control, this idea exists, has been published, has a name, and a clearer justification. It is called ***Counterfactually-Augmented Data*** and here's the published paper (spotlight at #ICLR2020).

@weatherbryan @jonkeegan Are those airplanes all in the air simultaneously? That's terrifying.

BTW, deep learning libraries are full of this—e.g., undeclared weight decay. All kinds of things lurk that seem like good ideas using the "works better in general" argument but actually create a sloppy environment where scientists don't really know what model they are using.

By default, logistic regression in scikit-learn runs w/ L2 regularization turned on, with the magic number C=1.0. How many millions of ML/stats/data-mining papers have been written by authors who didn't report (& honestly didn't think they were) using regularization?
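To make the point concrete: scikit-learn's documentation writes the L2-penalized logistic regression objective as ½‖w‖² + C·Σᵢ log(1 + exp(−yᵢ(xᵢᵀw + c))), so C is an *inverse* regularization strength. The from-scratch sketch below is not scikit-learn's solver; it assumes a single feature, no intercept, plain gradient descent, and made-up toy data, and shows how much C=1.0 shrinks the weight compared with a much weaker penalty.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_l2_logreg(xs, ys, C, iters=2000):
    """Gradient descent on 0.5*w**2 + C * sum(log(1 + exp(-y*w*x))).

    Single feature, no intercept, labels in {-1, +1}. Smaller C means a
    stronger pull of w toward zero.
    """
    # Step size 1/L, where L bounds the objective's curvature
    # (the logistic loss has second derivative at most x**2 / 4).
    lipschitz = 1.0 + C * 0.25 * sum(x * x for x in xs)
    lr = 1.0 / lipschitz
    w = 0.0
    for _ in range(iters):
        # d/dw log(1 + exp(-y*w*x)) = -y * x * sigmoid(-y*w*x)
        grad_loss = sum(-y * x * sigmoid(-y * w * x) for x, y in zip(xs, ys))
        w -= lr * (w + C * grad_loss)
    return w


# Toy data (hypothetical), not linearly separable, so a finite optimum exists.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [-1, -1, 1, -1, 1, 1]

w_default = fit_l2_logreg(xs, ys, C=1.0)  # the default strength
w_weak = fit_l2_logreg(xs, ys, C=100.0)   # a much weaker penalty
print(w_default, w_weak)  # the C=1.0 weight is pulled noticeably toward zero
```

With scikit-learn itself, the practical takeaway is to set and report the penalty and `C` explicitly rather than rely on defaults; the accepted spelling for "no penalty" has changed across versions, so check the documentation for the installed version.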

I live in Pennsylvania & so can you. PhD & MS applications to @mldcmu & @teppercmu open now! *Move to Pittsburgh, do great research, have a voice.* If you work on fairness, healthcare, distribution shift, feedback loops, or NLP (but more than BERT) consider applying to @acmi_lab.