Tanya Marwah

@__tm__157

Followers

612

Following

3K

Media

4

Statuses

347

Using AI to Accelerate Scientific Discovery @ Polymathic AI and Simons Foundation | PhD from MLD @ CMU

New York City

Joined October 2016

Well, it was only a matter of time before Danish's unique insights and thoughtful takes on what is happening in our field got the recognition they deserve! Amazing! Congratulations @danish037!!!

Still can’t believe it. Our work on uncovering plagiarism in AI-generated research received the outstanding paper award at ACL!! Effort led and envisioned by the amazing @tarungupta360!

0

0

3

RT @_vaishnavh: Today @ChenHenryWu and I will be presenting our #ICML work on creativity in the Oral 3A Reasoning session (West Exhibition…

0

18

0

RT @sukjun_hwang: Tokenization has been the final barrier to truly end-to-end language models. We developed the H-Net: a hierarchical netw…

0

741

0

RT @kundan_official: Introducing Disentangled Safety Adapters (DSAs) for fast and flexible AI Safety. To block harmful responses from an LL…

0

5

0

RT @jerrywliu: @BaigYasa @rajat_vd @HazyResearch 11/10 BWLer was just presented at the Theory of AI for Scientific Computing (TASC) worksho…

0

6

0

When I first read the BWLer paper and saw it claim near machine-level precision with PINNs, I was intrigued. And it works!!! This brilliant paper by @jerrywliu is simple, elegant, and genuinely exciting. Highly recommend giving it a read!

1/10 ML can solve PDEs – but precision 🔬 is still a challenge. Towards high-precision methods for scientific problems, we introduce BWLer 🎳, a new architecture for physics-informed learning achieving (near-)machine-precision (up to 10⁻¹² RMSE) on benchmark PDEs. 🧵 How it works:

1

1

9

RT @nmwsharp: Logarithmic maps are incredibly useful for algorithms on surfaces--they're local 2D coordinates centered at a given source.…

0

66

0

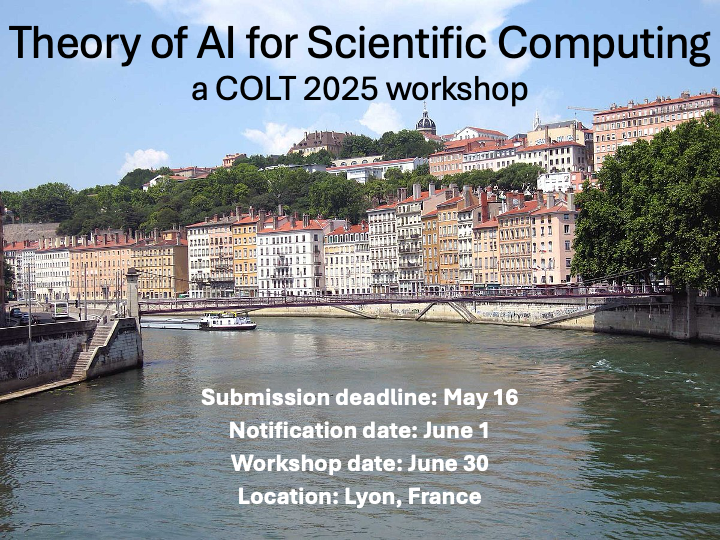

Sad to be missing out on the amazing workshop in Lyon! But if you are at COLT, go check out our workshop on AI for Scientific Computing tomorrow!!

@khodakmoments, @__tm__157, along with myself, @nmboffi and Jianfeng Lu are organizing a COLT 2025 workshop on the Theory of AI for Scientific Computing, to be held on the first day of the conference (June 30).

0

2

14

RT @ask1729: 1/ Generating transition pathways (e.g., folded ↔ unfolded protein) is a huge challenge: we tackle this by combining the scala…

0

33

0

This work will be presented at #ICML2025. While I will not be able to attend, please say hi to my co-authors!

0

0

0

I think this work reflects the approach towards research I’ve learned from @risteski_a. Understand the premise and how something works, ask a simple question and see where it leads. A lot of insights can come from this process!

1

0

0

I've been fascinated with understanding the fundamentals of GNNs lately, for reasons I'm excited to share soon! This led to combining some of my experimental observations with the amazing theoretical skills of my co-authors to show what happens when we maintain memory state on…

In recent work with D. Rohatgi, @__tm__157, @zacharylipton, J. Lu and A. Moitra, we revisit fine-grained expressiveness in GNNs---beyond the usual symmetry (Weisfeiler-Lehman) lens. Paper will appear in ICML '25, thread below.

1

0

4

RT @jla_gardner: Extremely excited to be sharing the output of my internship in @MSFTResearch's #AIForScience team: "Understanding multi-fi…

0

18

0

Yep, what @JunhongShen1 said. I started working on ML for PDEs during my PhD. And the first three years were just reading books and appreciating the beauty and the difficulty of the subject!

PDE is definitely one of the hardest 🤯 application domains I’ve worked on. Yet LLMs show once again they surpass humans in solving PDEs, leveraging code as an intermediary. LLM4Science is def an important and interesting area, and we hope our work attracts more researchers to…

1

1

18

RT @JunhongShen1: PDE is definitely one of the hardest 🤯 application domains I’ve worked on. Yet LLMs show once again they surpass humans in…

0

6

0

This is the first step in a direction that I am very excited about! Using LLMs to solve scientific computing problems and potentially discover faster (or new) algorithms. #AI4Science #ML4PDEs. We show that LLMs can write PDE solver code, choose appropriate algorithms, and produce…

Can LLMs solve PDEs? 🤯 We present CodePDE, a framework that uses LLMs to automatically generate solvers for PDEs and outperforms human implementations! 🚀 CodePDE demonstrates the power of inference-time algorithms and scaling for PDE solving. More in 🧵: #ML4PDE #AI4Science

0

11

36

Congratulations @ZongyiLiCaltech. So well deserved!! Can’t wait to catch up when you are in NYC!

The best undergraduate and graduate research awards at @Caltech commencement this year were presented to research on Neural Operators by my students @ZongyiLiCaltech and Miguel Liu-Schiaffini. This recognition is a testament to their passion and dedication, and the impact

1

0

6

Very interesting! Reading @_vaishnavh’s work is always a treat!!

📢 New paper on creativity & multi-token prediction! We design minimal open-ended tasks to argue: → LLMs are limited in creativity since they learn to predict the next token → creativity can be improved via multi-token learning & injecting noise ("seed-conditioning" 🌱) 1/ 🧵

0

1

5

RT @thuereyGroup: I'd like to highlight PICT, our new differentiable Fluid Solver built for AI & learning: Simulat…

0

5

0