Zachary Novack

@zacknovack

Followers: 716 | Following: 3K | Media: 48 | Statuses: 347

on the job market! efficient/controllable audio gen | phd @ucsd_cse | prev @sonyai_global @adoberesearch @stabilityai @mldcmu | teaching drums @pulsepercussion

Joined June 2022

RUListening won best paper!! 🎉🎉 HUGE thanks to @yongyi_zang for leading the epic project (started from last year's ismir!), @seano_research @BergKirkpatrick @McAuleyLabUCSD for the immense support throughout the project, and the entire @ISMIRConf for a fantastic conference!

Are AI models for music truly listening, or just good at guessing? This critical question is at the heart of the latest Best Paper Award winner at #ISMIR2025! Huge congratulations to Yongyi Zang, Sean O'Brien, Taylor Berg-Kirkpatrick, Julian McAuley, and Zachary Novack for their

4

4

47

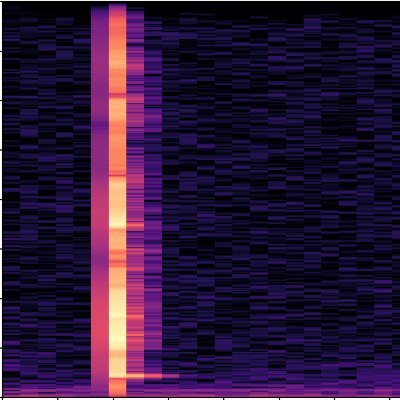

🎤 StylePitcher is out! A pitch foundation model for expressive, style-following singing — ready to plug into any vocal generative model! My work this summer at @Smule @SmuleLabs 🤘 🎧 https://t.co/SAL48PecZp 📖 https://t.co/L0srpF5j1X 💻 Open-sourcing coming soon 🚀 🧵1/n

arxiv.org

Existing pitch curve generators face two main challenges: they often neglect singer-specific expressiveness, reducing their ability to capture individual singing styles. And they are typically...

It's time to renew the Democratic Party. Introducing DECIDING TO WIN: the most comprehensive account yet of where Democrats went wrong, and what we need to do to win again. With a year of research and tons of new data, by @laurenhpope, @liamkerr, and me. Link and 🧵 below.

Just pushed a notebook with an easy model training / generation example for MusicRFM!

github.com

Open Source code for our paper, Steering Autoregressive Music Generation with Recursive Feature Machines (Zhao et al., 2025). aka MusicRFM - astradzhao/music-rfm

@astradzhao This seems awesome, Daniel! Super practical for real-world use cases and experimentation. Maybe worth creating a Colab demo? Would also love to see this applied to other models, as you mentioned.

A huge congrats to @UCSanDiego CSE's Prof. Julian McAuley, PhD student Zachary Novack, & team for their Best Paper Award at #ISMIR2025! 🎉 They tackled a key question (are AI music models really listening?) and built a new framework, RUListening, to properly test it 🎶 #AI #MusicTech

🔥I'm sharing all the materials and recordings for my course on *Music & AI* at University of Michigan! The course introduces AI’s applications in music from analysis, creation, retrieval to processing. Course website: https://t.co/xlXxtipG7m Recordings:

youtube.com

Music and AI (PAT 463/563), University of Michigan, Fall 2025 Course website: https://hermandong.com/teaching/pat463_563_fall2025/

MusicRFM was born out of @BergKirkpatrick and me seeing @dbeagleholeCS's wild RFM work at the UCSD GenAI Summit, and we knew we had to try it for music! Huge thanks to them for their insight and help with the paper 🤘

Our experiments on time-varying control are also quite promising, as they open the door to potentially lightweight yet *real-time* interactive control over generative streaming models (looking at you, magenta-rt). Excited to see where this goes!

This means MusicRFM is perfect both for rapid prototyping (try out controls before training for them) and for AI pedagogy (your students don't need GPUs to train them!)

I've worked on training-free control for a long time, and I see MusicRFM as a spiritual successor to DITTO. Will training-free ever beat training-based? Probably not! But RFMs are comically lightweight (they can be trained in *Colab*), and steering adds no inference latency!

MusicRFM is out! Led by @astradzhao (he's looking for PhDs now, hire him!), we introduce time-varying control over music-theoretic features in pretrained AR music models (MusicGen), all w/o finetuning the model! I've been really excited about this project, some more thoughts 🧵

We found a way to steer AI music gen toward specific notes, chords, and tempos, without retraining the model or significantly sacrificing audio quality! Introducing MusicRFM 🎵 Paper: https://t.co/oZciYbgB9P Audio: https://t.co/FQ1W8k1LZh Code: https://t.co/drnE1XGcFC (1/5)

Daniel Zhao, Daniel Beaglehole, Taylor Berg-Kirkpatrick, Julian McAuley, Zachary Novack, "Steering Autoregressive Music Generation with Recursive Feature Machines,"

arxiv.org

Controllable music generation remains a significant challenge, with existing methods often requiring model retraining or introducing audible artifacts. We introduce MusicRFM, a framework that...

Though I don’t spend too much time in symbolic land, it still feels like in 2025 we haven’t “cracked” symbolic music and can’t produce long-form coherent content (especially across diverse genres). With better semantic representations, we can get closer to this! Use MuseTok!!

Symbolic music representations (like REMI) are interesting in that they're dynamically sampled like text (more content -> longer sequences) but also carry fixed timing info like audio (every meta-token encodes timing). Compressing a modality like this is still very much an open problem!

MuseTok by the titan @Yuer867 is out!! If you’re interested in representation learning, especially for discrete modalities like symbolic music, this is for you! Some extra thoughts here 🧵

🎶 Symbolic music deserves better representations 🎶 Excited to share MuseTok, a tokenization method enabling symbolic music generation and semantic understanding across diverse tasks. 🎵 🎧 https://t.co/JALFxC9oZr 📖 https://t.co/ohTb9VNSsD 💻 https://t.co/WBSLs1pW9E 🧵1/5

1/2 We’re releasing an in-depth tutorial on neural audio codecs, the secret sauce that makes it possible for audio LLMs to not sound like a horror movie:

Call for Papers: NLP4MusA 2026 We welcome work at the intersection of language, music, and audio — from music understanding and recommendation to creative generation. Submission deadline: Dec 19, 2025 Details & submission:

sites.google.com

🎵 First Call for Papers: 4th Workshop on NLP for Music and Audio (NLP4MusA 2026) Co-located with EACL 2026, Rabat, Morocco & Online | March 24–29, 2026 Shared Task: Conversational Music Recommenda...

Voting is LIVE!🚨 Top 10 AI Song Contest 2025 finalists are ready — now it’s your turn to vote at https://t.co/bUxctSbHGt

@dadabots, @DJSwami, @HEL9000ismetal, marts, @gantasmo, Auditory Nerve, Nikki, GENEALOGY, BRNRT Collective, KicKRaTT & KaOzBrD #AISongContest2025 #AIMusic

Really happy that our paper "Cobblestone: A Divide-and-Conquer Approach for Automating Formal Verification" will appear in ICSE 2026! https://t.co/3KGSzB9Jqi

arxiv.org

Formal verification using proof assistants, such as Coq, is an effective way of improving software quality, but requires significant effort and expertise. Machine learning can automatically...

Excited to be going to my first #WASPAA2025 this year to present Stable Audio Open Small! Will be around all week for those who want to chat about all things fast and interactive audio gen 🤘

Stability AI just dropped Stable Audio Open Small on Hugging Face: Fast Text-to-Audio Generation with Adversarial Post-Training

MAJOR NEWS: @GavinNewsom signed SB 79, my bill allowing more housing near public transit — rail, subway, rapid bus. It’s a huge step for housing in California. It’ll create more homes, strengthen our transit systems & reduce traffic & carbon emissions. Thank you, Governor!
