ARC Prize

@arcprize

Followers

20K

Following

4K

Media

141

Statuses

436

A North Star for AGI. Co-founders: @fchollet @mikeknoop. President: @gregkamradt. Help support the mission - make a donation today.

Joined March 2024

AGI is reached when the capability gap between humans and computers is zero. ARC Prize Foundation measures this to inspire progress. Today we preview the unbeaten ARC-AGI-2 + open public donations to fund ARC-AGI-3. TY Schmidt Sciences (@ericschmidt) for $50k to kick us off!

24

69

686

R1-Zero matches performance of R1 on ARC-AGI. We’ve verified that R1-Zero scored 14% on ARC-AGI-1 (vs 15% on R1). @mikeknoop explains why R1-Zero is more important than R1, why scaling inference isn’t going away, and what happens when “inference becomes training”. 1/4.

10

69

575

Wow! One of our donors has anonymously decided to materially increase their support to $1M! This fully funds our 2025 goal in just 1 day. With this support, we’ll launch v2, build v3, and continue driving progress in measuring AGI.

We're not done - @bryanhelmig just pledged $15K to ARC Prize.

11

22

376

Announcing ARC Prize. A $1M+ competition to beat the ARC-AGI benchmark and open source the solution. Hosted by @mikeknoop & @fchollet.

24

110

374

Novel test-time-training method to solve ARC-AGI without pretraining. "CompressARC achieves 34.75% on the training set and 20% on the evaluation set".

Introducing *ARC‑AGI Without Pretraining* – ❌ No pretraining. ❌ No datasets. Just pure inference-time gradient descent on the target ARC-AGI puzzle itself, solving 20% of the evaluation set. 🧵 1/4

4

22

253

Deep learning is not enough to beat ARC Prize. We need something more. @mikeknoop & @fchollet share a path to defeat ARC-AGI via Program Synthesis.

6

33

192

ARC-AGI-1 (2019) pinpointed the moment AI moved beyond pure memorization in late 2024, as demonstrated by OpenAI's o3 system. Now, ARC-AGI-2 raises the bar significantly, challenging known test-time adaptation methods. @MLStreetTalk is helping us launch ARC-AGI-2 with an interview.

1

4

183

Inspired by @karpathy's recent tweet - games are a great test environment for AI. They require:
• Real-time decisions
• Multiple objectives
• Spatial reasoning
• Dynamic environments

So we built SnakeBench to explore how LLMs would do.

I quite like the idea of using games to evaluate LLMs against each other, instead of fixed evals. Playing against another intelligent entity self-balances and adapts difficulty, so each eval (/environment) is leveraged a lot more. There are some early attempts around. Exciting area.

5

4

178

[Paper] Current high-scoring team member @bayesilicon shares an ARC-AGI training task generator. More examples "... should enable a wide range of experiments that may be important stepping stones towards making leaps on the benchmark ...".

3

14

143

ARC-AGI-2 has been added to @huggingface's Lighteval. As you evaluate your models with Lighteval, ARC-AGI-2 will now be featured as an output.

🔥 Evaluating LLMs? You need Lighteval - the fastest, most flexible toolkit for benchmarking models, built by @huggingface. Now with:
✅ Plug & play custom model inference (evaluate any backend)
📈 Tasks like AIME, GPQA:diamond, SimpleQA, and hundreds more

Details below 🧵👇

8

18

141

.@LiaoIsaac91893 has open sourced his "ARC-AGI Without Pretraining" notebook on Kaggle. You can use it today and enter ARC Prize 2025. It currently scores 4.17% on ARC-AGI-2 (5th place). Amazing mid-year sharing and contribution. Thank you Isaac.

2

8

130

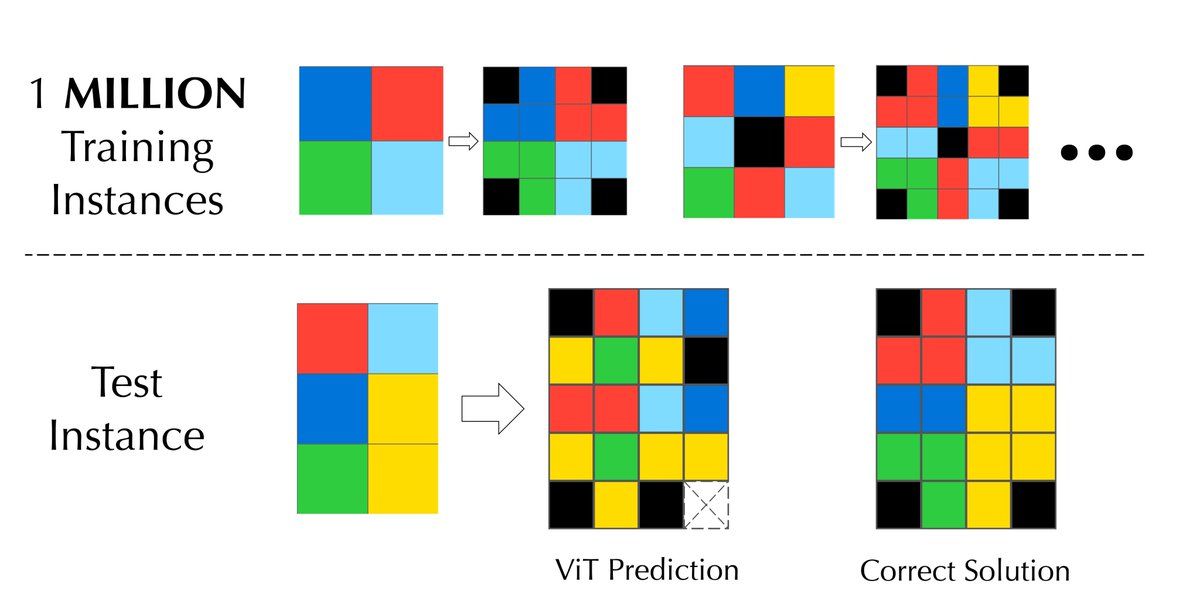

"Spatially-aware tokenization scheme" is a concept @fchollet has been speaking about on our university tour. Transformers aren't just for sequences - they can be made to work with any kind of data structure, including grids.

We trained a Vision Transformer to solve ONE single task from @fchollet and @mikeknoop’s @arcprize. Unexpectedly, it failed to produce the test output, even when using 1 MILLION examples! Why is this the case? 🤔

2

10

124

Key finding #1: Early responses showed higher accuracy. We noticed that tasks which the model returned sooner had higher accuracy. Those that took longer, either in duration or token usage, were more likely to fail. This signals that the model comes to conclusion or has higher

2

3

118

The Rise of Fluid Intelligence. "@fchollet is on a quest to make AI a bit more human". A thorough article by @matteo_wong explaining ARC-AGI and @arcprize.

7

18

111

Thank you to @rishab_partha for helping with this analysis. The purpose of the 100 Semi-Private Tasks is to provide a secondary held-out test set score. The 400 Public Eval tasks were published in 2019. They have been widely studied and included in the training data of other models.

1

2

100

Key finding #2: Higher reasoning can be inefficient. When comparing o3-medium and o3-high on the same tasks, we found that o3-high consistently used more tokens to arrive at the same answers. While this isn’t surprising, it highlights a key tradeoff: o3-high can offer no accuracy

1

3

97